Web scraping helps online learning platforms in the U.S. gather and organize data from websites to improve how they deliver courses and resources. It saves time, reduces errors, and ensures platforms stay updated with trends, reviews, and curriculum changes. Here's why it matters and how it works:


Key Benefits:

- Collects course details, reviews, and trends to improve content.

- Keeps materials current with automated updates.

- Enhances accessibility for diverse learners.

- Scales with growing data needs efficiently.


Common Challenges:

- Dynamic content (e.g., JavaScript-loaded pages).

- Anti-scraping measures like CAPTCHAs.

- Website changes causing scraper failures.


Solutions:

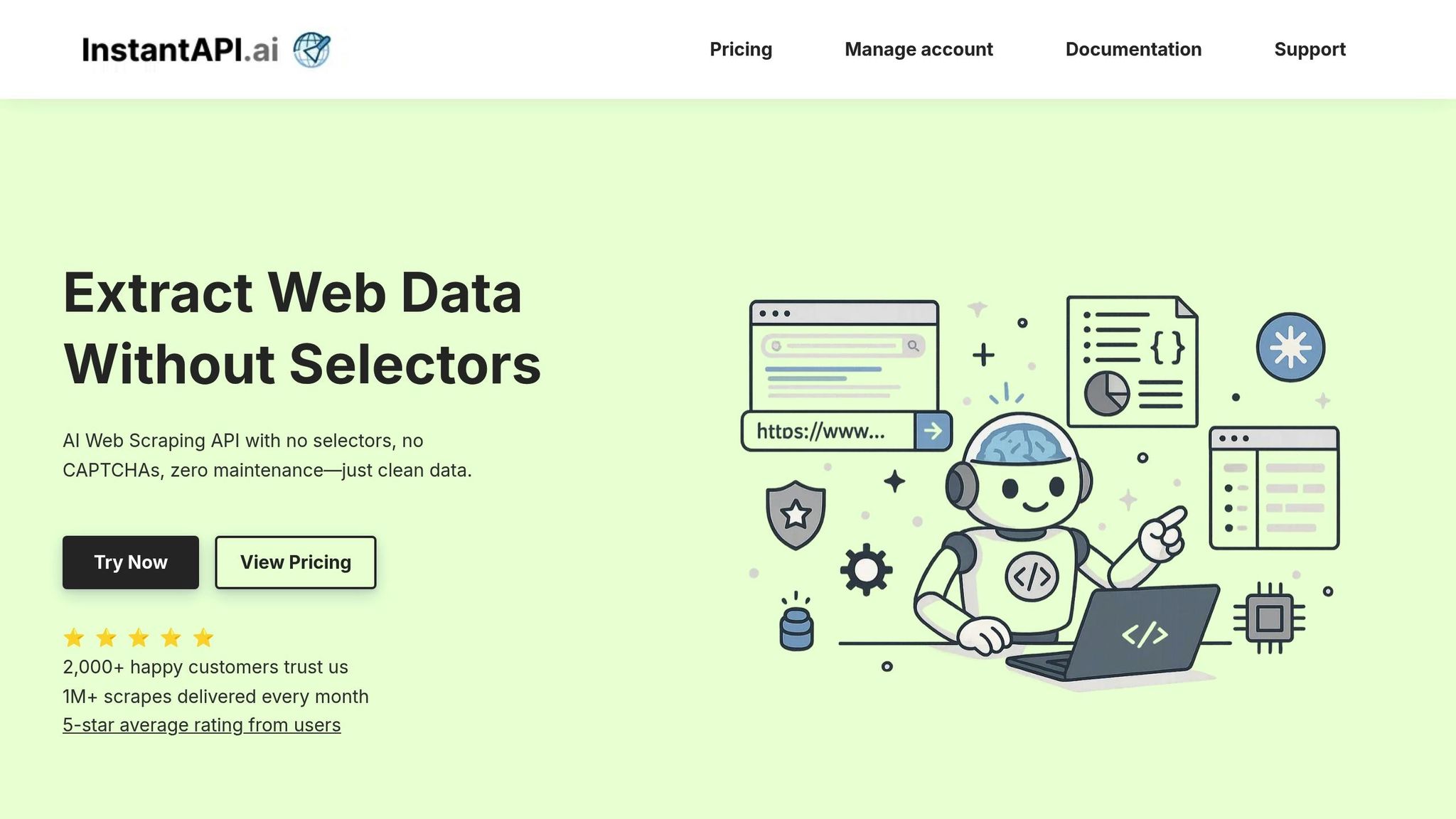

- Tools like InstantAPI.ai simplify scraping with features like proxy rotation, CAPTCHA solving, and automatic updates.

- Pay-as-you-go pricing ($2 per 1,000 pages) makes it cost-effective for fluctuating needs.

Web scraping is transforming education by making learning more personalized, accessible, and aligned with industry demands. Platforms can now focus on improving user experiences while staying compliant with U.S. regulations.


Common Web Scraping Challenges for Online Learning Platforms

Understanding the hurdles involved in web scraping is key to finding smarter, more reliable ways to extract data from online learning platforms.

These platforms often face significant challenges when trying to extract data from interactive and well-protected websites. Issues like complex site structures, dynamic content, and strict anti-scraping measures frequently cause traditional scraping methods to fail.

The situation becomes even trickier when dealing with U.S.-based educational content, where compliance requirements add another layer of complexity. Many institutions have wasted time and resources on scraping solutions that ultimately couldn't deliver.

Technical Problems in Educational Web Scraping

One of the biggest obstacles is dynamic content loading. Many online learning platforms use JavaScript and AJAX to load key information - like course materials, student reviews, and curriculum details - after the initial page load. This means tools that rely on basic HTML scraping often miss critical data, leaving gaps.
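To make the gap concrete, here is a minimal sketch using only Python's standard library. The HTML snippet, the `reviews` element ID, and the `loadReviews` script call are all hypothetical; the point is only that a parser working on the initial response sees an empty container, because the reviews arrive later via JavaScript.

```python
from html.parser import HTMLParser

# Hypothetical page as delivered in the initial HTTP response: the review
# list is empty because a script fills it in after the page loads.
INITIAL_HTML = """
<html><body>
  <h1 class="course-title">Intro to Statistics</h1>
  <ul id="reviews"></ul>
  <script>loadReviews("/api/courses/101/reviews");</script>
</body></html>
"""


class ReviewCounter(HTMLParser):
    """Counts <li> elements that appear inside <ul id="reviews">."""

    def __init__(self):
        super().__init__()
        self.in_reviews = False
        self.review_count = 0

    def handle_starttag(self, tag, attrs):
        if tag == "ul" and dict(attrs).get("id") == "reviews":
            self.in_reviews = True
        elif tag == "li" and self.in_reviews:
            self.review_count += 1

    def handle_endtag(self, tag):
        if tag == "ul":
            self.in_reviews = False


def count_static_reviews(html: str) -> int:
    """Parse raw HTML (no JavaScript execution) and count review items."""
    parser = ReviewCounter()
    parser.feed(html)
    return parser.review_count


print(count_static_reviews(INITIAL_HTML))  # 0: the reviews were never in the HTML
```

A headless browser or a rendering service would see the populated list, which is why static parsers alone tend to leave gaps on pages like this.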

Akamai's research highlights the issue further: 70% of website traffic on average comes from bots, and some educational platforms see this rise to over 82.3% on their most popular course pages. To combat this, many sites have implemented advanced anti-scraping measures.

CAPTCHAs and bot detection systems are another major roadblock. These tools are designed to distinguish between real users and automated scrapers. Once triggered, they can bring data extraction to a complete halt, often requiring manual intervention to resolve.

Infinite scroll and pagination also present challenges. Features like endless scrolling on course catalogs or student forums require scrapers to mimic human behavior, but many tools fail to do this effectively, resulting in incomplete datasets.

Then there’s selector drift, an ever-present maintenance headache. As Disney's Software Engineering Manager Justin Kennedy puts it:

"Websites are changing all the time, both when websites change 'naturally' during the development process and when they change specifically to try to prevent scraping. This means constant refinement of scraping strategy is needed to ensure you can continually gather updated data sources."

Other technical hurdles include IP blocking and rate limiting, which occur when scrapers send too many requests too quickly, and browser fingerprinting, where sites detect and block scrapers based on browser settings, user agents, or unusual patterns of behavior.
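Rate limiting in particular is often triggered by bursts of requests. As a rough sketch (not a guarantee against blocking), a scraper can enforce a minimum gap between requests. The `Throttle` class and its parameters below are illustrative names, not part of any library; the clock and sleep functions are injectable so the behavior can be checked without real waiting.

```python
import time


class Throttle:
    """Enforces a minimum delay between consecutive requests."""

    def __init__(self, min_interval_s, clock=time.monotonic, sleep=time.sleep):
        self.min_interval_s = min_interval_s
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        """Block until at least `min_interval_s` has passed since the last call."""
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval_s - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Calling `throttle.wait()` before each request keeps the request rate at or below one per `min_interval_s` seconds. The right interval depends on the target site and its terms of use.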

These challenges expose the limitations of traditional scraping methods.

Why Traditional Approaches Fall Short

Despite their initial promise, conventional scraping techniques often fail to meet the demands of modern educational platforms. The landscape is littered with examples of scraping solutions that worked well in theory but fell apart in real-world conditions.

Take home-grown Python and Scrapy stacks, for example. While libraries like BeautifulSoup or Scrapy can handle small-scale projects during proof-of-concept phases, they often break down in production. Proxy bans, for instance, can bring operations to a standstill, and maintaining headless browsers like Chrome eats up valuable developer resources.

No-code scraping tools offer simplicity but struggle with the dynamic nature of modern websites. Features like infinite scroll or CAPTCHA protections often render these tools ineffective, requiring technical fixes that defeat their purpose.

Standalone proxy and CAPTCHA services can bypass certain blocks, but they only solve part of the problem. Engineers still need to build and maintain the extraction logic, which fragments the overall process.

Even traditional scraping SaaS platforms fall short. Their fixed pricing models often don’t align with the fluctuating needs of educational institutions. For example, data scraping might spike during enrollment periods but drop off during breaks, making fixed monthly fees or seat-based licensing impractical.

| Challenge | Real-World Impact |
|---|---|
| Dynamic content (JavaScript-rendered) | Missing key information like course details and reviews |
| Anti-bot protections (Cloudflare, CAPTCHA) | Blocked access, silent failures during critical moments |
| Infinite scroll pagination | Incomplete data capture, leaving out course listings and feedback |
| Website structure changes | Scraper breakdowns that go unnoticed until data quality suffers |
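Breakdowns caused by structure changes usually show up as fields that quietly come back empty. One way to catch this early, sketched below under the assumption that scraped records are plain dictionaries, is to track the fill rate of each required field per batch. The field names and the 0.9 threshold are hypothetical.

```python
REQUIRED_FIELDS = ("course_title", "instructor", "price_usd")  # hypothetical schema


def fill_rate(records, field):
    """Share of records where `field` is present and non-empty."""
    if not records:
        return 0.0
    filled = sum(1 for r in records if r.get(field) not in (None, "", []))
    return filled / len(records)


def drifted_fields(records, required=REQUIRED_FIELDS, threshold=0.9):
    """Return the required fields whose fill rate fell below `threshold`."""
    return [f for f in required if fill_rate(records, f) < threshold]


batch = [
    {"course_title": "Algebra I", "instructor": "R. Diaz", "price_usd": 49},
    {"course_title": "Biology", "instructor": "", "price_usd": 59},
]
print(drifted_fields(batch))  # ['instructor']
```

A non-empty result is a signal to inspect the source page before the bad data reaches downstream systems.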

The core problem with traditional methods is that they treat scraping as a straightforward task, ignoring the complexity involved. As David Sénécal, Principal Product Architect at Akamai, explains:

"Web scraping is a complex problem. While some scraping activity is essential to a website's success, it can be challenging to determine the value of other activities - and the intention of the entity initiating the scraping."

To keep up with modern demands, online learning platforms need scraping solutions that can adapt to changing site structures, handle advanced anti-scraping defenses, and scale efficiently with their needs. Traditional methods just aren't built to handle this level of complexity.

Web Scraping Tools and Technologies

Having the right tools is crucial when it comes to scraping dynamic educational websites, especially while adhering to US educational data standards.

Knowing the available options can help you pick tools that align with your technical needs and budget. These range from open-source libraries that demand significant development effort to full-service platforms that manage the entire scraping process. Let’s explore both traditional tools and the ways InstantAPI.ai simplifies this process.

Standard Web Scraping Tools

BeautifulSoup is a reliable option for parsing static HTML and XML. However, it struggles with JavaScript-heavy pages, which are common in modern learning platforms for things like course details and interactive student features.

Scrapy is a Python-based framework designed for larger-scale data extraction. It supports forms, cookies, and HTTP authentication, making it suitable for scraping student portals and course systems. That said, it has a steep learning curve and requires ongoing maintenance as websites evolve.

Selenium is ideal for sites that rely heavily on JavaScript. It controls real web browsers, allowing it to interact with dynamic elements like enrollment buttons, expandable syllabi, and infinite-scroll course catalogs. The downside? It’s resource-intensive due to its reliance on browser automation.

While these tools are powerful, they come with shared challenges in real-world use. Educational websites frequently change their layouts, requiring constant updates to scraping scripts. Developers also spend significant time handling errors and ensuring compliance with US educational standards. These issues make traditional tools less practical for complex, large-scale projects.

How InstantAPI.ai Simplifies Web Scraping

InstantAPI.ai offers a streamlined alternative to traditional scraping tools, tackling many of the challenges they face while improving efficiency and reducing maintenance.

One standout feature is its no-selector approach. Instead of manually managing selectors, you simply describe your data needs in JSON, and InstantAPI.ai handles the rest. This is particularly useful for educational platforms where course layouts change frequently. Traditional scrapers often break when a learning management system updates its interface, but InstantAPI.ai adapts automatically, ensuring uninterrupted data flow.

Another advantage is its built-in handling of anti-bot measures and dynamic content. Features like proxy rotation, CAPTCHA solving, and JavaScript rendering are integrated, so you don’t have to juggle separate tools to bypass site protections. This automation ensures your scraping operations run smoothly, even when websites implement new defenses.

The pricing model is also straightforward. At $2 per 1,000 pages, you only pay for what you process. This is ideal for educational projects with fluctuating data needs, such as spikes during enrollment periods and slower activity during breaks. It eliminates the rigid monthly fees or licensing tiers that are common with other services.
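The arithmetic is simple enough to sketch. The rate comes from this article; the monthly page volumes are made-up figures chosen to illustrate a seasonal swing.

```python
PRICE_PER_1000_PAGES_USD = 2.00  # rate quoted in this article


def scraping_cost_usd(pages: int) -> float:
    """Pay-as-you-go cost for `pages` pages at the quoted rate."""
    return pages / 1000 * PRICE_PER_1000_PAGES_USD


# Hypothetical monthly volumes for a platform with seasonal demand.
monthly_pages = {"August (enrollment)": 250_000, "December (break)": 20_000}
for month, pages in monthly_pages.items():
    print(f"{month}: ${scraping_cost_usd(pages):,.2f}")
# August (enrollment): $500.00
# December (break): $40.00
```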

| Feature | Traditional Tools | InstantAPI.ai |
|---|---|---|
| Setup Time | Days to weeks of development | Minutes with API calls |
| Maintenance | Frequent selector updates | Automatic site adaptation |
| Anti-Bot Handling | Requires separate tools | Built-in protections |
| JavaScript Support | Needs headless browser setup | Native JavaScript rendering |
| Pricing Model | Fixed fees or complex tiers | $2 per 1,000 pages |
| Error Handling | Manual fixes needed | Automatic retries and fallbacks |

InstantAPI.ai also includes specialized endpoints tailored for educational scraping. The /links endpoint identifies course catalog pages using plain-English descriptions, while /next automatically finds pagination URLs in course listings. For search result data, the /search endpoint provides location-aware Google SERP extraction with anti-bot measures baked in.
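A pagination endpoint like /next is typically used in a loop. The sketch below shows only that loop. The request and response format of /next is not documented in this article, so `get_next_url` is a hypothetical callable you would implement around the real API call; it is assumed to return the next page URL, or `None` when there is none.

```python
def collect_pages(start_url, get_next_url, max_pages=50):
    """Follow next-page links from `start_url`.

    Stops when there is no next page, when a URL repeats (loop guard),
    or after `max_pages` pages.
    """
    seen = []
    url = start_url
    while url and url not in seen and len(seen) < max_pages:
        seen.append(url)
        url = get_next_url(url)  # hypothetical wrapper; returns None at the end
    return seen


# Stand-in for the real lookup so the loop can be tried offline.
fake_site = {"/courses?page=1": "/courses?page=2", "/courses?page=2": None}
print(collect_pages("/courses?page=1", fake_site.get))
# ['/courses?page=1', '/courses?page=2']
```

The `max_pages` cap and the repeat check are there because each discovered page is billed and a misbehaving "next" link could otherwise loop indefinitely.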

US-based developers can shift their focus from maintaining scraping logic to analyzing data. With simple HTTPS calls, InstantAPI.ai integrates seamlessly into existing workflows, supporting tools like Airflow, Spark, or custom Python scripts - all without locking you into a specific vendor.

For educational platforms facing the complexities of modern web scraping, InstantAPI.ai offers a solution that reduces operational headaches, keeps costs manageable, and scales effortlessly with your needs.


Step-by-Step Guide to Data Extraction and Integration

Following the challenges and tools outlined earlier, here's a practical guide to extracting and integrating educational data effectively.

Extracting Course Data and Reviews

Start by defining your data schema in JSON format to specify the fields you need, such as course title, instructor, duration, price (in USD), enrollment numbers, ratings, and more. Submit this schema through the API, and InstantAPI.ai will adapt automatically to changes in HTML structure, saving you from manual updates.

    import requests

    api_key = "your_api_key_here"
    url = "https://api.instantapi.ai/scrape"

    # Describe the fields you want; each value is the expected type.
    payload = {
        "url": "https://example-learning-platform.com/courses",
        "data": {
            "course_title": "string",
            "instructor": "string",
            "duration_hours": "number",
            "price_usd": "number",
            "enrollment_count": "number",
            "average_rating": "number",
            "review_count": "number",
            "course_description": "string",
            "prerequisites": "array",
            "learning_outcomes": "array",
        },
    }

    response = requests.post(
        url,
        json=payload,
        headers={"Authorization": f"Bearer {api_key}"},
        timeout=60,  # avoid hanging forever on a stalled connection
    )
    response.raise_for_status()  # surface HTTP errors instead of parsing an error body
    course_data = response.json()

To locate individual course pages, use the /links endpoint, which can automatically discover relevant URLs. Instead of manually navigating site structures, you can simply describe what you're searching for in plain language:

    links_payload = {
        "url": "https://example-platform.com/catalog",
        "description": "individual course detail pages with enrollment information",
    }

    links_response = requests.post(
        "https://api.instantapi.ai/links",
        json=links_payload,
        headers={"Authorization": f"Bearer {api_key}"},
        timeout=60,
    )
    links_response.raise_for_status()
    course_urls = links_response.json()["links"]

For reviews, define the structure you need - such as reviewer name, rating, date, helpful votes, and comments - and InstantAPI.ai will handle the complexities of extracting this data, regardless of the platform's formatting.
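As an illustration, a review schema could mirror the course example shown earlier. The field names below are hypothetical, and the flat `"string"`/`"number"` type hints simply follow the pattern used in that example rather than a documented specification.

```python
import json


def build_review_payload(page_url: str) -> dict:
    """Build a request body describing the review fields to extract."""
    return {
        "url": page_url,
        "data": {
            "reviewer_name": "string",
            "rating": "number",
            "review_date": "string",
            "helpful_votes": "number",
            "comment": "string",
        },
    }


payload = build_review_payload("https://example-learning-platform.com/courses/101/reviews")
print(json.dumps(payload, indent=2))
```

Building the payload in a function keeps the schema in one place, so the same field list can be reused across every course page you process.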

Integrating Data into US-Based Pipelines

Once you've extracted the data, the next step is integrating it into your systems while adhering to US formatting conventions: prices in USD (e.g., $1,299.99), dates in MM/DD/YYYY format, and numbers with comma thousands separators. One option is to state the target format in each field's description in the extraction request:

    formatted_payload = {
        "url": "target_course_page",
        "data": {
            "course_price": "format as USD currency with $ symbol",
            "start_date": "format as MM/DD/YYYY",
            "duration": "convert to hours if given in other units",
            "enrollment_count": "format with comma separators for thousands",
        },
    }
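If you would rather keep raw numeric values in the extraction and apply US formatting in your own pipeline, Python's built-in format specifiers cover all three conventions. This is a minimal sketch; the function names are illustrative.

```python
from datetime import date


def format_usd(amount: float) -> str:
    """Format a number as US currency with comma separators and two decimals."""
    return f"${amount:,.2f}"


def format_us_date(d: date) -> str:
    """Format a date as MM/DD/YYYY."""
    return d.strftime("%m/%d/%Y")


def format_count(n: int) -> str:
    """Format an integer with comma thousands separators."""
    return f"{n:,}"


print(format_usd(1299.99))               # $1,299.99
print(format_us_date(date(2025, 9, 1)))  # 09/01/2025
print(format_count(12500))               # 12,500
```

Formatting at display time keeps the stored values numeric, which makes sorting and aggregation in a warehouse simpler.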

To integrate with ETL pipelines, the scraping calls can be wrapped in tasks for a workflow orchestrator. For example, Apache Airflow users can create DAGs to schedule and automate scraping jobs:

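    from datetime import datetime

    import requests
    from airflow import DAG
    from airflow.operators.python import PythonOperator  # Airflow 2.x import path

    def scrape_courses():
        # Your InstantAPI.ai scraping logic here; the return value is pushed to XCom
        payload = {"url": "https://example-learning-platform.com/courses", "data": {"course_title": "string"}}
        response = requests.post(
            "https://api.instantapi.ai/scrape", json=payload,
            headers={"Authorization": "Bearer your_api_key_here"}, timeout=60,
        )
        response.raise_for_status()
        return response.json()

    def load_to_warehouse(ti):
        data = ti.xcom_pull(task_ids='scrape_task')
        # Load to your data warehouse (Snowflake, BigQuery, etc.)

    dag = DAG(
        'course_data_pipeline',
        schedule='@daily',  # use schedule_interval on Airflow versions before 2.4
        start_date=datetime(2024, 1, 1),  # Airflow needs a start date to schedule runs
        catchup=False,  # do not backfill runs for past dates
    )

    scrape_task = PythonOperator(task_id='scrape_task', python_callable=scrape_courses, dag=dag)
    load_task = PythonOperator(task_id='load_task', python_callable=load_to_warehouse, dag=dag)

    scrape_task >> load_task  # load only after the scrape has succeeded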

When working with educational data, compliance is critical. FERPA regulations, for instance, require careful handling of student-related information. Ensure your processes include anonymization and audit logging to meet these standards. InstantAPI.ai's pricing - $2 per 1,000 pages - makes it cost-effective to set up separate workflows for compliance-sensitive tasks.
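As one illustration of what anonymization and audit logging can look like in code, the sketch below replaces a reviewer name with a keyed hash and writes a log entry for each record processed. The field names and the 16-character token length are assumptions. Note also that a keyed hash is pseudonymization rather than full anonymization, so whether it satisfies your obligations is a question for your compliance team.

```python
import hashlib
import hmac
import logging

logging.basicConfig(level=logging.INFO)
audit_log = logging.getLogger("audit")


def pseudonymize(value: str, secret_key: bytes) -> str:
    """Replace an identifier with a keyed hash so records stay linkable but unnamed."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256).hexdigest()
    return digest[:16]


def scrub_review(review: dict, secret_key: bytes) -> dict:
    """Return a copy of `review` with the reviewer name replaced by a pseudonym."""
    cleaned = dict(review)  # leave the caller's record untouched
    cleaned["reviewer_id"] = pseudonymize(cleaned.pop("reviewer_name"), secret_key)
    audit_log.info("pseudonymized reviewer field for review dated %s", cleaned.get("review_date"))
    return cleaned
```

Because the same name and key always produce the same token, reviews by one person can still be grouped without storing the name itself. The key should be kept outside the dataset.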

For real-time integration, you can use webhook-style systems to monitor course catalog updates. This ensures your internal systems always reflect the latest information without manual intervention.
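Whatever delivers the update, the receiving side needs to work out what actually changed. A minimal sketch, assuming each catalog snapshot is a dictionary keyed by a course ID (the IDs and fields here are hypothetical):

```python
def diff_catalog(previous: dict, current: dict) -> dict:
    """Compare two catalog snapshots keyed by course ID and report what changed."""
    added = sorted(current.keys() - previous.keys())
    removed = sorted(previous.keys() - current.keys())
    changed = sorted(
        cid for cid in current.keys() & previous.keys() if current[cid] != previous[cid]
    )
    return {"added": added, "removed": removed, "changed": changed}


yesterday = {"cs101": {"price_usd": 99}, "ds200": {"price_usd": 199}}
today = {"cs101": {"price_usd": 89}, "ml300": {"price_usd": 299}}
print(diff_catalog(yesterday, today))
# {'added': ['ml300'], 'removed': ['ds200'], 'changed': ['cs101']}
```

Only the courses in the diff need to be pushed to internal systems, which keeps update traffic small.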

Solving US-Specific Technical Issues

US educational platforms often implement strict anti-bot measures, especially during peak enrollment periods. To address this, InstantAPI.ai automates CAPTCHA resolution, handles rate limits, and manages infinite scrolling. Scheduling scraping during off-peak hours (e.g., 2 AM EST) can further optimize costs and improve data capture.

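    import time

    import schedule

    def scrape_large_catalog():
        ...  # placeholder: your InstantAPI.ai scraping logic goes here

    def off_peak_scraping():
        # Run large scraping jobs at 2 AM Eastern,
        # when educational platforms have lower traffic
        scrape_large_catalog()

    # The timezone argument needs schedule >= 1.2.0 and pytz; without it, "02:00" is local time
    schedule.every().day.at("02:00", "America/New_York").do(off_peak_scraping)

    while True:  # schedule only runs jobs when polled
        schedule.run_pending()
        time.sleep(60)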

Infinite scroll, common on course catalog pages, is another challenge. InstantAPI.ai can detect when additional content loads and continue scraping until all data is captured:

    dynamic_payload = {
        "url": "university_course_search_results",
        "wait_for": "course listings to fully load",
        "data": {
            "courses": "array of course objects with full details"
        },
    }

Error recovery is essential for large-scale data extraction. While InstantAPI.ai includes automatic retries with exponential backoff, it's wise to implement monitoring systems to track success rates. Be aware of platform maintenance schedules, often during weekends, to avoid unnecessary retries.
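For calls made from your own code, a client-side version of the same idea might look like the sketch below. It is not InstantAPI.ai's internal retry logic; the function and class names, the four-attempt limit, and the one-second base delay are all illustrative choices.

```python
import time


def call_with_backoff(fn, max_attempts=4, base_delay_s=1.0, sleep=time.sleep):
    """Call `fn`; after each failure wait base_delay_s * 2**attempt, then retry."""
    for attempt in range(max_attempts):
        try:
            return fn()
        except Exception:
            if attempt == max_attempts - 1:
                raise  # out of attempts: surface the last error
            sleep(base_delay_s * 2 ** attempt)


class SuccessTracker:
    """Keeps running counts so a success rate can be monitored or alerted on."""

    def __init__(self):
        self.ok = 0
        self.failed = 0

    def record(self, succeeded: bool):
        if succeeded:
            self.ok += 1
        else:
            self.failed += 1

    @property
    def success_rate(self) -> float:
        total = self.ok + self.failed
        return self.ok / total if total else 0.0
```

With the defaults, the waits between attempts are 1, 2, and 4 seconds. Recording each final outcome in a `SuccessTracker` gives a simple number to watch, for example to pause a job when the rate drops during a maintenance window.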

These strategies enhance the reliability of data extraction and integration for US educational institutions. By automating complex tasks and leveraging intelligent retry systems, you can focus on analyzing data rather than troubleshooting. At $2 per 1,000 pages, this approach remains budget-friendly, even for complex platforms requiring multiple attempts or specialized handling.

Improving User Experience with AI-Driven Web Scraping

AI-driven web scraping is transforming how educational platforms serve their users, taking data extraction and integration to the next level. By gathering information from multiple sources, these systems enable smarter, more responsive services that make learning more tailored, inclusive, and affordable.

Personalized Learning Through Accurate Data

Today’s learners expect course recommendations that align with their career goals and skill levels. AI-driven web scraping helps meet this demand by collecting data like course titles, reviews, and enrollment trends to create customized suggestions.

Using methods like content-based and collaborative filtering, platforms can analyze this data to offer highly specific recommendations. For instance, a software engineer in Austin might be recommended advanced Python courses tailored to the local job market and the success of similar learners. One platform even saw a surge in enrollments and engagement after incorporating trending courses identified through AI-driven scraping.
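To show the content-based side of this in its simplest form, the sketch below ranks courses by how much their tags overlap with a learner's interests, using Jaccard similarity. The catalog, tags, and function names are invented for illustration; a production recommender would use richer features and would usually combine this with collaborative signals.

```python
def jaccard(a: set, b: set) -> float:
    """Overlap between two tag sets: size of intersection over size of union."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def recommend(learner_tags: set, catalog: dict, top_n: int = 2) -> list:
    """Rank courses by tag overlap with the learner's interests."""
    scored = [(jaccard(learner_tags, tags), title) for title, tags in catalog.items()]
    scored = [(score, title) for score, title in scored if score > 0]
    scored.sort(key=lambda pair: (-pair[0], pair[1]))  # best score first, then by title
    return [title for _, title in scored[:top_n]]


catalog = {
    "Advanced Python": {"python", "backend", "testing"},
    "Intro to Painting": {"art", "color"},
    "Data Engineering with Python": {"python", "etl", "sql"},
}
print(recommend({"python", "backend"}, catalog))
# ['Advanced Python', 'Data Engineering with Python']
```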

AI also addresses challenges like language barriers and knowledge gaps. If a learner struggles with advanced material, the system might suggest foundational courses or provide multilingual content to bridge the divide.

Cost-Efficiency and Scalability with InstantAPI.ai

Educational organizations often experience fluctuating demand, with heightened activity during enrollment periods and slower months in between. InstantAPI.ai’s pay-as-you-go model is designed to handle these shifts efficiently, avoiding monthly minimums or fixed licensing fees. Research shows that AI-powered scraping tools can reduce data extraction time by 30–40%, and some businesses have reported cutting operational costs by as much as 40% by reallocating resources from maintenance to analysis.

AI systems also excel at processing large volumes of data simultaneously. They can manage course catalogs, reviews, and enrollment data from multiple sources in parallel, which is especially critical during peak enrollment times. Real-time updates on course availability, pricing, and competitive offerings are handled seamlessly. Plus, InstantAPI.ai requires zero maintenance - its ability to adapt to changes in website structures ensures uninterrupted data flow without the need for developer intervention. These features directly enhance the user experience by delivering timely and accurate content.

Improving Accessibility and Compliance

Educational platforms in the U.S. must adhere to strict accessibility regulations like the Americans with Disabilities Act (ADA) and Section 508 of the Rehabilitation Act. AI tools not only streamline data extraction but also ensure that content remains inclusive and compliant. Automated accessibility checks flag issues such as missing captions or improper document formatting, helping platforms maintain high standards for all users.
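A very small version of such a check can be written with Python's standard library. The sketch below flags images with no `alt` attribute and videos with no captions or subtitles track. It covers only these two rules and is nowhere near a full ADA or Section 508 audit; the class and function names are illustrative.

```python
from html.parser import HTMLParser


class AccessibilityChecker(HTMLParser):
    """Flags <img> tags without an alt attribute and <video> tags without captions."""

    def __init__(self):
        super().__init__()
        self.issues = []
        self._video_has_captions = None  # None when not inside a <video>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "img" and "alt" not in attrs:
            self.issues.append(f"img missing alt attribute: {attrs.get('src', '?')}")
        elif tag == "video":
            self._video_has_captions = False
        elif tag == "track" and self._video_has_captions is not None:
            if attrs.get("kind") in ("captions", "subtitles"):
                self._video_has_captions = True

    def handle_endtag(self, tag):
        if tag == "video":
            if self._video_has_captions is False:
                self.issues.append("video missing captions track")
            self._video_has_captions = None


def find_accessibility_issues(html: str) -> list:
    """Return a list of human-readable issues found in `html`."""
    checker = AccessibilityChecker()
    checker.feed(html)
    checker.close()
    return checker.issues
```

An empty `alt=""` is deliberately not flagged, since that is the conventional way to mark decorative images.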

AI-driven scraping tools boast data accuracy rates of up to 99.5% when handling complex or unstructured information. Staying proactive with compliance monitoring can help institutions avoid hefty ADA fines, which can reach up to $100,000, while also ensuring content is accessible to diverse user groups. For example, AI can recommend adding audio descriptions, offering multilingual options, or optimizing navigation for screen readers based on user behavior and enrollment data.

As accessibility guidelines evolve, for example with WCAG 2.2, which became a W3C Recommendation in October 2023, automated monitoring helps platforms keep pace with regulatory changes. This not only protects institutions from compliance risks but also reinforces their commitment to inclusive education, benefiting both learners and educators.

Key Takeaways for Web Scraping in Online Learning

For educational organizations striving to keep up in the fast-paced world of online learning, advanced web scraping has become a game-changer. It’s no longer just about gathering data - it’s about redefining how platforms operate and serve their students.

AI-powered scraping tools are leading the charge with their efficiency and dependability. Unlike traditional scrapers, which often require significant development time to manage issues like selector drift and proxy handling, tools like InstantAPI.ai simplify the process. By automatically managing tasks like proxy rotation, CAPTCHA solving, and JavaScript rendering, these tools free up educational teams to focus on creating value rather than dealing with technical headaches.

This efficiency is driving rapid market growth. According to a report by Future Market Insights, AI web scraping applications are expected to grow at an annual rate of 17.8% between 2023 and 2033, reaching $3.3 billion. This growth highlights how the technology is streamlining data extraction while minimizing the need for constant manual intervention.

For educational organizations, the benefits are clear. Real-time curriculum updates and personalized learning experiences are now achievable on a large scale. Automated accessibility checks also help reduce compliance risks, ensuring content remains available to a broad range of users and keeping institutions ahead of regulatory requirements.

The rise of Data-as-a-Service models is another major shift. Instead of investing in complex scraping infrastructures, organizations can now access clean, structured data through simple API calls. This eliminates the need for hiring specialized web scraping professionals, who earn an average of $59.01 per hour in the U.S., while delivering more consistent and scalable outcomes.

As we look to the future, the combination of AI and big data is set to drive even greater advancements in web scraping. These tools will continue to help educational platforms adopt smarter, more scalable data strategies to meet the demands of evolving learning environments.

FAQs

How can web scraping help online learning platforms deliver personalized content?

Web scraping allows online learning platforms to gather and analyze data like user preferences, engagement patterns, and feedback. This data helps platforms design personalized learning paths, suggest relevant courses, and compile resources that match each learner's unique needs.

With this approach, platforms can boost user engagement and make learning more effective, offering students a tailored and impactful educational experience.

How can online learning platforms effectively handle challenges like CAPTCHAs and dynamic content when scraping data?

To overcome obstacles like CAPTCHAs and dynamic content, online learning platforms can use browser automation tools such as Selenium, Playwright, or Puppeteer. These tools drive a real browser, so navigation looks closer to normal user behavior and tends to trigger fewer challenges, but they do not solve CAPTCHAs on their own. When a challenge does appear, an integrated CAPTCHA-solving service can handle it automatically, saving time and effort.

When dealing with dynamic content, headless browsers come in handy. They execute JavaScript, ensuring that all webpage elements, including those loaded dynamically, are accessible for data extraction. These strategies make data scraping more reliable and efficient, even when faced with advanced web technologies.

How does InstantAPI.ai make web scraping easier and more efficient for educational platforms?

InstantAPI.ai simplifies web scraping for educational platforms with its AI-driven, no-selector API that adjusts automatically to website updates. Traditional scraping methods often demand constant tweaking and troubleshooting, but InstantAPI.ai handles hurdles like CAPTCHA solving, JavaScript rendering, and geotargeting for you, cutting down on time and effort.

By removing the need for custom scrapers or manual interventions, InstantAPI.ai ensures quicker and more dependable data extraction. This makes it a great fit for institutions aiming to collect course details, reviews, or curriculum information efficiently - without driving up costs or dealing with technical headaches.