AI is transforming web scraping by making it faster, more accurate, and more adaptable to complex tasks. Here's how:

- Dynamic Content: AI tools like PromptLoop and ScrapingBee handle JavaScript-rendered content and website changes automatically.

- Pattern Recognition: Tools like ScrapeGraphAI use machine learning to detect and extract patterns from diverse website structures.

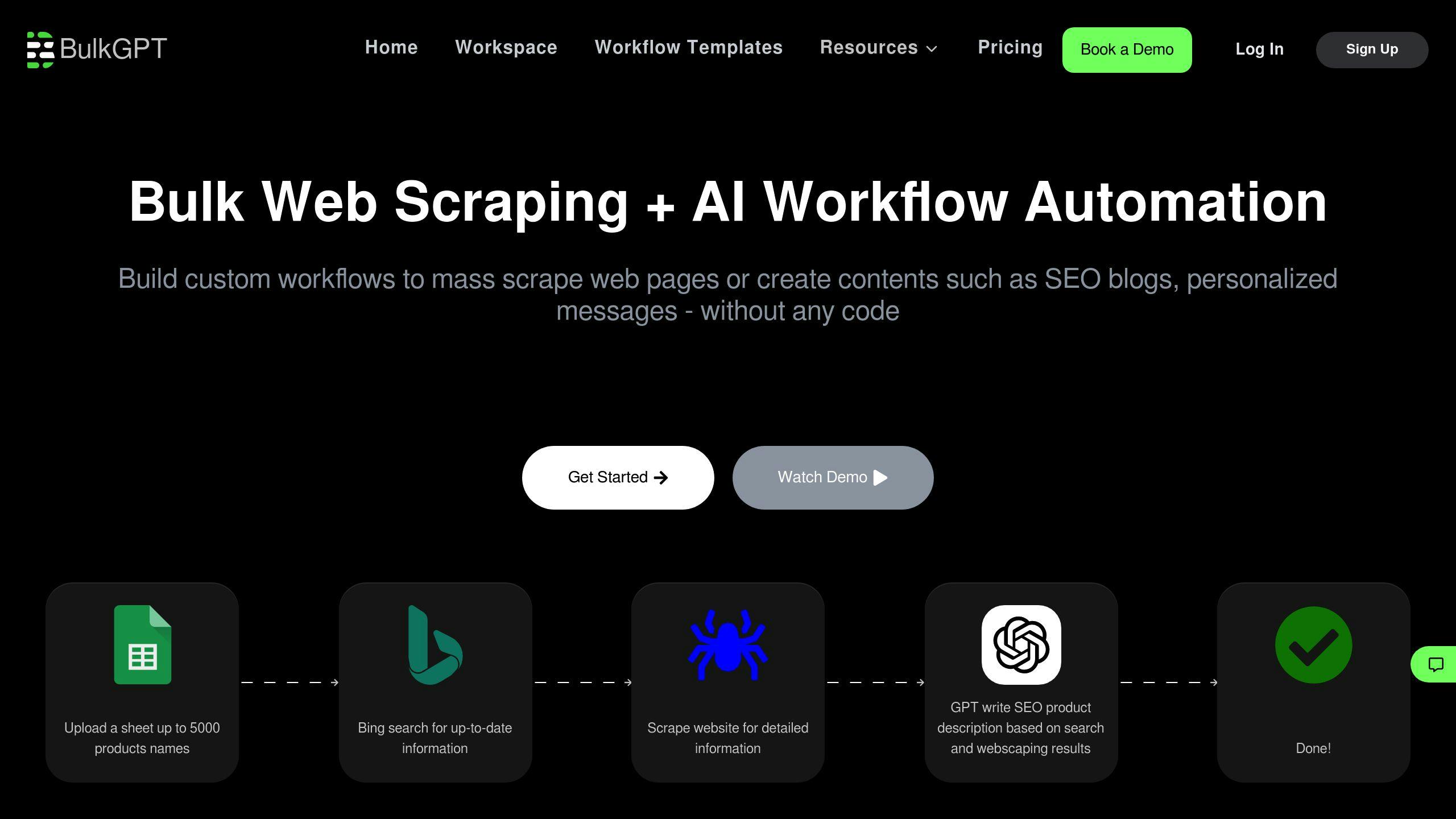

- Custom Workflows: Platforms like BulkGPT and Otio allow real-time adjustments, no-code automation, and seamless API integration for large-scale data collection.

Ways AI Improves Web Scraping Processes

AI introduces capabilities that make data extraction faster and more dependable. Here's how it is reshaping web scraping workflows.

Handling Dynamic Content

Many modern websites load content with JavaScript after the initial page request. Traditional scrapers that only download the raw HTML never see that content. AI-powered tools like PromptLoop and ScrapingBee address this problem. PromptLoop uses machine learning to adapt to asynchronous content loading, which makes it easier to scrape data from dynamic, multi-page websites. ScrapingBee's API renders pages in a headless browser before returning them, so the response includes the dynamically loaded content. It also provides a user-friendly interface for getting clean, organized data.
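To illustrate the ScrapingBee approach, the sketch below builds a request URL for its HTML API. It assumes the endpoint `https://app.scrapingbee.com/api/v1/` and the `api_key`, `url`, and `render_js` query parameters from ScrapingBee's public documentation. Confirm them against the current docs before relying on this. The helper name `build_scrapingbee_url` is hypothetical.

```python
from urllib.parse import urlencode

# Assumption: endpoint and parameter names follow ScrapingBee's public HTML API
# docs at the time of writing; verify them before relying on this.
SCRAPINGBEE_ENDPOINT = "https://app.scrapingbee.com/api/v1/"


def build_scrapingbee_url(api_key: str, target_url: str, render_js: bool = True) -> str:
    """Build a GET URL asking ScrapingBee to fetch, and optionally render, a page."""
    params = {
        "api_key": api_key,
        # urlencode percent-encodes the target, so its own "?page=2" survives intact
        "url": target_url,
        # "true" requests headless-browser rendering so JavaScript-loaded content appears
        "render_js": "true" if render_js else "false",
    }
    return f"{SCRAPINGBEE_ENDPOINT}?{urlencode(params)}"
```

You could then pass the returned URL to `urllib.request.urlopen` or any other HTTP client to get the rendered HTML. Letting `urlencode` encode the target URL matters here. Without it, a target with its own query string would be misread as extra API parameters.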

Beyond handling dynamic content, AI also adapts to complex data patterns with little need for manual intervention.

Recognizing Patterns Automatically

Pattern recognition has removed much of the manual work in web scraping. Tools like ScrapeGraphAI use large language models (LLMs) to detect data patterns across a variety of website layouts, which helps keep extraction accurate as site designs change. These tools automatically:

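- Detect recurring patterns across different website structures

- Keep extraction accurate over time, because fields are identified by what they mean rather than by where they sit on the page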
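ScrapeGraphAI has its own API, and it is not reproduced here. The sketch below only illustrates the general LLM-extraction pattern these tools rely on. You describe the wanted fields in plain language, ask the model for JSON, and validate what comes back. `call_llm` is a hypothetical stand-in for whatever model client you use, and the prompt wording is an assumption.

```python
import json
from typing import Callable

# Hypothetical: any function that sends a prompt to an LLM and returns its text reply.
LLMCall = Callable[[str], str]


def build_prompt(html: str, fields: dict[str, str]) -> str:
    """Describe the wanted fields in plain language instead of hard-coding selectors."""
    field_lines = "\n".join(f"- {name}: {desc}" for name, desc in fields.items())
    return (
        "Extract every item from the HTML below. Reply with only a JSON array of "
        "objects using exactly these keys:\n"
        f"{field_lines}\n\nHTML:\n{html}"
    )


def extract_items(html: str, fields: dict[str, str], call_llm: LLMCall) -> list[dict]:
    """Ask the model for JSON, then keep only records that contain every field."""
    reply = call_llm(build_prompt(html, fields))
    try:
        data = json.loads(reply)
    except json.JSONDecodeError:
        return []  # non-JSON reply: the caller can retry or log it
    if not isinstance(data, list):
        return []
    return [item for item in data if isinstance(item, dict) and all(k in item for k in fields)]


if __name__ == "__main__":
    def fake_llm(prompt: str) -> str:  # stand-in for a real model call
        return '[{"title": "Desk lamp", "price": "24.99"}, {"title": "Chair"}]'

    demo_fields = {"title": "product name", "price": "price as displayed"}
    print(extract_items("<ul>...</ul>", demo_fields, fake_llm))
    # prints [{'title': 'Desk lamp', 'price': '24.99'}]
```

The validation step matters because model output is not guaranteed to be well formed. Dropping incomplete records keeps bad rows out of downstream data.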

Because extraction no longer depends on hand-written selectors for every layout, teams can also adjust their workflows on the fly, making web scraping more precise and efficient.

Customizing Workflows in Real Time

AI tools also make it easier to tailor web scraping workflows. For example, BulkGPT offers no-code automation that supports dynamic workflow adjustments, API integration, and automated data formatting. This lets teams adapt to changing project needs while keeping results reliable.

Similarly, Otio's AI-powered platform simplifies large-scale data collection. Researchers can easily tweak scraping settings and analyze massive datasets without sacrificing speed or accuracy.

Best AI Tools for Web Scraping

AI-driven web scraping tools have made data extraction smoother and more efficient. These tools simplify the process, letting users extract and manage data without needing advanced technical skills.

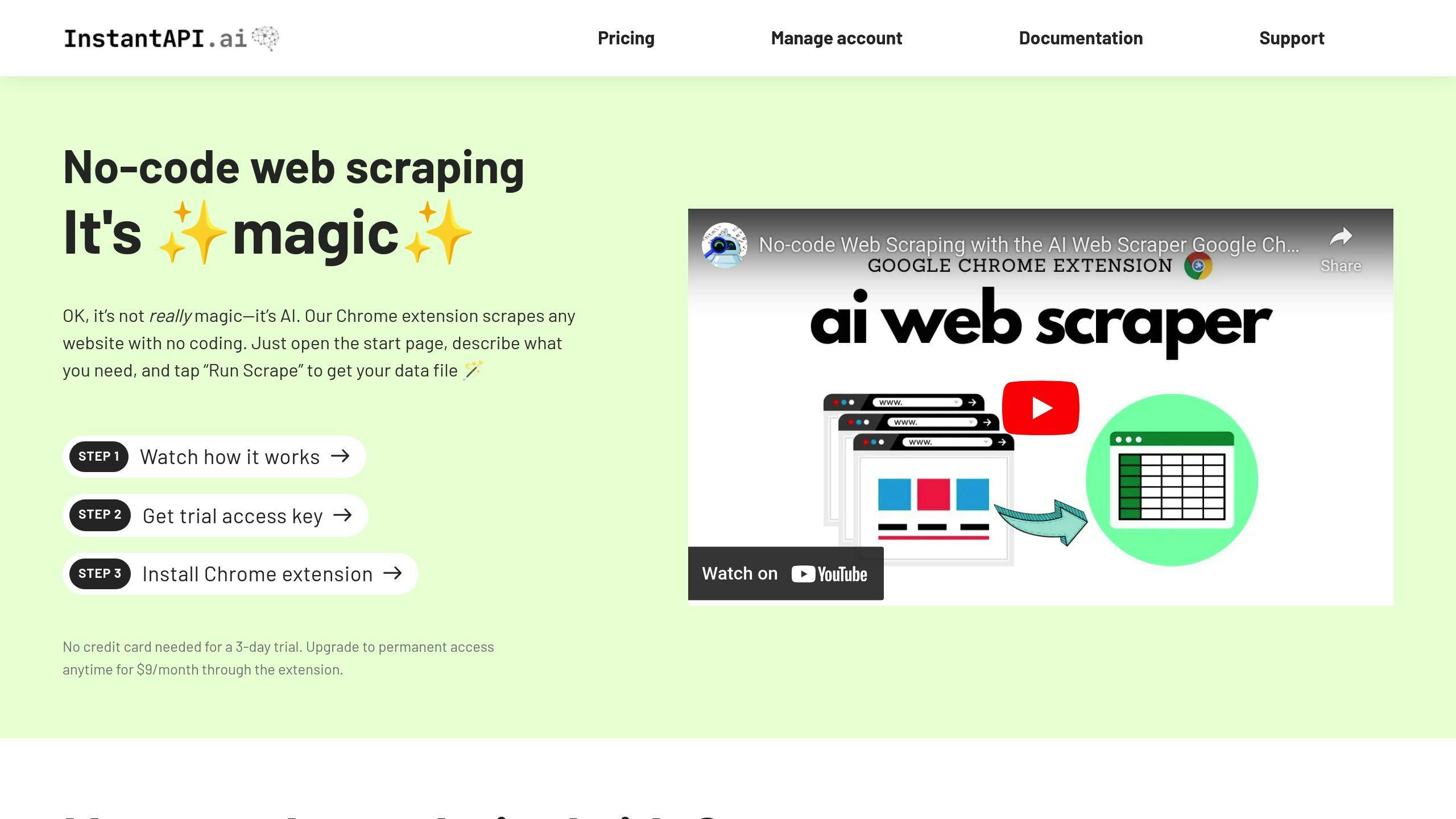

InstantAPI.ai: No-Code and API Scraping

InstantAPI.ai offers two ways to scrape: a Chrome extension and an API. Developed by Anthony Ziebell, the tool requires no coding while still delivering professional-grade functionality. The Chrome extension supports unlimited scraping, automated pattern recognition, and JavaScript rendering.

One standout feature is its ability to handle dynamic content without requiring XPath expertise. When websites change, InstantAPI.ai updates its scraping patterns automatically, so data extraction continues without interruption. For businesses, the platform provides custom API solutions, dedicated account management, and tailored data strategies.
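InstantAPI.ai does not document how its automatic pattern updates work, so the following is not its method. It is a common, non-AI way to get some of the same resilience. The code tries a primary selector first and falls back to a content pattern when the layout changes. The class name `price`, the US-style currency regex, and all function names are hypothetical.

```python
import re
from html.parser import HTMLParser
from typing import Callable, Optional

# A strategy takes raw HTML and returns the extracted value, or None on a miss.
Strategy = Callable[[str], Optional[str]]
VOID_TAGS = {"br", "img", "input", "hr", "meta", "link", "source", "wbr"}


class ClassTextParser(HTMLParser):
    """Collects the text inside the first element whose class list contains css_class."""

    def __init__(self, css_class: str) -> None:
        super().__init__()
        self.css_class = css_class
        self.depth = 0       # nesting depth while inside the matched element
        self.found = False   # True once the matched element has closed
        self.parts: list[str] = []

    def handle_starttag(self, tag, attrs):
        if self.found or tag in VOID_TAGS:
            return  # void tags never close, so they must not change depth
        if self.depth:
            self.depth += 1
        elif self.css_class in (dict(attrs).get("class") or "").split():
            self.depth = 1

    def handle_endtag(self, tag):
        if self.depth and tag not in VOID_TAGS:
            self.depth -= 1
            self.found = self.depth == 0

    def handle_data(self, data):
        if self.depth:
            self.parts.append(data)


def by_css_class(css_class: str) -> Strategy:
    """Primary strategy: the selector approach that breaks when class names change."""
    def strategy(html: str) -> Optional[str]:
        parser = ClassTextParser(css_class)
        parser.feed(html)
        parser.close()
        return "".join(parser.parts).strip() or None
    return strategy


# US-style amounts such as $24.99, $1299.00 or $1,299.00
PRICE_RE = re.compile(r"[$€£]\s?\d+(?:,\d{3})*(?:\.\d{2})?")


def by_price_pattern(html: str) -> Optional[str]:
    """Fallback: strip tags crudely and look for a currency amount anywhere."""
    text = re.sub(r"<[^>]+>", " ", html)
    match = PRICE_RE.search(text)
    return match.group(0) if match else None


def extract_with_fallbacks(
    html: str, strategies: list[tuple[str, Strategy]]
) -> tuple[Optional[str], Optional[str]]:
    """Return (value, name of the strategy that succeeded), or (None, None)."""
    for name, strategy in strategies:
        value = strategy(html)
        if value is not None:
            return value, name
    return None, None
```

Recording which strategy succeeded tells you when a primary selector has started failing. That is the signal to update it.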

Zyte AI Scraping: Automated Crawling and Parsing

Zyte excels at handling complex scraping tasks with its advanced crawling and parsing capabilities. Its AI system can:

- Adjust to changes in website structures automatically

- Seamlessly work with JavaScript-rendered content

- Navigate anti-bot systems effectively

- Process and clean data in real-time
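Zyte exposes these capabilities through its Zyte API. The minimal sketch below builds a request. It assumes the `https://api.zyte.com/v1/extract` endpoint, HTTP Basic authentication with the API key as the username and an empty password, and the `browserHtml` and `product` request fields described in Zyte's documentation. Verify these before use. The function name is hypothetical, and the sketch only builds the request without sending it.

```python
import base64
import json
import urllib.request

# Assumption: endpoint, auth scheme and field names follow Zyte API's public docs.
ZYTE_ENDPOINT = "https://api.zyte.com/v1/extract"


def build_zyte_request(api_key: str, url: str, auto_product: bool = False) -> urllib.request.Request:
    """Build a POST asking Zyte for rendered HTML or AI-extracted product data."""
    payload: dict = {"url": url}
    if auto_product:
        payload["product"] = True       # structured product fields via automatic extraction
    else:
        payload["browserHtml"] = True   # HTML after browser rendering
    # Basic auth: API key as the username, empty password.
    token = base64.b64encode(f"{api_key}:".encode()).decode()
    return urllib.request.Request(
        ZYTE_ENDPOINT,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Authorization": f"Basic {token}", "Content-Type": "application/json"},
        method="POST",
    )
```

Sending the request with `urllib.request.urlopen(req)` should return a JSON document. Its `browserHtml` or `product` key holds the rendered page or the extracted data. The sketch keeps the two modes separate for simplicity.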

Zyte is a great choice for large-scale projects, offering customizable solutions to meet specific data extraction demands. It’s particularly useful for enterprise-level operations requiring high accuracy and scalability.

BulkGPT: Simplifying Bulk Data Scraping

BulkGPT integrates with tools like Google Sheets, making workflows more efficient and allowing immediate use of extracted data. Beyond basic scraping, BulkGPT can:

- Organize and structure large datasets automatically

- Clean and format data for instant usability

- Extract data from a variety of websites

- Ensure accuracy with AI-powered validation
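BulkGPT's internal pipeline is not documented here, but the clean, format, and validate steps listed above can be sketched generically. The example assumes hypothetical scraped records with `title`, `price`, and `url` fields. It normalizes them, drops invalid rows and duplicate URLs, and emits CSV text that a spreadsheet tool such as Google Sheets can import. Simple rule-based checks stand in for the AI-powered validation the tool advertises.

```python
import csv
import io
import re
from typing import Iterable, Optional

FIELDS = ("title", "price", "url")


def clean_row(row: dict) -> Optional[dict]:
    """Normalize one scraped record; return None if it fails validation."""
    title = " ".join(str(row.get("title", "")).split())  # collapse stray whitespace
    url = str(row.get("url", "")).strip()
    # Assumes US-style prices: "$1,299.00" -> "1299.00"
    digits = re.sub(r"[^\d.]", "", str(row.get("price", "")))
    try:
        price = round(float(digits), 2)
    except ValueError:
        return None  # missing or unparseable price
    if not title or not url.startswith(("http://", "https://")):
        return None
    return {"title": title, "price": price, "url": url}


def rows_to_csv(rows: Iterable[dict]) -> str:
    """Clean and validate rows, drop duplicate URLs, and return CSV text."""
    seen: set[str] = set()
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=FIELDS)
    writer.writeheader()
    for row in rows:
        cleaned = clean_row(row)
        if cleaned is not None and cleaned["url"] not in seen:
            seen.add(cleaned["url"])
            writer.writerow(cleaned)
    return out.getvalue()
```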

These tools cater to different needs, from small projects to large-scale enterprise applications. Each has distinct strengths. The right choice depends on your project goals, technical skills, and scalability requirements.

Tips for Using AI in Web Scraping

Streamlining Workflows and API Connections

AI tools can simplify large-scale data extraction by automating complex tasks like pattern recognition and dynamic content processing. For instance, tools such as BulkGPT can connect with platforms like Google Sheets or work with CSV files, reducing tedious manual steps to a few minutes of automated work.

To make your workflows smoother, focus on:

- Automating tasks like data cleaning, validation, and formatting

- Setting up robust error handling, such as automatic retries for transient failures and real-time alerts when a job breaks

- Using tools that adjust to changes in website structures automatically
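One common way to implement the error handling described above is to retry transient failures with exponential backoff. A minimal sketch follows. The function name, default values, and the `on_error` hook (where a real-time alert could be sent) are illustrative assumptions, not part of any tool discussed here.

```python
import random
import time
from typing import Callable, TypeVar

T = TypeVar("T")


def with_retries(
    fetch: Callable[[], T],
    attempts: int = 4,
    base_delay: float = 1.0,
    retry_on: tuple[type[BaseException], ...] = (OSError,),
    sleep: Callable[[float], None] = time.sleep,
    on_error: Callable[[int, BaseException], None] = lambda attempt, exc: None,
) -> T:
    """Call fetch(), retrying transient failures with exponential backoff and jitter."""
    if attempts < 1:
        raise ValueError("attempts must be at least 1")
    for attempt in range(1, attempts + 1):
        try:
            return fetch()
        except retry_on as exc:
            on_error(attempt, exc)  # hook for logging or real-time alerts
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            # 1 s, 2 s, 4 s, ... plus up to 10% jitter so parallel workers don't sync up
            delay = base_delay * 2 ** (attempt - 1)
            sleep(delay + random.uniform(0, delay * 0.1))
    raise AssertionError("unreachable")
```

By default the function retries on `OSError`, which covers `urllib.error.URLError`, connection errors, and timeouts. `urllib.error.HTTPError` is also a subclass, though, so a permanent 404 would be retried too. Narrowing `retry_on` avoids that.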

Integrating APIs into your process can also improve efficiency. By linking scraped data directly to your business systems, you enable real-time processing and ensure data remains consistent and accurate across platforms.
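The sketch below pushes scraped records into a business system in batches. The ingestion URL, the bearer-token scheme, and the `{"records": [...]}` payload shape are all hypothetical, so adapt them to the API your system actually exposes. The function only builds the requests. Each one can then be sent with `urllib.request.urlopen`, ideally inside a retry wrapper.

```python
import json
import urllib.request
from typing import Iterator

# Hypothetical ingestion endpoint; replace with your own system's API.
INGEST_URL = "https://internal.example.com/api/scraped-records"


def chunked(records: list[dict], size: int) -> Iterator[list[dict]]:
    """Yield consecutive slices of at most `size` records."""
    for start in range(0, len(records), size):
        yield records[start:start + size]


def build_ingest_requests(
    records: list[dict], token: str, batch_size: int = 100
) -> list[urllib.request.Request]:
    """Wrap each batch of records in an authenticated JSON POST request."""
    return [
        urllib.request.Request(
            INGEST_URL,
            data=json.dumps({"records": batch}).encode("utf-8"),
            headers={"Content-Type": "application/json", "Authorization": f"Bearer {token}"},
            method="POST",
        )
        for batch in chunked(records, batch_size)
    ]
```

Batching keeps the request count manageable for large scrapes while still getting data into downstream systems shortly after collection.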

Staying Compliant and Following Best Practices

While improving workflows and integrating APIs, it’s crucial to stay compliant with legal and ethical standards. Here’s how you can ensure compliance:

- Use encryption for sensitive data and secure storage solutions

- Respect website rules like robots.txt and adhere to rate limits

- Keep detailed records of your data collection methods and retention policies
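Python's standard library includes `urllib.robotparser`, which can check robots.txt rules before a page is fetched. The sketch below pairs it with a simple per-host delay and uses the site's `Crawl-delay` when one is declared. The class name `PoliteGate` and the 1-second default delay are assumptions. The injectable `clock` and `sleep` parameters exist only to make the timing testable.

```python
import time
import urllib.robotparser
from typing import Callable, Optional


class PoliteGate:
    """Checks robots.txt rules and spaces out requests to a single host."""

    def __init__(
        self,
        robots_lines: list[str],
        user_agent: str,
        default_delay: float = 1.0,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.rules = urllib.robotparser.RobotFileParser()
        self.rules.parse(robots_lines)
        self.user_agent = user_agent
        # Prefer the site's own Crawl-delay when it declares one.
        declared = self.rules.crawl_delay(user_agent)
        self.delay = float(declared) if declared is not None else default_delay
        self.clock, self.sleep = clock, sleep
        self.last_request: Optional[float] = None

    def allowed(self, url: str) -> bool:
        """True if robots.txt permits this user agent to fetch the URL."""
        return self.rules.can_fetch(self.user_agent, url)

    def wait_turn(self) -> None:
        """Block until at least `delay` seconds have passed since the last request."""
        now = self.clock()
        if self.last_request is not None:
            remaining = self.delay - (now - self.last_request)
            if remaining > 0:
                self.sleep(remaining)
                now += remaining
        self.last_request = now
```

In practice the rules would come from the live site, for example through `RobotFileParser.set_url(...)` followed by `read()`. You would call `wait_turn()` before every request to that host and skip any URL for which `allowed()` returns `False`.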

For larger operations, you might want to explore compliance management services that align with industry regulations. These services can help you maintain high performance while ensuring legal and ethical standards are met.

When using AI-driven tools, it’s important to balance automation with ethical considerations. Regularly monitor and document your data usage to maintain transparency and build trust, all while benefiting from the efficiency of AI in web scraping.

Conclusion: AI's Role in the Future of Web Scraping

AI has transformed web scraping from a tedious manual process into an intelligent, efficient approach. Tools like Zyte AI Scraping and InstantAPI.ai showcase how AI can manage complex data extraction with precision while staying compliant with regulations.

These tools are shaping the future of web scraping by enabling flexible, personalized workflows. Because they process data quickly and accurately, AI-powered tools have simplified scraping for non-technical users while still offering advanced features for more complex needs.

Platforms like Diffbot are taking things further by using natural language processing to automatically identify, organize, and structure web data in ways that were previously out of reach. Such developments point to a future where web scraping adapts in real-time to shifting website designs and data formats.

As AI technology progresses, data extraction will become smarter, more efficient, and better suited to a variety of business demands, all while maintaining the necessary balance between automation and compliance.