Educational platforms are transforming how they collect and organize learning materials using web scraping. This process automates the gathering of resources like research papers, e-books, and online courses, making them easier to access and manage. By turning scattered online content into structured databases, web scraping helps platforms keep materials updated, track trends, and save time compared to manual methods.

Here’s how web scraping benefits education:

- Centralized Resources: Collects Open Educational Resources (OERs), academic papers, and courses from multiple websites into one searchable database.

- Cost-Effective: Reduces expenses compared to traditional data collection methods like surveys.

- Real-Time Updates: Automatically tracks new resources and changes in teaching trends.

- Improved Searchability: Organizes metadata (e.g., author, subject, difficulty) for easier navigation.

However, platforms must address challenges like legal compliance (e.g., copyright laws, GDPR), ethical scraping practices, and anti-scraping mechanisms. Tools range from no-code browser extensions to AI-powered solutions, which can adapt to dynamic websites and better classify data.

Web scraping is reshaping education by making resources more accessible and tailored to students’ needs, provided it is done ethically and responsibly.

How Educational Platforms Use Web Scraping

Educational platforms rely on web scraping to gather learning materials from across the internet, automating the process of creating searchable databases. This method allows institutions to provide students and educators with access to a far greater range of educational resources than could ever be collected manually. By extracting structured data from various websites and storing it in local databases or spreadsheets, platforms make it possible to search multiple sources through a single interface. This automation plays a key role in improving access to resources and supporting student achievement. Below, we’ll explore how web scraping specifically helps in collecting Open Educational Resources (OERs), academic papers, and online courses.

Collecting Open Educational Resources (OERs)

Open Educational Resources (OERs) provide free learning materials but are often scattered across numerous websites, making them difficult to find and access. Web scraping tackles this problem by gathering OERs from multiple sources and organizing them into centralized collections. Educational platforms use this technique to extract materials like textbooks, lecture notes, videos, and interactive tools from various OER repositories.

The process involves identifying websites that host open educational content and extracting metadata such as subject area, difficulty level, author details, and licensing information. This metadata is essential for organizing and categorizing resources, making it easier for users to find what they need.
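
As a rough illustration of the metadata described above, the sketch below defines one possible record shape and a normalization step. The `OerRecord` class, its field names, and the `normalize_record` helper are assumptions made for this example, not a standard OER schema.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class OerRecord:
    """Hypothetical metadata record for one open educational resource."""
    title: str
    subject: str
    difficulty: str
    author: str
    license: str
    source_url: str


def normalize_record(raw: dict) -> OerRecord:
    """Trim whitespace and lowercase categorical fields so filters match reliably."""
    def clean(key: str, default: str = "unknown") -> str:
        # Treat missing keys, None, and blank strings the same way.
        value = (raw.get(key) or "").strip()
        return value or default

    return OerRecord(
        title=clean("title"),
        subject=clean("subject").lower(),
        difficulty=clean("difficulty").lower(),
        author=clean("author"),
        license=clean("license"),
        source_url=clean("source_url", default=""),
    )
```

Normalizing categorical fields such as subject and difficulty at ingestion time means that a search for "mathematics" also finds records scraped as "Mathematics".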

Web scraping also ensures collections stay up to date by detecting and adding new resources as they’re published online. By pulling in resources from a variety of sources worldwide, platforms can offer a more diverse selection of educational content that reflects different perspectives and teaching styles. This same approach is also applied to academic research materials.
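
One simple way to detect newly published resources is to compare the URLs found in the latest crawl against those already stored. The sketch below assumes each scraped item is a dictionary with a `url` key; the function name and data shape are illustrative.

```python
def find_new_resources(scraped, known_urls):
    """Return scraped items whose URL is not already known, preserving order."""
    seen = set(known_urls)
    new_items = []
    for item in scraped:
        url = item["url"]
        if url not in seen:
            seen.add(url)  # also guards against repeats within the same crawl
            new_items.append(item)
    return new_items
```

Comparing by URL only catches resources that appear at new addresses. Detecting changes to an existing page would need an extra signal, such as a content hash or a last-modified date.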

Gathering Research Papers and E-Books

Academic research is spread across platforms like JSTOR, ProQuest, and EBSCO, as well as repositories such as ResearchGate and Academia.edu. These platforms often have complex structures, but modern web scraping tools are designed to navigate them and extract valuable content.

Educational platforms use web scraping to gather entire documents and pull metadata like author names, publication dates, citation counts, abstracts, and keyword tags. This detailed metadata improves search and filtering functions, helping students and researchers quickly locate the materials they need. Scheduled scraping routines keep collections current as academic databases are updated with new publications.

Finding Online Courses

The sheer number of online courses across platforms can make it hard for students to find the right ones. Web scraping simplifies this by collecting and organizing course information from various sources, enabling educational platforms to create comprehensive course catalogs. These catalogs allow students to explore learning opportunities that match their goals and skill levels.

Scraping tools extract key course details, such as topics, duration, and enrollment requirements, which help platforms make tailored recommendations. Additionally, these tools can identify trends in course design, such as the rise of modular learning, gamification, and the use of AI or virtual reality in education. By monitoring these trends, institutions can adapt their offerings to align with modern teaching methods and delivery formats, ensuring they stay relevant in an ever-changing educational landscape.

Web Scraping Tools and Methods for Education

Educational institutions have access to a range of web scraping tools designed to meet various data collection needs. The right tool depends on factors like the volume of data, available technical skills, and budget. Over time, scraping solutions have adapted to help educational platforms gather learning materials from diverse online sources effectively.

Web Scraping Tools for Educational Platforms

Educational platforms can leverage different categories of web scraping tools to aggregate resources. Each type offers distinct benefits, making it easier to address specific requirements:

- SaaS platforms: These provide all-in-one solutions with advanced features such as proxy management and headless browsing. For example, InstantAPI.ai offers a budget-friendly pay-per-use model at $2 per 1,000 web pages scraped, with no minimum spend - ideal for institutions looking to manage costs.

- No-code browser extensions: Perfect for institutions with limited technical expertise, these tools run within a browser and can handle dynamic content without needing programming skills. Users can often start with free local data extraction tools, while cloud-based features typically cost around $50 per month.

- Desktop scraper applications: Best for occasional data collection projects, these tools feature simple, point-and-click interfaces. They often include free options for small-scale tasks, with paid plans starting at approximately $119 per month.

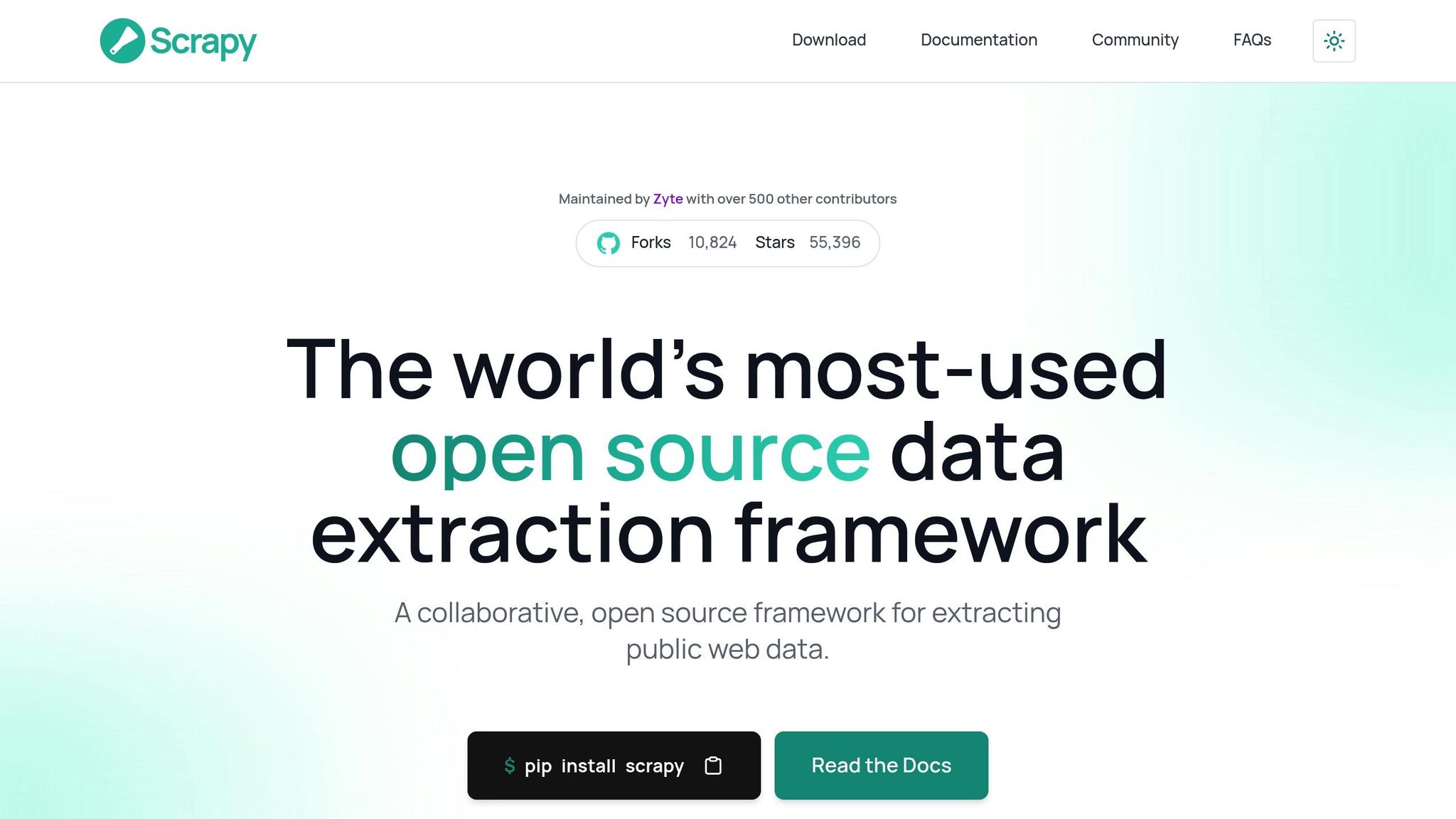

- Open-source frameworks: For institutions with in-house programming expertise, open-source tools offer unmatched flexibility. These frameworks are ideal for large-scale, highly customized scraping needs that pre-built tools can't address.

- AI-powered tools: These tools simplify data collection by allowing users to describe their needs in plain English. They’re particularly helpful for non-technical teams looking to streamline the scraping process.

Python Example: Extracting Educational Data

For those with programming skills, Python is a popular choice for building custom scraping solutions. Its extensive library ecosystem and straightforward syntax make it well-suited for educational projects. Below is a Python script that demonstrates how to extract course information from an educational platform. The CSS class names (such as course-card) are placeholders and must be adapted to the markup of the target site:

    import csv
    import time

    import requests
    from bs4 import BeautifulSoup

    # Headers that mimic browser behavior
    HEADERS = {
        'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'
    }


    def get_text(parent, tag, css_class):
        """Return the stripped text of a child element, or None if it is missing."""
        element = parent.find(tag, class_=css_class)
        return element.text.strip() if element else None


    def scrape_course_data(base_url, pages_to_scrape):
        courses = []

        for page in range(1, pages_to_scrape + 1):
            url = f"{base_url}?page={page}"

            # Add delay to respect server resources
            time.sleep(2)

            response = requests.get(url, headers=HEADERS, timeout=10)
            # Raise on HTTP errors instead of parsing an error page
            response.raise_for_status()
            soup = BeautifulSoup(response.content, 'html.parser')

            # Extract course containers
            for course in soup.find_all('div', class_='course-card'):
                courses.append({
                    'title': get_text(course, 'h3', 'course-title'),
                    'instructor': get_text(course, 'span', 'instructor-name'),
                    'duration': get_text(course, 'span', 'duration'),
                    'level': get_text(course, 'span', 'difficulty-level'),
                    'enrollment_count': get_text(course, 'span', 'enrollment'),
                    'rating': get_text(course, 'span', 'rating-score'),
                })

        return courses


    # Save extracted data to CSV for further analysis
    def save_to_csv(courses, filename):
        fieldnames = ['title', 'instructor', 'duration', 'level', 'enrollment_count', 'rating']
        with open(filename, 'w', newline='', encoding='utf-8') as csvfile:
            writer = csv.DictWriter(csvfile, fieldnames=fieldnames)
            writer.writeheader()
            writer.writerows(courses)

This script highlights key practices in web scraping, such as adding delays to avoid overwhelming servers and using headers to simulate human browsing. It also organizes the collected data into a structured format, making it easier to analyze and integrate into educational systems.

Best Practices for Resource Collection

For effective and ethical web scraping in education, it’s important to follow these best practices:

- Rate limiting: Add 2–3 second delays between requests to avoid overloading servers and maintain positive relationships with content providers.

- Pagination handling: Many educational websites spread content across multiple pages. Scrapers should navigate these pages or manage infinite scroll systems to ensure comprehensive data collection.

- Duplicate content detection: When aggregating resources from multiple sources, use techniques like content fingerprinting to identify and eliminate duplicates for a clean database.

- Metadata organization: Transform raw data into useful resources by extracting details like subject categories, difficulty levels, intended audience, licensing, and quality indicators. This helps enable advanced search and recommendation features.

- Error handling and monitoring: Educational websites frequently change their structures. Implement error detection, logging, and automated alerts to maintain consistent scraping results.

- Caching and refreshing: Cache previously scraped pages to reduce server load and improve efficiency. Schedule refresh cycles to keep data up to date.
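
The duplicate-detection practice in the list above can be sketched with a simple content fingerprint. This is a minimal example, assuming each resource is a dictionary with a `content` key. It hashes the text after normalizing case and whitespace, so it only catches copies that are identical after that normalization.

```python
import hashlib
import re


def fingerprint(text: str) -> str:
    """Hash text after lowercasing and collapsing runs of whitespace."""
    normalized = re.sub(r"\s+", " ", text).strip().lower()
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def deduplicate(resources):
    """Keep the first resource for each distinct content fingerprint."""
    seen = set()
    unique = []
    for resource in resources:
        fp = fingerprint(resource["content"])
        if fp not in seen:
            seen.add(fp)
            unique.append(resource)
    return unique
```

Near-duplicates, such as the same textbook with a different footer, would need a fuzzier technique like shingling or MinHash, which is outside the scope of this sketch.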

Institutions should tailor their scraping schedules to their needs. Rapidly changing data may require frequent updates, while static content can be collected less often. The choice between different scraping tools and approaches ultimately depends on the scale of the project, technical capabilities, and available budget.
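
A refresh schedule like this can be expressed as a per-category interval. The categories and intervals below are assumptions chosen for illustration; real values depend on how often each source actually changes.

```python
from datetime import datetime, timedelta

# Hypothetical refresh intervals per content category.
REFRESH_INTERVALS = {
    "course_listings": timedelta(days=1),
    "research_papers": timedelta(days=7),
    "textbooks": timedelta(days=30),
}


def is_due(category: str, last_scraped: datetime, now: datetime) -> bool:
    """Return True when the category's refresh interval has elapsed."""
    return now - last_scraped >= REFRESH_INTERVALS[category]
```

Passing `now` in as a parameter, rather than calling `datetime.now()` inside the function, keeps the check easy to test.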

Legal and Ethical Guidelines for Educational Web Scraping

Web scraping for educational purposes demands a careful balance between adhering to legal requirements and maintaining ethical standards. In the United States, this process is particularly complex due to multiple federal laws that regulate educational data collection and protect student privacy. Educational institutions must navigate these regulations while ensuring their data practices safeguard both their organizations and the students they serve.

US Education Law Compliance

Educational platforms operating in the U.S. must align their scraping activities with key federal laws. Among these, the Family Educational Rights and Privacy Act (FERPA) is crucial. FERPA governs how institutions handle student records and personally identifiable information, meaning platforms must avoid collecting data protected under this law when scraping educational content.

For platforms serving children under 13, the Children's Online Privacy Protection Act (COPPA) adds another layer of responsibility. COPPA requires parental consent before collecting personal information from children, making it essential for platforms to implement robust filters to prevent the accidental capture of such data.

The Technology, Education and Copyright Harmonization (TEACH) Act also plays a role in regulating the use of copyrighted materials in digital learning environments. While the act provides some leeway for educational use, platforms must still respect copyright boundaries when aggregating content.

Many educators are unaware of these legal requirements, which highlights the importance of institutional policies that guide ethical and compliant data collection. These regulations not only protect privacy but also ensure that web scraping practices remain secure and responsible.

Following Website Policies and Data Rights

Beyond legal compliance, ethical web scraping requires strict adherence to website policies. The robots.txt file is a key tool for communicating a website's scraping permissions. Educational platforms must respect these directives, even if the content they seek appears publicly accessible.
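
Python's standard library includes `urllib.robotparser` for checking these directives. The sketch below parses a sample robots.txt from a string so that it runs offline. The rules, the `EduCatalogBot` user agent, and the example.edu URLs are made up for illustration.

```python
from urllib.robotparser import RobotFileParser

# Sample rules for illustration; a real crawler would fetch the site's own file.
SAMPLE_ROBOTS_TXT = """\
User-agent: *
Disallow: /forums/
Allow: /courses/
"""


def build_parser(robots_txt: str) -> RobotFileParser:
    """Build a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


parser = build_parser(SAMPLE_ROBOTS_TXT)
courses_allowed = parser.can_fetch("EduCatalogBot", "https://example.edu/courses/intro")
forums_allowed = parser.can_fetch("EduCatalogBot", "https://example.edu/forums/thread-1")
print(courses_allowed, forums_allowed)  # prints: True False
```

Against a live site, `set_url()` followed by `read()` on the same class downloads and parses the file instead of taking it from a string.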

Terms of service agreements often include clauses about automated data collection. While these agreements may not always carry the same legal weight as federal laws, violating them can lead to service disruptions, access bans, or even legal action, all of which can hinder educational operations.

Scrapers must also distinguish between public and restricted content. While general information like course catalogs is usually accessible, content such as student-generated posts, discussion forums, and assessment materials often requires stricter access controls. Platforms should use filtering systems to ensure they only scrape appropriate data. Additionally, introducing delays of 2–3 seconds between requests can reduce server strain and help maintain good relationships with content providers.
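
The delay between requests can be enforced in one place rather than scattered through the scraper. The `RateLimiter` class below is a hypothetical helper, not part of any library. The clock and sleep functions are injectable so the behavior can be checked without actually waiting.

```python
import time


class RateLimiter:
    """Ensure at least min_interval seconds pass between consecutive calls to wait()."""

    def __init__(self, min_interval=2.0, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self):
        now = self._clock()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last = now
```

Calling `limiter.wait()` immediately before each HTTP request only sleeps for the time still owed, so slow pages do not add unnecessary extra delay.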

Protecting Student Privacy and Data Security

The protection of student privacy is a fundamental responsibility for educational platforms. Even when scraping publicly accessible websites, platforms must filter out personally identifiable information to avoid privacy violations.
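
A first line of defense is to redact obvious identifiers before scraped text is stored. The sketch below uses two deliberately simple regular expressions for email addresses and US-style phone numbers. The patterns and placeholder strings are assumptions for this example and will miss many forms of personal data, such as names and student IDs.

```python
import re

# Simplified patterns for illustration; not exhaustive.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\b\d{3}[-.\s]\d{3}[-.\s]\d{4}\b")


def redact_pii(text: str) -> str:
    """Replace email addresses and phone numbers with placeholders."""
    text = EMAIL_RE.sub("[email removed]", text)
    return PHONE_RE.sub("[phone removed]", text)
```

Pattern-based redaction like this complements, rather than replaces, the decision not to scrape pages that are likely to contain student data in the first place.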

To safeguard data, platforms should implement measures like anonymization, encryption (both in transit and at rest), and role-based access controls. These steps are especially important when dealing with data from forums or collaborative platforms where students might inadvertently share identifying details. Adding multi-factor authentication provides an extra layer of security, particularly given the widespread use of personal devices by educators for storing and accessing student data.
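
Where records must remain linkable without exposing who they belong to, one common approach is to replace identifiers with a keyed hash. The `pseudonymize` function below is an illustrative sketch using the standard `hmac` and `hashlib` modules. Strictly speaking this is pseudonymization rather than full anonymization, because anyone holding the key can recompute the mapping.

```python
import hashlib
import hmac


def pseudonymize(identifier: str, secret_key: bytes) -> str:
    """Return a stable keyed hash of an identifier (HMAC-SHA256, hex encoded)."""
    return hmac.new(secret_key, identifier.encode("utf-8"), hashlib.sha256).hexdigest()
```

The secret key should be stored separately from the data and covered by the same access controls described above.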

Monitoring and security systems are critical for ensuring data remains protected. Tools like intrusion detection systems (IDS) can flag unusual activity, while robust logging practices create audit trails to support compliance. Institutions should also establish clear incident response plans to address potential breaches, including steps for preparation, identification, containment, and recovery.

Regular security audits of scraping tools and practices can identify vulnerabilities before they become problems. Additionally, clear data retention policies - defining how long scraped content is stored and when it is securely deleted - help meet privacy regulations, reduce risks, and maintain responsible data management practices in educational web scraping efforts.
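
A retention policy can be enforced with a periodic purge. The sketch below assumes each stored record carries a `scraped_at` timestamp and uses a 365-day retention window purely as an example value.

```python
from datetime import datetime, timedelta, timezone


def purge_expired(records, now, retention=timedelta(days=365)):
    """Return only the records scraped within the retention window."""
    cutoff = now - retention
    return [r for r in records if r["scraped_at"] >= cutoff]
```

In a real system the same cutoff would drive a secure delete in the database. The in-memory filter here only shows the date logic.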

Future Trends: AI and API Integration in Educational Data Collection

AI and API-based systems are reshaping how educational resources are gathered and utilized. These tools are no longer just about automating tasks - they’re creating intelligent systems that learn, adapt, and deliver personalized educational content on a large scale. This shift is paving the way for smarter data classification and tailored resource delivery.

The growth of the integration platform as a service (iPaaS) industry highlights this transformation. Forecasts suggest it will grow from $12.87 billion in 2024 to $78.28 billion by 2032, with a compound annual growth rate of 25.3% [1]. This rapid expansion reflects a fundamental change in how educational platforms manage data collection and integration.
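
The quoted growth rate can be checked from the two endpoint figures. The short calculation below applies the standard compound annual growth rate formula to the 2024 and 2032 values over the eight years between them.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate as a fraction (0.25 means 25%)."""
    return (end_value / start_value) ** (1 / years) - 1


rate = cagr(12.87, 78.28, 2032 - 2024)
print(f"{rate:.1%}")  # prints 25.3%
```

The result matches the stated 25.3%, so the three figures in the forecast are consistent with one another.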

AI for Data Classification and Personalization

AI is taking web scraping to the next level, offering capabilities that traditional methods can’t match. Unlike older techniques, AI-enhanced web scraping can recognize changes in website structures and adjust automatically, making it a powerful tool for educational platforms that rely on dynamic and evolving online resources.

These advanced tools also handle multimedia content with impressive precision. AI can extract text from images, recognize objects, and even analyze video content to classify educational materials. This means platforms can now gather and organize a broader range of resources, from infographics to instructional videos, with greater accuracy.

What sets AI apart is its ability to provide insights during the scraping process. While traditional methods simply collect data, AI systems can analyze it in real time - performing tasks like sentiment analysis, trend detection, and content categorization. Educational platforms can use this to automatically sort resources by difficulty level, subject, or learning objectives, eliminating the need for manual sorting.

Here’s how AI-enhanced scraping stacks up against traditional methods:

| Feature | Traditional Scraping | AI-Enhanced Scraping |
|---|---|---|
| Data Handling | Limited to structured data | Processes unstructured and semi-structured data (e.g., PDFs, images) |
| Adaptability | Struggles with dynamic content | Adjusts to changing website structures |
| Insights | Basic data extraction | Provides sentiment and trend analysis, and content categorization |
| Anti-Scraping Bypass | Limited capabilities | Overcomes advanced anti-scraping measures |
| Cost | Lower | Moderate to higher |

For platforms dealing with dynamic content or large-scale data, AI scraping is a game-changer. However, for static websites with consistent structures, traditional scraping remains a cost-effective option.

API-Based Systems for Platform Integration

While AI enhances adaptability, APIs bring precision and security to data collection. APIs are becoming the backbone of educational platforms, offering structured and reliable access to data that traditional scraping often struggles to achieve. In fact, over 83% of enterprises now use API integrations to maximize the return on their digital assets [2]. Educational platforms are following suit, leveraging APIs to build more secure and efficient systems.

APIs simplify data collection by delivering structured information through predefined endpoints, removing the need for complex parsing. They also come with built-in security features, ensuring that only authorized platforms can access sensitive academic resources. This approach not only streamlines operations but also enhances the learning experience for students and educators.
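
To show what "no complex parsing" means in practice, the sketch below reads course data from a JSON payload of the kind an API might return. The payload shape, field names, and `parse_courses` function are hypothetical and do not describe any particular provider's API.

```python
import json

# Hypothetical API response, embedded as a string so the example runs offline.
SAMPLE_RESPONSE = """
{
  "courses": [
    {"id": 101, "title": "Intro to Statistics", "level": "beginner"},
    {"id": 102, "title": "Linear Algebra"}
  ],
  "next_page": null
}
"""


def parse_courses(payload: str):
    """Extract course records from a JSON payload, filling in a default level."""
    data = json.loads(payload)
    return [
        {
            "id": course["id"],
            "title": course["title"],
            "level": course.get("level", "unknown"),
        }
        for course in data.get("courses", [])
    ]
```

Compared with the HTML example earlier in the article, there are no CSS selectors to maintain: the fields arrive already named and typed.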

The move toward an API-first development model is becoming the norm in educational technology. This strategy focuses on integration from the start, allowing platforms to evolve alongside emerging technologies and easily connect with future solutions. With real-time data integration, educational platforms can update curricula and assessments immediately, ensuring access to the latest resources.

Services like InstantAPI.ai are making this process even more accessible. For instance, they offer structured data extraction from web pages at $2 per 1,000 pages scraped. This pay-as-you-go model allows institutions of all sizes to access advanced scraping tools without hefty upfront costs.
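
For budgeting, the per-page rate translates into a simple estimate. The function below assumes billing is strictly proportional to the number of pages, with no rounding to whole blocks of 1,000. That is an assumption for this sketch, not a statement about the provider's actual billing rules.

```python
PRICE_PER_1000_PAGES = 2.00  # USD, the rate quoted above


def estimate_cost(pages: int) -> float:
    """Estimated cost in USD, assuming strictly proportional billing."""
    return pages / 1000 * PRICE_PER_1000_PAGES
```

Under that assumption, a crawl of 25,000 pages would cost about $50.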

"AI and ML will play a crucial role in enhancing integration solutions by providing advanced analytics and insights. By analyzing data from various sources, these technologies can identify trends, predict customer behavior, and suggest actionable strategies to improve business outcomes." - Clint, Marketing Entrepreneur, Cazoomi

The combination of AI and API integration is transforming how educational platforms operate. With 82% of companies already utilizing or exploring AI [3], the education sector is increasingly adopting these technologies to create smarter, more responsive learning environments. However, as these tools advance, it’s essential to prioritize strong security measures and adhere to data privacy regulations. Together, AI and APIs offer exciting possibilities for resource collection, but they also demand careful management to ensure ethical and legal compliance in educational data practices.

Conclusion: Web Scraping's Impact on Educational Resource Access

Web scraping has reshaped how educational institutions gather and manage learning materials. It's no longer just about collecting data - it’s now a powerful tool for shaping curricula, improving student success, and offering tailored learning experiences.

Take this example: In 2024, a top university used web scraping to track emerging technologies and industry trends. This allowed them to revamp their computer science program to include sought-after programming languages and methodologies. The result? Graduates left with career-ready skills, boosting both their employability and the university's enrollment numbers. Similarly, an online learning platform analyzed student interaction data through web scraping, enabling them to create personalized learning paths. This approach led to higher engagement and better academic results.

The long-term advantages extend well beyond simply gathering resources. Educational institutions can now track industry trends in real time, ensuring their programs align with job market needs. This creates a continuous cycle where schools stay relevant, and students leave equipped with skills that employers value. By pulling together resources from multiple sources, web scraping has also made it easier to create centralized platforms that offer comprehensive and accessible learning tools.

Of course, ethical considerations are key to making this all work. As Sandro Shubladze, CEO and Founder of Datamam, puts it:

"Commitment to ethical data collection fosters trust and reliability and positions businesses as leaders in responsible innovation. By embracing these principles, organizations can harness the power of data without compromising their reputation or legal standing."

For educational institutions, this means adhering to best practices like respecting robots.txt files, following Terms of Service, setting appropriate crawl rates, and steering clear of personal data collection. These steps not only build trust but also ensure that web scraping remains a sustainable and effective tool.

Looking ahead, integrating AI and APIs into web scraping will make resource aggregation smarter and more secure. As the education sector increasingly relies on data to drive better outcomes and streamline operations, institutions that adopt these technologies responsibly will be in the best position to serve their students and communities.

FAQs

What legal and ethical factors should educational platforms consider when using web scraping to gather resources?

Educational platforms venturing into web scraping must tread carefully, balancing both legal and ethical obligations.

From a legal standpoint, platforms must respect the terms of service outlined by the websites they scrape. Violating these terms or infringing on copyright and intellectual property laws can lead to serious legal repercussions. For instance, extracting protected content without the necessary permissions is not just risky; it can amount to copyright infringement.

On the ethical side, there are several best practices that platforms should uphold. These include honoring robots.txt files, which signal a website’s preferences regarding automated access, and ensuring their scraping activities don’t overload or disrupt the source site’s servers. Transparency plays a key role here as well. Platforms should clearly communicate their data collection methods and strictly follow privacy regulations like GDPR or CCPA, particularly when dealing with personal information.

By addressing these legal and ethical concerns head-on, educational platforms can responsibly leverage web scraping to improve access to valuable learning resources while maintaining trust and integrity.

How do AI-powered web scraping tools make it easier for educational platforms to gather and organize learning resources?

AI-driven web scraping tools are changing the game for educational platforms by streamlining the way they gather and organize learning materials. These tools can automatically pull data from various sources - like e-books, research articles, and online courses - in real time. The result? A treasure trove of resources that are always current and relevant.

What makes these tools stand out is their ability to navigate ever-changing website structures and handle dynamic content seamlessly. This not only saves time but also reduces manual effort. On top of that, they analyze data patterns during the scraping process, uncovering trends and insights about resource availability. For educators and institutions, this means making smarter decisions and ensuring students have access to the most up-to-date learning materials.

What challenges do educational platforms face when using web scraping, and how can they address them?

Educational platforms face a range of challenges when it comes to web scraping, including legal risks, privacy concerns, and technical hurdles. Legal risks often stem from violating a website's terms of service, which could result in lawsuits or being blocked from accessing the site. Privacy concerns arise when scraping personal data, potentially clashing with laws like GDPR or FERPA. On the technical side, issues like overloading servers or pulling inaccurate data can disrupt operations and reduce effectiveness.

To navigate these obstacles, platforms should focus on responsible and compliant scraping practices. This means creating clear policies, controlling request rates to prevent server overload, and anonymizing data to safeguard privacy. Regularly reviewing scraping activities and respecting access controls such as CAPTCHAs, rather than trying to circumvent them, can further promote ethical and efficient use of web scraping technologies.