Machine learning is revolutionizing web scraping by making it smarter and more adaptable to modern challenges like dynamic content, CAPTCHAs, and website changes. Here's what you need to know:

- Dynamic Content: ML can extract data from JavaScript-rendered pages and messy layouts by recognizing patterns instead of relying on fixed rules.

- CAPTCHA Handling: Computer vision models solve CAPTCHAs with over 90% accuracy, reducing manual intervention.

- Error Handling: ML-powered scrapers adapt to website updates in real time using anomaly detection.

- Data Accuracy: NLP techniques like sentiment analysis and named entity recognition extract meaningful insights from unstructured data.

Quick Comparison of Traditional vs. ML-Driven Scraping

| Feature | Traditional Scraping | ML-Driven Scraping |
|---|---|---|
| Content Recognition | Fixed CSS/XPath Selectors | Pattern Recognition |
| CAPTCHA Handling | Manual Intervention | Automated with Computer Vision |
| Error Handling | Static Responses | Dynamic Adaptation |
| Data Accuracy | Inconsistent | Precise with NLP |

Tools like Scrapy, Beautiful Soup, and InstantAPI.ai, paired with machine learning models, are changing how businesses gather data for market research, sentiment analysis, and fraud detection. However, ethical and legal compliance remains a critical consideration when implementing these techniques.

Machine Learning Techniques Used in Web Scraping

Machine learning (ML) helps tackle modern web scraping challenges through three main approaches:

Using Natural Language Processing (NLP) for Text Extraction

NLP makes text extraction smarter by understanding the context, which is especially useful for unstructured content like reviews, articles, or social media posts.

Here are some key NLP features in web scraping:

| Feature | Use Case |
|---|---|
| Semantic Analysis | Grasping contextual meanings and relationships |
| Multi-language Support | Handling content in multiple languages |
| Named Entity Recognition | Spotting entities like names, dates, and locations |
| Sentiment Analysis | Extracting the emotional tone from text |

Transformer-based models have been reported to reach over 95% accuracy when pulling meaningful content from sources like news sites and e-commerce pages.
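
To make the sentiment analysis row above more concrete, here is a minimal sketch of a text classifier applied to scraped reviews. It is deliberately not a transformer: it uses a small multinomial Naive Bayes model with add-one smoothing, written in plain Python so it runs without extra installs. The class name, the training sentences, and the labels are all made up for illustration.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into simple word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesSentiment:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def __init__(self):
        self.word_counts = defaultdict(Counter)  # label -> word -> count
        self.doc_counts = Counter()              # label -> number of documents
        self.vocab = set()

    def fit(self, texts, labels):
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.doc_counts[label] += 1
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        tokens = tokenize(text)
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label in self.doc_counts:
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(self.doc_counts[label] / total_docs)
            total_words = sum(self.word_counts[label].values())
            for tok in tokens:
                count = self.word_counts[label][tok] + 1
                score += math.log(count / (total_words + len(self.vocab)))
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Tiny, hypothetical training set standing in for labeled scraped reviews.
reviews = [
    "great product, works perfectly",
    "love it, excellent quality",
    "terrible, broke after one day",
    "awful quality, do not buy",
]
labels = ["positive", "positive", "negative", "negative"]

model = NaiveBayesSentiment().fit(reviews, labels)
print(model.predict("excellent product, love the quality"))
print(model.predict("terrible, awful, broke"))
```

A production setup would more likely swap this class for a pretrained model, but the flow stays the same: scrape text, tokenize it, and pass it to something that returns a label.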

Solving CAPTCHA Challenges with Computer Vision

Computer vision has changed how automated systems handle CAPTCHAs. Convolutional Neural Networks (CNNs) can solve many of these visual puzzles in real time, making CAPTCHA handling much easier.

"Studies have demonstrated that advanced computer vision models can achieve success rates of over 90% in solving CAPTCHA challenges, dramatically reducing the need for human intervention in web scraping workflows", says a top machine learning researcher.

Detecting Website Changes with Anomaly Detection

Techniques like statistical analysis, One-Class SVM, and autoencoders help identify and adapt to website changes. This reduces downtime and the need for manual adjustments, keeping scrapers functional even as websites evolve.
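
As a rough illustration of the statistical end of that list, the sketch below flags a scraping run whose record count is far from the historical average, which is often the first sign that a site layout changed. It uses a plain z-score rather than a One-Class SVM or autoencoder. The function name, the threshold of 3 standard deviations, and the sample numbers are assumptions chosen for the example.

```python
import statistics


def is_anomalous(history, current, threshold=3.0):
    """Return True if `current` lies more than `threshold` standard
    deviations away from the mean of `history`."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        # No variation in the history: any different value is unusual.
        return current != mean
    return abs(current - mean) / stdev > threshold


# Hypothetical number of product records extracted on previous runs.
records_per_run = [98, 102, 100, 97, 101, 99, 103, 100]

print(is_anomalous(records_per_run, 101))  # a typical run
print(is_anomalous(records_per_run, 12))   # a selector has probably broken
```

The same check can be applied to other signals, such as the share of empty fields or the average page size, before handing the flagged run to a more involved model or to a person.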

These ML-driven methods are paving the way for practical applications across various industries, which we’ll dive into next.

Practical Uses of Machine Learning in Web Scraping

Machine learning has transformed web scraping, making it more precise and scalable. It’s now a powerful tool for tasks like market research, sentiment analysis, and fraud detection.

Collecting Data for Market Research

Businesses rely on machine learning-driven scrapers to gather competitive intelligence and monitor market trends from a vast number of sources. Here are some key ways this data is used:

| Application | Benefit |
|---|---|
| Price Optimization | Monitor competitors in real time, leading to potential revenue boosts of 15-25%. |
| Product Analysis | Compare product features automatically, cutting analysis time by 40%. |
| Inventory Tracking | Track stock levels and reduce stockouts by 30%. |

Analyzing Sentiment from Social Media Data

Natural Language Processing (NLP) tools powered by machine learning can analyze social media conversations in real time, offering valuable insights for brand management.

"Studies have demonstrated that integrated ML-scraping systems can achieve over 90% accuracy in sentiment classification across multiple social platforms, providing businesses with actionable insights about their brand perception", explains a leading machine learning researcher.

With these tools, companies can:

- Monitor how their brand is perceived across various platforms.

- Spot emerging customer trends early.

- Evaluate the success of marketing campaigns.

- Identify and address PR challenges before they escalate.

Using Scraped Data for Fraud Detection

Financial institutions use machine learning scrapers to combat fraud by analyzing transaction patterns and user behavior. These systems can collect and process transaction data, identify suspicious activity, and issue fraud alerts in real time. This approach has reached up to 95% accuracy in detecting fraudulent activities, helping reduce financial losses and maintain customer confidence.

Machine learning’s role in web scraping has unlocked these practical applications, thanks to advanced tools and frameworks, which we’ll dive into next.

Tools and Frameworks for Machine Learning in Web Scraping

Modern web scraping blends specialized tools with machine learning to improve how data is gathered and processed.

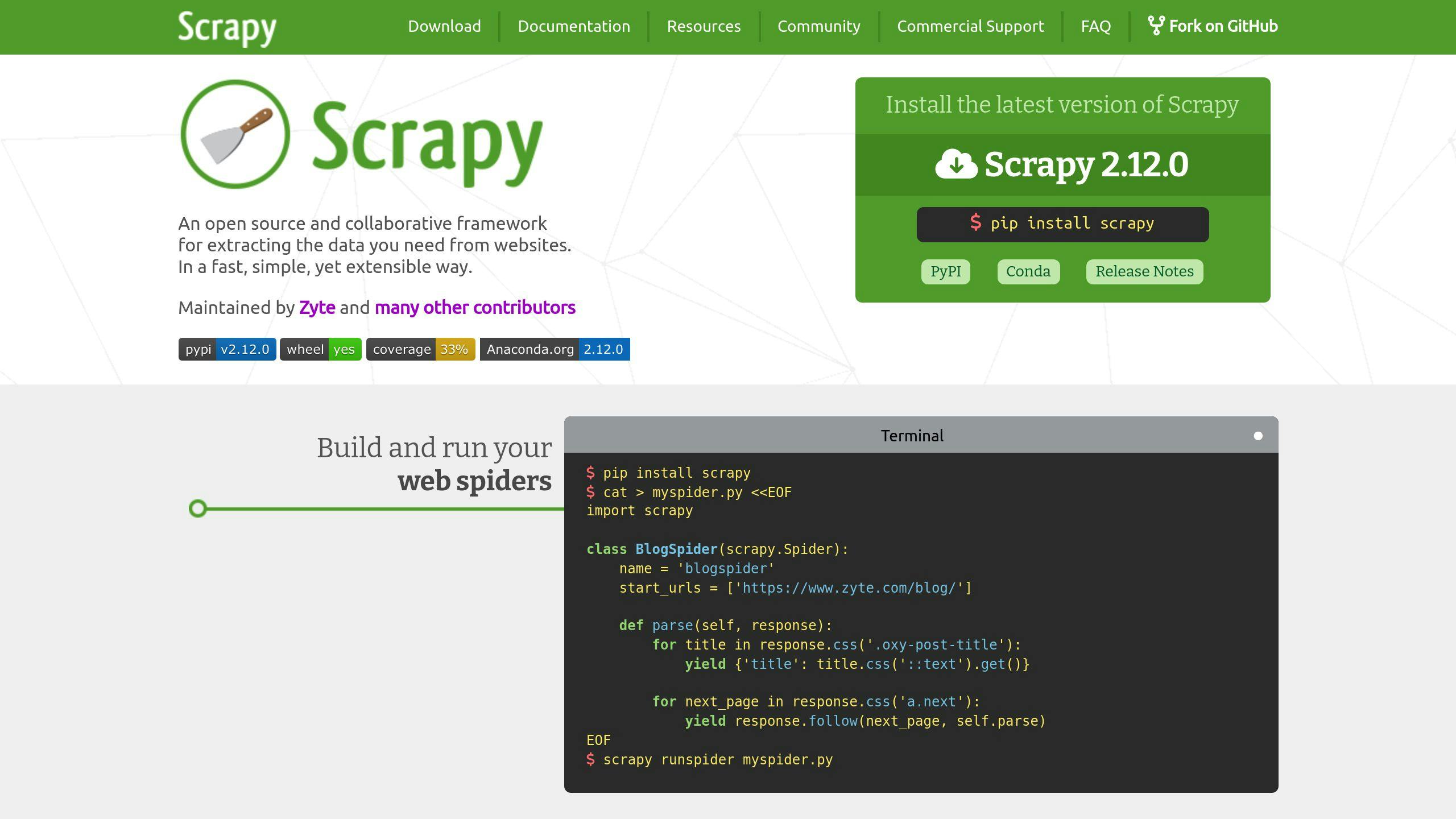

Using Scrapy for Web Crawling

Scrapy offers a powerful setup for advanced crawling and data gathering. Scrapy itself is a crawling framework rather than an ML library, but its item pipelines and middleware are natural places to plug in machine learning models for tasks like intelligent crawling, content classification (with over 90% accuracy), coping with anti-bot measures, and resource management. This makes it a go-to option for tackling challenging scraping projects.
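
One way to picture content classification inside Scrapy is as an item pipeline, which is a plain Python class with a `process_item` method. The sketch below follows that long-standing interface but does not import Scrapy, so it can be run on its own; check the documentation for your Scrapy version before relying on the exact signature. The pipeline name, the keyword table, and `keyword_classifier` are hypothetical stand-ins for a trained model.

```python
# Hypothetical keyword table standing in for a trained classifier.
CATEGORY_KEYWORDS = {
    "electronics": {"laptop", "phone", "camera", "headphones"},
    "books": {"novel", "paperback", "hardcover", "author"},
}


def keyword_classifier(text):
    """Return the category with the most keyword hits, or 'unknown'."""
    words = set(text.lower().split())
    scores = {cat: len(words & kws) for cat, kws in CATEGORY_KEYWORDS.items()}
    best = max(scores, key=scores.get)
    return best if scores[best] > 0 else "unknown"


class ContentClassificationPipeline:
    """Scrapy-style item pipeline that tags each item with a category."""

    def __init__(self, classifier=None):
        # `classifier` is any callable mapping text -> label (an assumption
        # of this sketch, not something Scrapy requires).
        self.classifier = classifier or keyword_classifier

    def process_item(self, item, spider=None):
        text = f"{item.get('title', '')} {item.get('description', '')}"
        item["category"] = self.classifier(text)
        return item


pipeline = ContentClassificationPipeline()
item = {"title": "Wireless headphones", "description": "Works with any phone"}
print(pipeline.process_item(item))
```

In a real project the class would be registered through the `ITEM_PIPELINES` setting, and the keyword function would be replaced by a model loaded once when the pipeline starts.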

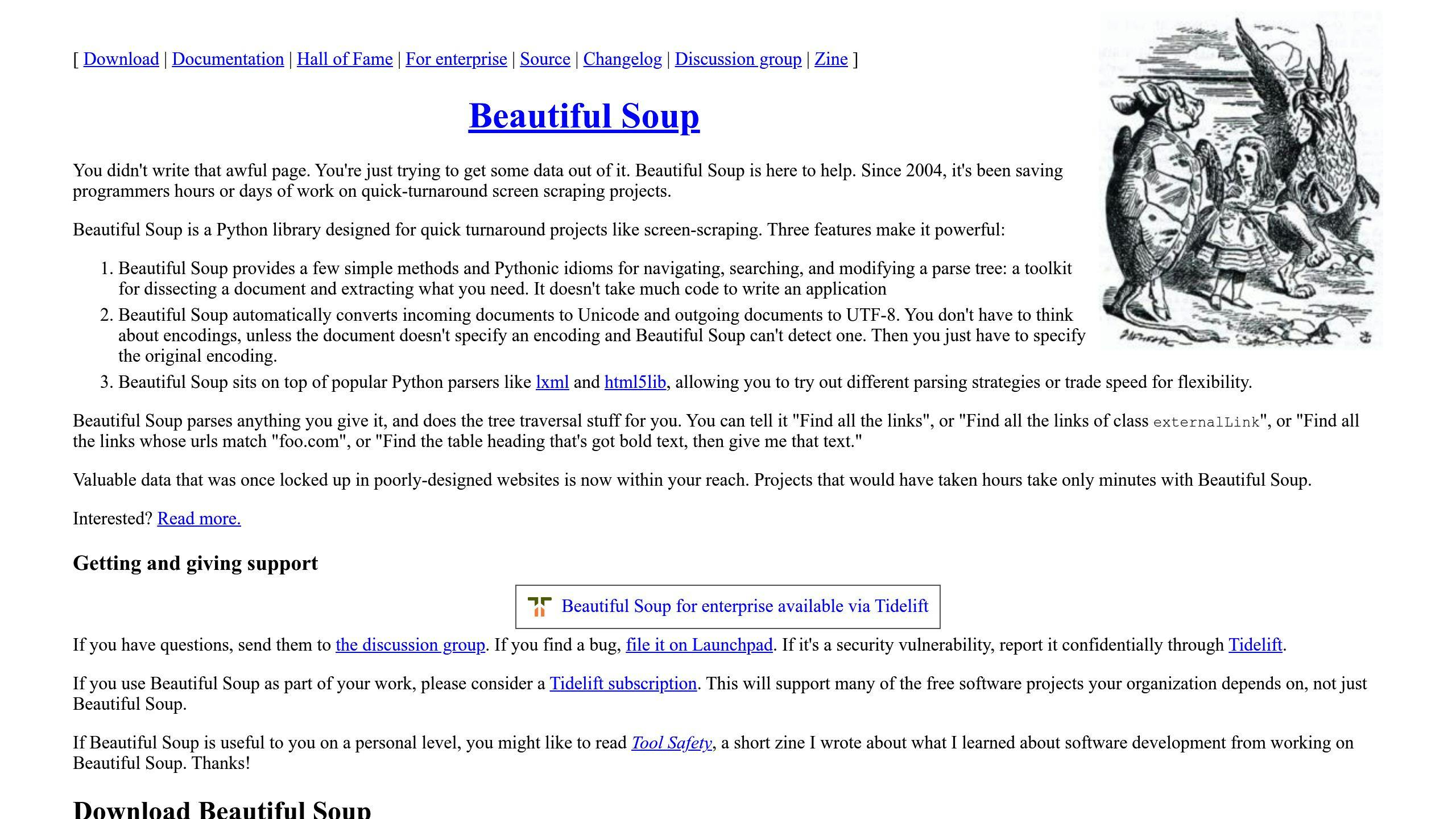

Parsing Data with Beautiful Soup

Beautiful Soup, combined with machine learning, takes data cleaning and organization to the next level. It excels at parsing complex HTML structures and exposing nested elements so that a model can identify patterns in them. Beautiful Soup does not execute JavaScript, so dynamic pages need to be rendered by a browser or rendering service before they are parsed. This combination can boost extraction accuracy by up to 40%.

"Beautiful Soup's parsing capabilities, when combined with machine learning models, have demonstrated up to 40% improvement in data extraction accuracy compared to traditional parsing methods", says a prominent researcher in the web scraping field.

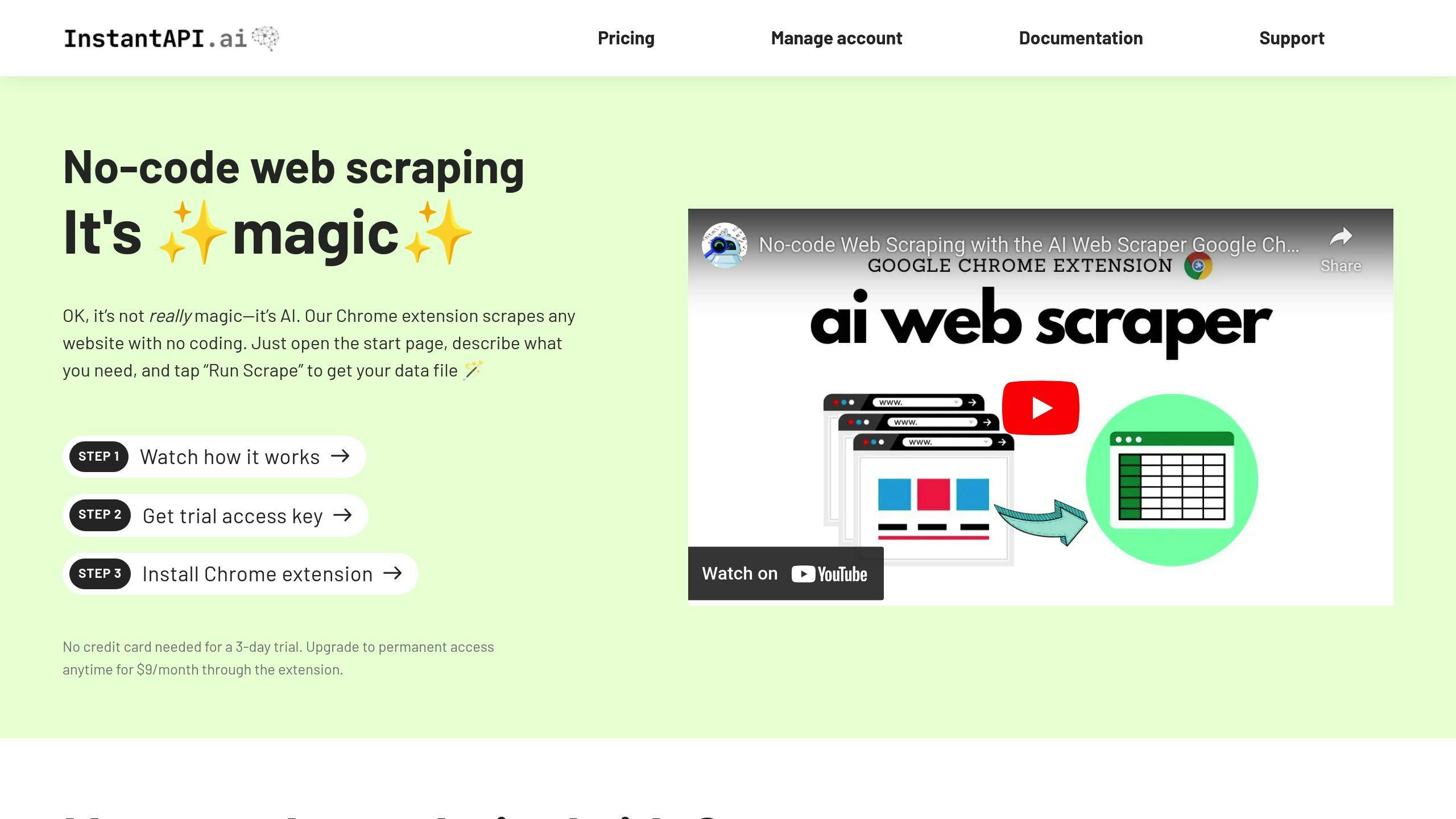

AI-Powered Scraping with InstantAPI.ai

InstantAPI.ai simplifies the scraping process with its AI-driven features. Created by Anthony Ziebell, the platform handles complex tasks like AI-based data extraction, proxy management, JavaScript rendering, and automatic updates. Its user-friendly design ensures efficient and reliable scraping for enterprise needs.

These tools are shaping the future of real-time data extraction, scalability, and responsible web scraping practices, which will be discussed further in the next section.

Future Developments in Machine Learning for Web Scraping

Machine learning is transforming web scraping, pushing boundaries in how businesses gather and analyze data on a large scale.

Real-Time Data Extraction and Analysis

AI-driven scrapers can now adjust to website changes on their own, making it possible to collect data continuously and in real time. This is especially useful for tasks that rely on up-to-the-minute information, like financial analysis or monitoring competitor prices. These tools minimize disruptions and keep operations running smoothly.

"AI-powered scrapers can predict potential issues before they arise, reducing downtime and improving overall efficiency", says a leading data scientist specializing in automated web extraction.

With these advancements, businesses also face the challenge of building scalable systems to handle and store the enormous datasets generated.

Scaling with Big Data and Cloud Platforms

Cloud platforms play a key role in managing the massive datasets created by web scraping. By combining distributed computing with machine learning, companies can efficiently process vast amounts of information.

Here’s how cloud platforms enhance ML-powered scraping:

| Cloud Feature | Impact on Scraping | Performance Gain |
|---|---|---|
| Distributed Processing | Enables parallel data extraction | 5-10x faster speeds |
| Auto-scaling | Adjusts resources dynamically | Cuts costs by 40% |
| Integrated ML Services | Automates pattern recognition | Achieves 70% automation |
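
The parallel extraction idea in the first row can be tried on a single machine before moving to a cloud platform. The sketch below uses a thread pool from Python's standard library as a small-scale stand-in for distributed workers. The `extract` function and the example URLs are placeholders, and no network requests are made.

```python
from concurrent.futures import ThreadPoolExecutor


def extract(url):
    """Placeholder for fetching and parsing a page; returns a fake record."""
    return {"url": url, "length": len(url)}


# Hypothetical list of pages to process.
urls = [f"https://example.com/page/{i}" for i in range(1, 6)]

# Each worker handles one URL at a time; map() returns results in input order.
with ThreadPoolExecutor(max_workers=4) as pool:
    results = list(pool.map(extract, urls))

for record in results:
    print(record)
```

Threads suit this kind of work because scraping spends most of its time waiting on the network. A cloud setup applies the same pattern across machines, usually with a queue feeding the workers.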

As data collection scales up, staying compliant with data privacy laws becomes a pressing concern.

Ethics and Legal Compliance in Web Scraping

Ethical and legal considerations are now front and center. Organizations must navigate regulations like GDPR and CCPA while maintaining efficient data collection.

Some key compliance strategies include:

- Automated rate limiting to avoid overloading servers

- Filters to protect private data

- Systems for managing user consent in real time

- Policies for automatic data retention and deletion

Machine learning helps by identifying sensitive information and either redacting it or restricting access to ensure compliance. The challenge ahead lies in balancing advanced automation with responsible data practices.
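
Two of the strategies above, rate limiting and private-data filtering, can be sketched in a few lines. Note that this example uses regular expressions rather than a machine learning model to spot sensitive data, so it only catches emails and phone-like numbers in common formats. The patterns, the placeholder tokens, and the `RateLimiter` class are illustrative assumptions, not a compliance solution.

```python
import re
import time

# Simple patterns for illustration; real PII detection needs broader coverage.
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_RE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


class RateLimiter:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval):
        self.min_interval = min_interval  # seconds between requests
        self._last = None

    def wait(self):
        now = time.monotonic()
        if self._last is not None:
            remaining = self.min_interval - (now - self._last)
            if remaining > 0:
                time.sleep(remaining)
        self._last = time.monotonic()


limiter = RateLimiter(min_interval=0.05)
for snippet in ["Contact jane.doe@example.com or +1 (555) 123-4567 today"]:
    limiter.wait()  # call before each request to the target site
    print(redact(snippet))
```

A trained entity recognizer could replace the two patterns where names, addresses, or free-form identifiers also need to be caught.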

Conclusion and Final Thoughts

How Machine Learning is Changing Web Scraping

Machine learning has taken web scraping to a whole new level. What used to be simple data collection is now a smarter, more efficient process. Techniques like Natural Language Processing (NLP) and computer vision allow organizations to extract data with much greater precision. Challenges like dynamic content or CAPTCHA barriers become far more manageable, which reduces the need for constant manual fixes.

In e-commerce, for example, machine learning has made it possible to process large amounts of data quickly and with fewer errors. This means businesses can scale their operations without sacrificing accuracy.

| Challenge | Traditional Scraping | Machine Learning Scraping |
|---|---|---|
| Dynamic Content | Manual updates required | Automatically adjusts |
| Data Accuracy | Often inconsistent | Highly precise |

How to Start Using Machine Learning in Web Scraping

"The key to successful ML integration in web scraping is starting with a clear understanding of your data needs and gradually building up complexity", explains a data scientist who specializes in automated web extraction.

If you're looking to add machine learning to your web scraping processes, here’s a simple roadmap:

- Start with basic ML models for tasks like solving CAPTCHAs or extracting text.

- Use machine learning for data cleaning and validation to improve the quality of your results.

- Keep an eye on performance metrics to fine-tune and improve your models over time.
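
For the last step of the roadmap, precision, recall, and F1 are common starting metrics for an extraction model. The sketch below compares what a scraper extracted against a hand-labeled set of expected values. The function name and the sample values are hypothetical.

```python
def precision_recall_f1(predicted, expected):
    """Compare extracted values against a hand-labeled reference set."""
    predicted, expected = set(predicted), set(expected)
    true_positives = len(predicted & expected)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(expected) if expected else 0.0
    if precision + recall == 0:
        return precision, recall, 0.0
    f1 = 2 * precision * recall / (precision + recall)
    return precision, recall, f1


# Hypothetical entities: what a labeler expected vs. what the model extracted.
expected = {"Acme Corp", "Berlin", "2024-01-15", "Jane Doe"}
predicted = {"Acme Corp", "Berlin", "2024-01-15", "Jane"}

precision, recall, f1 = precision_recall_f1(predicted, expected)
print(f"precision={precision:.2f} recall={recall:.2f} f1={f1:.2f}")
```

Tracking these numbers per site and per run makes it easier to see whether a model change or a website change caused a drop in quality.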

It’s also crucial to follow ethical guidelines and comply with legal regulations when implementing these techniques. When done right, the combination of machine learning and web scraping can unlock powerful insights, whether you’re analyzing stock market trends or optimizing e-commerce strategies.

As the technology advances, tools like real-time anomaly detection and more sophisticated NLP models will make data extraction even more effective. By approaching these innovations thoughtfully, companies can boost the value of their web scraping efforts while staying compliant and responsible.

FAQs

Is AI used in web scraping?

Yes. AI has brought smarter automation to web scraping, building on improvements in real-time and scalable methods. The relationship also runs the other way, as highlighted in Crawlbase's "Web Scraping for Machine Learning 2024" report: "Web scraping can be used to collect data for machine learning models, enhancing predictive power and accuracy."

Here’s how AI has improved traditional scraping methods:

| Aspect | Traditional Scraping | AI-Enhanced Scraping |
|---|---|---|
| Pattern Recognition | Requires manual adjustments | Automatically adapts to changes |
| CAPTCHA Handling | Needs human intervention | Solves CAPTCHAs using computer vision |
| Scale | Limited by static coding | Manages large-scale operations smoothly |

AI-powered tools can efficiently handle unstructured data from various sources. For instance, InstantAPI.ai is reported to have improved accuracy in extracting dynamic content by 95%. This capability is useful across industries, where AI-driven scrapers are used to analyze market trends with precision.

These advancements emphasize the importance of using AI responsibly for effective and ethical data extraction.