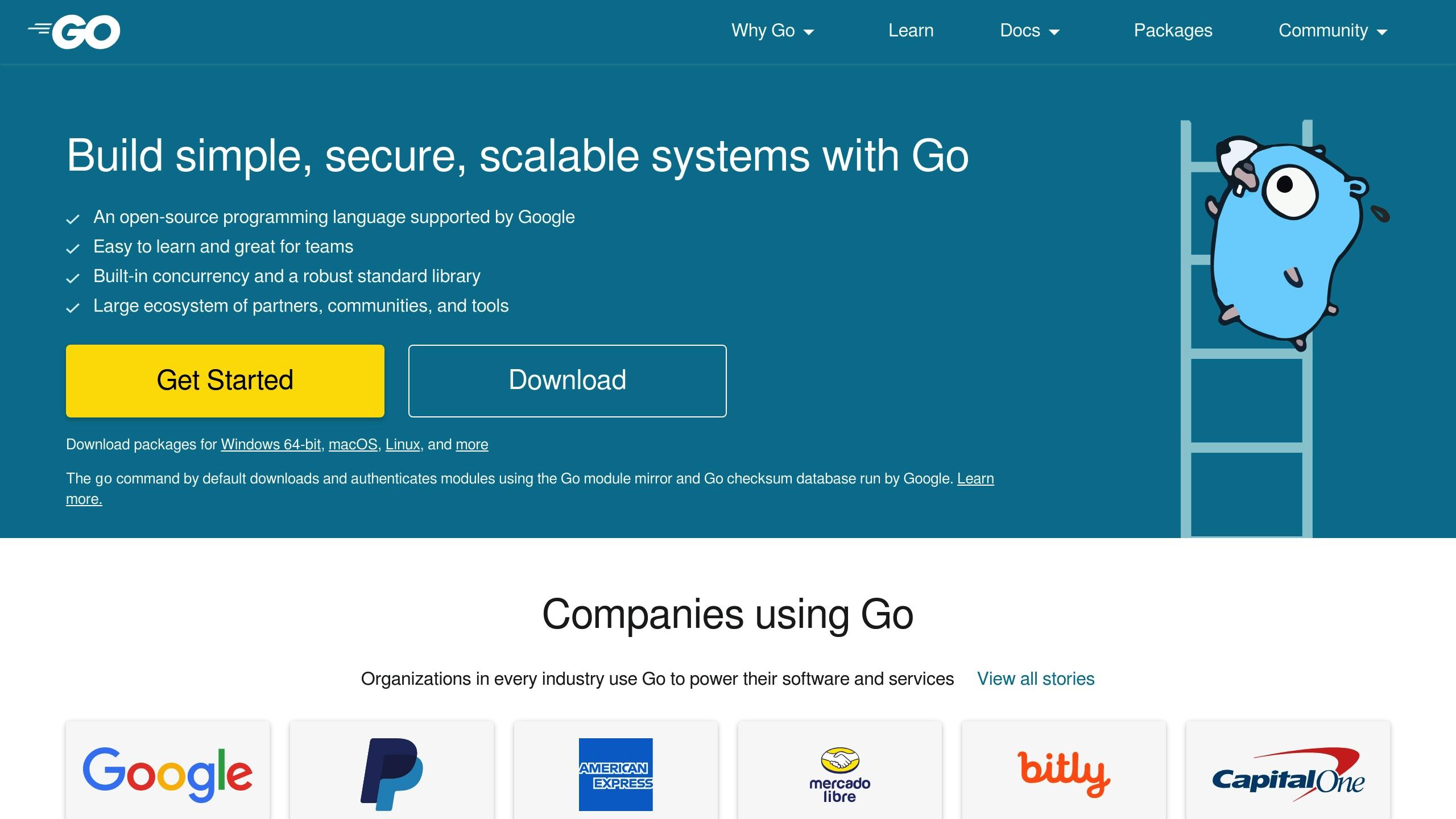

Want to extract data from websites efficiently? This guide shows you how to build a fast, resource-efficient web scraper using Go. Go is perfect for scraping because it’s fast, uses less memory, and supports concurrent tasks. Here’s what you’ll learn:

- Why Go is great for scraping: Speed, low memory use, and easy deployment.

- How to set up your scraper: Install Go, organize your project, and use the Colly library.

- Core scraping techniques: Extract data with CSS selectors, handle errors, and manage rate limits.

- Advanced features: Scrape multiple pages, handle dynamic content, and store data in JSON.

Quick Comparison: Go vs. Python for Web Scraping

| Feature | Go (Colly) | Python (Scrapy) |
|---|---|---|
| Speed | 45% faster | Slower |
| Memory Usage | Low | Higher |
| Concurrent Scraping | Built-in with goroutines | Requires setup |
| Deployment | Easy (single binary) | Requires dependencies |

Learn how to scrape websites efficiently, respect ethical guidelines, and store data in structured formats like JSON or CSV. Ready to start? Let’s dive in!


Go Setup Guide

Get started with Go by installing it to build your web scraper.

Go Installation Steps

Follow these steps based on your operating system:

| Operating System | Installation Steps | Verification |
|---|---|---|
| Windows 10+ | Download the installer from golang.org, run the executable, and choose the Program Files directory. | Open Command Prompt and run go version. |
| macOS | Download the .pkg installer and follow the installation wizard (PATH is auto-configured). | Open Terminal and run go version. |
| Linux (Ubuntu/Debian) | Run wget https://go.dev/dl/go1.x.x.linux-amd64.tar.gz, extract the archive into /usr/local (which creates /usr/local/go), and add /usr/local/go/bin to your PATH. | Open Terminal and run go version. |

Once installed, restart your terminal to ensure environment variables are loaded correctly. You're now ready to set up your project structure.

Project Structure Setup

A well-organized project structure makes it easier to manage and scale your scraper. Here's an example layout:

    webscraper/
    ├── cmd/
    │   └── scraper/
    │       └── main.go
    ├── internal/
    │   ├── collector/
    │   └── parser/
    ├── configs/
    │   └── settings.json
    └── go.mod

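To create the directories and initialize the module, run the following commands:

    mkdir -p webscraper/cmd/scraper webscraper/internal/collector webscraper/internal/parser webscraper/configs
    cd webscraper
    go mod init webscraper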
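
The layout above includes configs/settings.json, but the guide does not say what goes in it. As a minimal sketch, assuming the file holds a start URL, a list of allowed domains, and a delay in seconds (all three field names are illustrative, not part of the original project), it could be loaded like this:

```go
package main

import (
	"encoding/json"
	"fmt"
	"os"
)

// Settings mirrors a hypothetical configs/settings.json. The field names
// are assumptions made for this example.
type Settings struct {
	StartURL       string   `json:"start_url"`
	AllowedDomains []string `json:"allowed_domains"`
	DelaySeconds   int      `json:"delay_seconds"`
}

// parseSettings decodes raw JSON and applies a default delay of 2 seconds
// when the key is absent.
func parseSettings(data []byte) (Settings, error) {
	s := Settings{DelaySeconds: 2}
	if err := json.Unmarshal(data, &s); err != nil {
		return Settings{}, fmt.Errorf("parse settings: %w", err)
	}
	if s.StartURL == "" {
		return Settings{}, fmt.Errorf("parse settings: start_url is required")
	}
	return s, nil
}

// loadSettings reads the file at path and parses it.
func loadSettings(path string) (Settings, error) {
	data, err := os.ReadFile(path)
	if err != nil {
		return Settings{}, fmt.Errorf("read settings: %w", err)
	}
	return parseSettings(data)
}

func main() {
	s, err := loadSettings("configs/settings.json")
	if err != nil {
		fmt.Println("could not load settings:", err)
		return
	}
	fmt.Printf("start at %s with a %ds delay\n", s.StartURL, s.DelaySeconds)
}
```

Keeping the parsing step separate from the file read makes the defaults and the required-field check easy to test without touching the disk.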

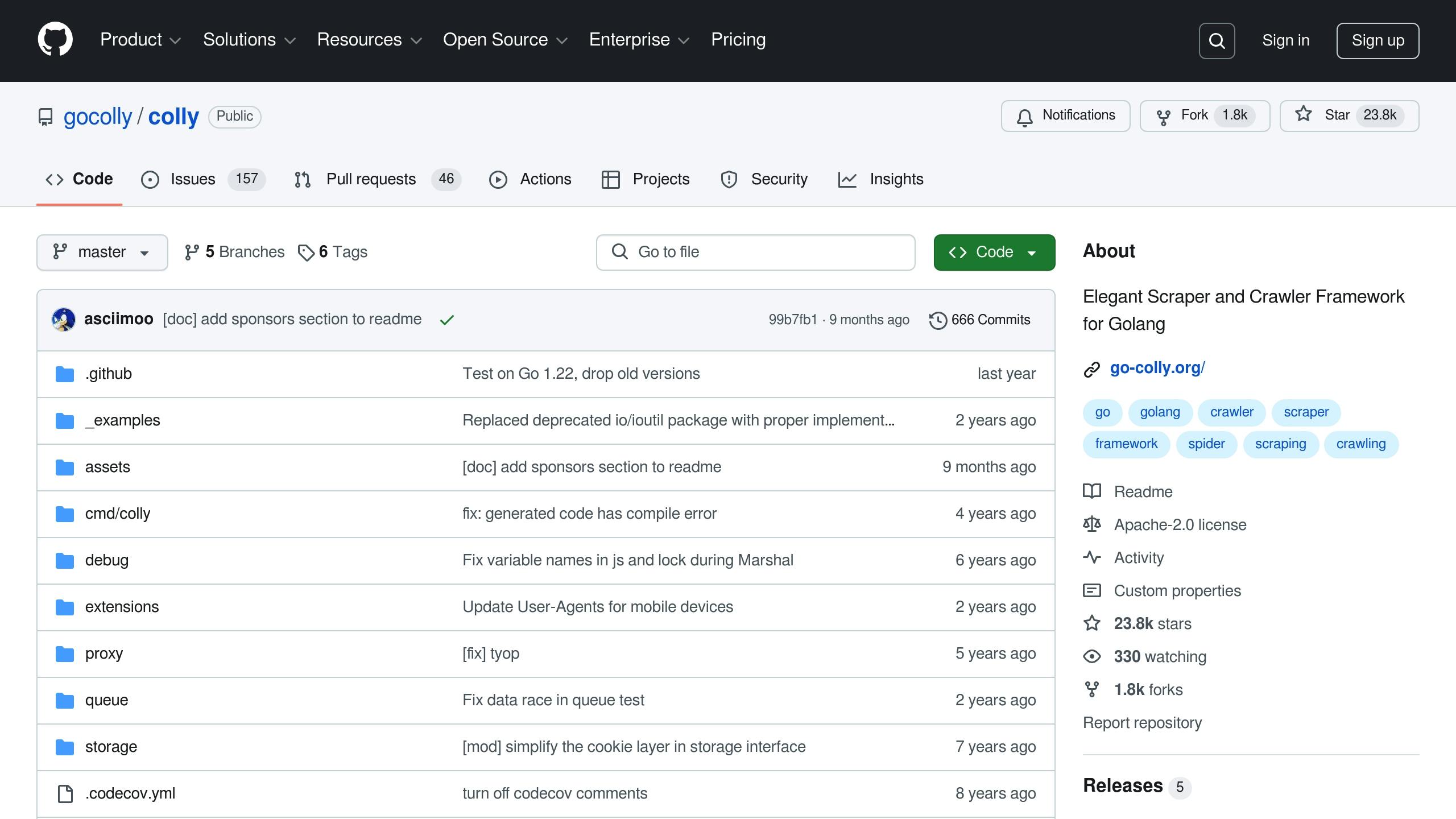

Required Libraries

For this project, you'll use Colly, a popular web scraping framework. Install it with:

go get github.com/gocolly/colly/v2

Colly offers a range of features, including:

- Fast HTML parsing

- Cookie handling and session management

- Support for concurrent scraping

- Built-in rate limiting

To expand functionality, consider adding these additional packages:

| Package | Purpose | Installation Command |
|---|---|---|
| Goquery | jQuery-like HTML parsing | go get github.com/PuerkitoBio/goquery |
| Rod | Scraping JavaScript-heavy websites | go get github.com/go-rod/rod |
| Surf | Stateful browsing | go get github.com/headzoo/surf |

After installing these, check your go.mod file to confirm the packages are listed.

"A small language that compiles fast makes for a happy developer." - Clayton Coleman, Lead Engineer at RedHat

With your tools and libraries in place, your development environment is ready to start building the web scraper.

Core Scraper Code

Here's how to set up the core functionality of your web scraper by structuring its code and making the initial web request.

Package Setup

Start by importing the necessary packages:

    package main

    import (
        "fmt" // used by the event handlers added later in this section
        "log"

        "github.com/gocolly/colly/v2"
    )

Next, initialize the main function and set up a basic request:

    func main() {
        // Initialize collector
        c := colly.NewCollector()

        // Visit returns an error if the request fails; stop the program in that case
        if err := c.Visit("https://example.com"); err != nil {
            log.Fatal(err)
        }
    }

Colly Setup

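Configure the Colly collector with specific settings. The debugger lives in a separate package, so add "github.com/gocolly/colly/v2/debug" to your imports:

    c := colly.NewCollector(
        colly.AllowedDomains("example.com"),
        colly.UserAgent("Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"),
        colly.Debugger(&debug.LogDebugger{}), // logs collector events
    )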

Here's a quick breakdown of the key configuration options:

| Setting | Purpose | Example Value |
|---|---|---|
| AllowedDomains | Limits scraping to specific domains | "example.com" |
| UserAgent | Identifies the scraper to the website | "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36" |
| Async | Enables concurrent requests (call c.Wait() before the program exits) | true/false |

Once the collector is set up, you can define event handlers to manage requests and responses.

First Web Request

Use event handlers to log activity and scrape data. Register them before calling c.Visit, because handlers added afterwards never see the response:

    c.OnRequest(func(r *colly.Request) {
        fmt.Printf("Visiting %s\n", r.URL)
    })

    c.OnResponse(func(r *colly.Response) {
        fmt.Printf("Status: %d\n", r.StatusCode)
    })

    c.OnHTML("title", func(e *colly.HTMLElement) {
        fmt.Printf("Page Title: %s\n", e.Text)
    })

    c.OnError(func(r *colly.Response, err error) {
        fmt.Printf("Error on %s: %s\n", r.Request.URL, err)
    })

Extracting Specific Data

To collect specific elements, use CSS selectors. Here's an example for scraping product data:

    c.OnHTML(".product_pod", func(e *colly.HTMLElement) {
        title := e.ChildAttr(".image_container img", "alt")
        price := e.ChildText(".price_color")
        fmt.Printf("Product: %s - Price: %s\n", title, price)
    })

This setup allows you to extract product names and prices from a webpage efficiently. Use similar patterns for other types of data by adjusting the CSS selectors.
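
Prices scraped this way arrive as text, often with a currency symbol attached. As a rough sketch, a small helper can turn such a string into a number before storage. The name parsePrice is hypothetical, and the helper assumes a dot as the decimal separator and a comma as the thousands separator:

```go
package main

import (
	"fmt"
	"strconv"
	"strings"
)

// parsePrice keeps only digits and dots from a scraped price such as
// "£51.77" and converts the remainder to a float64. It assumes "." is the
// decimal separator; "," is treated as a thousands separator and dropped.
func parsePrice(raw string) (float64, error) {
	cleaned := strings.Map(func(r rune) rune {
		if (r >= '0' && r <= '9') || r == '.' {
			return r
		}
		return -1 // drop currency symbols, commas, and whitespace
	}, raw)
	if cleaned == "" {
		return 0, fmt.Errorf("no digits in price %q", raw)
	}
	return strconv.ParseFloat(cleaned, 64)
}

func main() {
	for _, raw := range []string{"£51.77", "$1,299.00", "N/A"} {
		value, err := parsePrice(raw)
		if err != nil {
			fmt.Println("skipping:", err)
			continue
		}
		fmt.Printf("%q parsed as %.2f\n", raw, value)
	}
}
```

Sites that format prices as "1.299,00" would need the separators swapped, so check a few real values before relying on this.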


Data Extraction Methods

CSS Selector Guide

To kick off data extraction, use precise CSS selectors that target specific HTML elements. When creating a Go web scraper, aim for selectors that are stable and less likely to break if the website's layout changes.

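    c.OnHTML(".product-card", func(e *colly.HTMLElement) {
        // Extract data using child selectors
        name := e.ChildText(".product-name")
        price := e.ChildText("[data-test='price']")
        description := e.ChildAttr("meta[itemprop='description']", "content")
        // Go rejects unused variables, so use the values (here: print them)
        fmt.Printf("%s | %s | %s\n", name, price, description)
    })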

| Selector Type | Example | Purpose |
|---|---|---|
| Class | .product-card | Commonly used, stable identifiers |
| Attribute | [data-test='price'] | Attributes designed for testing, often consistent |
| ARIA | [aria-label='Product description'] | Accessibility attributes for reliable targeting |
| Combined | .product-card:has(h3) | Handles complex element relationships |

Once you've defined your selectors, you can extract the content more effectively.

Content Extraction

Using Colly's built-in methods, you can extract various types of content. Here's how you can retrieve text, attributes, and metadata:

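    c.OnHTML("article", func(e *colly.HTMLElement) {
        title := e.ChildText("h1")            // text of the article heading
        imageURL := e.ChildAttr("img", "src") // src of the first image in the article
        fmt.Printf("Title: %s, Image: %s\n", title, imageURL)
    })

    // Open Graph tags live in <head>, outside <article>, so match them separately
    c.OnHTML("meta[property='og:description']", func(e *colly.HTMLElement) {
        fmt.Printf("Description: %s\n", e.Attr("content"))
    })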

This approach helps you gather the information you need while keeping the code clean and efficient.

Data Storage Options

After extracting the data, it's important to store it in a structured format. JSON is a popular choice for its simplicity and compatibility with many tools:

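    // Requires the standard-library packages encoding/json, os, and time.
    type Product struct {
        Name      string    `json:"name"`
        Price     float64   `json:"price"`
        UpdatedAt time.Time `json:"updated_at"`
    }

    // saveToJSON writes the products to products.json as indented JSON.
    func saveToJSON(products []Product) error {
        data, err := json.MarshalIndent(products, "", "  ")
        if err != nil {
            return err
        }
        // os.WriteFile replaces the deprecated ioutil.WriteFile
        return os.WriteFile("products.json", data, 0644)
    }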
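
The introduction also mentions CSV as a storage format. A comparable export can be sketched with the standard encoding/csv package. The Product type below is a reduced copy of the struct above so that the example compiles on its own, and the column names are an assumption:

```go
package main

import (
	"encoding/csv"
	"fmt"
	"io"
	"os"
	"strconv"
	"strings"
)

// Product is a reduced copy of the struct used for JSON storage.
type Product struct {
	Name  string
	Price float64
}

// writeCSV writes a header row followed by one row per product.
func writeCSV(w io.Writer, products []Product) error {
	cw := csv.NewWriter(w)
	if err := cw.Write([]string{"name", "price"}); err != nil {
		return err
	}
	for _, p := range products {
		row := []string{p.Name, strconv.FormatFloat(p.Price, 'f', 2, 64)}
		if err := cw.Write(row); err != nil {
			return err
		}
	}
	cw.Flush() // csv.Writer buffers output; Flush pushes the rows to w
	return cw.Error()
}

// productsToCSV renders the rows into a string, which is convenient in tests.
func productsToCSV(products []Product) (string, error) {
	var sb strings.Builder
	err := writeCSV(&sb, products)
	return sb.String(), err
}

func main() {
	products := []Product{{Name: "A Light in the Attic", Price: 51.77}}
	if err := writeCSV(os.Stdout, products); err != nil {
		fmt.Fprintln(os.Stderr, "csv export failed:", err)
	}
}
```

Because writeCSV accepts any io.Writer, the same function can target a file opened with os.Create, standard output, or an in-memory buffer.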

A 2021 performance test by Oxylabs showed that Go web scrapers using Colly processed data 45% faster than Python-based alternatives. For example, Colly completed tasks in under 12 seconds compared to Python's 22 seconds.

To refine your scraping process, use your browser's developer tools to inspect selectors, convert non-UTF-8 pages to UTF-8 before parsing, and handle errors. When a page lacks stable class names or IDs, combine CSS selectors with text-based targeting through the :has() and :contains() pseudo-classes:

    c.OnHTML(".specList:has(h3:contains('Property Information'))", func(e *colly.HTMLElement) {
        buildYear := e.ChildText("li:contains('Built')")
        location := e.ChildText("li:contains('Location')")
        fmt.Printf("Built: %s, Location: %s\n", buildYear, location)
    })

This approach copes with layouts where the visible text is the only reliable hook.

Advanced Scraping Tips

Multi-page Scraping

When dealing with paginated content, you'll need a solid approach to navigate through pages. Use a CSS selector to target the "next" button and convert relative links into absolute URLs while keeping track of visited pages:

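    // Requires the standard-library packages log, sync, and time.
    var visitedURLs sync.Map // pages already queued; safe for concurrent use

    c.OnHTML(".next", func(e *colly.HTMLElement) {
        nextPage := e.Request.AbsoluteURL(e.Attr("href"))
        // Ensure each URL is visited only once
        if _, visited := visitedURLs.LoadOrStore(nextPage, true); !visited {
            time.Sleep(2 * time.Second) // pause before requesting the next page
            if err := e.Request.Visit(nextPage); err != nil {
                log.Printf("Could not visit %s: %v", nextPage, err)
            }
        }
    })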
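
To make the two steps in that handler explicit (resolving a relative link, then checking whether it was already seen), here is a standalone sketch that uses only the standard library. The visitTracker type and its next method are hypothetical names introduced for illustration:

```go
package main

import (
	"fmt"
	"net/url"
	"sync"
)

// visitTracker remembers which absolute URLs have already been queued.
type visitTracker struct {
	seen sync.Map
}

// next resolves href against the page it was found on, drops any fragment,
// and reports whether the resulting URL has not been seen before.
func (t *visitTracker) next(pageURL, href string) (string, bool, error) {
	base, err := url.Parse(pageURL)
	if err != nil {
		return "", false, err
	}
	ref, err := url.Parse(href)
	if err != nil {
		return "", false, err
	}
	abs := base.ResolveReference(ref)
	abs.Fragment = "" // "#top" and similar anchors point at the same page
	key := abs.String()
	_, loaded := t.seen.LoadOrStore(key, struct{}{})
	return key, !loaded, nil
}

func main() {
	tracker := &visitTracker{}
	page := "https://example.com/catalogue/page-1.html"
	for _, href := range []string{"page-2.html", "page-2.html#top", "/catalogue/page-3.html"} {
		u, fresh, err := tracker.next(page, href)
		if err != nil {
			fmt.Println("bad link:", err)
			continue
		}
		fmt.Printf("%s (new: %t)\n", u, fresh)
	}
}
```

Stripping the fragment before the lookup prevents the same page from being queued twice under slightly different links.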

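Colly does not execute JavaScript, so pausing before a request will not make client-side content appear; for JavaScript-rendered pages, use a headless browser such as Rod (listed under Required Libraries). A fixed pause in OnRequest is still useful for pacing requests to slow or easily overloaded sites:

    // addRequestDelay sets a User-Agent header and pauses before every request.
    // The pause only slows the scraper down; it does not wait for scripts to run.
    func addRequestDelay(c *colly.Collector) {
        c.OnRequest(func(r *colly.Request) {
            r.Headers.Set("User-Agent", "Mozilla/5.0 (Windows NT 10.0; Win64; x64)")
            time.Sleep(3 * time.Second)
        })
    }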

Once pagination is under control, focus on maintaining an efficient request speed.

Speed and Rate Limits

To avoid overloading servers or triggering anti-scraping mechanisms, regulate your request rate:

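    // Requires context, log, time, and golang.org/x/time/rate.
    limiter := rate.NewLimiter(rate.Every(2*time.Second), 1) // one request every 2 seconds

    c.OnRequest(func(r *colly.Request) {
        // Block until the limiter grants a slot; abort the request if waiting fails
        if err := limiter.Wait(context.Background()); err != nil {
            log.Printf("Rate limiter error: %v", err)
            r.Abort()
        }
    })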
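
If you prefer not to add a dependency, the same idea can be approximated with the standard library. The following is a simplified sketch rather than a replacement for a full token-bucket limiter; intervalLimiter and its helpers are hypothetical names, and the limiter only enforces a minimum gap between calls:

```go
package main

import (
	"fmt"
	"sync"
	"time"
)

// intervalLimiter enforces a minimum gap between successive calls to wait.
type intervalLimiter struct {
	mu   sync.Mutex
	gap  time.Duration
	next time.Time // earliest moment the next caller may proceed
}

// newIntervalLimiter builds a limiter with the given gap in milliseconds.
func newIntervalLimiter(gapMillis int) *intervalLimiter {
	return &intervalLimiter{gap: time.Duration(gapMillis) * time.Millisecond}
}

// wait reserves the next free slot and sleeps until it arrives.
// Concurrent callers each reserve their own slot, so they are spaced out too.
func (l *intervalLimiter) wait() {
	l.mu.Lock()
	now := time.Now()
	if l.next.Before(now) {
		l.next = now
	}
	sleepFor := l.next.Sub(now)
	l.next = l.next.Add(l.gap)
	l.mu.Unlock()
	time.Sleep(sleepFor)
}

// timeCalls runs n waits back to back and returns the total elapsed time.
func timeCalls(l *intervalLimiter, n int) time.Duration {
	start := time.Now()
	for i := 0; i < n; i++ {
		l.wait()
	}
	return time.Since(start)
}

func main() {
	lim := newIntervalLimiter(200)
	fmt.Println("3 calls took about", timeCalls(lim, 3).Round(10*time.Millisecond))
}
```

In a scraper, wait would be called at the top of the OnRequest handler, in the same place as limiter.Wait above.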

Error Management

Handling errors effectively is key to keeping your scraper running smoothly. For example, if a 429 status code is returned, check the Retry-After header and retry the request after the specified delay:

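    c.OnError(func(r *colly.Response, err error) {
        log.Printf("Error at %v: %v", r.Request.URL, err)
        // Headers can be nil when the request failed before a response arrived
        if r.StatusCode != 429 || r.Headers == nil {
            return
        }
        // Retry-After may be missing or an HTTP date; fall back to a fixed delay
        delay, convErr := strconv.Atoi(r.Headers.Get("Retry-After"))
        if convErr != nil || delay <= 0 {
            delay = 5
        }
        time.Sleep(time.Duration(delay) * time.Second)
        // In production, cap the number of retries to avoid looping forever
        if retryErr := r.Request.Retry(); retryErr != nil {
            log.Printf("Retry failed for %v: %v", r.Request.URL, retryErr)
        }
    })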
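
The Retry-After header can carry either a number of seconds or an HTTP date. A hedged sketch of a helper that accepts both forms is shown below; retryDelay, defaultRetryDelay, and referenceTime are hypothetical names, and the 5-second fallback is an arbitrary choice:

```go
package main

import (
	"fmt"
	"net/http"
	"strconv"
	"strings"
	"time"
)

// defaultRetryDelay is an assumed fallback for missing or malformed headers.
const defaultRetryDelay = 5 * time.Second

// referenceTime is a fixed "now" used by the demo so the result is stable.
var referenceTime = time.Date(2015, time.October, 21, 7, 0, 0, 0, time.UTC)

// retryDelay interprets a Retry-After value relative to now. It accepts
// delay-seconds ("120") or an HTTP date ("Wed, 21 Oct 2015 07:28:00 GMT").
func retryDelay(header string, now time.Time) time.Duration {
	header = strings.TrimSpace(header)
	if secs, err := strconv.Atoi(header); err == nil && secs >= 0 {
		return time.Duration(secs) * time.Second
	}
	if t, err := http.ParseTime(header); err == nil {
		if d := t.Sub(now); d > 0 {
			return d
		}
		return 0 // the date is already in the past
	}
	return defaultRetryDelay
}

func main() {
	for _, h := range []string{"120", "Wed, 21 Oct 2015 07:28:00 GMT", ""} {
		fmt.Printf("%q -> wait %v\n", h, retryDelay(h, referenceTime))
	}
}
```

In the error handler, the value from r.Headers.Get("Retry-After") and time.Now() would be passed in, and the result handed to time.Sleep.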

"ScraperAPI's advanced bypassing system automatically handles most web scraping complexities, preventing errors and keeping your data pipelines flowing." - ScraperAPI

To further refine retries, implement exponential backoff for better pacing:

    // retryWithBackoff sleeps for 2^attempt seconds unless the attempt limit is reached.
    func retryWithBackoff(attempt int, maxAttempts int) {
        if attempt >= maxAttempts {
            return
        }
        delay := time.Duration(math.Pow(2, float64(attempt))) * time.Second
        time.Sleep(delay)
    }
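
The helper above only sleeps; it does not run or re-run the failed operation. One way to wire backoff into a retry loop is sketched here, with an upper bound on the delay. The names backoffDelay and retry and the constants baseDelay and maxDelay are assumptions made for this example:

```go
package main

import (
	"fmt"
	"time"
)

const (
	baseDelay = 1 * time.Second  // delay before the first retry
	maxDelay  = 30 * time.Second // upper bound so waits do not grow unbounded
)

// backoffDelay returns baseDelay * 2^attempt, capped at maxDelay.
func backoffDelay(attempt int) time.Duration {
	if attempt < 0 {
		attempt = 0
	}
	if attempt > 30 {
		return maxDelay // avoid overflowing the shift below
	}
	d := baseDelay << uint(attempt)
	if d > maxDelay {
		return maxDelay
	}
	return d
}

// retry calls op until it succeeds or maxAttempts is reached, sleeping with
// exponential backoff between failed attempts.
func retry(maxAttempts int, op func() error) error {
	if maxAttempts < 1 {
		maxAttempts = 1
	}
	var err error
	for attempt := 0; attempt < maxAttempts; attempt++ {
		if err = op(); err == nil {
			return nil
		}
		if attempt < maxAttempts-1 {
			time.Sleep(backoffDelay(attempt))
		}
	}
	return fmt.Errorf("gave up after %d attempts: %w", maxAttempts, err)
}

func main() {
	// Print the schedule instead of sleeping through it.
	for attempt := 0; attempt <= 6; attempt++ {
		fmt.Printf("attempt %d: wait %v\n", attempt, backoffDelay(attempt))
	}
}
```

Adding a small random jitter to each delay is a common refinement when many scrapers might retry at the same moment.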

Finally, track key metrics to monitor and improve your scraper's performance:

    // ScraperMetrics collects basic counters for one scraping run.
    type ScraperMetrics struct {
        StartTime    time.Time
        RequestCount int
        ErrorCount   int
        Duration     time.Duration
    }

    // logMetrics prints a one-line summary of the run.
    func logMetrics(metrics *ScraperMetrics) {
        log.Printf("Scraping completed: %d requests, %d errors, duration: %v",
            metrics.RequestCount, metrics.ErrorCount, metrics.Duration)
    }
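
Plain int counters like these are not safe to update from several goroutines at once, which matters when the collector runs asynchronously. A minimal sketch of concurrency-safe counters using sync/atomic follows; the counters type and the simulate helper are hypothetical and exist only to demonstrate the pattern:

```go
package main

import (
	"fmt"
	"sync"
	"sync/atomic"
)

// counters holds request and error totals that can be updated concurrently.
type counters struct {
	requests int64
	errors   int64
}

func (c *counters) recordRequest() { atomic.AddInt64(&c.requests, 1) }
func (c *counters) recordError()   { atomic.AddInt64(&c.errors, 1) }

// snapshot returns the current totals.
func (c *counters) snapshot() (requests, errors int64) {
	return atomic.LoadInt64(&c.requests), atomic.LoadInt64(&c.errors)
}

// simulate starts several workers that each record perWorker requests and
// mark every tenth one (i = 0, 10, 20, ...) as an error.
func simulate(workers, perWorker int) (int64, int64) {
	var c counters
	var wg sync.WaitGroup
	for w := 0; w < workers; w++ {
		wg.Add(1)
		go func() {
			defer wg.Done()
			for i := 0; i < perWorker; i++ {
				c.recordRequest()
				if i%10 == 0 {
					c.recordError()
				}
			}
		}()
	}
	wg.Wait()
	return c.snapshot()
}

func main() {
	requests, errs := simulate(8, 100)
	fmt.Printf("%d requests, %d errors\n", requests, errs)
}
```

In a scraper, recordRequest would be called from OnRequest and recordError from OnError.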

Best Practices and Ethics

Project Summary

Our Go web scraper uses Colly to extract data with CSS selectors, follow pagination, throttle requests, and recover from errors. The next sections sketch further improvements, such as proxy rotation and data validation, and cover the ethical practices that should guide any scraper.

Future Improvements

Building on existing error handling and rate limiting strategies, you can take your scraper to the next level by incorporating these features:

    // Add proxy rotation to distribute scraping load
    type ProxyRotator struct {
        proxies []string
        current int
        mutex   sync.Mutex
    }

    // GetNext returns the next proxy in round-robin order.
    func (pr *ProxyRotator) GetNext() string {
        pr.mutex.Lock()
        defer pr.mutex.Unlock()
        if len(pr.proxies) == 0 {
            return "" // nothing to rotate through
        }
        proxy := pr.proxies[pr.current]
        pr.current = (pr.current + 1) % len(pr.proxies)
        return proxy
    }

    // Validate and sanitize scraped data before storing
    type DataValidator struct {
        Schema    map[string]string
        Sanitizer func(string) string
    }

    func (dv *DataValidator) ValidateAndStore(data map[string]string) error {
        // Add validation logic here
        return nil
    }
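
The validation step is left as a stub above. One possible shape for it is sketched below, under the assumption that Schema maps each field name to either "required" or "optional". That convention, the Validate method, and newProductValidator are all illustrative choices, not something the original design specifies:

```go
package main

import (
	"fmt"
	"strings"
)

// DataValidator checks scraped records against a simple schema.
type DataValidator struct {
	Schema    map[string]string   // field name -> "required" or "optional" (assumed convention)
	Sanitizer func(string) string // applied to every value before checking
}

// Validate returns a sanitized copy of data containing only schema fields.
// It fails if a required field is missing or empty after sanitizing.
func (dv *DataValidator) Validate(data map[string]string) (map[string]string, error) {
	clean := make(map[string]string, len(dv.Schema))
	for field, rule := range dv.Schema {
		value := data[field]
		if dv.Sanitizer != nil {
			value = dv.Sanitizer(value)
		}
		if value == "" {
			if rule == "required" {
				return nil, fmt.Errorf("missing required field %q", field)
			}
			continue // optional and empty: leave it out
		}
		clean[field] = value
	}
	return clean, nil
}

// newProductValidator builds a validator for the product records in this guide.
func newProductValidator() *DataValidator {
	return &DataValidator{
		Schema: map[string]string{
			"name":        "required",
			"price":       "required",
			"description": "optional",
		},
		Sanitizer: strings.TrimSpace,
	}
}

func main() {
	dv := newProductValidator()
	record := map[string]string{"name": "  Sample Book ", "price": "£51.77", "tracking": "x"}
	clean, err := dv.Validate(record)
	if err != nil {
		fmt.Println("rejected:", err)
		return
	}
	fmt.Println("accepted fields:", len(clean))
}
```

Fields that are not in the schema are dropped, which keeps unexpected page content out of the stored data.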

These additions not only improve efficiency but also align your scraper with ethical guidelines discussed below.

Scraping Guidelines

Ethical scraping requires careful adherence to rules and best practices. Here's an example of how to respect a site's robots.txt file:

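    // Requires fmt, net/http, and a robots.txt parser; the robotstxt package
    // is assumed here to be github.com/temoto/robotstxt.
    func getRobotsTxtRules(domain string) (*robotstxt.RobotsData, error) {
        resp, err := http.Get(fmt.Sprintf("https://%s/robots.txt", domain))
        if err != nil {
            return nil, err
        }
        defer resp.Body.Close()

        // Return the parse error to the caller instead of silently dropping it
        return robotstxt.FromResponse(resp)
    }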

Key practices to follow:

| Requirement | Implementation Details |
|---|---|
| Rate Limiting | Add delays of 2-5 seconds between requests |
| User Agent | Include contact information in the user-agent string |
| Data Privacy | Avoid collecting personal or sensitive data |
| Server Load | Monitor server response times and adjust request rates |
| Legal Compliance | Review terms of service and robots.txt guidelines |
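
The first two rows of the table translate directly into code. The sketch below picks a random delay in the 2-5 second range and builds a user-agent string that carries a contact URL. The function names, the scraper name, and the "(+URL)" format are assumptions for illustration; the format follows a common crawler convention but is not a requirement:

```go
package main

import (
	"fmt"
	"math/rand"
	"time"
)

const (
	minDelay = 2 * time.Second
	maxDelay = 5 * time.Second
)

// politeDelay returns a random duration between minDelay and maxDelay,
// inclusive, so requests do not arrive at a fixed rhythm.
func politeDelay() time.Duration {
	span := int64(maxDelay - minDelay)
	return minDelay + time.Duration(rand.Int63n(span+1))
}

// userAgent builds an identifying string that includes a contact address.
func userAgent(name, version, contact string) string {
	return fmt.Sprintf("%s/%s (+%s)", name, version, contact)
}

func main() {
	fmt.Println(userAgent("example-scraper", "1.0", "https://example.com/bot-info"))
	fmt.Println("next request in", politeDelay().Round(time.Millisecond))
}
```

The resulting string can be passed to colly.UserAgent, and the delay to time.Sleep inside an OnRequest handler.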