Web scraping at scale is challenging but essential for industries like e-commerce and finance. Here's how to make it work:

- Use Distributed Systems: Split tasks across servers for parallel processing.

- Leverage Cloud Platforms: Scale easily with global distribution and redundancy.

- Integrate AI: Automate CAPTCHA solving, adapt to site changes, and improve reliability.

Key Challenges:

- Managing resources like computing power and storage.

- Ensuring system reliability with minimal downtime.

- Overcoming anti-scraping measures like bot detection.

Solutions:

- Resource Management: Choose serverless, dedicated, or hybrid setups based on needs.

- Automation: Schedule tasks smartly and retry failed scrapes automatically.

- Performance Monitoring: Use tools like Elasticsearch and Kibana to track system health.

Recommended Tools:

- InstantAPI.ai: AI-powered scraping, with plans that allow over 120,000 scrapes per month.

- Scrapy Cloud: Distributed scraping with robust customization.

- ScrapeHero Cloud: Pre-built crawlers for complex tasks.

Quick Comparison:

| Feature | InstantAPI.ai | Scrapy Cloud | ScrapeHero Cloud |
|---|---|---|---|
| Scale | 120,000+ scrapes/month | Unlimited pages/crawl | Flexible export options |
| AI Integration | Yes | No | Yes |
| Best For | Dynamic sites | Enterprise-level | Simplifying complex tasks |

Start with the right architecture, tools, and strategies to handle large-scale web scraping efficiently while staying compliant with legal guidelines.

How to build a scalable Web Scraping Infrastructure?

Key Elements of Scalable Web Scraping Systems

Creating an efficient web scraping system capable of handling large-scale operations requires a well-thought-out combination of components. Let’s break down the core elements that ensure scalability and performance.

Leveraging Distributed Systems

Distributed systems split tasks across multiple servers, increasing efficiency and reducing bottlenecks. This setup enables parallel processing, allowing you to scrape several web pages at once - a critical feature for time-sensitive projects.

For example, ScraperAPI handles vast networks of proxies, automating tasks like load balancing and resource allocation. This setup achieves over 95% success rates even on websites with strict protections.
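To make the parallel-processing idea concrete, here is a minimal sketch that fans a batch of URLs out to a pool of worker threads on a single machine. It is an illustration under assumptions, not how any particular service works: `fetch_page` and `scrape_in_parallel` are hypothetical names, and a truly distributed setup would replace the thread pool with a shared job queue feeding workers on several servers.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed
from typing import Callable, Dict, Iterable
from urllib.request import urlopen


def fetch_page(url: str, timeout: float = 10.0) -> bytes:
    """Download one page body using only the standard library."""
    with urlopen(url, timeout=timeout) as response:
        return response.read()


def scrape_in_parallel(
    urls: Iterable[str],
    fetch: Callable[[str], bytes] = fetch_page,
    max_workers: int = 8,
) -> Dict[str, object]:
    """Fetch every URL concurrently; map each URL to its body or its exception."""
    results: Dict[str, object] = {}
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # Submit all jobs up front, then collect them as they finish.
        futures = {pool.submit(fetch, url): url for url in urls}
        for future in as_completed(futures):
            url = futures[future]
            try:
                results[url] = future.result()
            except Exception as exc:  # one failed page must not stop the batch
                results[url] = exc
    return results
```

Passing the `fetch` function in as a parameter keeps the fan-out logic separate from the download logic, so the same structure can wrap a proxy-aware or rate-limited fetcher.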

While distributed systems are excellent for workload management, cloud platforms provide the flexibility to scale operations seamlessly.

Advantages of Cloud Platforms

| Feature | Advantage |
|---|---|
| Global Distribution | Faster, localized scraping with worldwide servers |
| Built-in Redundancy | Increased reliability with automatic failover |
| Managed Services | Reduced effort on maintenance and infrastructure |

Platforms like Scrapy Cloud allow you to expand your operations without the need for upfront investments in infrastructure, making scaling easier and cost-effective.

But infrastructure alone isn’t enough. AI adds a layer of intelligence that takes web scraping to the next level.

How AI Enhances Web Scraping

AI tools bring automation and intelligence to web scraping. They handle tasks like CAPTCHA solving, recognizing dynamic patterns, and fixing errors. AI also improves proxy rotation and anti-detection strategies, making scraping more reliable.

For instance, ScrapeHero Cloud uses AI to adapt to changing website layouts, maintaining a 99.9% accuracy rate without needing manual updates to scripts.
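The proxy rotation mentioned above is worth seeing in its simplest form. The sketch below is a plain round-robin rotator that skips proxies flagged as blocked. It is deliberately not AI-driven, and the `ProxyRotator` class and its method names are hypothetical; a smarter system would replace the fixed cycle with a policy that learns which proxies work for which sites.

```python
import itertools
from typing import Iterator, List, Set


class ProxyRotator:
    """Cycle through proxies round-robin, skipping ones marked as blocked."""

    def __init__(self, proxies: List[str]) -> None:
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._proxies = list(proxies)
        self._cycle: Iterator[str] = itertools.cycle(self._proxies)
        self._blocked: Set[str] = set()

    def mark_blocked(self, proxy: str) -> None:
        """Record that a target site rejected this proxy."""
        self._blocked.add(proxy)

    def next_proxy(self) -> str:
        # Make at most one full pass over the pool before giving up.
        for _ in range(len(self._proxies)):
            candidate = next(self._cycle)
            if candidate not in self._blocked:
                return candidate
        raise RuntimeError("all proxies are blocked")
```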

Steps to Build Scalable Web Scraping Workflows

Creating workflows that can handle large-scale web scraping projects requires careful planning to ensure they are efficient and reliable. Here’s how you can build workflows that meet these demands.

Managing Resources Effectively

Efficient resource management is key to scaling web scraping operations. Different setups suit different needs, and here’s a quick breakdown:

| Approach | Benefits | Ideal Scenarios |
|---|---|---|
| Serverless Computing | Automatically scales with demand; pay-as-you-go | Best for seasonal spikes, like holiday e-commerce campaigns |
| Dedicated Servers | Complete control and fixed costs | Perfect for ongoing, high-volume tasks like price tracking |
| Hybrid Setup | Combines flexibility with cost savings | Works well for workloads that mix steady and burst traffic needs |

For example, Scrapy Cloud allows for unlimited pages per crawl and adjusts resources dynamically based on demand, showcasing how resource management can be handled effectively.
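One way to reason about the serverless-versus-dedicated choice is a simple break-even calculation. The sketch below assumes a flat per-request serverless price and a fixed monthly server cost. Both are hypothetical inputs you would take from your own provider's pricing, and real bills include bandwidth, storage, and proxy costs that this ignores.

```python
def monthly_serverless_cost(requests_per_month: int, cost_per_1k_requests: float) -> float:
    """Pay-as-you-go cost: scales linearly with request volume."""
    return requests_per_month / 1000 * cost_per_1k_requests


def break_even_requests(cost_per_1k_requests: float, dedicated_monthly_cost: float) -> float:
    """Monthly request volume at which both setups cost the same."""
    return dedicated_monthly_cost / cost_per_1k_requests * 1000


def cheaper_setup(
    requests_per_month: int,
    cost_per_1k_requests: float,
    dedicated_monthly_cost: float,
) -> str:
    """Return which setup is cheaper for a given steady monthly volume."""
    serverless = monthly_serverless_cost(requests_per_month, cost_per_1k_requests)
    return "serverless" if serverless < dedicated_monthly_cost else "dedicated"
```

Below the break-even volume, pay-as-you-go tends to win. Above it, the fixed cost of a dedicated server is spread over enough requests to come out cheaper, which matches the steady, high-volume scenario in the table.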

Automating Tasks and Scheduling

Automation removes much of the manual work in running scrapers. Scheduling crawls during low-traffic hours, retrying failed tasks automatically, and collecting only new or updated data all save time and reduce costs.

One financial data provider used these techniques to cut operational costs by 40%. They achieved this through smart scheduling and incremental updates, proving how automation can drive efficiency.
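Two of the techniques above, automatic retries and incremental updates, can be sketched in a few lines. This is a minimal illustration, not the setup used by the provider mentioned above: `fetch_with_retry` and `changed_since_last_crawl` are hypothetical helpers, the backoff schedule is an assumption, and change detection here relies on a content hash kept in an in-memory dict that a real system would persist.

```python
import hashlib
import time
from typing import Callable, Dict


def fetch_with_retry(
    fetch: Callable[[str], bytes],
    url: str,
    max_attempts: int = 3,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> bytes:
    """Call fetch(url), retrying with exponential backoff on any exception."""
    for attempt in range(1, max_attempts + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == max_attempts:
                raise  # out of attempts: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))  # waits 1x, 2x, 4x, ... base_delay
    raise ValueError("max_attempts must be at least 1")


def changed_since_last_crawl(url: str, body: bytes, seen: Dict[str, str]) -> bool:
    """Return True (and record the new hash) only when the content changed."""
    digest = hashlib.sha256(body).hexdigest()
    if seen.get(url) == digest:
        return False  # identical to the previous crawl: skip reprocessing
    seen[url] = digest
    return True
```

Skipping unchanged pages before parsing and storage is where incremental crawling saves most of its cost, since the download itself still happens in this version.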

Tracking Performance and Reliability

Keeping an eye on performance metrics is crucial for long-term success. Tools like Elasticsearch, Logstash, and Kibana can help monitor:

- Crawl speed, completion rates, and resource usage

- Data quality and accuracy

- System health and error trends
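Before metrics can be shipped to a monitoring stack, something has to collect them. The sketch below is one hypothetical way to track the numbers listed above inside a crawler process. The `CrawlMetrics` class and its field names are assumptions, and the `summary()` dict is just a plain structure that a log shipper could forward; it does not use any Elasticsearch or Kibana API.

```python
from collections import Counter
from dataclasses import dataclass, field
from typing import Optional


@dataclass
class CrawlMetrics:
    """Accumulate per-request outcomes for one crawl run."""

    succeeded: int = 0
    failed: int = 0
    total_seconds: float = 0.0
    errors: Counter = field(default_factory=Counter)

    def record(self, seconds: float, error: Optional[str] = None) -> None:
        """Record one request: its duration and, if it failed, an error label."""
        self.total_seconds += seconds
        if error is None:
            self.succeeded += 1
        else:
            self.failed += 1
            self.errors[error] += 1

    @property
    def completion_rate(self) -> float:
        total = self.succeeded + self.failed
        return self.succeeded / total if total else 0.0

    @property
    def pages_per_second(self) -> float:
        total = self.succeeded + self.failed
        return total / self.total_seconds if self.total_seconds else 0.0

    def summary(self) -> dict:
        """Plain dict suitable for logging as one JSON document."""
        return {
            "completion_rate": self.completion_rate,
            "pages_per_second": self.pages_per_second,
            "top_errors": self.errors.most_common(3),
        }
```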


Tools and Platforms for Scalable Web Scraping

Now that we've covered the basics of scalable web scraping systems, let’s dive into the tools and platforms that can make it all happen. These solutions are built to handle large-scale, complex scraping tasks efficiently and reliably.

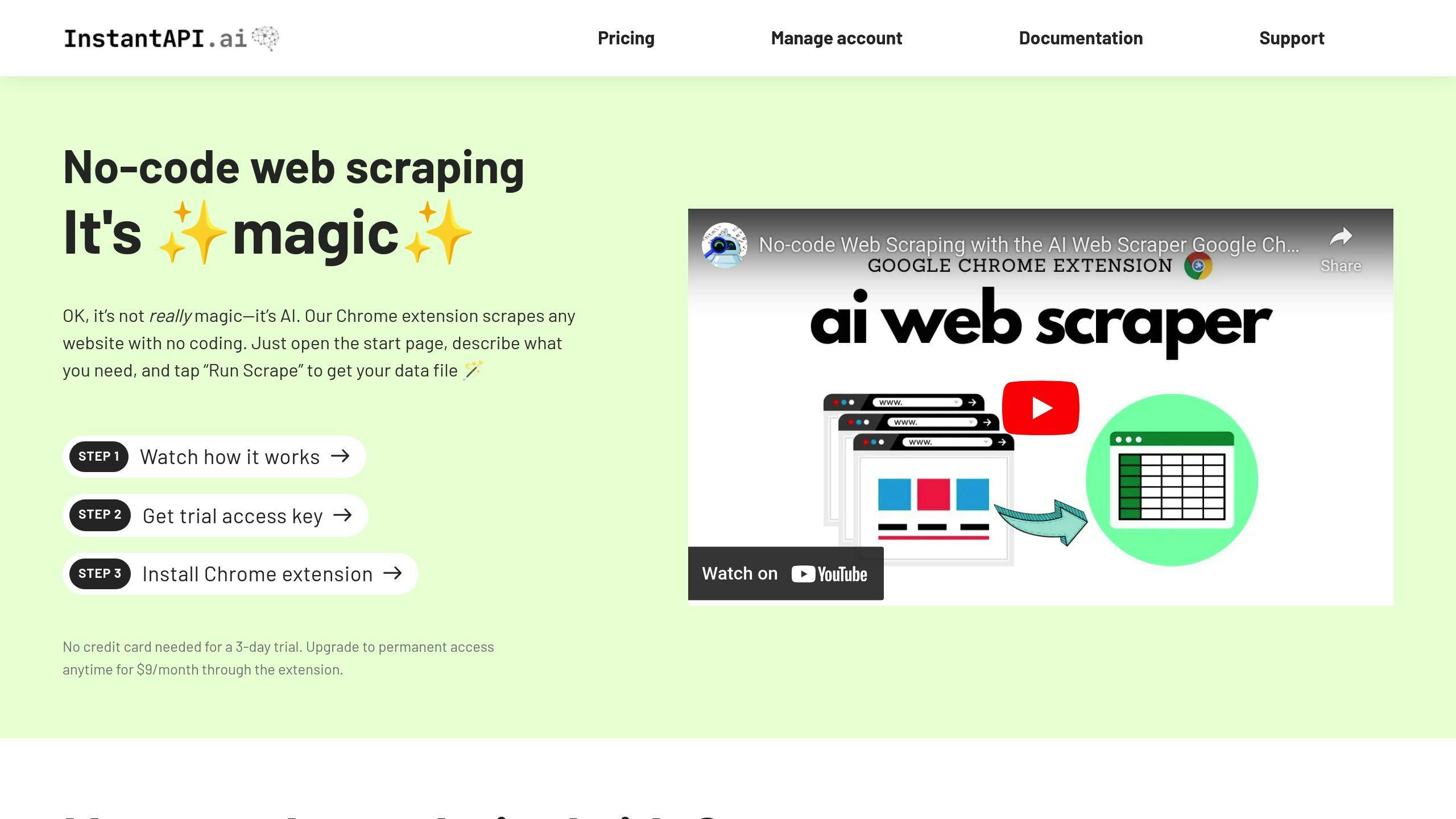

Overview of InstantAPI.ai

InstantAPI.ai takes an AI-driven approach to web scraping, simplifying the process and removing many technical hurdles. It supports everything from small-scale projects to enterprise-level operations, with plans allowing over 120,000 scrapes per month.

| Feature | What It Does |
|---|---|
| AI-Powered Extraction | Automatically adjusts to changes in website structures |
| Premium Proxies | Uses smart IP management to avoid getting blocked |
| JavaScript Rendering | Handles dynamic sites, including e-commerce platforms |
| Concurrent Processing | Boosts efficiency with parallel tasks |

Other Popular Web Scraping Tools

Beyond InstantAPI.ai, there are other strong contenders for large-scale scraping:

- Scrapy Cloud: Perfect for distributed scraping, offering extensive customization and smooth integration. It’s a great fit for enterprise-level data extraction, especially if you already use Scrapy.

- ParseHub: Known for its easy, point-and-click interface, it works well with JavaScript-heavy websites. However, it might not be the best choice for projects requiring long-term scalability.

- ScrapeHero Cloud: Comes with pre-built crawlers, automated scheduling, and flexible export options. It’s tailored for simplifying complex scraping tasks while maintaining reliability.

When choosing a tool, think about your project's specific needs. Factors like processing power, integration options, error handling, and scalability will guide you toward the right solution. Each platform brings its own strengths, so the best choice depends on your technical requirements and budget.

Conclusion

Key Points Recap

Handling large-scale web scraping effectively requires a combination of distributed systems, cloud technologies, and AI-based automation. These tools allow organizations to process massive datasets while maintaining performance and reliability. AI and cloud platforms streamline tasks like proxy management and bypassing anti-scraping defenses, making large-scale scraping more efficient and cost-effective. For example, services like ScraperAPI use AI-driven proxy management and CAPTCHA solving to improve success rates by up to 98% and reduce costs by as much as 60%.

Practical Advice for Selecting Tools

Choosing the right tools is essential for successful web scraping. Here are some practical considerations:

| Factor | What to Look For | Example Solution |
|---|---|---|
| Scale | Monthly scraping volume | InstantAPI.ai (120,000+ scrapes/month) |
| Complexity | Data structure requirements | ScrapeHero Cloud (Pre-built crawlers) |
| Budget | Cost vs. features offered | Webscraper.io ($50+/month for basic needs) |

Start by carefully evaluating your project needs, such as the volume of data, its complexity, and any technical challenges. Even if you're starting small, opt for platforms that allow for growth to avoid major overhauls as your project expands.

Lastly, ensure compliance and ethical practices by respecting robots.txt files, setting up proper rate limits, and adhering to legal guidelines. These steps will help you scale your web scraping efforts smoothly while maintaining integrity and efficiency.
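Respecting robots.txt and rate limits can be partly automated. The sketch below uses Python's standard `urllib.robotparser` to check whether a path is allowed, plus a small hypothetical `RateLimiter` class that enforces a minimum gap between requests. The robots.txt content is passed in as a string so the logic is easy to test; in practice you would download it from the target site first. None of this replaces a review of a site's terms or of the applicable law.

```python
import time
from typing import Callable, Optional
from urllib.robotparser import RobotFileParser


def build_robots_parser(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt content that has already been downloaded."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


class RateLimiter:
    """Enforce a minimum interval between consecutive requests."""

    def __init__(
        self,
        min_interval: float,
        clock: Callable[[], float] = time.monotonic,
        sleep: Callable[[float], None] = time.sleep,
    ) -> None:
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last: Optional[float] = None

    def wait(self) -> float:
        """Sleep if the previous request was too recent; return seconds slept."""
        now = self._clock()
        delay = 0.0
        if self._last is not None:
            delay = max(0.0, self.min_interval - (now - self._last))
        if delay > 0:
            self._sleep(delay)
        self._last = now + delay
        return delay
```

A crawler would call `parser.can_fetch(user_agent, url)` before each request and `limiter.wait()` immediately before sending it. If the site declares a `Crawl-delay`, `parser.crawl_delay(user_agent)` is a reasonable value for `min_interval`.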

FAQs

Is Golang or Python better for web scraping?

The choice between Golang and Python for web scraping depends on your project's specific needs. Golang stands out for its speed and efficiency, making it a great fit for large-scale scraping tasks.

Here’s a quick comparison:

| Feature | Golang | Python |
|---|---|---|
| Speed & Efficiency | Faster and more memory-efficient | Decent performance |
| Learning Curve | Steeper | Easier to learn |
| Library Ecosystem | Smaller but growing | Wide range of scraping libraries |
| Concurrency | Built into the language (goroutines and channels) | Available through standard-library modules (asyncio, threading, multiprocessing) |
| Resource Usage | Lower memory usage | Higher memory consumption |

Golang is often the go-to for enterprise-level scraping, where high performance and efficiency are critical. On the other hand, Python shines in small to mid-sized projects due to its simplicity and rich library support, allowing for faster development.

"ScrapeHero engineers note that Golang is faster for large projects, while Python excels in ease of use and library support."

Your decision should align with the scale of your project and your team's goals. While Golang offers speed and scalability, Python's ecosystem and ease of use make it ideal for quick prototyping and smaller workloads. Remember, the language is just one part of creating a robust scraping system - it also depends on the architecture and tools you choose.
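To show what concurrency looks like on the Python side, here is a minimal sketch using the standard library's `asyncio`. It caps the number of requests in flight with a semaphore. The `crawl_concurrently` function and its `fetch` parameter are hypothetical: `fetch` stands in for whatever async HTTP client you use, and none is assumed here.

```python
import asyncio
from typing import Awaitable, Callable, List


async def crawl_concurrently(
    urls: List[str],
    fetch: Callable[[str], Awaitable[str]],
    max_concurrency: int = 5,
) -> List[str]:
    """Run fetch(url) for every URL with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded_fetch(url: str) -> str:
        async with semaphore:  # blocks here while the limit is reached
            return await fetch(url)

    # gather returns results in the same order as the input URLs.
    return await asyncio.gather(*(bounded_fetch(url) for url in urls))
```

This style suits I/O-bound scraping, where most time is spent waiting on the network. For CPU-heavy parsing, a process pool or a compiled language is usually the better fit.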