Want to scrape data from websites easily? Python and BeautifulSoup make it simple for beginners to extract information from web pages. Here's everything you need to know to get started:

- What is Web Scraping? Automating data collection from websites by parsing HTML.

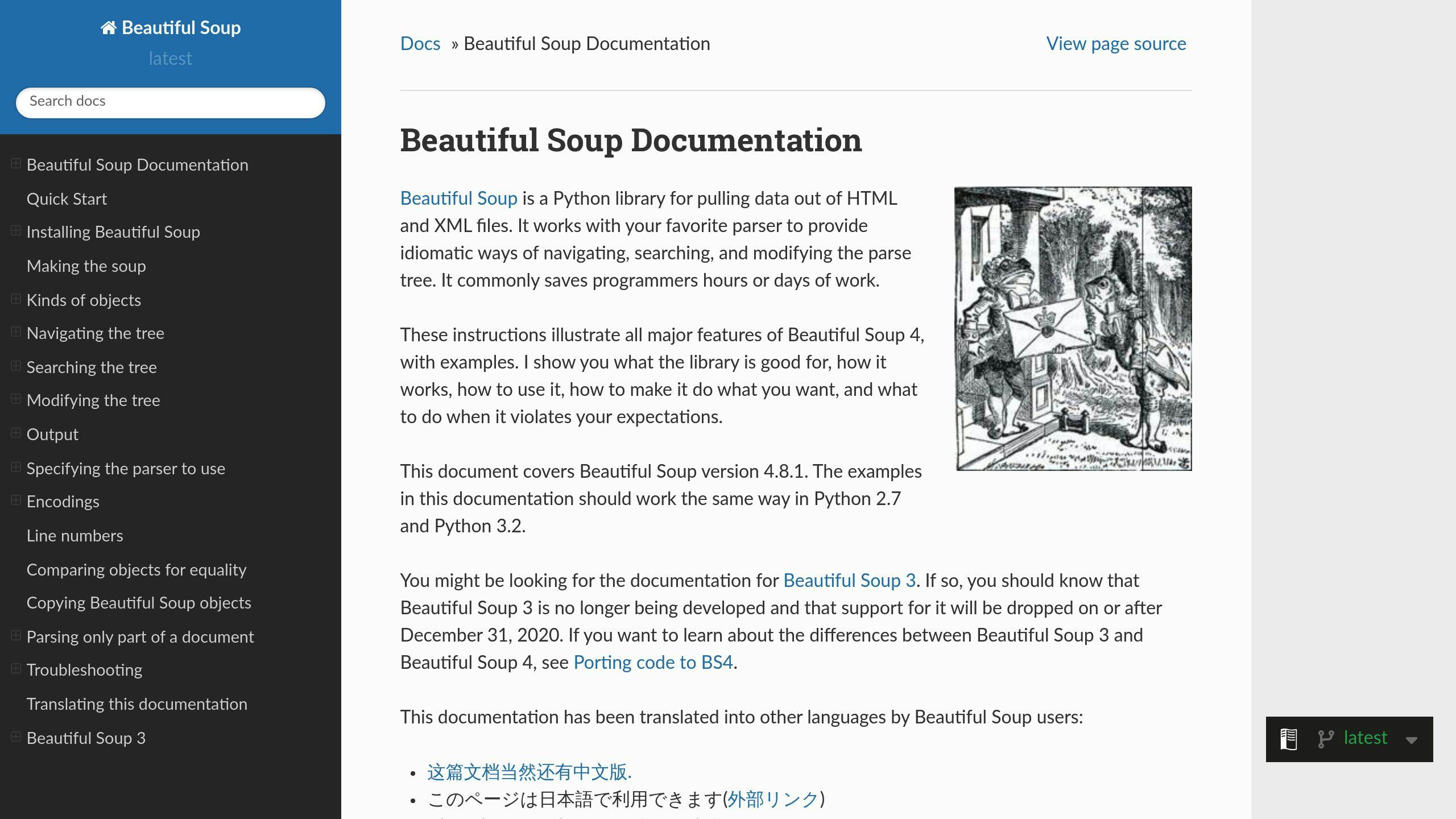

- Why Python and BeautifulSoup? Python’s easy syntax and BeautifulSoup’s HTML parsing tools make them ideal for beginners.

- Steps to Start:
  - Install Python and libraries (requests, beautifulsoup4).
  - Understand HTML structure and CSS selectors.
  - Write a script to send HTTP requests, parse HTML, and extract data.
  - Save your data in formats like JSON or CSV.

- Tips for Success: Respect robots.txt, use delays, and handle errors gracefully.

Quick Example: Scrape titles from Hacker News using Python:

from bs4 import BeautifulSoup

import requests

response = requests.get('https://news.ycombinator.com/')

soup = BeautifulSoup(response.content, 'html.parser')

titles = [title.text for title in soup.select('.titleline > a')]

print(titles)

This guide covers setup, ethical practices, handling dynamic content, and even no-code tools like InstantAPI.ai for faster scraping. Let’s dive in!


Setting Up Your Environment

Before starting with web scraping, it's important to prepare your development environment with the right tools. Here's a step-by-step guide to help you get everything in place.

Installing Python

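First, you'll need Python installed on your system. Download a currently supported Python 3 release from python.org; the 3.6 series reached end of life in 2021, so a newer version is the safer choice.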

- For Windows: Run the downloaded .exe installer.

- For macOS: Use Homebrew with the command: brew install python3

- For Ubuntu/Linux: Use the terminal command: sudo apt install python3

To check if Python is installed, type python --version in your terminal (on macOS and Linux the command is often python3 --version). If installed correctly, you'll see the version number displayed.

Installing Libraries

Once Python is ready, you'll need some libraries to handle web scraping. Start with these two:

- requests for making HTTP requests.

- beautifulsoup4 for parsing HTML content.

Install them using pip in your terminal:

pip install requests

pip install beautifulsoup4

For more advanced parsing, you can also add lxml or html5lib:

pip install lxml html5lib

Setting Up a Virtual Environment

A virtual environment helps keep your projects organized by isolating dependencies, avoiding potential conflicts between libraries.

To create and activate a virtual environment, follow these commands:

- Windows:

python -m venv scraper_env

scraper_env\Scripts\activate.bat

- macOS/Linux:

python -m venv scraper_env

source scraper_env/bin/activate

Once the virtual environment is activated, install the required libraries as described earlier. To save your project's dependencies, run:

pip freeze > requirements.txt

When you're done working, exit the virtual environment by typing:

deactivate

With your setup complete, you're ready to explore the structure of web pages and start extracting the data you need.
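Before moving on, it can help to confirm that the environment looks the way you expect. The following is a minimal sketch using only the standard library; the helper names in_virtualenv and missing_packages are made up for this illustration and are not part of any library.

```python
import importlib.util
import sys


def in_virtualenv():
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base installation.
    return sys.prefix != sys.base_prefix


def missing_packages(import_names):
    """Return the top-level import names that cannot be found."""
    return [name for name in import_names
            if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    print("Python version:", sys.version.split()[0])
    print("Virtual environment active:", in_virtualenv())
    # beautifulsoup4 is imported under the name "bs4".
    print("Missing packages:", missing_packages(["requests", "bs4"]))
```

An empty "Missing packages" list suggests that both libraries were installed into the active environment.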

Understanding HTML and CSS Selectors

Before diving into data extraction, it's important to grasp how web pages are structured and how to pinpoint specific elements.

HTML Structure Basics

HTML documents are organized like a tree, starting with the <html> root that contains <head> and <body> sections. Each element is defined by tags and can include attributes to provide extra details:

<html>
  <head>
    <title>Page Title</title>
  </head>
  <body>
    <div class="content">
      <p id="intro">Introduction text</p>
    </div>
  </body>
</html>
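To see how this tree maps onto BeautifulSoup objects, here is a small sketch that parses the same sample document from a string, so no network request is involved. The variable names are illustrative only.

```python
from bs4 import BeautifulSoup

html = """
<html>
  <head>
    <title>Page Title</title>
  </head>
  <body>
    <div class="content">
      <p id="intro">Introduction text</p>
    </div>
  </body>
</html>
"""

soup = BeautifulSoup(html, "html.parser")

page_title = soup.title.string          # text inside <title>
intro = soup.find("p", id="intro")      # first <p> with id="intro"
parent_classes = intro.parent["class"]  # class attribute of the enclosing <div>

print(page_title)
print(intro.get_text())
print(parent_classes)
```

Note that class is a multi-valued attribute, so BeautifulSoup returns it as a list (here ['content']) rather than a plain string.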

Using CSS Selectors for Data Extraction

CSS selectors are handy tools for identifying specific elements. BeautifulSoup's select() method allows you to use these selectors effectively:

- Basic Selectors
  - Tag name: soup.select('p') finds all <p> elements in the document.
  - Class name: soup.select('.article') targets elements with class="article".
  - ID: soup.select('#header') locates the element with id="header".

- Combination Selectors
  - Descendant: soup.select('div p') finds <p> elements nested inside <div> elements.
  - Direct child: soup.select('div > p') identifies <p> elements that are direct children of <div> elements.

For example, to extract and display all article titles with the class title from a webpage:

from bs4 import BeautifulSoup
import requests

url = "https://example.com"
response = requests.get(url)
soup = BeautifulSoup(response.content, 'html.parser')

# Elements with class "title" nested anywhere inside an <article>
titles = soup.select('article .title')
for title in titles:
    print(title.text.strip())

You can also create more specific selectors by combining attributes:

nav_links = soup.select('nav.main-menu a[href^="https"]')
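The attribute selector can be tried out on a small inline document. The sketch below assumes made-up navigation markup (the class names and URLs are placeholders) and also shows select_one(), which returns a single element or None.

```python
from bs4 import BeautifulSoup

html = """
<nav class="main-menu">
  <a href="https://example.com/docs">Docs</a>
  <a href="/about">About</a>
  <a href="https://example.com/blog">Blog</a>
</nav>
<nav class="footer">
  <a href="https://example.com/legal">Legal</a>
</nav>
"""

soup = BeautifulSoup(html, "html.parser")

# Links inside nav.main-menu whose href starts with "https".
nav_links = soup.select('nav.main-menu a[href^="https"]')
link_targets = [a["href"] for a in nav_links]

# select_one() returns the first match, or None when nothing matches.
first_link = soup.select_one("nav.main-menu a")
missing = soup.select_one("nav.sidebar a")

print(link_targets)
print(first_link.get_text())
print(missing)
```

Checking for None before using the result of select_one() avoids an AttributeError when a page lacks the element you expect.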

Tips for Success:

- Use unique attributes like IDs or specific classes to create precise selectors.

- Test your selectors in your browser's developer tools (F12) to ensure accuracy.

- Parse each page once and reuse the resulting BeautifulSoup object for all of your queries instead of re-parsing the same HTML.

Mastering HTML and CSS selectors is key to building efficient and maintainable web scrapers. With this knowledge, you're well-equipped to start crafting your own scraper.


Creating Your First Web Scraper

With a solid understanding of HTML structure and CSS selectors, it's time to build a web scraper. We'll use Hacker News as our example because of its straightforward HTML layout and its compatibility with responsible scraping practices.

Sending HTTP Requests

To retrieve webpage content, we'll use the requests library. Including a User-Agent header helps mimic browser behavior and reduces the chances of getting blocked:

import requests

headers = {
    'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36 (KHTML, like Gecko) Chrome/107.0.0.0 Safari/537.36'
}

# A timeout keeps the script from hanging if the server never responds
response = requests.get('https://news.ycombinator.com/', headers=headers, timeout=10)

if response.status_code == 200:
    html_content = response.content
else:
    print(f"Failed to retrieve the webpage: {response.status_code}")

Parsing HTML with BeautifulSoup

Once you've fetched the HTML content, the next step is parsing it into a manageable format. For this, we'll use the BeautifulSoup library:

from bs4 import BeautifulSoup

soup = BeautifulSoup(html_content, 'html.parser')

html.parser is the parser that ships with Python's standard library, so it needs no extra installation. If lxml is installed, you can pass 'lxml' instead for faster parsing of large documents.
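One way to take advantage of lxml without making it a hard requirement is to fall back to the built-in parser when it is missing. This is a sketch under that assumption; make_soup is a hypothetical helper name, while FeatureNotFound is the exception BeautifulSoup raises when the requested parser is unavailable.

```python
from bs4 import BeautifulSoup, FeatureNotFound


def make_soup(markup):
    """Parse markup with lxml when available, else with html.parser."""
    try:
        return BeautifulSoup(markup, "lxml")
    except FeatureNotFound:
        # lxml is not installed; fall back to the standard-library parser.
        return BeautifulSoup(markup, "html.parser")


soup = make_soup("<p class='note'>Hello, <b>parser</b></p>")
print(soup.find("p").get_text())
```

Different parsers can build slightly different trees from malformed HTML, so it is worth testing your selectors with whichever parser you end up using.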

Extracting Data with BeautifulSoup

Now, let's create a script to extract stories from Hacker News:

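def extract_hn_stories():
    """Collect title, link, and points for each story in the parsed page."""
    stories = []
    # Each story is a <tr class="athing"> row; this uses the soup object created above
    articles = soup.find_all('tr', class_='athing')

    for article in articles:
        titleline = article.find('span', class_='titleline')
        anchor = titleline.find('a') if titleline else None
        if anchor is None:
            continue  # skip rows without a title link
        # Read the title from the link itself so any site label in the same span is excluded
        title = anchor.text.strip()
        link = anchor['href']

        # Get the following sibling row for points and comments
        subtext = article.find_next_sibling('tr')
        points = subtext.find('span', class_='score') if subtext else None
        points = points.text.split()[0] if points else '0'

        stories.append({
            'title': title,
            'link': link,
            'points': points
        })

    return stories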

This script uses key BeautifulSoup methods like find_all() to locate multiple elements, find() for individual elements, and find_next_sibling() to navigate related elements.

To handle unexpected errors during scraping, include error handling:

try:
    stories = extract_hn_stories()
    print(f"Successfully scraped {len(stories)} stories")
except Exception as e:
    print(f"An error occurred: {e}")

Saving Your Scraped Data

Once your scraper is working, save the data for future use. Here's how to store it in a JSON file:

import json

with open('hn_stories.json', 'w', encoding='utf-8') as f:
    json.dump(stories, f, ensure_ascii=False, indent=2)

This saves your data in a structured format, making it easy to analyze or integrate into other applications.
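If you would rather open the results in a spreadsheet, the same list of dictionaries can be written as CSV with the standard library. The sketch below assumes records with the title, link, and points keys used above; write_stories_csv and the sample records are illustrative, not real scraper output.

```python
import csv
import io


def write_stories_csv(stories, file_obj):
    """Write a list of story dictionaries to an open text file as CSV."""
    writer = csv.DictWriter(file_obj, fieldnames=["title", "link", "points"])
    writer.writeheader()
    writer.writerows(stories)


# Sample records standing in for real scraper output.
sample_stories = [
    {"title": "First story", "link": "https://example.com/1", "points": "120"},
    {"title": "Second, with a comma", "link": "https://example.com/2", "points": "0"},
]

buffer = io.StringIO()
write_stories_csv(sample_stories, buffer)
csv_text = buffer.getvalue()
print(csv_text)

# Writing to disk instead: pass newline="" so the csv module controls line endings.
# with open("hn_stories.csv", "w", newline="", encoding="utf-8") as f:
#     write_stories_csv(sample_stories, f)
```

The csv module quotes fields that contain commas, which is why building CSV rows by hand with string joins tends to break on real titles.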

Tips for Responsible Scraping

- Add delays between requests to avoid overloading servers.

- Implement retries for failed requests.

- Cache responses during development to minimize repeated requests.

- Always review the website's robots.txt file to ensure compliance with its scraping policies.
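The delay and retry tips can be combined into a small helper. This is only a sketch: fetch_with_retries is a hypothetical name, it retries on any exception, and the flaky_fetch function merely simulates a server that fails twice so the example runs without network access.

```python
import time


def fetch_with_retries(fetch, url, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call fetch(url), retrying with exponential backoff on any exception."""
    for attempt in range(1, attempts + 1):
        try:
            return fetch(url)
        except Exception:
            if attempt == attempts:
                raise  # out of attempts: surface the last error
            # Wait 1x, 2x, 4x ... the base delay before trying again.
            sleep(base_delay * 2 ** (attempt - 1))


# Demonstration with a stand-in fetch function that fails twice, then succeeds.
calls = {"count": 0}


def flaky_fetch(url):
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return f"content of {url}"


waits = []  # record the delays instead of actually sleeping
result = fetch_with_retries(flaky_fetch, "https://example.com", sleep=waits.append)
print(result, waits)
```

With requests, the fetch argument could be a small function that calls requests.get(url, timeout=10) followed by response.raise_for_status(), so that HTTP error statuses also trigger a retry.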

Best Practices and Common Challenges in Web Scraping

Respecting Robots.txt and Terms of Service

The robots.txt file outlines which parts of a site web crawlers can access. You can check it by appending /robots.txt to the site's root URL.

Here’s a Python snippet to ensure compliance with robots.txt:

from urllib.robotparser import RobotFileParser
from urllib.parse import urlparse

def can_scrape_url(url):
    """Return True if the site's robots.txt allows any crawler to fetch url."""
    parsed = urlparse(url)
    domain = parsed.scheme + "://" + parsed.netloc

    rp = RobotFileParser()
    rp.set_url(domain + "/robots.txt")
    rp.read()  # downloads and parses robots.txt
    return rp.can_fetch("*", url)
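To see how the rules are evaluated without contacting a server, RobotFileParser can also parse rules supplied as a list of lines. The rules below are invented for illustration; a real scraper would load them with set_url() and read() as shown above.

```python
from urllib.robotparser import RobotFileParser

# Example rules; these are placeholders, not taken from a real site.
robots_lines = [
    "User-agent: *",
    "Crawl-delay: 5",
    "Disallow: /private/",
]

rp = RobotFileParser()
rp.parse(robots_lines)

allowed = rp.can_fetch("*", "https://example.com/articles/1")
blocked = rp.can_fetch("*", "https://example.com/private/report")
delay = rp.crawl_delay("*")  # None when no Crawl-delay is declared

print(allowed, blocked, delay)
```

When a site declares a crawl delay, using it as the minimum pause between your requests is a reasonable way to honor the site's stated preference.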

Managing Dynamic Content and Pagination

Scraping modern websites often involves dealing with dynamic content and pagination. For content loaded via JavaScript, tools like Selenium can help. Here's how to use Selenium to wait for dynamic elements:

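from selenium import webdriver
from selenium.webdriver.common.by import By
from selenium.webdriver.support.ui import WebDriverWait
from selenium.webdriver.support import expected_conditions as EC

url = "https://example.com"  # placeholder: the page you want to scrape

driver = webdriver.Chrome()
try:
    driver.get(url)
    # Wait up to 10 seconds for dynamic content to load
    element = WebDriverWait(driver, 10).until(
        EC.presence_of_element_located((By.CLASS_NAME, "content"))
    )
    # Extract what you need from element here, before the browser closes
finally:
    driver.quit()  # always close the browser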

For pagination, iterate through pages systematically:

import time
import requests

# headers and extract_data() are assumed to be defined elsewhere in your script
def scrape_all_pages(base_url, max_pages=10):
    all_data = []

    for page in range(1, max_pages + 1):
        url = f"{base_url}?page={page}"

        # Add delay between requests
        time.sleep(2)

        try:
            response = requests.get(url, headers=headers, timeout=10)
            if response.status_code != 200:
                break

            # Process page data
            page_data = extract_data(response.content)
            all_data.extend(page_data)
        except Exception as e:
            print(f"Error on page {page}: {e}")
            break

    return all_data
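Appending ?page=N works when the base URL has no query string, but it produces an invalid URL if one is already present. A possible sketch for building page URLs more defensively with the standard library follows; page_url and the parameter name "page" are assumptions, since sites name their pagination parameters differently.

```python
from urllib.parse import parse_qsl, urlencode, urlsplit, urlunsplit


def page_url(base_url, page, param="page"):
    """Return base_url with the page parameter set, keeping other query fields."""
    parts = urlsplit(base_url)
    query = dict(parse_qsl(parts.query))
    query[param] = str(page)
    return urlunsplit(parts._replace(query=urlencode(query)))


print(page_url("https://example.com/items", 2))
print(page_url("https://example.com/items?sort=new", 3))
```

Because the query is held in a dictionary, repeated keys in the original URL collapse to their last value; that is usually acceptable for pagination but worth knowing.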

Saving Scraped Data

Choose a storage format based on your data's structure and use case:

| Storage Method | Best For |
|---|---|
| CSV | Simple tabular data, easy for spreadsheets |
| JSON | Nested data, readable and flexible |
| SQLite | Organized data, supports queries and transactions |

Here’s an example of saving data in multiple formats:

import pandas as pd

# Convert scraped data (a list of dictionaries) to a DataFrame
df = pd.DataFrame(scraped_data)

# Save as a JSON array of records (add lines=True for JSON Lines output) and as CSV
df.to_json('data.json', orient='records')
df.to_csv('data.csv', index=False)
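The table also lists SQLite, which needs no extra installation because the sqlite3 module is part of the standard library. The sketch below assumes story-like records with title, link, and points fields; the table layout and the save_stories helper are illustrative choices, not a prescribed schema.

```python
import sqlite3


def save_stories(conn, stories):
    """Create the stories table if needed and insert the given records."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS stories ("
        "link TEXT PRIMARY KEY, title TEXT NOT NULL, points INTEGER)"
    )
    # Named placeholders let sqlite3 bind values safely from each dict.
    conn.executemany(
        "INSERT OR REPLACE INTO stories (link, title, points) "
        "VALUES (:link, :title, :points)",
        stories,
    )
    conn.commit()


# Sample records standing in for real scraper output.
sample = [
    {"title": "First story", "link": "https://example.com/1", "points": 120},
    {"title": "Second story", "link": "https://example.com/2", "points": 45},
]

conn = sqlite3.connect(":memory:")  # use a file path to persist the data
save_stories(conn, sample)
top = conn.execute(
    "SELECT title, points FROM stories ORDER BY points DESC"
).fetchall()
print(top)
```

Using the link as the primary key together with INSERT OR REPLACE means that re-running the scraper updates existing rows instead of duplicating them.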

"Web scraping is a powerful tool for data extraction, but it must be used responsibly and ethically." - Vivek Kumar Singh, Author, Beautiful Soup Web Scraping Tutorial

Adding Delays and Using Proxies

To avoid overwhelming servers, use delays between requests:

import time
from random import uniform

import requests

# headers is assumed to be the dictionary defined earlier
def make_request(url):
    # Random delay between 1-3 seconds
    time.sleep(uniform(1, 3))
    return requests.get(url, headers=headers)

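For large-scale scraping, rotating User-Agent strings and proxies can help prevent IP bans. The third-party fake_useragent package supplies a random User-Agent string: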

from fake_useragent import UserAgent

ua = UserAgent()

headers = {'User-Agent': ua.random}

Using proxies spreads requests across multiple IPs, reducing the chances of being blocked. With these techniques, you're equipped to tackle more advanced web scraping challenges.
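Proxy rotation itself can be sketched with a simple round-robin pool. The proxy addresses below are placeholders and next_proxies is a hypothetical helper; the returned dictionary follows the shape that the proxies argument of requests expects.

```python
from itertools import cycle

# Placeholder proxy addresses; replace them with proxies you are allowed to use.
PROXY_URLS = [
    "http://proxy1.example.com:8080",
    "http://proxy2.example.com:8080",
]

_proxy_pool = cycle(PROXY_URLS)


def next_proxies():
    """Return a requests-style proxies mapping for the next proxy in the pool."""
    proxy = next(_proxy_pool)
    return {"http": proxy, "https": proxy}


# With requests, the mapping would be passed as:
#   requests.get(url, headers=headers, proxies=next_proxies(), timeout=10)
first = next_proxies()
second = next_proxies()
third = next_proxies()
print(first["https"], second["https"], third["https"])
```

Round-robin is the simplest policy; a production scraper would likely also drop proxies that keep failing.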

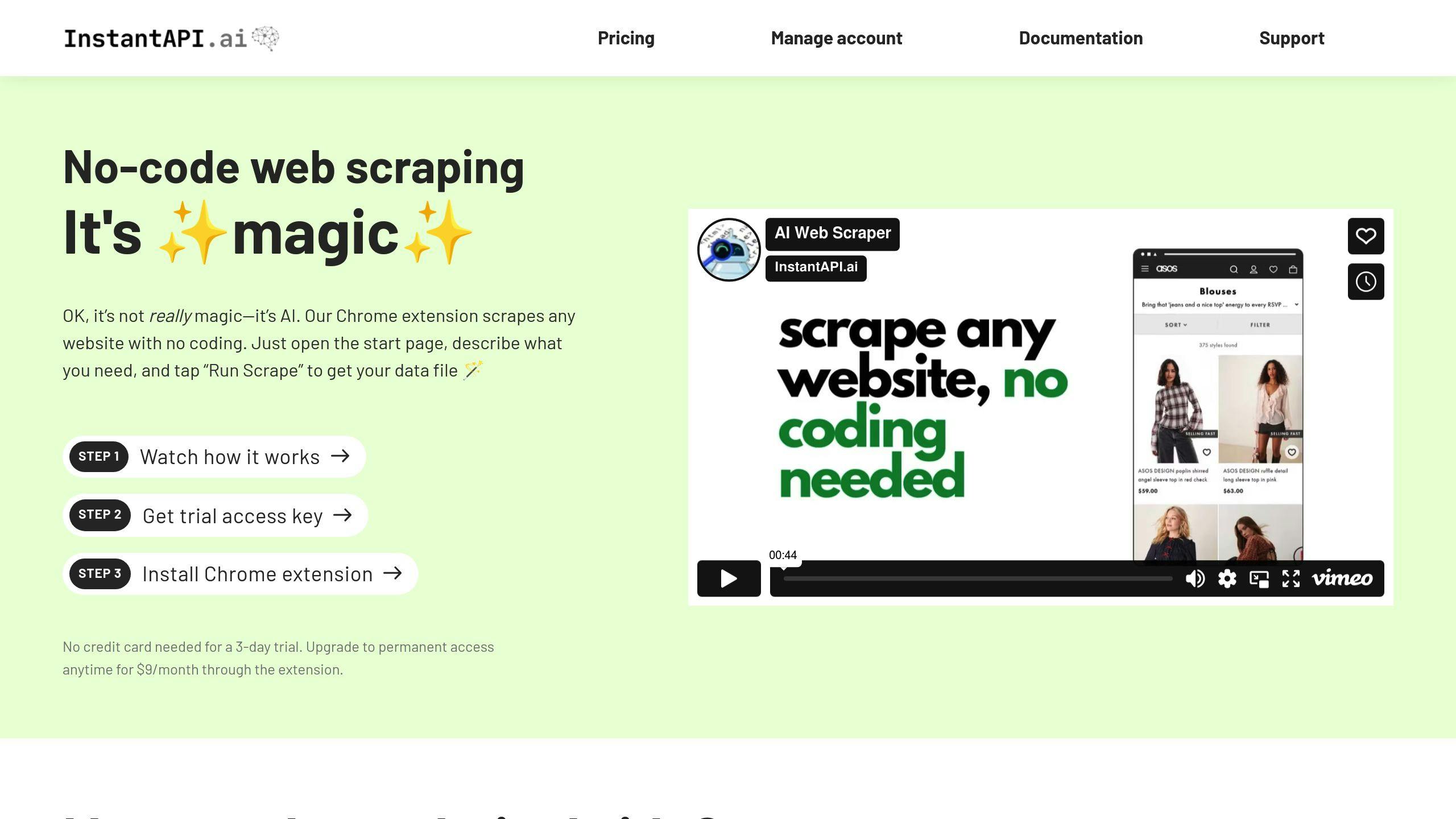

No-Code Web Scraping with InstantAPI.ai

What is InstantAPI.ai?

Building a scraper with tools like Python and BeautifulSoup is a great skill, but what if you’re after speed and simplicity? That’s where InstantAPI.ai steps in. This no-code platform uses AI to make web scraping easier for everyone, even if you have zero programming experience. It automatically scans HTML structures and detects patterns, so you can extract data quickly and efficiently.

What Does InstantAPI.ai Offer?

At the heart of InstantAPI.ai is its Chrome extension, which allows you to extract data with just a few clicks. The AI engine does the heavy lifting, so you don’t have to mess with HTML or CSS selectors manually.

Here’s a closer look at its standout features:

| Feature | What It Does | Why It’s Useful |
|---|---|---|
| Automated Content Handling | Works seamlessly with JavaScript-heavy sites | No need for extras like Selenium |
| Built-in Proxy Management | Prevents IP blocks and rate limiting | Keeps your scrapers running smoothly |
| Self-Updating Scrapers | Automatically adapts to website changes | Saves time on maintenance |
| API Integration | Provides RESTful API access | Easily connects to your existing systems |

It also takes care of tricky tasks like managing dynamic content, dealing with pagination, and cleaning up messy data.

How Much Does It Cost?

InstantAPI.ai keeps pricing simple. The Chrome extension costs $9/month and offers unlimited scraping, making it perfect for individuals or small teams. For larger businesses, enterprise plans include custom support and unlimited API access.

"InstantAPI.ai makes web scraping accessible to everyone while maintaining enterprise-grade reliability", says Anthony Ziebell, founder of InstantAPI.ai.

If you’re looking for a no-code solution that saves time and effort, InstantAPI.ai is worth considering. That said, having a basic understanding of web scraping concepts will always be helpful.

Conclusion and Next Steps

Key Points

This guide has covered the basics of web scraping using Python and BeautifulSoup, giving you the skills to create your first scraper and collect data responsibly. With these tools, you're now equipped to pull data from websites and store it for analysis. If you're looking to scale up your efforts, platforms like InstantAPI.ai offer a code-free option, while traditional programming methods allow for more control and customization.

To deepen your understanding and take on more advanced challenges, consider exploring additional resources.

Further Learning Resources

Here are some helpful resources to boost your web scraping expertise:

| Resource Type | What It Offers |
|---|---|
| Official Documentation | Detailed explanations of core syntax |
| Advanced Libraries | Tools for tackling complex tasks |
| Community Forums | Support and troubleshooting advice |

Next steps to sharpen your skills:

- Start with simple projects, such as scraping static websites.

- Focus on building strong error-handling techniques, including retries for failed requests.

- Dive into advanced topics like dealing with dynamic content or managing pagination.

As you gain experience, try experimenting with new tools and approaches. For instance, you could explore asynchronous scraping using asyncio and aiohttp, or learn how to navigate access restrictions by working with proxies.

Web scraping is constantly evolving. Stay in the loop by engaging with online communities and keeping up with updated documentation. Whether you stick with Python and BeautifulSoup or try no-code tools like InstantAPI.ai, the skills you've picked up here will set you up for success in extracting and working with data.