Automating data extraction saves time, reduces errors, and simplifies complex workflows. Python is a top choice for this, offering tools like BeautifulSoup for small-scale scraping, Scrapy for large-scale projects, and pandas for processing data. Here's what you'll learn:

- Why Python?: Simple syntax, powerful libraries, and scalability.

- Key Tools:

  - BeautifulSoup: Best for parsing static HTML.

  - Scrapy: Handles large, asynchronous scraping projects.

  - pandas: Cleans and organizes data for analysis.

- How to Start:

  - Set up a Python environment with virtual environments and libraries.

  - Use BeautifulSoup for simple scraping or Scrapy for advanced workflows.

  - Automate and schedule tasks with tools like schedule or Airflow.

Python makes every step of data extraction - from fetching to cleaning to integrating - efficient and reliable. Whether you're scraping a single page or managing a large dataset, Python has the tools you need.

Preparing Your Python Environment

Setting up your Python environment properly is key to keeping your data extraction workflows running smoothly. A well-organized setup helps your scripts handle the demands of automation tasks without hiccups. A recent Python 3 release such as 3.12 is a sensible choice, since the libraries used in this guide support it.

Installing Python Libraries

Install the necessary libraries with pip, ideally after activating the virtual environment described in the next section:

pip install beautifulsoup4 requests lxml scrapy pandas pyarrow selenium

These libraries are essential for tasks like parsing HTML, web crawling, and handling data. At the beginning of your script, import the ones you need:

from bs4 import BeautifulSoup

import requests

import pandas as pd

import scrapy

Setting Up a Virtual Environment

To avoid dependency conflicts, create a virtual environment using:

python -m venv scraping-env

Activate it with the appropriate command for your operating system:

- Windows: scraping-env\Scripts\activate

- Unix/Linux/macOS: source scraping-env/bin/activate

This keeps your project dependencies isolated and manageable.
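To confirm that a script is really running inside the activated environment, a quick check can help. The sketch below uses only the standard library; the function name `in_virtualenv` is an illustrative choice, not part of any library.

```python
import sys

def in_virtualenv() -> bool:
    """Return True when the interpreter is running inside a venv."""
    # Inside a venv, sys.prefix points at the environment directory,
    # while sys.base_prefix still points at the base installation.
    return sys.prefix != sys.base_prefix

if __name__ == "__main__":
    print("Virtual environment active:", in_virtualenv())
    print("Interpreter:", sys.executable)
```

If this prints `False` after activation, the script is probably being launched with a different interpreter than the one in `scraping-env`.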

Development Tools and Best Practices

Use an IDE like PyCharm or VS Code to simplify debugging and take advantage of features like code completion. For faster data processing and exporting, combine pyarrow with pandas to work with formats like Parquet.

Pick the right tools for your project:

- BeautifulSoup: Ideal for small projects where simplicity is key.

- Scrapy: Perfect for larger projects that need asynchronous requests.

- pandas: Great for manipulating and analyzing datasets efficiently.

With your environment set up, you're ready to start building scripts using libraries like BeautifulSoup and Scrapy for effective data extraction.

Using BeautifulSoup for Web Scraping

BeautifulSoup is a Python library that simplifies parsing HTML and XML, making it a great choice for smaller or moderately complex web scraping tasks. It's particularly useful for extracting data from static websites or handling straightforward scraping needs.

Fetching Webpage Content

To start scraping, you first need to fetch the webpage's content. Here's how you can do it:

import requests
from bs4 import BeautifulSoup

url = 'https://news.ycombinator.com/'
response = requests.get(url, timeout=10)  # a timeout avoids hanging on a stalled connection

if response.status_code == 200:
    # Passing raw bytes lets BeautifulSoup detect the page encoding itself
    soup = BeautifulSoup(response.content, 'html.parser')
else:
    print(f"Failed to retrieve page: Status code {response.status_code}")

Parsing HTML and Extracting Information

BeautifulSoup offers simple tools to navigate and extract data from HTML. For example, you can extract article titles and links with this code:

# Locate and extract article details
# (Hacker News renders each story as a table row, tr.athing; "> a" skips the site link)
articles = soup.select('tr.athing span.titleline > a')

for article in articles:
    print(f"Title: {article.text.strip()}, Link: {article['href']}")
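Scraped links are often relative (for example `item?id=1`), so it can help to resolve them against the page URL before storing them. A minimal sketch using the standard library; the helper name `absolutize` and the sample links are assumptions for illustration.

```python
from urllib.parse import urljoin

def absolutize(base_url: str, hrefs: list[str]) -> list[str]:
    """Resolve each href against base_url; absolute URLs pass through unchanged."""
    return [urljoin(base_url, href) for href in hrefs]

base = "https://news.ycombinator.com/"
links = ["item?id=1", "https://example.com/post", "/newest"]
print(absolutize(base, links))
```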

You can also use more specific selectors to fine-tune your data extraction. The class names and ids below are placeholders to adapt to your target page:

# Use CSS selectors for precise targeting

elements = soup.select('div.story > span.titleline > a')

# Find elements with specific attributes

data = soup.find('div', attrs={'class': 'content', 'id': 'main'})

Scraping Multi-Page and Dynamic Websites

For scraping data across multiple pages, you can set up a simple pagination process:

import time
from random import randint

for page in range(1, 6):
    response = requests.get(f'https://example.com/page/{page}')
    time.sleep(randint(2, 5))  # Add random delays to mimic human activity and reduce server load
    soup = BeautifulSoup(response.content, 'html.parser')
    # Add your scraping logic here
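The loop above assumes a fixed number of pages. When the page count is unknown, one common approach is to keep requesting pages until one comes back empty. The sketch below shows that stop condition in isolation; `paginate` and `fake_fetch` are hypothetical names, and `fake_fetch` stands in for a real fetch-and-parse function.

```python
from typing import Callable, Iterator

def paginate(fetch_page: Callable[[int], list], max_pages: int = 50) -> Iterator[list]:
    """Yield the items of each page, stopping at the first empty page."""
    for page in range(1, max_pages + 1):
        items = fetch_page(page)
        if not items:  # an empty page means we ran past the last one
            break
        yield items

# Stand-in for a real fetch-and-parse function: three pages of fake data.
def fake_fetch(page: int) -> list:
    data = {1: ["a", "b"], 2: ["c"], 3: ["d", "e"]}
    return data.get(page, [])

all_items = [item for page_items in paginate(fake_fetch) for item in page_items]
print(all_items)
```

The `max_pages` cap is a safety net so that a site which never returns an empty page cannot keep the loop running forever.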

For websites that use JavaScript to load content, you'll need to pair BeautifulSoup with Selenium for better results:

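from selenium import webdriver
from selenium.webdriver.chrome.service import Service
from webdriver_manager.chrome import ChromeDriverManager  # pip install webdriver-manager

driver = webdriver.Chrome(service=Service(ChromeDriverManager().install()))
driver.get(url)
soup = BeautifulSoup(driver.page_source, 'html.parser')
driver.quit()  # close the browser once the HTML has been captured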

BeautifulSoup is perfect for small-scale projects, but if you're dealing with more complex scraping tasks, you might want to consider a more advanced framework like Scrapy, which we'll discuss next.

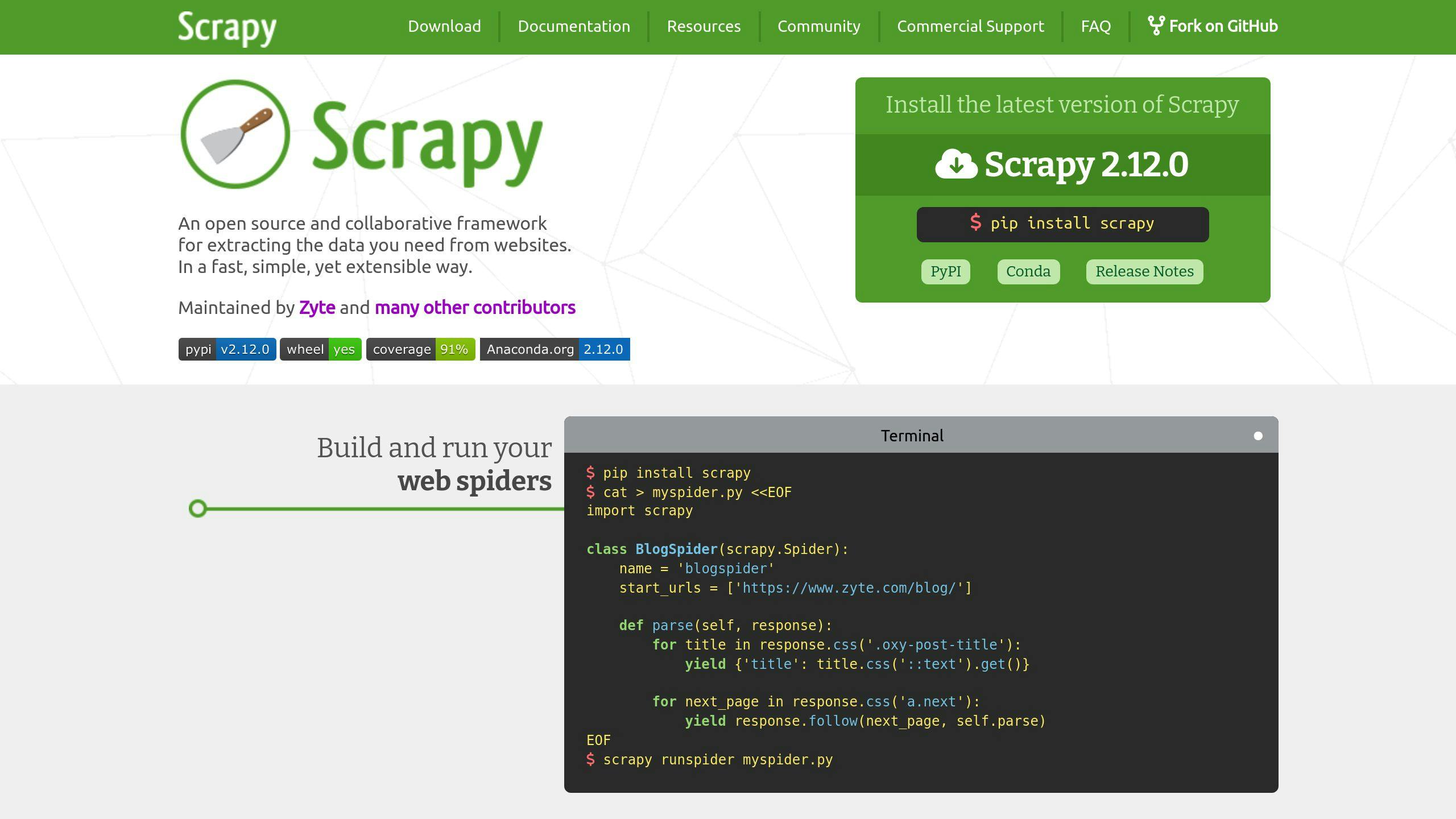

Scraping Complex Websites with Scrapy

Scrapy is a powerful tool for large-scale web scraping, especially when dealing with complex workflows. Its ability to handle numerous requests simultaneously makes it ideal for extracting data from websites efficiently.

Starting a Scrapy Project

To begin with Scrapy, you need to set up a project that organizes your spiders and configurations. Here's how to get started:

# Create a new Scrapy project

scrapy startproject ecommerce_scraper

# Generate a spider

cd ecommerce_scraper

scrapy genspider products example.com

Your spider file will look something like this:

import scrapy

class ProductsSpider(scrapy.Spider):
    name = 'products'
    allowed_domains = ['example.com']
    start_urls = ['https://example.com/products']

    def parse(self, response):
        # Extract product details
        products = response.css('div.product-item')
        for product in products:
            yield {
                'name': product.css('h2.title::text').get(),
                'price': product.css('span.price::text').get(),
                'url': product.css('a.product-link::attr(href)').get()
            }

Organizing Data with Items and Pipelines

Scrapy uses Items to structure your data and Pipelines to process it. These components work together to ensure your scraped data is clean and ready for use. Here's an example:

# items.py
import scrapy

class ProductItem(scrapy.Item):
    name = scrapy.Field()
    price = scrapy.Field()
    url = scrapy.Field()
    timestamp = scrapy.Field()

# pipelines.py
from datetime import datetime

class CleaningPipeline:
    def process_item(self, item, spider):
        # Assumes prices look like "$19.99"; the price is None when the selector finds nothing
        if item.get('price'):
            item['price'] = float(item['price'].replace('$', '').strip())
        item['timestamp'] = datetime.now()
        return item

# settings.py
ITEM_PIPELINES = {
    'ecommerce_scraper.pipelines.CleaningPipeline': 300,
}
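Real price strings are often messier than a plain `$19.99`. As a rough sketch, a small helper can tolerate missing values, thousands separators, and stray text. The name `parse_price` is hypothetical, and the code assumes US-style formatting (comma for thousands, dot for decimals).

```python
import re
from typing import Optional

def parse_price(raw: Optional[str]) -> Optional[float]:
    """Convert text such as '$1,299.99' to a float; return None if no number is found."""
    if raw is None:
        return None
    # Drop thousands separators, then take the first number in the string.
    match = re.search(r"\d+(?:\.\d+)?", raw.replace(",", ""))
    return float(match.group()) if match else None

for sample in ["$1,299.99", " $19 ", "N/A", None]:
    print(repr(sample), "->", parse_price(sample))
```

Inside `CleaningPipeline`, calling `parse_price(item.get('price'))` would be one way to avoid an exception when a product has no price element.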

Handling JavaScript and Other Challenges

Dynamic, JavaScript-driven websites can be tricky to scrape. Scrapy works with Splash, a lightweight browser emulator, to handle such cases:

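import scrapy
from scrapy_splash import SplashRequest  # pip install scrapy-splash; needs a running Splash instance

class DynamicSpider(scrapy.Spider):
    name = 'dynamic'

    def start_requests(self):
        urls = ['https://example.com/dynamic-content']
        for url in urls:
            # 'wait' gives the page's JavaScript a moment to run before rendering
            yield SplashRequest(url, self.parse, args={'wait': 1})

    def parse(self, response):
        # The response now contains the rendered HTML
        yield {'title': response.css('title::text').get()}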

Additionally, you can tweak Scrapy's settings to improve performance and ensure responsible scraping:

# settings.py

CONCURRENT_REQUESTS = 16

DOWNLOAD_DELAY = 1.5

ROBOTSTXT_OBEY = True
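Scrapy also ships an AutoThrottle extension that adjusts the download delay based on how quickly the server responds. The fragment below sketches how it might be enabled alongside the settings above; the values are illustrative starting points, not tuned recommendations.

```python
# settings.py (illustrative values; tune them for the target site)
AUTOTHROTTLE_ENABLED = True            # adapt the delay to observed latency
AUTOTHROTTLE_START_DELAY = 1.0         # initial download delay in seconds
AUTOTHROTTLE_MAX_DELAY = 30.0          # upper bound when the server is slow
AUTOTHROTTLE_TARGET_CONCURRENCY = 2.0  # average parallel requests per remote server
CONCURRENT_REQUESTS_PER_DOMAIN = 8     # hard cap per domain
RETRY_TIMES = 3                        # retries in addition to the first attempt
```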

With these tools and configurations, you can extract data efficiently, even from challenging websites. Next, we’ll dive into how to clean and format the data for further use.

Processing and Using Extracted Data

After scraping data successfully, the next steps involve cleaning, formatting, and integrating it into your workflows. Properly cleaned and formatted data ensures the information you’ve collected is ready for practical use.

Cleaning and Formatting Data with pandas

The pandas library makes it easier to clean and organize your scraped data. Here's an example of how to handle missing values, duplicates, and data types:

import pandas as pd

# Remove rows with missing values in critical columns

df = df.dropna(subset=['critical_columns'])

# Fill missing values in optional columns with default values

df = df.fillna({'optional_columns': 'default_value'})

# Remove duplicate rows based on a unique identifier

df = df.drop_duplicates(subset=['unique_identifier'])

# Convert data types for consistency

df['price'] = df['price'].astype(float)

df['date'] = pd.to_datetime(df['date'])

Saving Data in Different Formats

To ensure compatibility with various tools, export your data in formats that suit your needs. pandas supports several options:

# Save as CSV (compact and widely supported)

df.to_csv('extracted_data.csv', index=False)

# Save as JSON (ideal for web applications)

df.to_json('extracted_data.json', orient='records')

# Save as Excel (requires the openpyxl package)

df.to_excel('extracted_data.xlsx', sheet_name='Extracted Data', index=False)

Integrating Data into Applications

To use your data in applications, load it into databases or APIs. Here's an example of how to integrate data into a PostgreSQL database:

from sqlalchemy import create_engine

# Establish a database connection

engine = create_engine('postgresql://user:password@localhost:5432/database')

# Write DataFrame to a SQL table

df.to_sql('extracted_data', engine, if_exists='replace', index=False)

For larger datasets, handle potential errors during insertion:

try:
    # chunksize writes rows in batches instead of one large statement
    df.to_sql('extracted_data', engine, if_exists='append', index=False, chunksize=1000)
except Exception as e:
    print(f"Error during database insertion: {e}")
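The same insert-with-error-handling idea can be tried without a database server. The sketch below swaps PostgreSQL for the standard library's `sqlite3` with an in-memory database, so the schema, the helper name `insert_rows`, and the sample rows are assumptions for illustration only. Each batch runs in a single transaction, so a failure leaves the table unchanged.

```python
import sqlite3

def insert_rows(conn: sqlite3.Connection, rows: list[tuple]) -> int:
    """Insert rows atomically; return how many were written (0 on failure)."""
    try:
        with conn:  # commits on success, rolls back if an exception is raised
            conn.executemany(
                "INSERT INTO extracted_data (name, price) VALUES (?, ?)", rows
            )
        return len(rows)
    except sqlite3.Error as exc:
        print(f"Error during database insertion: {exc}")
        return 0

conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE extracted_data (name TEXT NOT NULL, price REAL)")

good = insert_rows(conn, [("widget", 9.99), ("gadget", 24.5)])
bad = insert_rows(conn, [("gizmo", 5.0), (None, 1.0)])  # violates NOT NULL
count = conn.execute("SELECT COUNT(*) FROM extracted_data").fetchone()[0]
print(good, bad, count)
```

Because the second batch is rolled back as a whole, the valid `gizmo` row is not written either; whether that all-or-nothing behavior is desirable depends on the workflow.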

With your data cleaned, formatted, and integrated, you’re ready to streamline your workflows and make the most of the information you've gathered.


Automating Data Extraction Workflows

Once you've extracted and processed your data, the next logical step is automating these workflows. Automation ensures consistent performance, reduces errors by up to 70%, and minimizes manual effort.

Combining Scripts into a Workflow

Python provides excellent tools to combine multiple scripts into smooth workflows. For simpler tasks, the subprocess module works well. For more complex workflows - like those with dependencies or advanced scheduling - Apache Airflow is a great choice. Here's how you can use both:

Using subprocess for basic automation:

import subprocess
import sys

def run_workflow():
    # Scrape, process, then load into the database, in that order
    steps = ['scraper.py', 'data_processor.py', 'database_loader.py']
    for script in steps:
        try:
            # sys.executable runs each step with the current interpreter and environment
            subprocess.run([sys.executable, script], check=True)
        except subprocess.CalledProcessError as e:
            print(f"{script} failed with exit code {e.returncode}")
            raise  # stop here so later steps don't run on incomplete data

For more advanced workflows, Airflow can help:

from airflow import DAG
from airflow.operators.python import PythonOperator  # import path for Airflow 2.x
from datetime import datetime, timedelta

def scrape_function():
    # Placeholder: call your scraping code here
    ...

default_args = {
    'owner': 'data_team',
    'start_date': datetime(2025, 2, 3),
    'retries': 3,
    'retry_delay': timedelta(minutes=5)
}

dag = DAG('data_extraction_workflow',
          default_args=default_args,
          schedule_interval='@daily')

scrape_task = PythonOperator(
    task_id='scrape_data',
    python_callable=scrape_function,
    dag=dag
)

No matter the method you choose, adding proper error handling and logging is crucial to keep workflows running smoothly.

Managing Errors and Logs

Good logging practices help you monitor and troubleshoot your workflows effectively. Here's an example of setting up logging:

import logging
from logging.handlers import RotatingFileHandler

handler = RotatingFileHandler(
    'extraction_workflow.log',
    maxBytes=10000000,  # 10MB
    backupCount=5
)

logging.basicConfig(
    level=logging.INFO,
    format='%(asctime)s - %(name)s - %(levelname)s - %(message)s',
    handlers=[handler]
)

logger = logging.getLogger('extraction_workflow')

try:
    logger.info("Data extraction started")
    # ... extraction process ...
except Exception as e:
    logger.exception(f"Extraction failed: {e}")  # logs at ERROR level with the traceback
    retry_extraction()  # placeholder for your own retry logic

This setup ensures you have detailed records of your workflow's performance and any issues that arise.
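The `retry_extraction()` call in the example is left undefined. One possible shape for such a helper is a retry loop with exponential backoff. In the sketch below, the name `run_with_retries`, its parameters, and the `flaky` demo task are all assumptions for illustration.

```python
import logging
import time

logger = logging.getLogger("extraction_workflow")

def run_with_retries(task, attempts=3, base_delay=1.0, sleep=time.sleep):
    """Call task(); after each failure wait base_delay * 2**n, up to `attempts` tries."""
    for attempt in range(1, attempts + 1):
        try:
            return task()
        except Exception:
            logger.exception("Attempt %d of %d failed", attempt, attempts)
            if attempt == attempts:
                raise  # out of retries: surface the last error
            sleep(base_delay * 2 ** (attempt - 1))

# Demo: a task that fails twice, then succeeds.
calls = {"n": 0}

def flaky():
    calls["n"] += 1
    if calls["n"] < 3:
        raise RuntimeError("temporary failure")
    return "ok"

delays = []  # record the waits instead of actually sleeping
result = run_with_retries(flaky, attempts=3, base_delay=0.5, sleep=delays.append)
print(result, delays)
```

Passing `sleep` as a parameter keeps the helper easy to test; in production the default `time.sleep` would apply the real delays.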

Scheduling Tasks Automatically

To automate task scheduling in a platform-independent way, Python's schedule library is a simple yet effective tool:

import schedule
import time

def job():
    try:
        run_workflow()
    except Exception as e:
        print(f"Scheduled job failed: {str(e)}")

# Schedule the job to run daily at 2 AM
schedule.every().day.at("02:00").do(job)

while True:
    schedule.run_pending()
    time.sleep(60)

With workflows automated and tasks scheduled, you can shift your focus to analyzing the extracted data and driving insights.
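Conceptually, a daily schedule comes down to working out when the next run is due and waiting until then. The standard-library sketch below illustrates that calculation; `seconds_until` is a hypothetical helper, not part of the schedule library, and it uses naive datetimes, so daylight saving changes are ignored.

```python
from datetime import datetime, timedelta

def seconds_until(hour: int, minute: int, now: datetime) -> float:
    """Seconds from `now` until the next occurrence of hour:minute."""
    target = now.replace(hour=hour, minute=minute, second=0, microsecond=0)
    if target <= now:  # today's slot has already passed: use tomorrow's
        target += timedelta(days=1)
    return (target - now).total_seconds()

before = seconds_until(2, 0, datetime(2025, 2, 3, 1, 30))  # 30 minutes before 02:00
at_time = seconds_until(2, 0, datetime(2025, 2, 3, 2, 0))  # exactly 02:00
print(before, at_time)
```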

Guidelines for Ethical and Scalable Data Extraction

To keep your automated workflows running smoothly and responsibly, it's important to follow ethical practices while optimizing performance. Ethical data extraction ensures a balance between efficiency and accountability, building on the scraping methods introduced with tools like BeautifulSoup and Scrapy.

Respecting Website Policies

Ethical scraping starts with adhering to website rules, such as those outlined in robots.txt files. Python's RobotFileParser can help you check permissions before scraping:

from urllib.robotparser import RobotFileParser
from urllib.parse import urlparse

def check_robots_txt(url, user_agent):
    rp = RobotFileParser()
    parsed = urlparse(url)
    rp.set_url(f"{parsed.scheme}://{parsed.netloc}/robots.txt")
    rp.read()
    return rp.can_fetch(user_agent, url)
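To see how the rules are interpreted without making a network request, `RobotFileParser` can also parse rules supplied as a list of lines. The robots.txt content and the `my-scraper` user agent below are made up for this example.

```python
from urllib.robotparser import RobotFileParser

# Sample rules, invented for illustration.
robots_lines = [
    "User-agent: *",
    "Disallow: /private/",
    "Crawl-delay: 5",
]

rp = RobotFileParser()
rp.parse(robots_lines)  # parse rules directly instead of fetching them

allowed = rp.can_fetch("my-scraper", "https://example.com/products")
blocked = rp.can_fetch("my-scraper", "https://example.com/private/data")
delay = rp.crawl_delay("my-scraper")
print(allowed, blocked, delay)
```

When a site declares a `Crawl-delay`, using it as the minimum pause between requests is a reasonable default.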

Once you've confirmed that scraping is allowed, focus on techniques that maintain efficiency while reducing the risk of detection.

Using Proxies and Rate Limiting

To scrape responsibly, it's crucial to manage your request frequency and use proxies. Here's an example of proxy rotation with rate limiting:

import requests
from time import sleep
import random

class ProxyRotator:
    def __init__(self, proxy_list):
        self.proxies = proxy_list
        self.current = 0

    def get_proxy(self):
        # Cycle through the proxy list in round-robin order
        proxy = self.proxies[self.current]
        self.current = (self.current + 1) % len(self.proxies)
        return proxy

    def make_request(self, url):
        proxy = self.get_proxy()
        sleep(random.uniform(1, 3))  # Add a random delay between requests
        return requests.get(url, proxies={'http': proxy, 'https': proxy}, timeout=10)

This approach helps you avoid overwhelming servers and reduces the chances of being blocked.
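A fixed random sleep before every request works, but it also waits when plenty of time has already passed since the last call. As an alternative sketch, a small limiter can enforce a minimum interval and only sleep for the remainder. The class name `RateLimiter` and its parameters are assumptions, and the clock and sleep functions are injectable so the behavior can be tested without real delays.

```python
import time

class RateLimiter:
    """Enforce a minimum interval between consecutive calls to wait()."""

    def __init__(self, min_interval: float, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last = None  # time of the previous request, if any

    def wait(self) -> float:
        """Sleep just long enough to respect min_interval; return the time slept."""
        now = self._clock()
        pause = 0.0
        if self._last is not None:
            pause = max(0.0, self.min_interval - (now - self._last))
        if pause:
            self._sleep(pause)
        self._last = now + pause
        return pause

limiter = RateLimiter(min_interval=0.05)
pauses = [limiter.wait() for _ in range(3)]
print(pauses)  # the first call does not wait; later calls pause briefly
```

Calling `limiter.wait()` at the top of a method like `make_request` would be one way to combine this with proxy rotation.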

Boosting Script Performance

To handle large datasets effectively, leverage tools like multiprocessing, asyncio, and caching. These techniques can significantly improve script performance:

| Technique | Best For | Performance Boost |
|---|---|---|
| Multiprocessing | CPU-heavy tasks | Up to 60% faster with parallel processing |
| Asyncio | I/O-heavy operations | Up to 10x faster for concurrent tasks |
| Intelligent caching | Frequently accessed data | 40-50% reduction in processing time |
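To make the asyncio row concrete, the sketch below runs ten simulated requests with at most five in flight at a time. `asyncio.sleep` stands in for network I/O, and the names `fetch` and `crawl` are illustrative; a real scraper would pair this pattern with an async HTTP client.

```python
import asyncio
import time

async def fetch(url: str, limit: asyncio.Semaphore) -> str:
    """Stand-in for an HTTP request: waits 0.1 s instead of hitting the network."""
    async with limit:
        await asyncio.sleep(0.1)
        return f"fetched {url}"

async def crawl(urls: list[str], max_concurrency: int = 5) -> list[str]:
    limit = asyncio.Semaphore(max_concurrency)  # cap simultaneous "requests"
    return await asyncio.gather(*(fetch(u, limit) for u in urls))

urls = [f"https://example.com/page/{i}" for i in range(1, 11)]
start = time.perf_counter()
results = asyncio.run(crawl(urls))
elapsed = time.perf_counter() - start
print(len(results), f"{elapsed:.2f}s")
```

Run one after another, the ten waits would take about a second; with five at a time they finish in roughly two rounds. The semaphore keeps the concurrency bounded so the target server is not flooded.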

"By carefully implementing these parallel processing techniques and following best practices, you can significantly enhance the speed and efficiency of your web scraping projects in Python." - ScrapingAnt Research Team

For massive datasets, consider using pandas' chunk processing to manage memory usage efficiently. For more details, see the earlier section on cleaning and formatting data.
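As a minimal sketch of chunk processing, `pd.read_csv` accepts a `chunksize` argument and then yields DataFrames of that many rows instead of loading everything at once. The in-memory CSV and its column names below are made up for illustration; in practice the source would be a large file on disk.

```python
import io
import pandas as pd

# Small in-memory CSV standing in for a large file on disk.
csv_data = io.StringIO("name,price\na,1.5\nb,2.5\nc,3.0\nd,4.0\ne,5.0\n")

total = 0.0
rows = 0
# With chunksize set, read_csv returns an iterator of DataFrames.
for chunk in pd.read_csv(csv_data, chunksize=2):
    total += chunk["price"].sum()
    rows += len(chunk)

print(rows, total)
```

Only one chunk is held in memory at a time, so aggregates like the running total here can be computed over files larger than available RAM.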

Automating Data Extraction with Python

Key Takeaways

Python stands out as a powerful choice for automating data extraction tasks. Tools like BeautifulSoup and Scrapy work well together - BeautifulSoup simplifies straightforward parsing, while Scrapy is designed for more complex, large-scale data extraction. Together, they cover a wide range of data collection needs.

Here’s a quick comparison of the tools and their benefits:

| Tool | Best Use Case | Key Advantage | Typical Time Savings |
|---|---|---|---|
| BeautifulSoup | Single-page scraping | Easy to implement | 40-60% faster than manual |
| Scrapy | Large-scale projects | Built-in crawling tools | 70-85% faster than manual |
| pandas | Data processing | Streamlined manipulation | 50-65% reduction in time |

These tools can help you save significant time and effort, making automated data extraction not just efficient but also practical for a variety of tasks.

How to Start Automating

- Define Your Goals: Clearly outline what data you need and select the right tool. Use BeautifulSoup for simpler tasks and Scrapy for handling larger, more complex projects.

- Build Step by Step: Begin with small scripts and expand gradually. Incorporate error handling, logging, and rate limiting to ensure your workflow is reliable. Tools like APScheduler can help with scheduling and monitoring tasks effectively.

FAQs

Which is better, Scrapy or BeautifulSoup?

The choice between Scrapy and BeautifulSoup depends on what your project needs. Here's a quick comparison to guide you:

| Feature | Scrapy | BeautifulSoup |
|---|---|---|
| Best Use Case | Large-scale web scraping | Simple HTML/XML parsing |
| Performance | High-speed (async requests) | Standard speed |
| Built-in Crawling | Yes | No |
| Proxy Support | Built-in | Requires extra libraries |
| Project Scale | Enterprise-level projects | Small to medium projects |

Scrapy is great for large-scale tasks, such as scraping e-commerce sites with thousands of pages. Its asynchronous processing and built-in crawling make it a powerful choice. On the other hand, BeautifulSoup works well for smaller projects or static pages that don't require heavy automation.

If you need JavaScript rendering, Scrapy can integrate with tools like Selenium or Scrapy-Splash. BeautifulSoup, however, depends on external libraries like requests-html for similar tasks. Many developers even combine Scrapy's crawling capabilities with BeautifulSoup's parsing for more precise data extraction.

Pro Tip: Start with BeautifulSoup for simpler tasks or early development. As your project grows and demands more advanced automation, transitioning to Scrapy can save time and effort.

Knowing the strengths of each tool helps you make smarter choices and optimize your data scraping process.