Web scraping has evolved. AI now solves problems that old methods couldn’t handle, like dynamic layouts, anti-bot defenses, and unstructured data. By combining AI with older techniques, you get smarter, faster, and more reliable data extraction.

Key Benefits of AI in Web Scraping:

- Handles Dynamic Websites: AI adapts to layout changes automatically.

- Solves Anti-Scraping Challenges: Bypasses CAPTCHAs, IP blocks, and rate limits.

- Improves Data Quality: Removes duplicates, standardizes formats, and validates accuracy.

- Processes Complex Data: NLP adds context to unstructured text, while computer vision tackles images and videos.

Quick Comparison

| Feature | Old Methods | AI-Powered Scraping |
|---|---|---|
| Adaptability | Breaks with layout changes | Learns and adjusts automatically |
| Data Quality | Error-prone | Accurate and validated |
| Anti-Bot Handling | Easily blocked | Mimics human browsing |
| Scalability | Limited | Manages diverse, large-scale tasks |

AI-driven tools like Diffbot, Import.io, and ParseHub are reshaping industries by making web scraping faster, more accurate, and scalable. Whether you’re extracting e-commerce data or analyzing news, AI is the future of web scraping.

Key AI Technologies for Web Scraping

AI has reshaped web scraping by introducing advanced tools that complement traditional techniques. Here’s a closer look at the key AI technologies improving how we extract and process web data.

Machine Learning

Machine learning brings flexibility to web scraping by analyzing structures, spotting patterns, and automatically adjusting to changes. A standout example is adaptive scraping, where systems like Bright Data's Web Unlocker can keep extracting data even when websites frequently update their layouts.

This technology relies on features like automatic pattern detection, dynamic learning, and self-improving algorithms, reducing the need for constant manual intervention.

While machine learning focuses on adapting to structural changes, NLP takes it a step further by interpreting unstructured text data.

Natural Language Processing (NLP)

NLP has changed the game for handling unstructured text from web sources. It helps systems grasp context, analyze sentiment, and uncover relationships within the data, making the extracted information more precise and meaningful.

For instance, Diffbot uses NLP to extract, classify, and contextualize data from intricate web pages. This blend of NLP and traditional scraping techniques has unlocked advanced data processing capabilities that were previously out of reach.

But AI isn’t limited to text: it also tackles visual challenges through computer vision.

Computer Vision

Computer vision focuses on a tough aspect of web scraping: managing visual elements and anti-bot defenses. This technology can process images, videos, and even visual CAPTCHAs, expanding the scope of web scraping.

By analyzing layouts and solving visual challenges, tools like Import.io and ParseHub show how AI-powered computer vision can handle complex interfaces, especially in areas like e-commerce and social media scraping.

Together, these AI technologies (machine learning, NLP, and computer vision) combine with traditional methods to create more accurate and scalable web scraping solutions. They allow organizations to handle diverse data types with greater efficiency and precision.

Advantages of AI-Driven Web Scraping

AI technologies have transformed how web scraping works, boosting performance, reliability, and the quality of extracted data. Here's a closer look at why AI-driven web scraping stands out.

Greater Flexibility

AI-powered web scrapers use machine learning to handle dynamic websites and layout changes with ease. These tools can:

- Manage dynamic content and complex data formats

- Adjust automatically to structural changes without needing manual tweaks

- Process diverse data structures with efficiency

- Improve over time through continuous learning

By combining these features with traditional methods, businesses can achieve a strong mix of efficiency and adaptability.

Tackling Anti-Scraping Challenges

AI systems are equipped with advanced tools to navigate anti-scraping defenses. Using techniques like reinforcement learning and generative models, they address common barriers effectively:

| Anti-Scraping Challenge | AI Solution |
|---|---|
| CAPTCHAs | Uses computer vision to solve them |
| IP Blocking | Simulates natural browsing patterns |
| Browser Fingerprinting | Creates realistic user profiles |
| Rate Limiting | Dynamically adjusts request speeds |
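
To make the "dynamically adjusts request speeds" row concrete, here is a minimal Python sketch. It is a simple rule-based heuristic rather than a learned model, and the class name `AdaptiveThrottle`, its parameters, and the specific multipliers are assumptions chosen for illustration, not the behavior of any tool named in this article. The idea is to slow down when the server returns HTTP 429 or 503 and to speed up gradually after successful responses.

```python
import time


class AdaptiveThrottle:
    """Adjusts the pause between requests based on server responses.

    Hypothetical helper for illustration; the defaults are arbitrary.
    """

    def __init__(self, base_delay=1.0, min_delay=0.5, max_delay=60.0):
        self.delay = base_delay
        self.min_delay = min_delay
        self.max_delay = max_delay

    def record(self, status_code):
        """Update the delay after a response and return the new value."""
        if status_code in (429, 503):
            # Rate limited or overloaded: double the delay, up to the cap.
            self.delay = min(self.delay * 2, self.max_delay)
        elif 200 <= status_code < 300:
            # Success: shrink the delay by 10%, down to the floor.
            self.delay = max(self.delay * 0.9, self.min_delay)
        # Other status codes leave the delay unchanged.
        return self.delay

    def wait(self):
        """Sleep for the current delay before sending the next request."""
        time.sleep(self.delay)
```

In a scraping loop, one would typically call `wait()` before each request and `record()` with the response's status code afterward. A production system might replace the fixed multipliers with a policy learned from past responses.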

Higher Data Quality

AI excels at cleaning and validating data thanks to its ability to recognize patterns and apply machine learning techniques. Key benefits include:

- Automatically identifying and removing duplicates

- Filtering out irrelevant information intelligently

- Standardizing formats from multiple sources

- Validating data based on patterns for accuracy

Natural Language Processing (NLP) takes this further by understanding context and relationships within the data, producing cleaner, more accurate datasets that need minimal manual adjustments.
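
The pattern-based cleaning steps listed above (deduplication, format standardization, and validation) can be sketched in a few lines of Python. This is a rule-based illustration under stated assumptions: the record fields `name` and `price`, and the helper names `standardize_price` and `clean_records`, are hypothetical, and a real pipeline might use trained models for the filtering step instead of fixed rules.

```python
import re


def standardize_price(raw):
    """Convert strings like '$1,299.00' or '1299 USD' to a float, or None."""
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if not match:
        return None
    return float(match.group().replace(",", ""))


def clean_records(records):
    """Standardize, validate, and deduplicate scraped product records."""
    seen = set()
    cleaned = []
    for record in records:
        # Standardization: collapse whitespace, lowercase, parse the price.
        name = " ".join(record.get("name", "").split()).lower()
        price = standardize_price(record.get("price", ""))
        # Validation: drop records without a name or a positive price.
        if not name or price is None or price <= 0:
            continue
        # Deduplication: skip records already seen after normalization.
        key = (name, price)
        if key in seen:
            continue
        seen.add(key)
        cleaned.append({"name": name, "price": price})
    return cleaned
```

Given two entries such as `{"name": "Blue  Widget", "price": "$1,299.00"}` and `{"name": "blue widget ", "price": "1299 USD"}`, both normalize to the same key, so only one record survives. Normalizing before comparing is what lets the duplicate check catch entries that differ only in formatting.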

These improvements not only address the limitations of traditional scraping but also open doors to new tools and applications, which will be discussed in the upcoming section.

Practical Tools and Examples

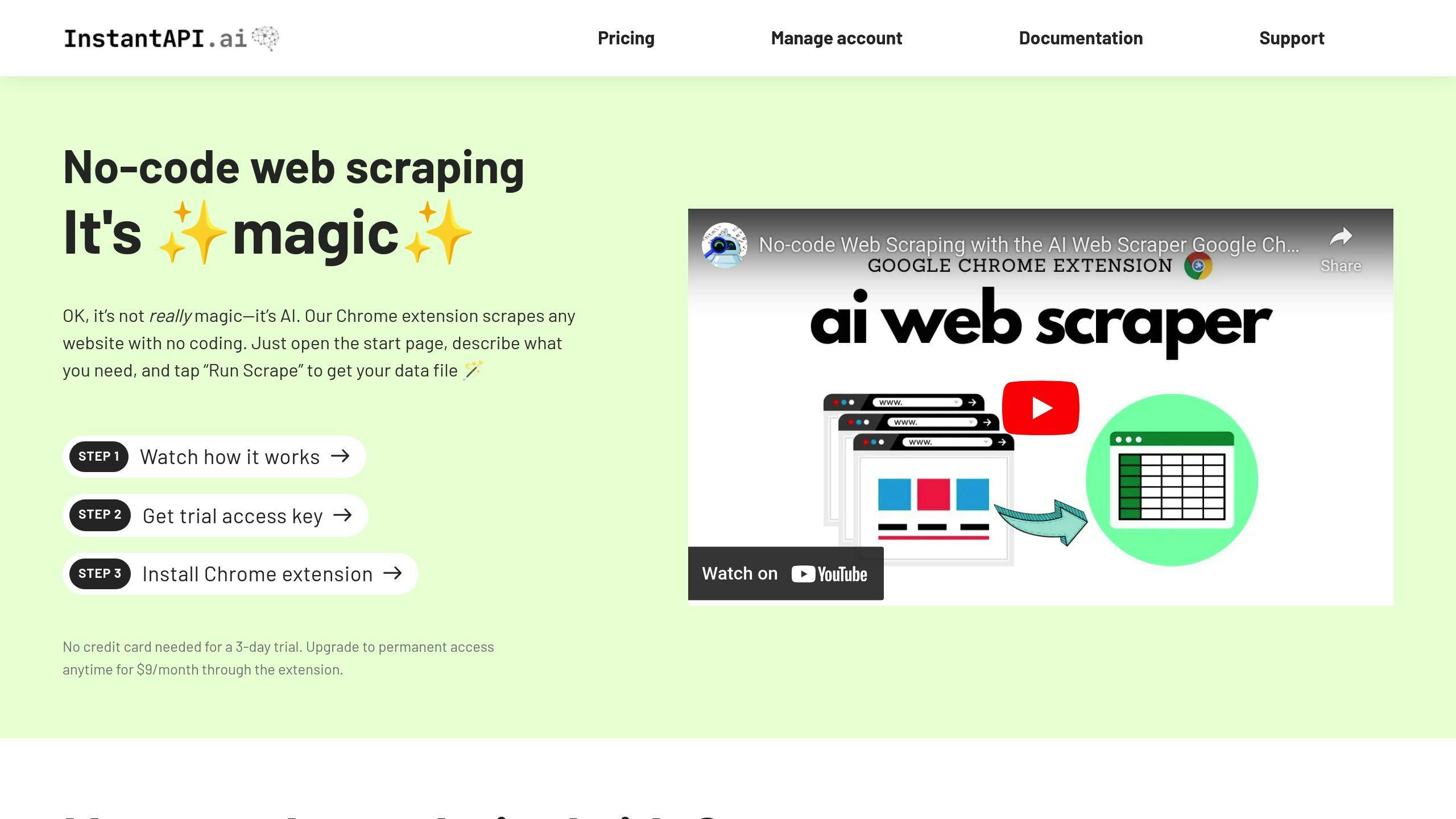

InstantAPI.ai

InstantAPI.ai makes web scraping easier by using AI to manage complex tasks. It offers premium proxies to hide IP addresses and avoid detection, while also handling JavaScript-heavy websites effortlessly. Its AI automatically adjusts to website changes, so you don’t have to worry about constant reconfigurations.

Other AI Scraping Tools

Different platforms bring their own strengths to AI-powered scraping:

| Tool | Key AI Features | Primary Use Case |
|---|---|---|
| Diffbot | Uses NLP and Visual AI for data extraction | News and product data |
| Import.io | Automates multi-page data collection | Large-scale data consolidation |
| ParseHub | Handles dynamic content with machine learning | E-commerce site extraction |

Each tool shines in specific situations. For instance, Diffbot excels at pulling structured data from news articles and product pages using advanced NLP. ParseHub is a go-to for e-commerce sites, especially when layouts change frequently.

Practical Applications

AI-driven web scraping is transforming industries like travel and market research. One travel startup, for example, used AI tools to track hotel prices and weather data, cutting months of manual work down to days. Similarly, market research firms now monitor prices, reviews, and customer sentiment in real time, making competitive analysis faster and more efficient.

For businesses, starting small with pilot projects often leads to the best results before scaling up. Factors like website complexity, the volume of data needed, and existing tech infrastructure are crucial to consider.

These tools and real-world applications show how AI is reshaping web scraping, paving the way for a closer look at how it compares to traditional methods.

Comparison of Old vs. AI-Powered Web Scraping

Differences Between the Two Approaches

Traditional web scraping relies on fixed patterns, such as hard-coded selectors, which often break when websites update their layouts. By contrast, AI-powered tools use machine learning to adjust to these changes in real time. This reduces the need for constant manual reprogramming and keeps extraction running more smoothly.
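
The fragility of fixed patterns is easy to show with a small Python sketch. The HTML snippets, the `price` class name, and the function `extract_price` are hypothetical, and the fallback here is a plain heuristic rather than a machine learning model. It only illustrates the general idea of recovering when the expected layout is gone; an AI-powered tool would presumably put a learned extractor in the fallback's place.

```python
import re

# Fixed pattern tied to one specific layout (hypothetical class name).
FIXED_PATTERN = re.compile(
    r'<span class="price">\s*\$([\d,]+\.\d{2})\s*</span>'
)

# Looser heuristic: the first dollar amount anywhere in the page.
FALLBACK_PATTERN = re.compile(r"\$([\d,]+\.\d{2})")


def extract_price(html):
    """Return (price, method): try the fixed pattern, then the fallback."""
    match = FIXED_PATTERN.search(html)
    if match:
        return float(match.group(1).replace(",", "")), "fixed"
    match = FALLBACK_PATTERN.search(html)
    if match:
        return float(match.group(1).replace(",", "")), "fallback"
    return None, "failed"


old_layout = '<span class="price">$19.99</span>'
new_layout = '<div data-testid="cost"><b>$19.99</b></div>'
```

Calling `extract_price(old_layout)` succeeds through the fixed pattern, while `extract_price(new_layout)` only succeeds through the fallback. Returning the method alongside the value makes it possible to log how often the fixed pattern fails, which is a useful signal that a site's layout has changed.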

AI-based scrapers also simulate human browsing to bypass challenges like CAPTCHAs and anti-scraping measures. This ensures consistent data collection, even on websites with tight security. These features lead to faster data gathering, lower maintenance costs, and more dependable insights for businesses.

Comparison Chart

Here's a breakdown of how traditional and AI-powered web scraping stack up:

| Feature | Traditional Web Scraping | AI-Powered Web Scraping |
|---|---|---|
| Adaptability and Maintenance | Relies on fixed patterns, frequent updates needed | Automatically adjusts to changes, minimal upkeep |
| Data Quality | Errors common after structural changes | Maintains accuracy with auto-validation |
| Scalability | Limited by manual setup | Handles multiple site variations at once |
| Anti-Scraping Handling | Easily blocked by security measures | Mimics human behavior to avoid detection |
| Setup and Error Handling | Quick setup but manual fixes required | Higher initial setup, automatic recovery |

While AI-powered scraping tools involve a higher initial investment, they save time and money in the long run through reduced maintenance and greater reliability. Traditional methods might still work for simpler, unchanging sites, but AI-driven tools represent a leap forward in efficient and reliable data extraction.

AI in Web Scraping: Key Takeaways

AI-powered web scraping has reshaped how data is collected online, addressing modern challenges, cutting down on upkeep, and streamlining processes. By merging AI tools with established scraping methods, businesses can now tackle even the most complex data extraction tasks.

Highlights

- Reduced Manual Effort: AI tools automatically adjust to changes, cutting down on the need for manual updates. This not only saves time but also reduces costs, making data extraction more efficient.

- Improved Data Accuracy: AI systems can analyze context and content, ensuring the data collected is relevant and precise. This eliminates many of the shortcomings found in older methods, offering better scalability and reliability.

- Overcoming Security Barriers: AI scrapers can navigate challenges like CAPTCHAs, ensuring uninterrupted data collection. This makes the entire process smoother and more dependable.

Looking ahead, the combination of AI and traditional scraping techniques will define the future of data extraction. While simpler tasks may still rely on older methods, AI's ability to handle complex, large-scale projects ensures it will play a central role as websites continue to evolve.