Web scraping is transforming the energy sector by automating data collection, saving time, reducing costs, and enabling smarter decisions. Here's a quick summary of its key benefits and uses:

Main Benefits:

- Saves 30–40% of time compared to manual data collection.

- Improves data accuracy to 99.5%.

- Cuts operational costs by up to 40%.

Common Applications:

- Price Tracking: Monitor real-time prices of electricity, gas, and fuel.

- Renewable Energy Insights: Track growth in solar, wind, and other renewables.

- Market Research: Analyze competitors and spot industry trends.

- Usage Pattern Analysis: Forecast demand using public consumption data.

Key Features for Web Scraping Tools:

- JavaScript rendering for dynamic pages.

- Proxy management for accessing global markets.

- Geotargeting to analyze regional data.

- CAPTCHA bypass for uninterrupted scraping.

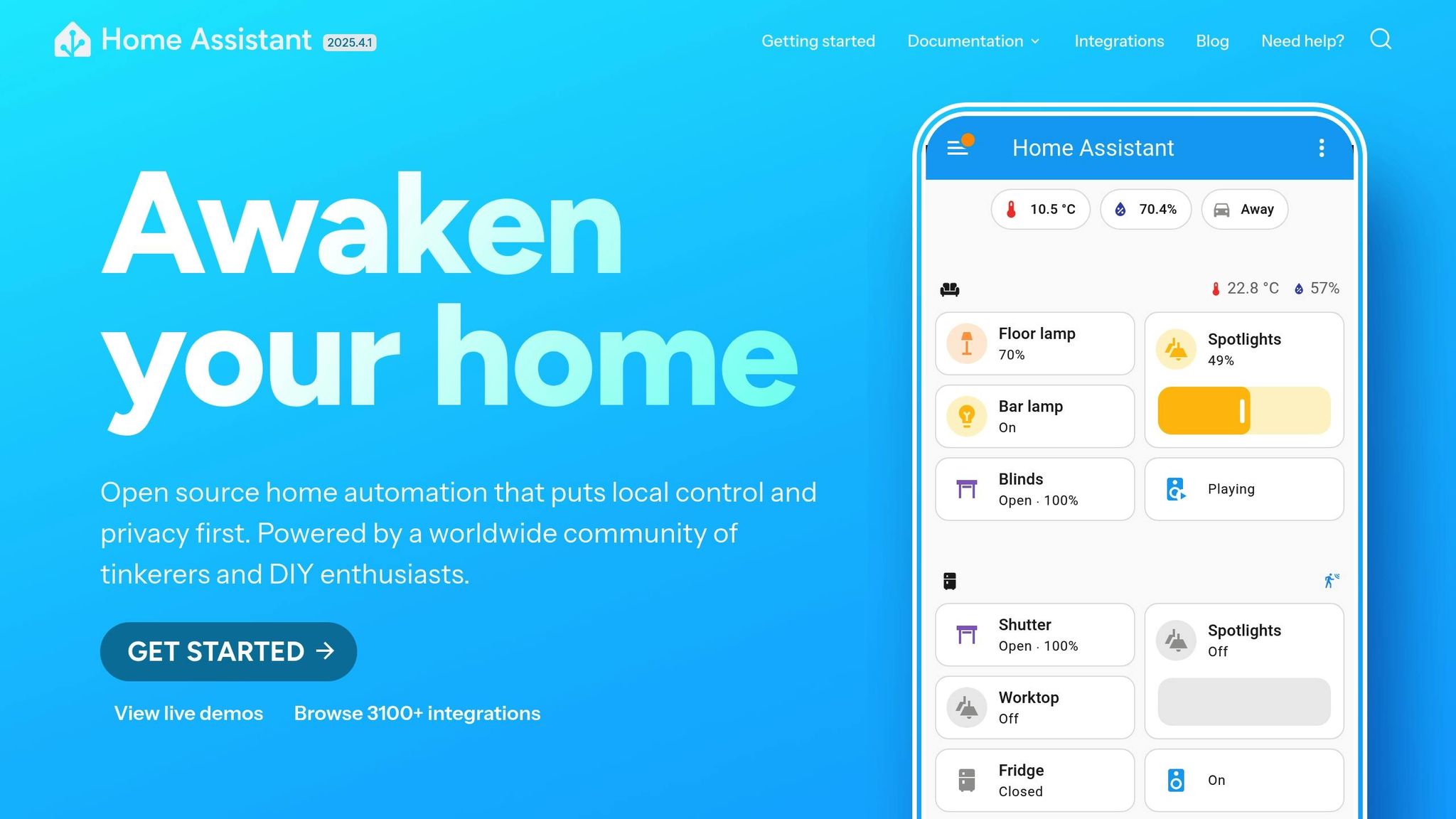

Scrape ANY Data from ANY website in Home Assistant ...

Common Uses in Energy

The energy industry uses web scraping to improve operations and make smarter decisions.

Price Tracking Systems

Energy companies rely on real-time price tracking to monitor electricity, natural gas, and fuel prices across different markets. This lets them react quickly to market movements and build pricing strategies, for example by benchmarking their own rates against competitors'.
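As a minimal sketch of the competitor comparison, assume rates have already been scraped and normalized to the same unit (for example, $/kWh). The hypothetical helper below reports how far your own rate sits above or below each competitor's. Competitor names and figures are illustrative only.

```python
from typing import Dict, List, Tuple


def compare_to_competitors(own_rate: float,
                           competitor_rates: Dict[str, float]) -> List[Tuple[str, float]]:
    """Return (competitor, % difference of our rate vs. theirs), cheapest competitor first.

    A positive percentage means our rate is higher than that competitor's.
    """
    result = []
    for name, rate in competitor_rates.items():
        if rate <= 0:
            raise ValueError(f"invalid scraped rate for {name}: {rate}")
        result.append((name, round((own_rate - rate) / rate * 100, 2)))
    # Order by the competitor's own rate so the cheapest rival comes first
    result.sort(key=lambda item: competitor_rates[item[0]])
    return result


print(compare_to_competitors(0.30, {"A": 0.25, "B": 0.32, "C": 0.30}))
# [('A', 20.0), ('C', 0.0), ('B', -6.25)]
```

In this example your rate is 20% above the cheapest competitor and 6.25% below the most expensive one.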

Renewable Energy Data Collection

Web scraping is essential for collecting and analyzing data in the renewable energy market. It helps track growth in solar, wind, and other renewable sources. For example, solar installations grew by 34% between 2014 and 2015, and wind energy now powers 17 million U.S. households. Experts predict renewables could make up 30% of U.S. energy capacity by 2030.

"Big data is changing the future of the renewable energy sector." – DiscoverDataScience.org

These insights also feed into broader market research.

Market Research

Web scraping enables energy companies to gather detailed market intelligence. They can monitor competitor strategies, stay updated on new developments, and spot emerging trends in the industry.

Usage Pattern Analysis

Energy providers use web scraping to study consumption patterns by pulling data from public sources. This helps with demand forecasting and planning infrastructure more effectively.
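A very simple demand-forecasting baseline predicts each hour of tomorrow as the average of that same hour over the past week. This assumes hourly consumption readings have already been collected from a public source. The function name and the seven-day window are illustrative choices, not a method described here. Treat it as a baseline to compare richer models against.

```python
from statistics import mean
from typing import List


def forecast_next_day(hourly_kwh: List[float], days: int = 7) -> List[float]:
    """Predict the next 24 hourly values as the mean of the same hour over recent days.

    `hourly_kwh` holds hourly readings, oldest first, starting at midnight,
    with a length that is a whole number of days.
    """
    if days < 1:
        raise ValueError("days must be at least 1")
    if not hourly_kwh or len(hourly_kwh) % 24 != 0:
        raise ValueError("expected one or more whole days of hourly data")
    # Use at most `days` days, or fewer if less history is available
    window = min(days, len(hourly_kwh) // 24)
    recent = hourly_kwh[-window * 24:]
    # recent[d * 24 + h] is hour h of day d within the window
    return [mean(recent[d * 24 + h] for d in range(window)) for h in range(24)]
```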

Impact on Energy Companies

Web scraping has transformed the way energy companies operate, leading to measurable gains across various business functions.

Workflow Improvements

AI-powered web scraping automates the tedious task of data collection, processing thousands of pages in just an hour. This automation allows teams to shift their focus to high-value activities like tracking market prices or analyzing consumption patterns. The result? Huge time savings and a better ability to make strategic decisions.

Better Decision Making

With streamlined workflows, real-time data becomes a powerful tool for smarter decision-making. Energy companies can:

- Adjust pricing in response to market changes

- Allocate resources more effectively during peak times

- Spot new trends ahead of competitors

Having access to accurate, up-to-date data enables faster, better-informed decisions, giving companies a competitive edge.

Cost Reduction

In addition to better strategies, these tools help cut costs significantly. Companies using these solutions have reported savings in several key areas:

| Area of Impact | Cost Savings |
|---|---|
| Energy Costs | 10–30% |
| Equipment Downtime | Up to 50% |
| Energy Demand Forecasting | Up to 20% |
| Operational Efficiency | Up to 30% |

These savings come from improvements like predictive maintenance, better resource allocation, and more accurate demand forecasting. By identifying inefficiencies sooner, companies can act quickly to address them.

Market Understanding

Automated data collection offers a clearer view of market dynamics, helping companies:

- Monitor competitor pricing and strategies in real time

- Track regional demand fluctuations

- Study consumer behavior patterns

- Pinpoint new market opportunities

As renewable energy and smart grid technologies grow in importance, having comprehensive market insights is crucial for staying ahead in the industry.


Implementation Guide

Set up effective web scraping by planning carefully and using the right tools.

Key Features for Tools

Energy companies rely on specific tool capabilities to collect and process industry data efficiently. Here are the most important features to look for:

| Feature | Purpose | Benefit |
|---|---|---|
| JavaScript Rendering | Handle dynamic pricing pages | Ensures accurate, real-time data |
| Proxy Management | Access global energy markets | Avoids IP blocks, keeps data flowing |
| Geotargeting | Analyze regional markets | Gathers insights across multiple areas |
| CAPTCHA Bypass | Maintain uninterrupted scraping | Keeps data streams consistent |
| Custom Data Output | Format data as needed | Simplifies integration with systems |
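Proxy management and geotargeting often come down to routing each request through an IP in the right region. The sketch below uses only Python's standard library. It rotates round-robin through a hypothetical pool of proxies per region and builds a `urllib` opener for the next one. The proxy URLs are placeholders, and commercial scraping services usually manage such a pool for you.

```python
import itertools
import urllib.request
from typing import Dict, Iterator, List


class RegionalProxyPool:
    """Round-robin proxy rotation per market region (hypothetical setup)."""

    def __init__(self, proxies_by_region: Dict[str, List[str]]):
        # Regions with an empty proxy list are skipped entirely
        self._cycles: Dict[str, Iterator[str]] = {
            region: itertools.cycle(proxies)
            for region, proxies in proxies_by_region.items()
            if proxies
        }

    def next_proxy(self, region: str) -> str:
        """Return the next proxy URL for `region`, wrapping around at the end."""
        if region not in self._cycles:
            raise ValueError(f"no proxies configured for region {region!r}")
        return next(self._cycles[region])

    def opener_for(self, region: str) -> urllib.request.OpenerDirector:
        """Build a urllib opener that sends HTTP and HTTPS traffic via the next proxy."""
        proxy = self.next_proxy(region)
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        return urllib.request.build_opener(handler)
```

Each call to `opener_for("de")` returns an opener bound to the next German proxy, so successive `opener.open(url)` calls spread requests across the pool.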

Once you have the right tools, follow these steps to build your scraping system.

Steps to Get Started

1. Define What Data You Need

Decide exactly what you need to collect, such as energy prices, consumption trends, market insights, or competitor data. Also determine how often the data should be updated and what format it should be delivered in.

2. Set Up Your Data Infrastructure

Build a system to store and manage your data. Use time-series databases, validate incoming data, and ensure sensitive information is protected.

3. Configure Scraping Rules

Adjust your scraping parameters based on:

- How often target websites update

- Best times for scraping (peak vs. off-peak hours)

- How fresh the data needs to be

- Regional market schedules
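Steps 1 and 3 can be captured together in a small configuration object. This is a hypothetical sketch. The `ScrapeTarget` class, its fields, and the example.com URL are assumptions for illustration. The object records what to collect and in which format, how stale the data may get, and a preferred scraping window. It then decides whether a scrape is due.

```python
from dataclasses import dataclass
from datetime import datetime, time, timedelta
from typing import List


@dataclass
class ScrapeTarget:
    """One data source plus the rules for collecting it (all values illustrative)."""
    name: str
    url: str
    fields: List[str]        # Step 1: the exact data points to extract
    output_format: str       # Step 1: delivery format, e.g. "json" or "csv"
    max_age: timedelta       # Step 3: how stale the data may get before re-scraping
    window_start: time       # Step 3: start of the preferred (e.g. off-peak) window
    window_end: time         # Step 3: end of the window, exclusive

    def in_window(self, now: datetime) -> bool:
        """True if `now` falls in the scraping window, including windows that cross midnight."""
        t = now.time()
        if self.window_start <= self.window_end:
            return self.window_start <= t < self.window_end
        return t >= self.window_start or t < self.window_end

    def is_due(self, last_run: datetime, now: datetime) -> bool:
        """Scrape only when the data is older than `max_age` and we are inside the window."""
        return now - last_run >= self.max_age and self.in_window(now)


tariffs = ScrapeTarget(
    name="regional-tariffs",
    url="https://example.com/tariffs",
    fields=["region", "price_per_kwh", "effective_date"],
    output_format="json",
    max_age=timedelta(hours=6),
    window_start=time(22, 0),
    window_end=time(6, 0),
)
```

A scheduler loop would call `is_due` for each target and skip the ones that are fresh or outside their window. Real-time price feeds would use a short `max_age` and an all-day window instead.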

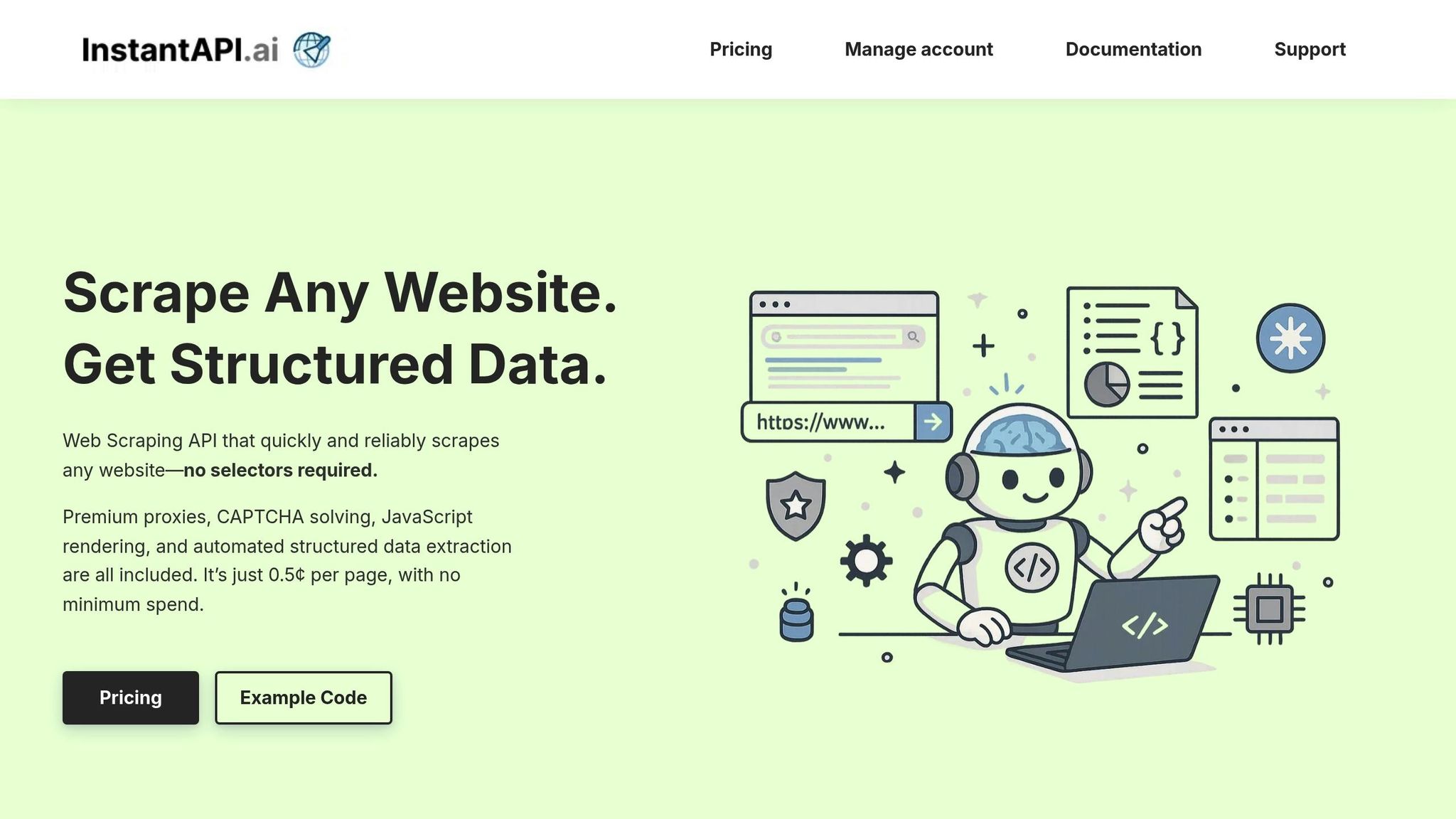

For a smoother process, you can use InstantAPI.ai to simplify setup and management.

Benefits of Using InstantAPI.ai

InstantAPI.ai is designed to make energy sector data collection easier. Its AI-driven system converts raw web data into structured formats, ready for immediate analysis.

"After trying other options, we were won over by the simplicity of InstantAPI.ai's Web Scraping API. It's fast, easy, and allows us to focus on what matters most - our core features." - Juan, Scalista GmbH

Here’s how InstantAPI.ai can help:

| Setup Phase | Action Items | Outcome |
|---|---|---|
| Initial Configuration | Define JSON schema for data | Standardized, usable data output |
| Regional Setup | Enable geotargeting for markets | Access to global energy data |
| Integration | Connect API to your systems | Automated data flow |
| Monitoring | Track usage at 0.5¢ per page | Scalable and cost-efficient |

With access to over 65 million rotating IPs, InstantAPI.ai ensures reliable data collection from energy markets worldwide. This is especially useful for companies operating across multiple regions or monitoring global trends.
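Per-page pricing makes budgeting a matter of arithmetic. Take the 0.5¢ per page figure from the setup table (pricing may change). A hypothetical job that scrapes 200 pages every hour for 30 days covers 200 × 24 × 30 = 144,000 pages, or $720. The helper name and workload are illustrative.

```python
def scrape_cost_usd(pages_per_run: int, runs_per_day: int, days: int = 30,
                    cents_per_page: float = 0.5) -> float:
    """Estimate the cost in US dollars of a recurring job billed per scraped page."""
    total_pages = pages_per_run * runs_per_day * days
    return round(total_pages * cents_per_page / 100, 2)


# 200 pages every hour for 30 days
print(scrape_cost_usd(pages_per_run=200, runs_per_day=24))  # 720.0
```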

Common Issues and Fixes

Web scraping in the energy sector comes with its own set of challenges, each requiring specific solutions. Here's a breakdown of common problems and how to address them effectively.

Website Changes

Energy market websites often update their structures, which can interrupt data collection efforts. These changes are usually aimed at improving security or user experience.

| Challenge | Solution | Implementation |
|---|---|---|
| Dynamic HTML Elements | Use stable selectors | Target stable attributes such as IDs and data-* attributes |
| JavaScript Updates | Monitor rendering changes | Add headless browser functionality |
| Security Patches | Update authentication | Adjust headers and user agents |

For instance, when Best Buy revamped its website, PriceRunner's technical team quickly adapted their scraping scripts by monitoring the structural changes. This quick response ensured uninterrupted data collection.
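The "stable selectors" advice can be illustrated with Python's built-in `html.parser`. CSS classes and element positions often change in a redesign, so the sketch below keys on a data attribute instead. The `data-field` attribute name and the sample markup are assumptions. Many projects use libraries such as BeautifulSoup or lxml for this instead.

```python
from html.parser import HTMLParser
from typing import Dict, List, Optional

# Elements that never have a closing tag, so they must not affect nesting depth
VOID_TAGS = {"area", "base", "br", "col", "embed", "hr", "img", "input",
             "link", "meta", "source", "track", "wbr"}


class DataAttributeExtractor(HTMLParser):
    """Map each value of a data-* attribute to the text inside that element."""

    def __init__(self, attr_name: str = "data-field"):
        super().__init__()
        self.attr_name = attr_name
        self.results: Dict[str, str] = {}
        self._key: Optional[str] = None   # attribute value of the element being read
        self._depth = 0                   # nesting depth inside that element
        self._buffer: List[str] = []

    def handle_starttag(self, tag, attrs):
        if self._key is not None:
            if tag not in VOID_TAGS:
                self._depth += 1
            return
        value = dict(attrs).get(self.attr_name)
        if value is not None and tag not in VOID_TAGS:
            self._key, self._depth, self._buffer = value, 0, []

    def handle_startendtag(self, tag, attrs):
        # Self-closing tags such as <br/> open and close at once; ignore them
        pass

    def handle_data(self, data):
        if self._key is not None:
            self._buffer.append(data)

    def handle_endtag(self, tag):
        if self._key is None:
            return
        if self._depth > 0:
            self._depth -= 1
            return
        # Collapse whitespace so "North <b>Zone</b>" becomes "North Zone"
        self.results[self._key] = " ".join("".join(self._buffer).split())
        self._key = None


html = ('<div class="price-box v2">'
        '<span data-field="region">North <b>Zone</b></span>'
        '<span data-field="price">0.31</span><br></div>')
parser = DataAttributeExtractor()
parser.feed(html)
parser.close()
print(parser.results)  # {'region': 'North Zone', 'price': '0.31'}
```

A class rename such as `price-box v2` to `price-box v3` would not affect this extractor, because it never reads the class attribute.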

Legal Requirements

Legal compliance is a critical aspect of web scraping in the energy sector. Here are some key areas to consider:

| Requirement | Compliance Action | Verification Method |
|---|---|---|
| Data Privacy | Avoid collecting PII | Use automated PII detection |
| Terms of Service | Review site policies | Conduct regular checks |
| Rate Limiting | Introduce delays | Track request patterns |
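For the "automated PII detection" row, a minimal regex-based sketch can scan scraped text and redact matches before storage. The patterns here are deliberately narrow assumptions: email addresses, plus international phone numbers written with a leading `+`. They will miss many forms of personal data, so treat this as a starting point rather than a compliance check.

```python
import re
from typing import Dict, List

# Deliberately narrow, illustrative patterns: real PII detection needs far more coverage
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    # International format only, e.g. "+1 555 123 4567" or "+44 20 7946 0958"
    "phone": re.compile(r"\+\d{1,3}(?:[\s-]?\d{2,4}){2,4}"),
}


def find_pii(text: str) -> Dict[str, List[str]]:
    """Return the matches for each PII category found in `text`."""
    found: Dict[str, List[str]] = {}
    for label, pattern in PII_PATTERNS.items():
        matches = pattern.findall(text)
        if matches:
            found[label] = matches
    return found


def redact_pii(text: str) -> str:
    """Replace each match with a [REDACTED:<label>] marker before the text is stored."""
    for label, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{label}]", text)
    return text
```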

"Businesses must consider whether their scraping activities harm the target website." - AIMultiple

Always check a site's robots.txt file before scraping. The file lives at the root of the domain, so appending "/robots.txt" to the site's base URL (e.g., https://www.energymarket.com/robots.txt) displays its crawling rules.
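Python's standard library includes `urllib.robotparser`, which can check robots.txt rules and read any `Crawl-delay` directive. The sketch below combines that module with the "introduce delays" advice. `PoliteScheduler` is a hypothetical helper that refuses disallowed URLs and sleeps between requests. The user-agent string and the two-second fallback delay are assumptions. The clock and sleep functions can be swapped out so the timing is testable.

```python
import time
from typing import Callable, Optional
from urllib.robotparser import RobotFileParser


def load_robots(robots_url: str) -> RobotFileParser:
    """Fetch and parse robots.txt, e.g. "https://www.energymarket.com/robots.txt" (network call)."""
    parser = RobotFileParser()
    parser.set_url(robots_url)
    parser.read()
    return parser


class PoliteScheduler:
    """Refuse URLs that robots.txt disallows and keep a minimum gap between requests."""

    def __init__(self, parser: RobotFileParser, user_agent: str,
                 default_delay: float = 2.0,
                 clock: Callable[[], float] = time.monotonic,
                 sleep: Callable[[float], None] = time.sleep):
        self.parser = parser
        self.user_agent = user_agent
        # Prefer the site's own Crawl-delay directive when it declares one
        declared = parser.crawl_delay(user_agent)
        self.delay = float(declared) if declared is not None else default_delay
        self._clock = clock
        self._sleep = sleep
        self._last_request: Optional[float] = None

    def wait_for_turn(self, url: str) -> bool:
        """Return False for disallowed URLs; otherwise sleep until the delay has passed and return True."""
        if not self.parser.can_fetch(self.user_agent, url):
            return False
        now = self._clock()
        if self._last_request is not None:
            remaining = self.delay - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now += remaining
        self._last_request = now
        return True
```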

Data Processing

Efficient data processing is essential for transforming raw information into actionable insights. Here’s how to manage it:

- Data Validation: Cross-check sources, identify outliers, and verify timestamps.

- Storage Optimization: Use time-series databases, compress data, and schedule automated cleanups.

- Performance Management: Monitor processing speeds, fine-tune queries, and balance server loads.

Setting up a structured validation pipeline can help detect anomalies before they impact analysis. These strategies ensure energy companies can handle large data volumes effectively.
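A structured validation pipeline can start as a single function. This sketch assumes scraped price points arrive as ISO 8601 timestamps with prices in a consistent unit. The `PricePoint` type, the median-absolute-deviation outlier rule, and the threshold of 5 are illustrative assumptions. The function rejects unparseable or duplicate timestamps and flags outliers before anything is written to a time-series store.

```python
from dataclasses import dataclass
from datetime import datetime
from statistics import median
from typing import List, Tuple


@dataclass
class PricePoint:
    timestamp: str  # ISO 8601 string as scraped; all points assumed to share one timezone convention
    price: float    # assumed to be in one consistent unit, e.g. $/MWh


def validate(points: List[PricePoint],
             max_deviation: float = 5.0) -> Tuple[List[PricePoint], List[str]]:
    """Split scraped points into clean records (sorted by time) and a list of issues."""
    issues: List[str] = []
    parsed: List[Tuple[datetime, PricePoint]] = []
    seen = set()
    for p in points:
        try:
            ts = datetime.fromisoformat(p.timestamp)
        except ValueError:
            issues.append(f"bad timestamp: {p.timestamp!r}")
            continue
        if ts in seen:
            issues.append(f"duplicate timestamp: {p.timestamp}")
            continue
        seen.add(ts)
        parsed.append((ts, p))

    if not parsed:
        return [], issues

    # Median absolute deviation (MAD): robust to the very outliers we want to catch
    prices = [p.price for _, p in parsed]
    med = median(prices)
    mad = median(abs(x - med) for x in prices)

    clean: List[PricePoint] = []
    for ts, p in sorted(parsed, key=lambda item: item[0]):
        if mad == 0:
            # At least half the prices equal the median, so any deviation from it is flagged
            is_outlier = p.price != med
        else:
            is_outlier = abs(p.price - med) / mad > max_deviation
        if is_outlier:
            issues.append(f"outlier price {p.price} at {p.timestamp}")
        else:
            clean.append(p)
    return clean, issues
```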

"The question of whether or not web scraping is legal has no definitive and single response. Such an answer depends on many factors, and some may fluctuate based on the country's laws and rules." - Dan Suciu

Conclusion

Web scraping has become a powerful tool for energy companies navigating today’s data-centric market. By automating the collection of key information - like price changes, renewable energy developments, and market trends - companies can make faster, more informed decisions.

This automation simplifies operations and improves efficiency, helping businesses streamline workflows and cut costs. With modern solutions priced at around 0.5¢ per scraped page, web scraping is an affordable option for companies of any size.

To get the most out of web scraping, it’s crucial to focus on data accuracy, legal compliance, scalability, and dependable technical support. These elements ensure a strong, reliable system for gathering data that continues to deliver results over time.

As the energy industry evolves, web scraping will play an even bigger role in helping companies monitor trends, refine operations, and stay competitive. Its ability to integrate across various business functions makes it an essential tool in this data-driven era.