Want real-time stock market data for faster, smarter decisions? Web scraping is the key. Here's what you need to know:

- Why It Matters: Stock prices change in seconds. Real-time data helps you act instantly, reducing risks and maximizing gains.

- How It Works: Tools like Beautiful Soup, Scrapy, and Selenium automate data collection from financial sites, social media, and forums.

- Challenges: Dynamic content (JavaScript), frequent site changes, and legal compliance require advanced techniques like headless browsers and ethical scraping practices.

- Best Tools: Use Scrapy for large-scale tasks, Selenium for dynamic sites, or InstantAPI.ai for easy, AI-powered scraping at $2 per 1,000 pages.

- Scalable Systems: Combine distributed scraping, proxy rotation, and real-time data pipelines (e.g., Apache Kafka) for seamless analysis.

Quick Comparison of Popular Tools:

| Tool | Best For | Strengths | Weaknesses |
|---|---|---|---|
| Beautiful Soup | Beginners, simple tasks | Easy to use, quick setup | Limited scalability, slower |
| Scrapy | Large-scale operations | High performance, built-in tools | Steeper learning curve |
| Selenium | JavaScript-heavy websites | Handles dynamic content, user simulation | Resource-intensive, slower |
| InstantAPI.ai | Simplified scraping | AI-powered, handles complex sites | Pay-per-use cost |

In short: Web scraping transforms stock market analysis by delivering real-time data, empowering traders and analysts to stay ahead in fast-moving markets. Dive into the article for tools, methods, and tips to get started.

Key Tools and Methods for Stock Market Web Scraping

Picking the right tool for stock market scraping can make a huge difference in both efficiency and data accuracy. Whether you're pulling straightforward price data or dealing with websites loaded with JavaScript, having the right setup ensures smoother operations and better results.

Popular Web Scraping Tools Compared

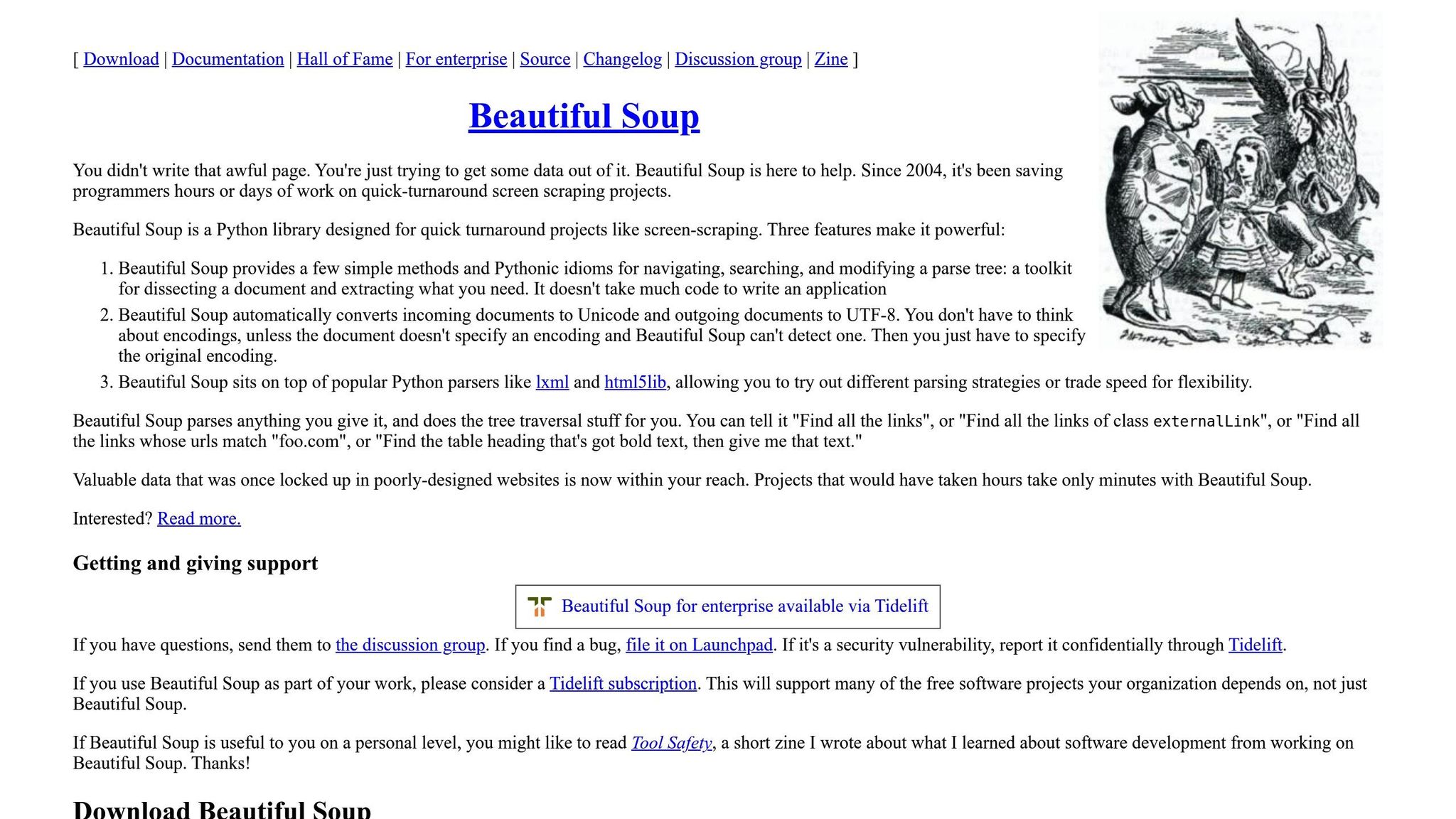

When it comes to stock market scraping, three tools often stand out: Beautiful Soup, Scrapy, and Selenium. Each has its strengths and is better suited for specific tasks. For example, Beautiful Soup is perfect for beginners working with simple HTML. Scrapy shines in large-scale projects thanks to its ability to handle multiple pages quickly. Selenium, on the other hand, is the go-to choice for sites heavy on JavaScript, though it’s not as fast as Scrapy for simpler tasks.

| Tool | Key Features | Advantages | Limitations | Best For |
|---|---|---|---|---|
| Beautiful Soup | • Simple HTML parsing • Easy syntax • Good documentation | • Beginner-friendly • Quick setup • Minimal learning curve | • Slower performance • Requires external parsers • Limited scalability | • Small projects • Static content • Learning web scraping |
| Scrapy | • Asynchronous framework • Built-in data pipelines • Concurrent requests | • High performance • Excellent scalability • Built-in data handling | • Steeper learning curve • Overkill for simple tasks • Complex setup | • Large-scale operations • Multiple data sources • Production environments |
| Selenium | • Full browser automation • JavaScript execution • User interaction simulation | • Handles dynamic content • Mimics real users • Works with complex sites | • Resource-intensive • Slower execution • Higher maintenance | • JavaScript-heavy sites • Dynamic content • Interactive elements |

Python is the backbone for these tools, especially when paired with Pandas for managing and analyzing data. But for websites with dynamic content, you’ll need to step up your game with headless browsers.
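To make the static-HTML case concrete, here is a minimal sketch with Beautiful Soup. The table markup, the `quotes` id, and the prices are made-up sample values standing in for a page you have already downloaded; a real site will use different markup.

```python
from bs4 import BeautifulSoup

# Hypothetical static HTML standing in for a downloaded quote page.
SAMPLE_HTML = """
<table id="quotes">
  <tr><th>Ticker</th><th>Price</th><th>Change</th></tr>
  <tr><td>AAPL</td><td>$189.25</td><td>+1.20%</td></tr>
  <tr><td>TSLA</td><td>$1,024.50</td><td>-0.85%</td></tr>
</table>
"""


def parse_quotes(html: str) -> list[dict]:
    """Turn the rows of the sample quote table into typed records."""
    # "html.parser" is the parser bundled with Python, so no extra install is needed.
    soup = BeautifulSoup(html, "html.parser")
    table = soup.find("table", id="quotes")
    records = []
    for row in table.find_all("tr")[1:]:  # skip the header row
        ticker, price, change = (cell.get_text(strip=True) for cell in row.find_all("td"))
        records.append({
            "ticker": ticker,
            "price": float(price.lstrip("$").replace(",", "")),
            "change_pct": float(change.rstrip("%")),
        })
    return records


if __name__ == "__main__":
    for quote in parse_quotes(SAMPLE_HTML):
        print(quote)
```

A list of dictionaries like this can be passed straight to `pandas.DataFrame(...)` for analysis.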

Using Headless Browsers for Real-Time Scraping

Static scrapers can only capture the initial HTML of a webpage, which leaves out dynamic content loaded via JavaScript or AJAX. That’s where headless browsers come in. These tools fully render webpages, execute JavaScript, and even simulate user actions, making them indispensable for scraping real-time financial data.

Headless browser tools, such as Selenium (which drives Chrome through ChromeDriver), Puppeteer, and Playwright, allow you to access data that traditional scrapers miss. They’re particularly useful for financial sites such as Yahoo Finance or MarketWatch, where stock prices and charts often load after the main page. These tools can handle pop-ups, wait for elements to load, and even fill out forms if needed.

The downside? They’re slower and more resource-intensive than direct HTTP requests. But for websites heavily reliant on JavaScript, they’re worth the trade-off. A smart approach might involve mixing methods - using headless browsers for dynamic content and faster tools like Scrapy for static data.

Another bonus of headless browsers is that they mimic real user behavior, which helps bypass anti-scraping measures. This reduces the risk of getting blocked when gathering data from multiple financial sites throughout the trading day.
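The render-then-wait pattern described in this section might look like the sketch below. It assumes Selenium 4 with a local Chrome install; the URL and CSS selector you pass in are placeholders you would find by inspecting the target page, and `parse_price` is a hypothetical helper, not part of Selenium.

```python
import re


def parse_price(text: str) -> float:
    """Convert rendered price text such as '$1,234.56' into a float."""
    match = re.search(r"\d[\d,]*\.?\d*", text)
    if match is None:
        raise ValueError(f"no price found in {text!r}")
    return float(match.group().replace(",", ""))


def fetch_rendered_price(url: str, css_selector: str, timeout: float = 10.0) -> float:
    """Load a page in headless Chrome and read a price once it is visible."""
    # Imported here so parse_price stays usable without Selenium installed.
    from selenium import webdriver
    from selenium.webdriver.chrome.options import Options
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = Options()
    options.add_argument("--headless=new")  # assumes a recent Chrome version
    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        # Block until JavaScript has rendered the element, or time out.
        element = WebDriverWait(driver, timeout).until(
            EC.visibility_of_element_located((By.CSS_SELECTOR, css_selector))
        )
        return parse_price(element.text)
    finally:
        driver.quit()  # always release the browser process
```

Waiting on a specific element, rather than sleeping for a fixed time, keeps the scraper as fast as the page allows while still tolerating slow loads.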

Using InstantAPI.ai for Efficient Data Scraping

For an even easier way to scrape stock market data, InstantAPI.ai takes care of the heavy lifting. It uses headless Chromium to render web pages and automatically detects when a page is fully loaded, so you don’t have to tweak request timings or worry about missing data.

With its AI-powered system, you can specify exactly what data you need - like ticker symbols, prices, volume, or percentage changes - and it extracts it in real time, regardless of how the website structures its content.

"After trying other options, we were won over by the simplicity of InstantAPI.ai's Web Scraping API. It's fast, easy, and allows us to focus on what matters most - our core features." - Juan, Scalista GmbH

Some standout features of InstantAPI.ai include:

- Worldwide geotargeting: Access region-specific market data.

- Automatic CAPTCHA bypass: No interruptions in data collection.

- Unlimited concurrency: Scrape multiple financial sites at once.

- Proxy management: Built-in IP rotation to avoid blocks and rate limits.

At $2 per 1,000 web pages scraped, the platform’s pay-per-use pricing is budget-friendly, whether you’re analyzing small datasets or collecting data at scale. The price includes all features - JavaScript rendering, proxy management, and AI-powered data extraction - without any hidden fees.

For tasks like gathering historical data or processing multi-page financial reports, InstantAPI.ai’s ability to handle pagination automatically saves time. It delivers structured JSON data that integrates seamlessly into your workflow, so you can focus less on cleaning and formatting and more on making critical market decisions.

Common Challenges in Real-Time Stock Data Scraping

Scraping real-time stock data comes with its fair share of obstacles - both technical and regulatory. Tackling issues like dynamic content loading and legal compliance is crucial to ensure smooth operations and avoid setbacks.

Handling Dynamic Content and AJAX Requests

Modern financial websites often use JavaScript and AJAX to update stock data in real time. This means the initial HTML you see might not include the stock information you’re after - it’s loaded dynamically afterward. To work around this, you can use tools like Chrome DevTools to inspect network activity. By analyzing the Network tab, you can identify XHR requests that fetch JSON data directly from API endpoints. Extracting data this way is often faster and cleaner than parsing fully rendered HTML. For instance, you can replicate these AJAX calls in Python to pull JSON data efficiently.
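Replicating such a call could be sketched as follows, using only the standard library. The endpoint URL and the JSON field names (`symbol`, `price`, `volume`) are assumptions for illustration; the real ones come from whatever you observe in the Network tab.

```python
import json
import urllib.parse
import urllib.request

# Hypothetical endpoint of the kind you might find in the DevTools Network tab.
QUOTE_ENDPOINT = "https://example.com/api/quote"


def parse_quote(payload: str) -> dict:
    """Pick the fields of interest out of the JSON body (field names are assumed)."""
    data = json.loads(payload)
    return {
        "symbol": data["symbol"],
        "price": float(data["price"]),
        "volume": int(data["volume"]),
    }


def fetch_quote(symbol: str, timeout: float = 10.0) -> dict:
    """Call the JSON endpoint directly instead of rendering the page."""
    url = f"{QUOTE_ENDPOINT}?{urllib.parse.urlencode({'symbol': symbol})}"
    request = urllib.request.Request(
        url,
        headers={
            "Accept": "application/json",
            # Many sites mark their AJAX calls with this header.
            "X-Requested-With": "XMLHttpRequest",
        },
    )
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return parse_quote(response.read().decode("utf-8"))


if __name__ == "__main__":
    sample = '{"symbol": "AAPL", "price": "189.25", "volume": 1200}'
    print(parse_quote(sample))
```

Keeping the parsing separate from the network call makes it easy to test against a saved response before pointing the script at a live site.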

If API endpoints aren’t readily available, headless browsers can simulate user interactions to capture rendered content. However, this approach is slower and requires careful management, such as rate-limiting requests and adhering to site terms, to avoid IP bans. These strategies help you adapt to the dynamic nature of financial websites and their frequent updates.

Managing Frequent Website Layout Changes

Financial websites don’t stay the same for long - unexpected layout changes can easily break your scraping setup. Fixed CSS selectors or XPath expressions may stop working overnight. To counter this, AI-powered scraping tools can dynamically analyze the Document Object Model (DOM) and adjust to changes. Machine learning and computer vision techniques can even identify stock prices based on visual cues, like specific colors for gains or losses, regardless of HTML structure.

Additionally, implementing detailed logging and setting up real-time alerts for layout anomalies can help you spot and address issues before they disrupt your data pipeline. Staying proactive with these measures ensures your scraping system remains reliable.
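One simple way to catch a broken selector early is to validate every scraped record and log anything suspicious. The sketch below is an assumption about how such a check could look; the required fields and the 20% jump threshold are illustrative choices, not recommendations.

```python
import logging
from typing import Optional

logger = logging.getLogger("scraper.monitor")

# Fields every record is expected to carry (an illustrative choice).
REQUIRED_FIELDS = ("ticker", "price", "volume")


def find_anomalies(record: dict, last_price: Optional[float] = None,
                   max_jump: float = 0.2) -> list[str]:
    """Return a list of problems that suggest the page layout has changed."""
    problems = []
    for name in REQUIRED_FIELDS:
        if record.get(name) in (None, ""):
            problems.append(f"missing field: {name}")

    price = record.get("price")
    if isinstance(price, (int, float)):
        if price <= 0:
            problems.append("non-positive price")
        elif last_price and abs(price - last_price) / last_price > max_jump:
            problems.append("implausible price jump")
    elif price not in (None, ""):
        problems.append("price is not numeric")

    # A logging handler (email, chat webhook, pager) can turn these into alerts.
    for problem in problems:
        logger.warning("layout anomaly for %s: %s", record.get("ticker", "?"), problem)
    return problems
```

A sudden run of "missing field" warnings for every ticker on one site is a strong hint that its markup changed, rather than that the market did.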

Following Legal and Ethical Guidelines

Navigating the legal side of stock data scraping is just as important as handling technical challenges. Start by respecting a website’s robots.txt file, which outlines the parts of the site that automated tools can access. Ignoring rules like Crawl-delay can lead to IP bans - or worse, legal trouble.
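Python's standard library can check these rules before a request is sent. In the sketch below, the robots.txt content, the `my-bot` user agent, and the example.com URLs are made up for illustration; in practice you would load the live file with `set_url()` and `read()`. Note that `Crawl-delay` is a non-standard directive that not every site publishes.

```python
from urllib.robotparser import RobotFileParser

# Made-up robots.txt content for illustration.
SAMPLE_ROBOTS = """\
User-agent: *
Crawl-delay: 5
Disallow: /private/
"""


def load_rules(robots_txt: str) -> RobotFileParser:
    """Parse robots.txt text into a reusable rule set."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


rules = load_rules(SAMPLE_ROBOTS)

if __name__ == "__main__":
    agent = "my-bot"  # hypothetical user agent name
    print(rules.can_fetch(agent, "https://example.com/quotes/AAPL"))
    print(rules.can_fetch(agent, "https://example.com/private/data"))
    print(rules.crawl_delay(agent))
```

A scraper can call `can_fetch()` before each request and sleep for at least `crawl_delay()` seconds between requests to the same host.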

Many financial websites explicitly prohibit scraping in their Terms of Use agreements. Violating these terms can have serious consequences, especially with recent legal cases highlighting the importance of compliance. If you’re using scraped data for commercial purposes or redistribution, you’ll also need to follow SEC regulations.

Privacy laws like GDPR and CCPA add another layer of complexity. GDPR protects all personal data, no matter where it’s sourced, while CCPA excludes publicly available information drawn from government records. A 2023 study estimated that black hat scraping causes over $100 billion in damages to businesses worldwide each year.

As Amber Zamora wisely puts it:

"The data scraper acts as a good citizen of the web, and does not seek to overburden the targeted website."


Building Scalable Web Scraping Systems

Handling large-scale stock data scraping requires systems that can grow and adapt to demand. By combining distributed architectures, effective data integration, and performance optimization, you can ensure timely and reliable market insights.

Creating Distributed Scraping Systems

To manage the heavy lifting of large-scale scraping, tasks can be divided and run in parallel in containers managed by an orchestration platform like Kubernetes. This allows you to scale up or down based on market activity. For instance, during a busy trading session, you can deploy more scrapers to keep up with the data flow.

Proxy rotation is another essential piece. By considering geographic diversity and response times, you can reduce latencies. For example, accessing data from New York Stock Exchange servers might be faster using proxies located on the East Coast.
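A rotation scheme that also tracks response times could be sketched like this. `ProxyPool` is a hypothetical helper and the proxy addresses are placeholders; the equal-weight smoothing of latency samples is an arbitrary choice.

```python
import itertools


class ProxyPool:
    """Round-robin proxy rotation with simple latency tracking."""

    def __init__(self, proxies):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self._proxies = list(proxies)
        self._cycle = itertools.cycle(self._proxies)
        self._latency = {}

    def next_proxy(self) -> str:
        """Return the next proxy in rotation."""
        return next(self._cycle)

    def record_latency(self, proxy: str, seconds: float) -> None:
        """Blend a new measurement with the previous one (equal weights)."""
        previous = self._latency.get(proxy)
        self._latency[proxy] = seconds if previous is None else 0.5 * previous + 0.5 * seconds

    def fastest(self) -> str:
        """Return the proxy with the lowest recorded latency; unmeasured ones rank last."""
        return min(self._proxies, key=lambda p: self._latency.get(p, float("inf")))


if __name__ == "__main__":
    # Placeholder addresses; substitute your provider's endpoints.
    pool = ProxyPool(["http://us-east.proxy.example:8080", "http://eu-west.proxy.example:8080"])
    print(pool.next_proxy())
```

Plain rotation spreads load evenly, while `fastest()` lets latency-sensitive requests prefer the proxy that has been responding quickest.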

A microservices architecture can also help by letting you scale different components independently. For example, a NASDAQ scraper might need more resources during trading hours, while a news scraper can operate on lighter infrastructure after hours. This setup also makes it easier to update or modify individual scrapers without disrupting the entire system.

For websites that rely heavily on JavaScript, tools like Puppeteer or Selenium are indispensable. The headless browsers they drive can be distributed across multiple containers to handle the resource-intensive task of rendering complex pages. However, they should only be used when simpler HTTP requests can't retrieve the required data.

Once you've set up a distributed scraping system, the next challenge is integrating all that data for real-time analysis.

Data Integration for Real-Time Analysis

Real-time pipelines, such as Apache Kafka, are essential for processing the thousands of updates that stream in every second. These pipelines allow you to handle raw data efficiently and feed it into analytical systems.

Python's Pandas library can help merge diverse datasets on the fly. For example, you can combine stock price data with trading volume, news sentiment, and technical indicators in real time. Choosing the right storage solution is equally important: SQL databases like MySQL are ideal for structured data with clear relationships, while NoSQL databases like MongoDB are better for unstructured data such as news articles or social media content.
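As a rough illustration of merging feeds that arrive at different times, the sketch below uses `pandas.merge_asof` to attach the most recent sentiment score to each price tick. The timestamps, prices, and sentiment values are made-up sample data.

```python
import pandas as pd

# Made-up sample data: price ticks and news sentiment arriving at different times.
prices = pd.DataFrame({
    "time": pd.to_datetime([
        "2024-01-02 09:30:00", "2024-01-02 09:30:05", "2024-01-02 09:30:10",
    ]),
    "ticker": ["AAPL", "AAPL", "AAPL"],
    "price": [185.10, 185.25, 185.05],
})
sentiment = pd.DataFrame({
    "time": pd.to_datetime(["2024-01-02 09:29:50", "2024-01-02 09:30:07"]),
    "ticker": ["AAPL", "AAPL"],
    "sentiment": [0.2, -0.4],
})

# For each tick, take the latest sentiment at or before that time for the same ticker.
merged = pd.merge_asof(
    prices.sort_values("time"),
    sentiment.sort_values("time"),
    on="time",
    by="ticker",
    direction="backward",
)

if __name__ == "__main__":
    print(merged)
```

An as-of join fits this case better than an exact-key merge, because scraped feeds rarely share identical timestamps.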

Cloud services can further simplify scaling during high-volume periods. AWS Lambda can trigger data processing tasks whenever new stock information is scraped, while Google Cloud Dataflow can manage complex data transformations without requiring you to manage the underlying infrastructure.

Caching is another way to improve performance. Frequently accessed data, like information on popular stocks such as Apple or Tesla, can be stored in systems like Redis or Memcached. This reduces the need to repeatedly scrape the same data and helps avoid overloading target websites.
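The caching idea can be sketched with a small in-memory cache that expires entries after a fixed time. This is a stand-in for Redis or Memcached rather than a use of either; `TTLCache` and `get_quote` are hypothetical names, and the `fetch` argument represents whatever scraping function you already have.

```python
import time


class TTLCache:
    """Tiny in-memory cache whose entries expire after ttl_seconds."""

    def __init__(self, ttl_seconds: float, clock=time.monotonic):
        self._ttl = ttl_seconds
        self._clock = clock  # injectable so expiry can be tested without waiting
        self._store = {}

    def get(self, key):
        entry = self._store.get(key)
        if entry is None:
            return None
        value, expires_at = entry
        if self._clock() >= expires_at:
            del self._store[key]  # stale entry: drop it and report a miss
            return None
        return value

    def set(self, key, value) -> None:
        self._store[key] = (value, self._clock() + self._ttl)


def get_quote(ticker: str, cache: TTLCache, fetch):
    """Return a cached quote if it is still fresh, otherwise scrape and cache it."""
    cached = cache.get(ticker)
    if cached is not None:
        return cached
    value = fetch(ticker)
    cache.set(ticker, value)
    return value
```

For real-time prices the expiry has to be short, often a few seconds, so the cache absorbs bursts of repeated lookups without serving stale quotes.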

To take things a step further, optimizing performance means focusing on reducing latency and minimizing errors.

Reducing Latency and Error Rates

Asynchronous programming with Python's asyncio can improve throughput considerably. It lets a single process keep many requests in flight at once instead of waiting for each response before sending the next, speeding up the overall process.
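A minimal sketch of this pattern is shown below. The `fetch_quote` coroutine only simulates network latency with `asyncio.sleep`; in a real scraper it would be replaced by a call through an async HTTP client. The semaphore limit of 5 is an arbitrary example value.

```python
import asyncio


async def fetch_quote(ticker: str) -> dict:
    """Placeholder for a real non-blocking HTTP request."""
    await asyncio.sleep(0.1)  # simulates waiting on the network
    return {"ticker": ticker, "status": "ok"}


async def fetch_all(tickers, max_concurrent: int = 5, fetch=fetch_quote) -> list:
    """Fetch all tickers concurrently, with a cap on requests in flight."""
    semaphore = asyncio.Semaphore(max_concurrent)

    async def bounded(ticker):
        async with semaphore:  # keeps the load on the target site bounded
            return await fetch(ticker)

    # gather() returns results in the same order as the input tickers.
    return await asyncio.gather(*(bounded(t) for t in tickers))


if __name__ == "__main__":
    print(asyncio.run(fetch_all(["AAPL", "TSLA", "MSFT"])))
```

With the simulated delay, three tickers finish in roughly the time of one request, because the waits overlap instead of running back to back.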

When dealing with temporary server issues, exponential backoff retries (e.g., waiting 1, 2, 4, then 8 seconds between successive attempts) can help maintain stability without overwhelming the target site. Intelligent error handling is also essential. By wrapping each individual scrape in a try/except block, you can ensure that a single failure doesn’t disrupt the entire operation.
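Exponential backoff can be sketched in a few lines. `retry_with_backoff` is a hypothetical helper; the set of exceptions treated as temporary and the default of five attempts (giving waits of 1, 2, 4, and 8 seconds) are assumptions to adjust for your HTTP client.

```python
import time


def retry_with_backoff(func, max_attempts: int = 5, base_delay: float = 1.0,
                       retry_on=(ConnectionError, TimeoutError), sleep=time.sleep):
    """Call func(), doubling the wait after each temporary failure."""
    for attempt in range(max_attempts):
        try:
            return func()
        except retry_on:
            if attempt == max_attempts - 1:
                raise  # out of attempts: let the caller handle the error
            sleep(base_delay * 2 ** attempt)  # 1, 2, 4, 8, ... seconds


def scrape_all(tickers, scrape_one) -> dict:
    """Scrape each ticker independently so one failure does not stop the rest."""
    results = {}
    for ticker in tickers:
        try:
            results[ticker] = retry_with_backoff(lambda: scrape_one(ticker))
        except Exception as error:  # record the failure and move on
            results[ticker] = error
    return results
```

Passing `sleep` as a parameter keeps the helper easy to test; many production systems also add random jitter to the delay so that parallel scrapers do not all retry at the same moment.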

HTTP/2 connections are another way to reduce latency. They allow multiple requests to be sent over a single connection, making them particularly effective for scraping multiple data points from the same site.

Real-time monitoring is crucial for identifying and resolving issues before they affect your analysis. Alerts for unusual error rates, spikes in response times, or data anomalies can help you stay ahead of problems. Tools like InstantAPI.ai offer built-in error handling and reliable data extraction, letting you focus more on analysis and less on infrastructure.

AI-based adaptive algorithms can further enhance your system. These algorithms can adjust scraper behavior dynamically, slowing down requests when anti-bot measures are detected or switching to backup data sources when primary feeds are unavailable.

"Optimizing web scrapers involves implementing advanced techniques for more efficient data extraction." - Bright Data

Conclusion: Getting the Most from Real-Time Stock Market Data

Web scraping has reshaped market analysis by delivering real-time, multi-source data, enabling faster and more informed decisions. Sandro Shubladze, CEO and Founder at Datamam, highlights its value: "The ability to gather real-time and historical stock information empowers companies to make data-driven decisions that can significantly impact their competitiveness and profitability."

The financial sector's commitment to this technology is evident, with hedge funds allocating about $2 billion to web scraping software - a clear testament to its return on investment. Access to real-time data allows businesses to monitor trends, track key metrics, and act swiftly in volatile markets, where price changes can occur in seconds.

To put this into perspective, daily financial page scrapes skyrocketed from 10 billion in 2018 to 25 billion in 2022, showcasing the growing reliance on automated data collection. Tools like InstantAPI.ai simplify the process with features like automated IP rotation, CAPTCHA handling, and AI-powered data extraction. At just $2 per 1,000 pages scraped, it delivers structured JSON data that integrates seamlessly into existing systems.

Success in this field hinges on combining efficient tools, ethical practices, and scalable systems. With the web scraping industry projected to reach $5 billion by 2025, mastering these strategies now ensures you're well-positioned to seize future opportunities. By leveraging robust technology and adhering to ethical standards, you gain a competitive edge in the ever-changing financial landscape.

FAQs

What legal and ethical guidelines should I follow when scraping real-time stock market data?

When gathering real-time stock market data through web scraping, it's crucial to adhere to both legal requirements and ethical guidelines.

From a legal standpoint, ensure that the data you're accessing is publicly available and that your actions align with the website's terms of service. Scraping copyrighted material or personal information without proper authorization can lead to violations of regulations like the GDPR or CCPA, so tread carefully.

On the ethical side, it's important to avoid disrupting the target website's functionality. This means keeping your requests at a reasonable frequency to prevent server overload and respecting the guidelines specified in the website's robots.txt file. Transparency is key - steer clear of collecting sensitive or confidential data to uphold trust and maintain integrity in your practices.

What factors should I consider when choosing a web scraping tool for real-time stock market analysis?

When picking a web scraping tool for real-time stock market analysis, start by identifying the type of data you need. For instance, if you’re dealing with dynamic websites powered by JavaScript, tools like Selenium are great for navigating and extracting such content. If you prefer a simpler, no-code option, look for tools with an intuitive, user-friendly interface.

You’ll also want to consider the frequency of data collection. For tasks requiring real-time updates or regular extractions, choose tools that support automation and scheduling features. Lastly, evaluate your technical expertise. Some tools are built for developers comfortable with coding, while others are designed for non-technical users, allowing you to get started without writing a single line of code.

By matching these elements to your specific requirements, you can select a tool that streamlines the process of gathering and analyzing stock market data, keeping you ahead with timely insights.

How can I manage dynamic content and AJAX requests when scraping stock market data?

Scraping stock market data from websites that use dynamic content and AJAX requests can be tricky, but it’s manageable with the right approach. Start by examining the website's network activity using tools like Chrome Developer Tools. This helps you identify how data is being fetched - often through APIs or AJAX calls. Once you pinpoint these sources, you can replicate the requests in your script to access the data directly, bypassing the need to scrape rendered HTML.

For sites that load content dynamically, tools like Selenium can be invaluable. Selenium lets you simulate browser interactions, including waiting for specific elements to load before extracting data. Pairing this with robust error handling ensures your scraping process runs smoothly and minimizes disruptions.

Lastly, always review and respect the website's terms of service to ensure your scraping practices remain ethical and compliant. Cutting corners here can lead to legal or technical challenges, so it’s better to stay on the safe side.