PHP is a practical choice for web scraping, offering tools and techniques to extract data from websites efficiently. Whether you're working on small projects or handling medium-scale scraping tasks, PHP provides libraries like Simple HTML DOM Parser, cURL, and Symfony HttpBrowser to simplify the process. Here's what you need to know:

- Why PHP?
  - Built-in HTTP and HTML parsing functions.
  - Easy integration with web applications.
  - Beginner-friendly for developers.
- Key Libraries:
  - Simple HTML DOM Parser: Ideal for parsing messy HTML with jQuery-like syntax.
  - cURL: Offers low-level control for advanced scraping.
  - Symfony HttpBrowser: A modern replacement for Goutte, supporting DOM navigation and form handling.
- Legal and Ethical Guidelines:
  - Respect robots.txt and Terms of Service.
  - Avoid scraping or republishing copyrighted material without permission.
  - Comply with GDPR/CCPA when collecting personal data.
- Advanced Techniques:
  - Use tools like Puppeteer for JavaScript-heavy websites.
  - Implement rate limiting and rotating proxies to avoid blocks.
  - Handle authentication and cookies for restricted content.
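The first guideline, respecting robots.txt, can be checked in code before each request. The sketch below is a simplified parser, not a full RFC 9309 implementation. It understands User-agent groups and Allow/Disallow prefix rules, ignores wildcards, Crawl-delay and Sitemap lines, and compares $userAgent exactly against the product token (for example MyBot). The function name isPathAllowed is hypothetical.

```php
<?php
// Simplified robots.txt check (a sketch, not a full RFC 9309 parser):
// handles User-agent groups and Allow/Disallow prefix rules only.
// Wildcards (* and $ inside paths), Crawl-delay and Sitemap lines are ignored.
function isPathAllowed(string $robotsTxt, string $userAgent, string $path): bool
{
    $groups = [];          // each group: ['agents' => [...], 'rules' => [[type, prefix], ...]]
    $current = null;       // index of the group currently being filled
    $lastWasAgent = false;

    foreach (preg_split('/\R/', $robotsTxt) as $line) {
        $line = trim(preg_replace('/#.*/', '', $line));   // drop comments
        if ($line === '' || !str_contains($line, ':')) {
            continue;
        }
        [$field, $value] = array_map('trim', explode(':', $line, 2));
        $field = strtolower($field);

        if ($field === 'user-agent') {
            // Consecutive User-agent lines share one group
            if (!$lastWasAgent) {
                $groups[] = ['agents' => [], 'rules' => []];
                $current = array_key_last($groups);
            }
            $groups[$current]['agents'][] = strtolower($value);
            $lastWasAgent = true;
            continue;
        }
        $lastWasAgent = false;
        // An empty Disallow value means "nothing is disallowed", so it adds no rule
        if (($field === 'allow' || $field === 'disallow') && $current !== null && $value !== '') {
            $groups[$current]['rules'][] = [$field, $value];
        }
    }

    // Rules from groups naming this agent; fall back to the "*" group
    $rulesFor = function (string $agent) use ($groups): ?array {
        $matched = false;
        $rules = [];
        foreach ($groups as $group) {
            if (in_array($agent, $group['agents'], true)) {
                $matched = true;
                $rules = array_merge($rules, $group['rules']);
            }
        }
        return $matched ? $rules : null;
    };
    $rules = $rulesFor(strtolower($userAgent)) ?? $rulesFor('*') ?? [];

    // Longest matching prefix decides; Allow wins a tie; no match means allowed
    $allowed = true;
    $bestLength = -1;
    foreach ($rules as [$type, $prefix]) {
        if (str_starts_with($path, $prefix)) {
            $length = strlen($prefix);
            if ($length > $bestLength || ($length === $bestLength && $type === 'allow')) {
                $bestLength = $length;
                $allowed = ($type === 'allow');
            }
        }
    }
    return $allowed;
}
```

In practice you would fetch https://example.com/robots.txt once per host, cache it, and call isPathAllowed() before queuing each URL.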

Quick Comparison of PHP Libraries for Web Scraping

| Library | Best For | Key Features |
|---|---|---|
| Simple HTML DOM Parser | Static HTML sites | Easy syntax, handles malformed HTML |
| cURL | Custom requests | Low-level control, flexible setup |
| Symfony HttpBrowser | Modern scraping | Built-in DOM navigation, form handling |
| Puppeteer (via Node.js) | JavaScript-heavy sites | Renders dynamic content |

PHP lets you build scrapers for various use cases, from static sites to dynamic, JavaScript-driven pages. By combining the right tools and ethical practices, you can extract valuable data efficiently and responsibly.


PHP Libraries for Web Scraping

PHP offers a variety of libraries that make web scraping easier. Let’s dive into three popular options: Goutte, Simple HTML DOM Parser, and cURL.

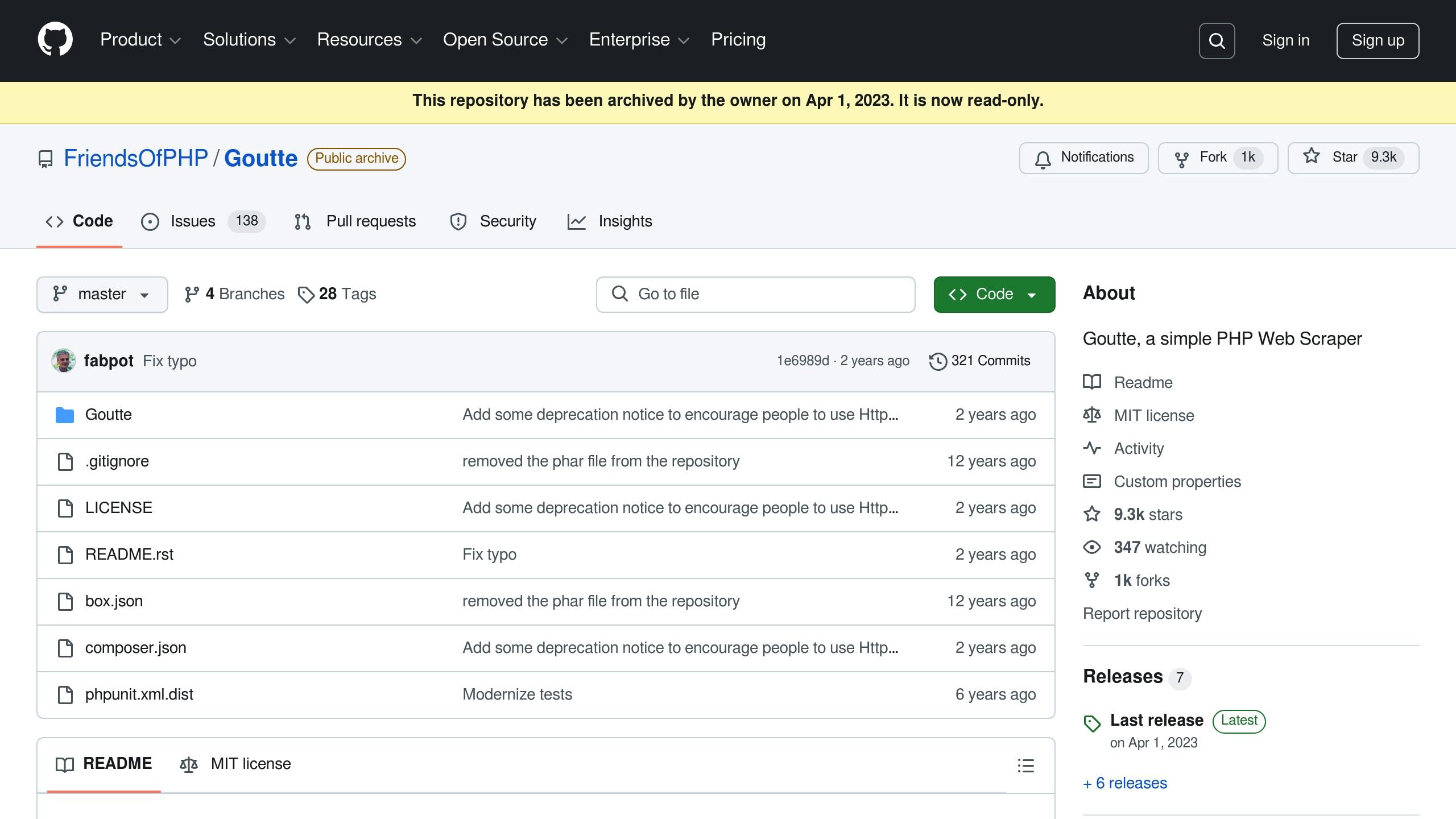

Using Goutte

Goutte was deprecated on April 1, 2023, in favor of Symfony's HttpBrowser. Its last major version (v4) is only a thin proxy to HttpBrowser, so new projects should use HttpBrowser directly. Its feature set carries over to the successor:

| Feature | Description |
|---|---|
| HTTP Handling | Early versions used Guzzle; Goutte 4 and HttpBrowser use Symfony HttpClient |
| DOM Navigation | Uses Symfony DomCrawler for navigating documents |
| Form Handling | Automates form submissions and processing |
| Element Selection | Supports CSS selectors and XPath expressions |

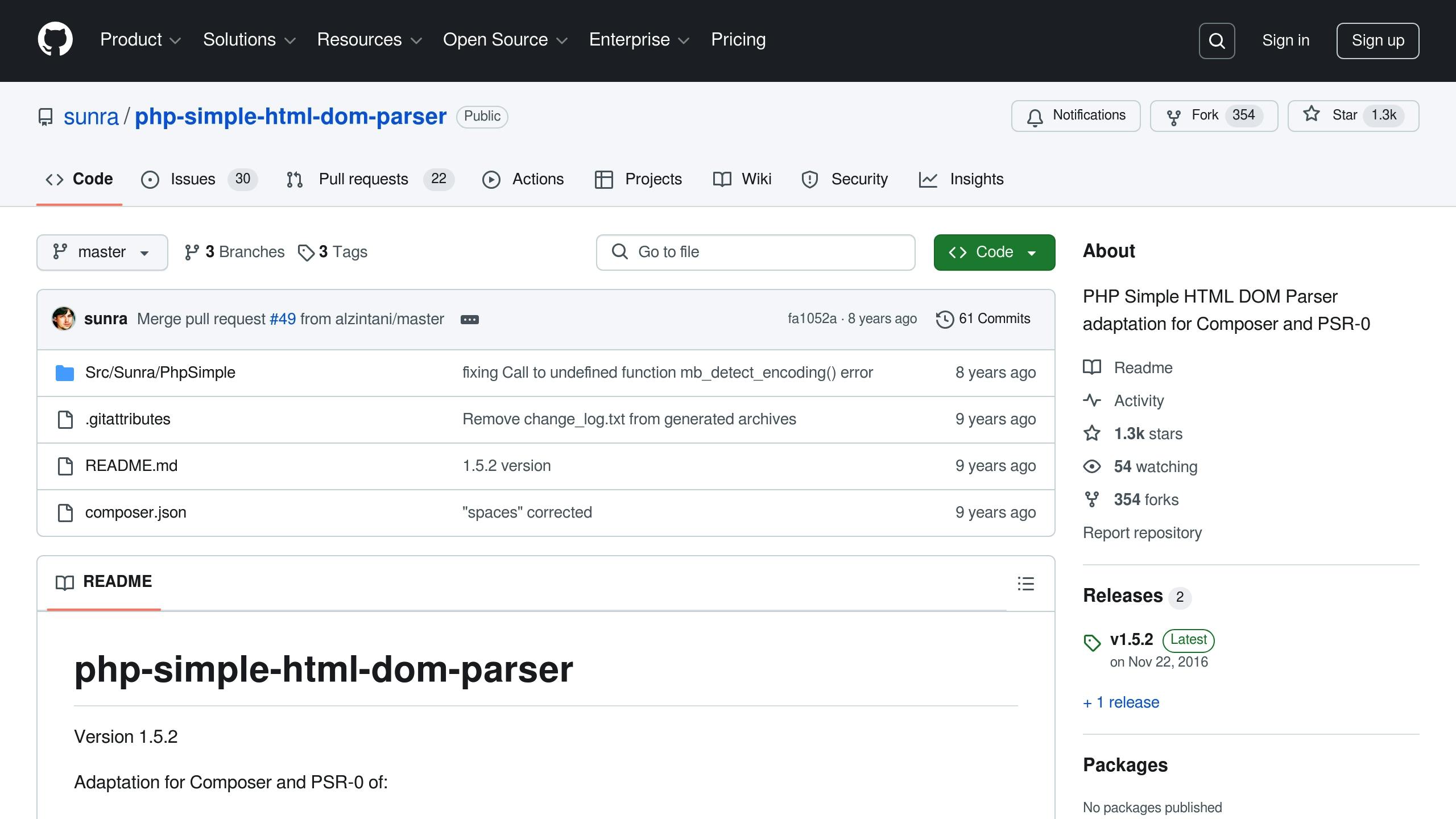

Simple HTML DOM Parser

Simple HTML DOM Parser is a great choice for parsing HTML with a syntax similar to jQuery. It’s especially useful for working with messy or outdated HTML, which makes it perfect for scraping older or poorly formatted websites. The library can parse HTML from both files and strings, giving you flexibility in how you handle data.

Here’s a comparison of Simple HTML DOM Parser versus traditional DOM methods:

| Feature | Simple HTML DOM Parser | Traditional DOM (DOMDocument) |
|---|---|---|
| Handles malformed HTML well | Yes | Limited |
| Memory Usage | Higher | Lower |
| Syntax Style | jQuery-like | Native PHP |
| Learning Curve | Easier | More challenging |
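To illustrate the "Traditional DOM" column, here is a minimal sketch that uses PHP's built-in DOMDocument, which needs no Composer package, on deliberately malformed markup. libxml_use_internal_errors(true) collects the parser warnings instead of printing them. The function name extractLinks is hypothetical.

```php
<?php
// Parse messy HTML with PHP's built-in DOM extension and return every link.
function extractLinks(string $html): array
{
    if ($html === '') {
        return [];   // DOMDocument::loadHTML() rejects an empty string
    }
    $doc = new DOMDocument();
    // Collect libxml warnings about malformed markup instead of printing them
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $links = [];
    foreach ($doc->getElementsByTagName('a') as $anchor) {
        $links[] = [
            'text' => trim($anchor->textContent),
            'href' => $anchor->getAttribute('href'),
        ];
    }
    return $links;
}
```

Without a charset declaration in the markup, loadHTML() assumes ISO-8859-1, so non-ASCII pages usually need a meta charset tag or an explicit conversion first.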

Working with cURL

cURL provides low-level control over HTTP requests and is the backbone of many other scraping tools. While it requires more setup compared to higher-level libraries, it’s incredibly flexible for advanced scraping tasks. Key features include:

- Protocol Support: Works with HTTP, HTTPS, FTP, and more.

- Authentication: Supports multiple authentication methods.

- Cookie Management: Stores and resends session cookies when you set CURLOPT_COOKIEJAR and CURLOPT_COOKIEFILE.

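One of cURL's strengths is its configurability. For example, you can identify your scraper with a descriptive User-Agent and abort requests that hang. This matters when scraping high-traffic websites:

$ch = curl_init('https://example.com');
curl_setopt($ch, CURLOPT_RETURNTRANSFER, true); // return the body instead of printing it
curl_setopt($ch, CURLOPT_USERAGENT, 'Mozilla/5.0 (compatible; MyBot/1.0)');
curl_setopt($ch, CURLOPT_TIMEOUT, 30); // abort after 30 seconds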
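Rate limiting is not a cURL option. Your own code has to enforce it between requests. The sketch below assumes a fixed minimum interval per host. The RateLimiter class is hypothetical and not part of cURL.

```php
<?php
// Hypothetical helper: enforces a minimum delay between requests to the same host.
final class RateLimiter
{
    /** @var array<string, float> time of the last request per host, in seconds */
    private array $lastRequest = [];

    public function __construct(private float $minIntervalSeconds) {}

    // Blocks until at least $minIntervalSeconds have passed since the previous request to this URL's host.
    public function wait(string $url): void
    {
        $host = (string) parse_url($url, PHP_URL_HOST);
        if (isset($this->lastRequest[$host])) {
            $remaining = $this->minIntervalSeconds - (microtime(true) - $this->lastRequest[$host]);
            if ($remaining > 0) {
                usleep((int) round($remaining * 1_000_000));
            }
        }
        $this->lastRequest[$host] = microtime(true);
    }
}
```

Call $limiter->wait($url) immediately before each curl_exec(). Because the limiter tracks each host separately, requests to different sites are not slowed down.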

Whether you need the simplicity of Simple HTML DOM Parser, the control of cURL, or the modern balance of HttpBrowser, these PHP libraries provide the tools you need for effective web scraping.

PHP Setup for Web Scraping

Library Installation Steps

Setting up PHP libraries for web scraping involves configuring and installing the right tools. The simplest way to manage dependencies is by using Composer, PHP's package manager.

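To install Goutte, create a project directory and run:

composer require fabpot/goutte

Because Goutte is deprecated, new projects can install its successor instead: composer require symfony/browser-kit symfony/http-client symfony/css-selector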

For Simple HTML DOM, you have two options:

- Using Composer (this package provides the namespaced simplehtmldom\HtmlWeb class used in the examples below):

composer require simplehtmldom/simplehtmldom

- Manual Installation:

Download simple_html_dom.php and include it in your project with: include_once 'path/to/simple_html_dom.php';

cURL ships as a PHP extension (ext-curl) that most PHP distributions enable by default. Confirm it's loaded with php -m or extension_loaded('curl'). If it's missing, enable it in php.ini with extension=curl.
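A small pre-flight check can report missing extensions before a scrape starts. This is a sketch: the list of required extensions (curl for HTTP, dom and libxml for DOMDocument parsing) is an assumption, and missingExtensions is a hypothetical name.

```php
<?php
// Hypothetical pre-flight check: list required extensions that are not loaded.
function missingExtensions(array $required): array
{
    return array_values(array_filter(
        $required,
        fn (string $name): bool => !extension_loaded($name)
    ));
}

// cURL for HTTP requests, dom/libxml for parsing HTML with DOMDocument
$missing = missingExtensions(['curl', 'dom', 'libxml']);
if ($missing !== []) {
    echo 'Enable these extensions in php.ini: ', implode(', ', $missing), PHP_EOL;
}
```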

PHP Settings

Adjusting PHP settings ensures smooth web scraping, especially when handling large data or long-running tasks. Here are some key configurations:

| Setting | Recommended Value | Purpose |
|---|---|---|
| memory_limit | 256M | Handles larger HTML documents |
| max_execution_time | 60 | Raises the default 30-second limit for longer scraping tasks (CLI scripts already default to 0, meaning no limit) |
| default_socket_timeout | 30 | Prevents hanging connections |

Set these values directly in your script:

ini_set('memory_limit', '256M');

ini_set('max_execution_time', 60);

ini_set('default_socket_timeout', 30);
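ini_get('memory_limit') returns shorthand such as "256M". You have to convert it before you can compare it with memory_get_usage(), which reports bytes. The sketch below does the conversion. The 80% warning threshold and the iniBytes name are assumptions.

```php
<?php
// Convert php.ini shorthand ("256M", "1G", "512K") to bytes; "-1" means no limit.
function iniBytes(string $value): int
{
    $value = trim($value);
    if ($value === '-1') {
        return -1;
    }
    $number = (int) $value;   // leading digits, e.g. 256 from "256M"
    return match (strtolower(substr($value, -1))) {
        'g' => $number * 1024 ** 3,
        'm' => $number * 1024 ** 2,
        'k' => $number * 1024,
        default => $number,
    };
}

// Read back the effective limit and warn when usage passes 80% of it
$limit = iniBytes((string) ini_get('memory_limit'));
$used = memory_get_usage(true);
if ($limit > 0 && $used > 0.8 * $limit) {
    error_log(sprintf('Using %.0f%% of memory_limit', 100 * $used / $limit));
}
```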

After optimizing these settings, focus on implementing effective error handling techniques.

Error Management

Effective error management is key to ensuring stable scraping operations. Use these strategies to handle common issues:

- Enable Error Logging:

error_reporting(E_ALL);
ini_set('log_errors', '1');
ini_set('error_log', 'scraping_errors.log');

- Monitor Memory Usage:

$memoryUsage = memory_get_usage(true);
// Warn at 200 MB, leaving headroom before the 256M memory_limit triggers a fatal error
if ($memoryUsage > 200 * 1024 * 1024) {
    error_log("Memory usage exceeded threshold: " . round($memoryUsage / 1024 / 1024) . " MB");
}

"Optimizing memory usage in PHP is essential for building efficient and scalable applications", says Khouloud Haddad Amamou, Teacher and Full Stack PHP & JavaScript Developer.

- Add Delays Between Requests:

To stay under a site's rate limits, introduce a delay between requests:

function makeRequest(string $url): string|false {
    sleep(2); // 2-second delay before every request
    $ch = curl_init($url);
    curl_setopt($ch, CURLOPT_RETURNTRANSFER, true);
    curl_setopt($ch, CURLOPT_USERAGENT, 'Mozilla/5.0 (compatible; MyBot/1.0)');
    $response = curl_exec($ch);
    if ($response === false) {
        error_log('Request failed: ' . curl_error($ch)); // log cURL's error message
    }
    curl_close($ch);
    return $response;
}

These practices will help you build a more reliable and efficient scraping setup.
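Delays reduce errors but don't eliminate them. Transient failures such as timeouts or HTTP 5xx responses are often worth retrying. This is a minimal sketch of exponential backoff. It assumes the wrapped request function throws an exception on failure, and retryWithBackoff is a hypothetical name.

```php
<?php
// Hypothetical helper: retry a failing operation with exponential backoff.
// $operation receives the attempt number and must throw an exception on failure.
function retryWithBackoff(callable $operation, int $maxAttempts = 3, float $baseDelaySeconds = 1.0): mixed
{
    for ($attempt = 1; ; $attempt++) {
        try {
            return $operation($attempt);
        } catch (Exception $e) {
            if ($attempt >= $maxAttempts) {
                throw $e;   // give up and let the caller handle the last error
            }
            // Delay doubles each time: base, 2 * base, 4 * base, ...
            $delay = $baseDelaySeconds * (2 ** ($attempt - 1));
            error_log(sprintf('Attempt %d failed (%s); retrying in %.2fs', $attempt, $e->getMessage(), $delay));
            usleep((int) round($delay * 1_000_000));
        }
    }
}
```

To use it with the makeRequest() pattern, wrap a variant that throws a RuntimeException when curl_exec() returns false, and pass that variant to retryWithBackoff().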


Creating a Simple Web Scraper

First Scraper Script

Here's a basic PHP scraper using the Simple HTML DOM Parser library:

<?php

require 'vendor/autoload.php';

// Initialize Simple HTML DOM Parser

use simplehtmldom\HtmlWeb;

$html = new HtmlWeb();

// Fetch webpage content

$webpage = $html->load('https://example.com');

// Basic error handling

if (!$webpage) {

die("Failed to load the webpage");

}

// Extract specific content

$title = $webpage->find('title', 0)?->plaintext ?? '(no title)'; // avoid an error on pages without <title>

$headings = $webpage->find('h1');

// Process and display results

echo "Page Title: " . $title . "\n";

foreach ($headings as $heading) {

echo "Heading: " . $heading->plaintext . "\n";

}

?>

This script fetches a webpage, extracts its title, and lists all H1 headings. You can refine it further by focusing on specific data points.

Data Selection Methods

Different methods can be used to target content efficiently:

| Method | Best For | Example Usage |
|---|---|---|
| CSS Selectors | Websites with consistent class names | $webpage->find('.product-title') |
| XPath | Complex HTML structures | $xpath->query('//div[@class="content"]/p'), where $xpath = new DOMXPath($doc) |
| Regular Expressions | Simple text patterns | preg_match('/Price: \$(\d+)/', $content, $matches) |
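The XPath and regex rows assume that a $xpath object and a $content string already exist. The sketch below creates both from a small sample page (the HTML is invented for illustration). It also shows the XPath equivalent of the .product-title CSS selector.

```php
<?php
// Sample markup (hypothetical); a nowdoc keeps "$25" literal
$content = <<<'HTML'
<div class="content">
  <h2 class="product-title">Desk Lamp</h2>
  <p>Price: $25</p>
  <p>In stock</p>
</div>
HTML;

$doc = new DOMDocument();
$previous = libxml_use_internal_errors(true);   // tolerate imperfect markup
$doc->loadHTML($content);
libxml_clear_errors();
libxml_use_internal_errors($previous);

// XPath row: paragraphs directly inside div.content
$xpath = new DOMXPath($doc);
$paragraphs = [];
foreach ($xpath->query('//div[@class="content"]/p') as $p) {
    $paragraphs[] = trim($p->textContent);
}

// XPath equivalent of the CSS selector .product-title (matches one class among several)
$titleNode = $xpath->query('//*[contains(concat(" ", normalize-space(@class), " "), " product-title ")]')->item(0);
$title = $titleNode ? trim($titleNode->textContent) : '';

// Regular-expression row: capture the digits after "Price: $"
$price = null;
if (preg_match('/Price: \$(\d+)/', $content, $matches)) {
    $price = (int) $matches[1];
}
```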

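For websites with dynamic content loaded via JavaScript, you can use Symfony Panther, which drives a real Chrome browser (Chrome and ChromeDriver must be installed):

<?php
require 'vendor/autoload.php';
use Symfony\Component\Panther\Client;
$client = Client::createChromeClient();
$client->request('GET', 'https://example.com');
// Wait (30 seconds by default) until the JavaScript-rendered element exists
$crawler = $client->waitFor('.js-loaded-content');
$dynamicContent = $crawler->filter('.js-loaded-content')->text();
$client->quit(); // shut down the browser
?>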

Content Type Processing

Once you've extracted data, process it based on the content type:

<?php

// HTML content processing

function processHTML($url) {

$html = new \simplehtmldom\HtmlWeb();

return $html->load($url);

}

// JSON content processing

function processJSON($url) {

$json = file_get_contents($url);

return json_decode($json, true);

}

// XML content processing

function processXML($url) {

$xml = simplexml_load_file($url);

return $xml;

}

?>

"Web scraping lets you collect data from web pages across the internet. It's also called web crawling or web data extraction." - Manthan Koolwal

You can also detect the content type dynamically:

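<?php
function detectContentType(string $url): ?string {
    $headers = get_headers($url, true);
    if ($headers === false) {
        return null; // the request failed
    }
    // Normalize header-name case; after redirects the value is an array whose last entry is the final response's
    $type = array_change_key_case($headers, CASE_LOWER)['content-type'] ?? null;
    return is_array($type) ? end($type) : $type;
}
?>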
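A Content-Type value such as "application/json; charset=utf-8" carries parameters, so it is easiest to compare only the media type. The sketch below picks a decoder from that value and falls back to HTML. The function name decodeByContentType and the fallback rule are assumptions.

```php
<?php
// Hypothetical dispatcher: choose a decoder based on a Content-Type header value.
// Returns an array (JSON), SimpleXMLElement (XML) or DOMDocument (HTML fallback).
function decodeByContentType(?string $contentType, string $body): mixed
{
    // Keep only the media type, dropping parameters such as charset
    $mediaType = strtolower(trim(explode(';', $contentType ?? '')[0]));

    if ($mediaType === 'application/json' || str_ends_with($mediaType, '+json')) {
        return json_decode($body, true, 512, JSON_THROW_ON_ERROR);
    }
    if (in_array($mediaType, ['application/xml', 'text/xml'], true) || str_ends_with($mediaType, '+xml')) {
        $xml = simplexml_load_string($body);
        if ($xml === false) {
            throw new RuntimeException('Invalid XML');
        }
        return $xml;
    }
    // Anything else is treated as HTML and parsed with the built-in DOM extension
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true);
    $doc->loadHTML($body);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);
    return $doc;
}
```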

Setting Headers and Debugging

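To make your scraper identifiable, send a User-Agent that names the bot and links to a page explaining it. Then apply the options to a cURL handle:

<?php
$options = [
    CURLOPT_USERAGENT => 'MyBot/1.0 (https://example.com/bot)',
    CURLOPT_FOLLOWLOCATION => true, // follow redirects
    CURLOPT_RETURNTRANSFER => true, // return the body as a string
];
$ch = curl_init('https://example.com');
curl_setopt_array($ch, $options);
$html = curl_exec($ch);
curl_close($ch);
?>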

For debugging, enable error reporting:

<?php

ini_set('display_errors', '1');

ini_set('display_startup_errors', '1');

error_reporting(E_ALL);

?>

These tools and techniques will help you build a scraper that handles a variety of websites and content types effectively.

Advanced Scraping Methods

JavaScript Content Scraping

Many modern websites load their content dynamically using JavaScript, which makes traditional PHP scraping methods insufficient. To handle this, you can use tools that execute JavaScript to render the full page content.

Here’s an example where PHP runs a Node.js Puppeteer script:

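<?php
// Run a Node.js Puppeteer script from PHP
$nodeScript = __DIR__ . '/puppeteer-script.js';
$url = 'https://example.com';
// Escape both arguments so a crafted URL cannot inject shell commands
$output = shell_exec('node ' . escapeshellarg($nodeScript) . ' ' . escapeshellarg($url));
if (!$output) {
    die("Puppeteer script failed");
}
// The script prints {"html": "..."}; extract the rendered HTML string
$renderedHTML = json_decode($output, true)['html'] ?? null;
?>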

The corresponding Puppeteer script (puppeteer-script.js) looks like this:

const puppeteer = require('puppeteer');

const url = process.argv[2];

(async () => {

const browser = await puppeteer.launch();

const page = await browser.newPage();

await page.goto(url, {waitUntil: 'networkidle0'});

const content = await page.content();

console.log(JSON.stringify({html: content}));

await browser.close();

})();

If you prefer to write the browser logic in PHP, PuPHPeteer (nesk/puphpeteer) exposes Puppeteer's API to PHP. It still runs Node.js and Puppeteer under the hood:

<?php

use Nesk\Puphpeteer\Puppeteer;

$puppeteer = new Puppeteer;

$browser = $puppeteer->launch();

$page = $browser->newPage();

$page->goto('https://example.com');

$content = $page->content();

$browser->close();

?>

These approaches are particularly useful for scraping dynamic content that traditional methods cannot access.

Multi-page Data Collection

When scraping data spread across multiple pages, you need to handle pagination and include rate limiting to avoid being flagged as a bot. Here’s an example:

<?php

function scrapePaginatedContent($baseUrl, $maxPages = 5) {

$client = new \GuzzleHttp\Client([

'headers' => ['User-Agent' => 'CustomBot/1.0'],

'http_errors' => false

]);

$collectedData = [];

$currentPage = 1;

while ($currentPage <= $maxPages) {

// Add a delay between requests (1-2 seconds)

usleep(rand(1000000, 2000000));

$url = sprintf("%s?page=%d", $baseUrl, $currentPage);

$response = $client->get($url);

if ($response->getStatusCode() !== 200) {

break;

}

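// Extract and process page content; processPageContent() stands in for your own parser returning an array
$pageData = processPageContent((string) $response->getBody());
if ($pageData === []) {
    break; // an empty page usually means you've run past the last page
}
$collectedData = array_merge($collectedData, $pageData);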

$currentPage++;

}

return $collectedData;

}

?>

This script spaces requests with a random 1–2 second delay and stops after $maxPages pages or at the first non-200 response.
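Building page URLs with sprintf("%s?page=%d") produces a malformed URL when $baseUrl already has a query string such as ?sort=price. The sketch below merges the page number into the existing query instead. The parameter name "page" is an assumption, because sites also use "p", offsets or cursors. The helper drops user info and fragments.

```php
<?php
// Build the URL for page $page, keeping (or overwriting) existing query parameters.
function pageUrl(string $baseUrl, int $page, string $param = 'page'): string
{
    $parts = parse_url($baseUrl);
    if ($parts === false || !isset($parts['scheme'], $parts['host'])) {
        throw new InvalidArgumentException("Not an absolute URL: $baseUrl");
    }
    parse_str($parts['query'] ?? '', $query);   // existing parameters as an array
    $query[$param] = $page;                      // add or overwrite the page number

    $url = $parts['scheme'] . '://' . $parts['host'];
    if (isset($parts['port'])) {
        $url .= ':' . $parts['port'];
    }
    return $url . ($parts['path'] ?? '/') . '?' . http_build_query($query);
}
```

Inside the loop, $url = pageUrl($baseUrl, $currentPage); would replace the sprintf() call.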

Login and Cookie Handling

Some websites require user authentication, which involves managing sessions and cookies. Here’s how you can handle authenticated scraping with PHP:

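<?php
function authenticatedScraping(string $loginUrl, string $targetUrl, array $credentials): string|false {
    // Temporary file where cURL stores the session cookies
    $cookieFile = tempnam(sys_get_temp_dir(), 'CURLCOOKIE');
    $ch = curl_init();
    // Set up login request
    curl_setopt_array($ch, [
        CURLOPT_URL => $loginUrl,
        CURLOPT_POST => true,
        // URL-encode the fields, as a typical HTML login form would
        CURLOPT_POSTFIELDS => http_build_query($credentials),
        CURLOPT_COOKIEJAR => $cookieFile,  // save cookies received
        CURLOPT_COOKIEFILE => $cookieFile, // send stored cookies
        CURLOPT_RETURNTRANSFER => true,
        CURLOPT_FOLLOWLOCATION => true
    ]);
    // Perform login
    $loginResponse = curl_exec($ch);
    if ($loginResponse === false) {
        curl_close($ch);
        unlink($cookieFile);
        return false;
    }
    // Access authenticated content with a plain GET, reusing the session cookies
    curl_setopt_array($ch, [
        CURLOPT_URL => $targetUrl,
        CURLOPT_HTTPGET => true // switch the handle back from POST to GET
    ]);
    $content = curl_exec($ch);
    curl_close($ch);
    unlink($cookieFile);
    return $content;
}
?>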

This method allows you to log in to a site, maintain the session, and scrape content that's only available to authenticated users.
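Many login forms also include hidden fields, often a CSRF token, that must be posted along with the username and password. The sketch below collects them from the login page's HTML. The function name hiddenFormFields is hypothetical, and field names vary by site. You would fetch the login page with the same cookie jar first, so the token matches your session, and then merge the result into $credentials.

```php
<?php
// Collect hidden <input> fields (e.g. a CSRF token) from the first form matching $formXPath.
function hiddenFormFields(string $html, string $formXPath = '//form'): array
{
    if (trim($html) === '') {
        return [];
    }
    $doc = new DOMDocument();
    $previous = libxml_use_internal_errors(true);   // login pages are often imperfect HTML
    $doc->loadHTML($html);
    libxml_clear_errors();
    libxml_use_internal_errors($previous);

    $xpath = new DOMXPath($doc);
    $fields = [];
    // Only the first matching form is inspected
    foreach ($xpath->query('(' . $formXPath . ')[1]//input[@type="hidden"][@name]') as $input) {
        $fields[$input->getAttribute('name')] = $input->getAttribute('value');
    }
    return $fields;
}
```

For example, hiddenFormFields($loginPageHtml) + ['email' => $email, 'password' => $password] produces an array you can pass as $credentials.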

AI Tools for Web Scraping

Enhance your PHP scraping capabilities with AI-driven tools that handle JavaScript rendering and anti-bot systems for you.

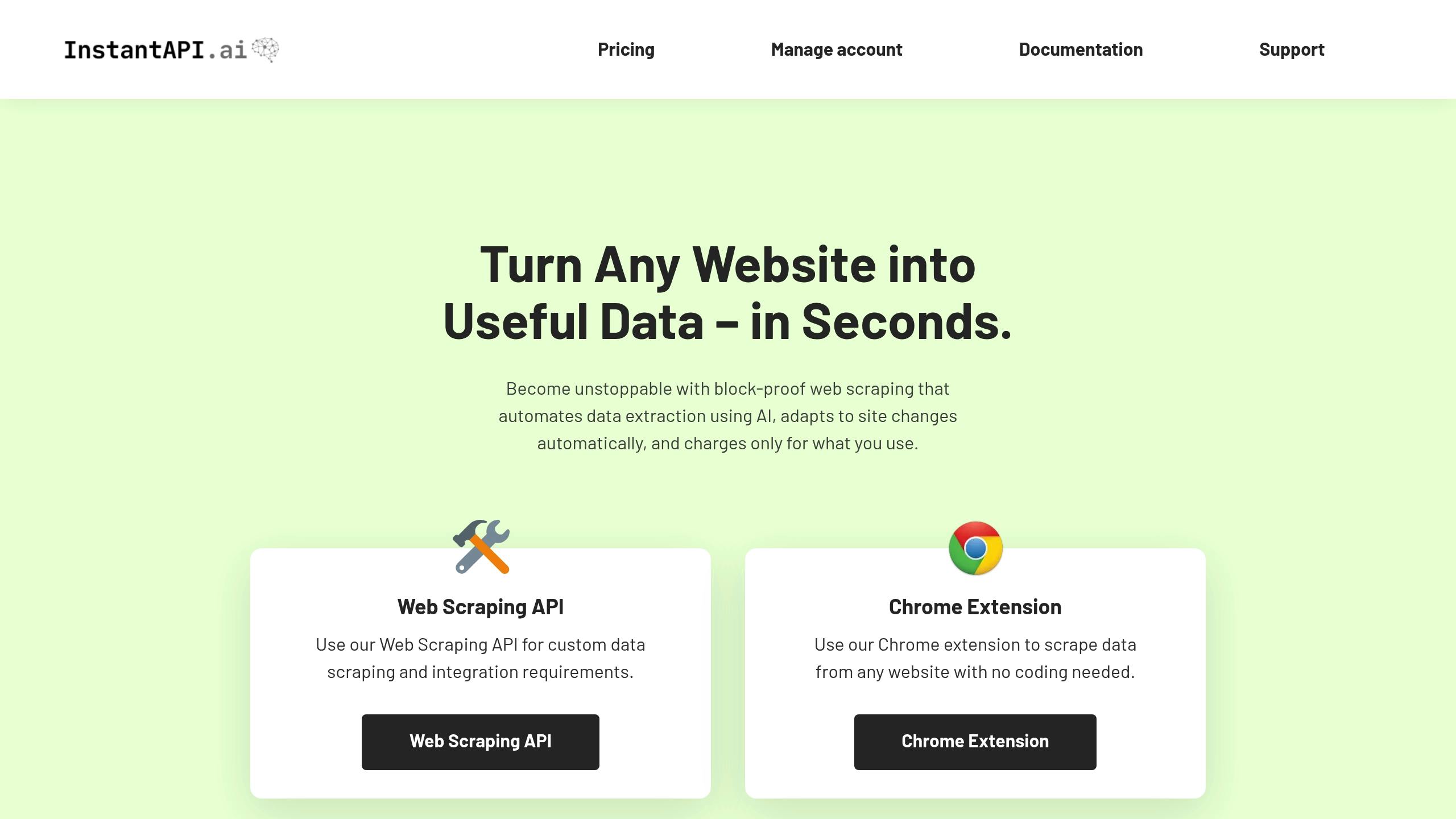

InstantAPI.ai

Here's an example PHP snippet using InstantAPI.ai for web scraping:

$curl = curl_init();

curl_setopt_array($curl, [

CURLOPT_URL => "https://api.instantapi.ai/scrape",

CURLOPT_RETURNTRANSFER => true,

CURLOPT_POST => true,

CURLOPT_POSTFIELDS => json_encode([

"url" => "https://example.com",

"schema" => [

"title" => "h1",

"price" => ".product-price",

"description" => ".product-description"

]

]),

CURLOPT_HTTPHEADER => [

"Authorization: Bearer YOUR_API_KEY",

"Content-Type: application/json"

]

]);

$response = curl_exec($curl);

$data = json_decode($response, true);

curl_close($curl);

Pricing Options

InstantAPI.ai offers plans to suit various scraping needs:

| Plan | Price | Features |
|---|---|---|
| Free Access | $0 | 500 pages/month |
| Full Access | $10/1,000 pages | Unlimited concurrency, no minimum spend |
| Enterprise | Custom | Direct API access, account manager, custom support |

Key Features

Here’s what makes InstantAPI.ai stand out:

1. Smart Data Extraction

- Achieves over 99.99% success in extracting data

- Automatically adjusts to website updates

- Transforms data into the desired format in real-time

2. Anti-blocking Measures

- Uses a pool of over 65 million rotating IPs from 195 countries

- Automates premium proxy rotation

- Handles CAPTCHA challenges with human-like interaction patterns

3. Optimized Performance

- Fully supports JavaScript rendering using headless Chromium

- Allows unlimited concurrent scraping tasks

Real-World Example

One user shared their experience:

"After trying other options, we were won over by the simplicity of InstantAPI.ai's Web Scraping API. It's fast, easy, and allows us to focus on what matters most - our core features."

For instance, when scraping an Amazon product page, the API can fetch product details, ratings, prices, and features in one request. The output is delivered as a well-structured JSON file, ready for PHP integration. This AI-powered solution complements the PHP methods covered earlier, making it a reliable choice for handling dynamic websites.

Summary

Tools Overview

PHP web scraping relies on various libraries, each suited to specific tasks. Simple HTML DOM Parser is great for basic HTML parsing with its CSS selector support, while Goutte (now deprecated in favor of Symfony HttpBrowser) simplifies scraping with a DOM-style interface. Panther renders JavaScript through a real browser. For projects that also need anti-bot measures, InstantAPI.ai offers a hosted solution that includes proxy support.

| Library | Best For | Key Strength |
|---|---|---|
| Goutte | Basic scraping | DOM manipulation |
| Simple HTML DOM | Static sites | CSS selectors |
| cURL | Custom requests | Low-level control |
| Panther | Dynamic content | JavaScript support |
| InstantAPI.ai | Enterprise needs | AI-powered extraction |

Let’s dive into the steps to set up and execute your scraper.

Getting Started Guide

- Environment Setup
  - Install PHP 8.0 or newer.
  - Install Composer and set memory_limit to at least 256M.
- Library Selection
  - Choose libraries based on the site's complexity.
  - For dynamic e-commerce sites, use Panther for JavaScript rendering alongside Simple HTML DOM for data extraction.
- Implementation Strategy
  - Add rate limiting (e.g., 1 request every 2–3 seconds).
  - Use rotating proxies to avoid IP blocks.
  - Incorporate error handling with try-catch blocks.
  - Save data in structured formats like JSON or CSV for easier processing.
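The last step, saving data in structured formats, can be sketched with PHP's built-in json_encode() and fputcsv(). The sketch assumes each scraped row is an associative array and that all rows share the same keys. The function names are hypothetical.

```php
<?php
// Sketch: write scraped rows (associative arrays sharing the same keys) to JSON and CSV.
function saveJson(array $rows, string $path): void
{
    $json = json_encode($rows, JSON_PRETTY_PRINT | JSON_UNESCAPED_SLASHES | JSON_UNESCAPED_UNICODE | JSON_THROW_ON_ERROR);
    if (file_put_contents($path, $json . "\n") === false) {
        throw new RuntimeException("Cannot write $path");
    }
}

function saveCsv(array $rows, string $path): void
{
    $handle = fopen($path, 'w');
    if ($handle === false) {
        throw new RuntimeException("Cannot open $path for writing");
    }
    if ($rows !== []) {
        // Header row from the first record's keys; an empty escape character gives plain "" quoting
        fputcsv($handle, array_keys($rows[array_key_first($rows)]), ',', '"', '');
        foreach ($rows as $row) {
            fputcsv($handle, array_values($row), ',', '"', '');
        }
    }
    fclose($handle);
}
```

For example, saveCsv($collectedData, 'products.csv') would store the output of the pagination example, provided its parser returns one associative array per item.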