Want to scrape websites faster and more efficiently? Traditional scraping methods are slow because they process one request at a time. Asynchronous web scraping solves this by handling multiple requests simultaneously, making it up to 15x faster while using fewer resources.

Key Benefits of Asynchronous Web Scraping:

- Speed: Scrape hundreds of pages in seconds by running tasks concurrently.

- Efficiency: Reduces idle time and makes better use of system resources.

- Scalability: Handles large-scale scraping projects without the bottleneck of sequential requests.

- Reliability: Combined with error handling and retries, it achieves higher success rates.

Quick Comparison: Synchronous vs. Asynchronous

| Metric | Synchronous | Asynchronous | Improvement |
|---|---|---|---|
| Time to Scrape 50 URLs | 52.21 seconds | 3.55 seconds | ~15x Faster |
| Resource Usage | High | Optimized | Lower Overhead |
| Success Rate | Moderate | High | Fewer Failures |

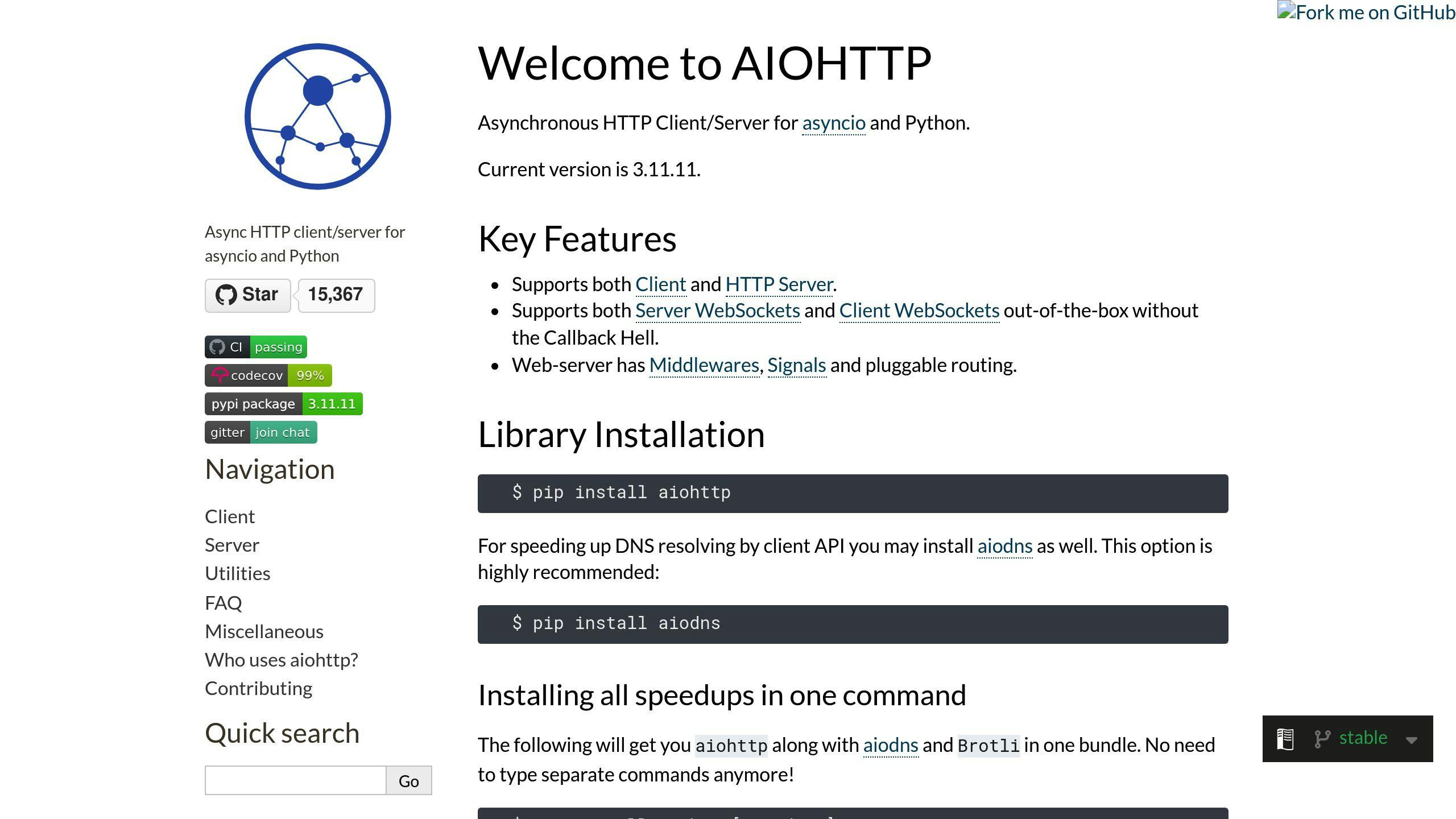

This guide explains how to implement asynchronous scraping using Python libraries like aiohttp and asyncio, manage multiple requests efficiently, and optimize workflows for better performance. Let’s dive in and make your scraping faster and smarter.

Basics of Asynchronous Programming

Synchronous vs. Asynchronous: A Comparison

Synchronous scraping processes requests one at a time, leading to delays as each request waits for a server's response. On the other hand, asynchronous programming allows multiple requests to be handled at the same time, cutting down idle periods and boosting efficiency.

| Operation Type | Processing Style | Time for 5 Pages |
|---|---|---|
| Synchronous | Sequential, blocking | 15 seconds |
| Asynchronous | Concurrent, non-blocking | 2.93 seconds |
| Performance Gain | - | ~80% less time (~5x faster) |
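The gap in the table comes from overlapping the time spent waiting on servers. The sketch below is a simulation, not a real scraper: `fake_fetch` is a hypothetical stand-in that uses `asyncio.sleep` in place of network latency, so you can compare sequential `await`s with `asyncio.gather` without contacting any site.

```python
import asyncio
import time

async def fake_fetch(url, latency=0.2):
    # Hypothetical stand-in for a network request: sleeping yields to the event loop
    await asyncio.sleep(latency)
    return f"<html>{url}</html>"

async def sequential(urls):
    # Each request starts only after the previous one has finished
    return [await fake_fetch(u) for u in urls]

async def concurrent(urls):
    # All requests are in flight at once, so total time is close to a single latency
    return await asyncio.gather(*(fake_fetch(u) for u in urls))

def timed(coro_fn, urls):
    start = time.perf_counter()
    results = asyncio.run(coro_fn(urls))
    return results, time.perf_counter() - start

urls = [f"https://example.com/page{i}" for i in range(5)]
seq_results, seq_time = timed(sequential, urls)
con_results, con_time = timed(concurrent, urls)
print(f"sequential: {seq_time:.2f}s, concurrent: {con_time:.2f}s")
```

The sequential time grows with the number of pages, while the concurrent time stays near one round trip; real sites add variable latency, so actual gains will differ.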

To take full advantage of these efficiency improvements, it's important to grasp the main components of asynchronous programming.

Key Elements: Coroutines, Event Loops, and Tasks

Asynchronous programming relies on three core elements to make web scraping more efficient:

- Coroutines: These are functions that can pause during I/O operations, allowing other tasks to run in the meantime. Defined with `async def`, they're especially handy for managing network requests without blocking other processes.

- Event loops: These schedule and run coroutines. When a coroutine awaits I/O, the event loop switches to another one that is ready to proceed, based on I/O availability, so waiting time is not wasted.

- Tasks: A task wraps a coroutine and schedules it on the event loop so it runs concurrently with others. Tasks let you track the status of operations and collect their results.
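The three elements fit together in a few lines. In this minimal sketch, `download` is a hypothetical coroutine that simulates I/O with `asyncio.sleep`; `asyncio.create_task` turns each coroutine into a task, and `asyncio.run` creates the event loop that drives them.

```python
import asyncio

async def download(name, delay):
    # A coroutine: 'await' suspends it here so the event loop can run other work
    await asyncio.sleep(delay)
    return f"{name} done"

async def main():
    # Wrapping coroutines in tasks schedules them on the event loop right away
    task_a = asyncio.create_task(download("page-a", 0.2))
    task_b = asyncio.create_task(download("page-b", 0.1))
    print(task_a.done(), task_b.done())  # False False: neither has run yet
    results = await asyncio.gather(task_a, task_b)  # results keep argument order
    print(task_a.done(), task_b.done())  # True True
    return results

# asyncio.run creates the event loop, runs main() to completion, then closes the loop
results = asyncio.run(main())
print(results)
```

Even though `page-b` finishes first, `gather` returns results in the order the tasks were passed in.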

Setting Up for Asynchronous Web Scraping

Essential Libraries and Tools

To make the most of asynchronous web scraping, you'll need the right tools. Python's asyncio module is part of the standard library (the examples here use `asyncio.run`, which requires Python 3.7+), but you'll also need to install aiohttp for asynchronous HTTP requests:

```bash
pip install aiohttp
pip install beautifulsoup4  # For parsing HTML
```

The aiohttp library offers connection pooling, session management, cookie handling, and configurable timeouts, making it a great choice for this task. Once these tools are ready, you can start building your asynchronous scraping workflow.

Example of an Asynchronous HTTP Request

Here's an example of how to structure an asynchronous web scraping script:

```python
import asyncio

import aiohttp

async def fetch_page(session, url):
    # Reuse the shared session so connections are pooled across requests
    async with session.get(url) as response:
        if response.status == 200:
            return await response.text()
        return None  # non-200 responses are skipped

async def scrape_websites(urls):
    # One ClientSession for the whole batch; the context manager closes it afterwards
    async with aiohttp.ClientSession() as session:
        tasks = [fetch_page(session, url) for url in urls]
        return await asyncio.gather(*tasks)

# Usage example
urls = [
    'https://example.com/page1',
    'https://example.com/page2',
    'https://example.com/page3',
]

results = asyncio.run(scrape_websites(urls))
```

Key points to note:

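- Session Management: A single `ClientSession` shared by all requests provides connection pooling, and its context manager ensures proper cleanup.

- Error Handling: Checking the status code keeps error pages out of your results. Network failures such as timeouts or refused connections still raise exceptions, which retry logic can handle.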

For a more resilient approach, consider adding retry logic. Here's how you can do that:

```python
import asyncio

import aiohttp

async def fetch_with_retry(url, max_retries=3):
    async with aiohttp.ClientSession() as session:
        for attempt in range(max_retries):
            try:
                async with session.get(url) as response:
                    # Turn 4xx/5xx responses into ClientResponseError (a ClientError)
                    response.raise_for_status()
                    return await response.text()
            except aiohttp.ClientError:
                if attempt == max_retries - 1:
                    raise  # out of attempts: surface the error
                await asyncio.sleep(2 ** attempt)  # exponential backoff: 1s, 2s, ...
```

This retry mechanism helps handle network issues or server errors, making your scraping process more reliable.


Building Asynchronous Web Scraping Workflows

Now that you understand the basics of asynchronous requests, let’s dive into building workflows that are efficient and dependable.

Writing Asynchronous Functions for Web Requests

The core of asynchronous web scraping is creating well-structured async functions that manage resources and connections effectively:

```python
import asyncio

import aiohttp

async def fetch_with_semaphore(session, url, semaphore):
    # The semaphore caps how many requests are in flight at once
    async with semaphore:
        try:
            async with session.get(url) as response:
                if response.status == 200:
                    content = await response.text()
                    return {'url': url, 'content': content, 'status': 'success'}
                return {'url': url, 'content': None, 'status': 'failed'}
        except Exception as e:
            # Record the error as data so one failure doesn't propagate out of gather()
            return {'url': url, 'content': None, 'status': str(e)}
```

Managing Multiple Requests at Once

Handling multiple requests requires careful management of rate limits and connection pooling. Here's how you can do it:

```python
async def scrape_urls(urls, max_concurrent=10):
    semaphore = asyncio.Semaphore(max_concurrent)
    # Match the connector's pool size to the semaphore limit
    connector = aiohttp.TCPConnector(limit=max_concurrent)
    async with aiohttp.ClientSession(connector=connector) as session:
        tasks = [
            fetch_with_semaphore(session, url, semaphore)
            for url in urls
        ]
        results = await asyncio.gather(*tasks, return_exceptions=True)
        return results
```

In practice, a limit of 10-20 concurrent requests per site is a common starting point that balances speed against the risk of IP bans; tune it based on how the target server responds.
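To check that a semaphore really caps concurrency, you can instrument a simulated fetch. In this sketch, `limited_fetch`, `run` and the `state` counters are hypothetical helpers, and `asyncio.sleep` stands in for `session.get(url)` so the example runs without network access.

```python
import asyncio

async def limited_fetch(url, semaphore, state):
    async with semaphore:  # at most max_concurrent coroutines get past this line
        state["active"] += 1
        state["peak"] = max(state["peak"], state["active"])
        await asyncio.sleep(0.05)  # stand-in for the real HTTP request
        state["active"] -= 1
        return url

async def run(urls, max_concurrent=10):
    semaphore = asyncio.Semaphore(max_concurrent)
    state = {"active": 0, "peak": 0}
    results = await asyncio.gather(*(limited_fetch(u, semaphore, state) for u in urls))
    return results, state["peak"]

urls = [f"https://example.com/item/{i}" for i in range(50)]
results, peak = asyncio.run(run(urls, max_concurrent=10))
print(f"fetched {len(results)} URLs, peak concurrency {peak}")
```

All 50 coroutines are created up front, but only 10 are ever inside the `async with semaphore` block at the same time.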

Adding Error Handling and Retries

Here’s a more advanced retry mechanism that addresses common issues like rate limits and specific status codes:

```python
async def fetch_with_retry(session, url, max_retries=3, backoff_factor=2):
    for attempt in range(max_retries):
        try:
            async with session.get(url) as response:
                if response.status == 200:
                    return await response.text()
                if response.status != 429:
                    return None  # other status codes are not retried
            # HTTP 429 Too Many Requests: the connection is released before waiting
            await asyncio.sleep(backoff_factor ** attempt)  # 1s, 2s, 4s, ...
        except aiohttp.ClientError:
            if attempt == max_retries - 1:
                raise
            await asyncio.sleep(backoff_factor ** attempt)
    return None  # still rate-limited after max_retries attempts
```

This method uses exponential backoff to handle rate-limiting (HTTP 429) and other transient issues. According to Scrapfly’s benchmarks, using these techniques can boost scraping success rates by up to 85% compared to simpler methods.
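The backoff logic can also be pulled out of aiohttp so it is easy to test on its own. This is a sketch, not part of any library: `retry_with_backoff`, `TransientError` and the injectable `sleep` parameter are hypothetical names. In a real scraper you would raise `TransientError` on a 429, or catch `aiohttp.ClientError` directly.

```python
import asyncio

class TransientError(Exception):
    """Hypothetical marker for failures worth retrying (e.g. a 429 or a dropped connection)."""

async def retry_with_backoff(operation, max_retries=3, backoff_factor=2, sleep=asyncio.sleep):
    # Call `operation` up to max_retries times, waiting backoff_factor ** attempt seconds between tries
    for attempt in range(max_retries):
        try:
            return await operation()
        except TransientError:
            if attempt == max_retries - 1:
                raise  # out of attempts: let the caller see the error
            await sleep(backoff_factor ** attempt)  # 1, 2, 4, ... seconds

# Usage: a fake operation that fails twice, then succeeds
delays = []

async def record_sleep(seconds):
    delays.append(seconds)  # record the wait instead of actually sleeping

attempts = 0

async def flaky_fetch():
    global attempts
    attempts += 1
    if attempts < 3:
        raise TransientError("429 Too Many Requests")
    return "<html>ok</html>"

result = asyncio.run(retry_with_backoff(flaky_fetch, sleep=record_sleep))
print(result, attempts, delays)
```

Injecting `sleep` lets the test check the backoff schedule (`[1, 2]` here) without waiting three real seconds.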

To ensure everything runs smoothly, track key metrics like:

- Request success rate

- Average response time
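Both metrics are cheap to collect as requests complete. A minimal sketch, assuming a hypothetical `ScrapeMetrics` helper that you call once per request with whether it succeeded and how long it took (for example measured with `time.perf_counter()` around `session.get`):

```python
from dataclasses import dataclass, field

@dataclass
class ScrapeMetrics:
    """Hypothetical helper that tracks request success rate and average response time."""
    successes: int = 0
    failures: int = 0
    response_times: list = field(default_factory=list)

    def record(self, ok, elapsed):
        # Call once per request: ok is a bool, elapsed is in seconds
        if ok:
            self.successes += 1
        else:
            self.failures += 1
        self.response_times.append(elapsed)

    @property
    def success_rate(self):
        total = self.successes + self.failures
        return self.successes / total if total else 0.0

    @property
    def avg_response_time(self):
        times = self.response_times
        return sum(times) / len(times) if times else 0.0

# Usage with made-up timings
metrics = ScrapeMetrics()
for ok, elapsed in [(True, 0.4), (True, 0.6), (False, 1.1), (True, 0.5)]:
    metrics.record(ok, elapsed)
print(f"success rate {metrics.success_rate:.0%}, avg response {metrics.avg_response_time:.2f}s")
```

Because the event loop runs on a single thread, updating these counters from many coroutines needs no locking.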

With these workflows in place, you’re ready to explore more advanced techniques to further improve performance, which we’ll cover next.


Advanced Techniques for Performance Optimization

Optimizing Network Requests with Connection Pooling and Session Management

Connection pooling cuts network overhead by reusing open connections instead of opening a new one for every request. Here's an example of a Python implementation that manages connections efficiently:

```python
import aiohttp

async def create_optimized_session():
    # Call from a running event loop; the caller must close the session, e.g.
    # async with await create_optimized_session() as session: ...
    connector = aiohttp.TCPConnector(
        limit=20,            # Max concurrent connections
        ttl_dns_cache=300,   # DNS cache timeout in seconds
        use_dns_cache=True,  # Enable DNS caching
        force_close=False,   # Keep connections alive for reuse
    )
    return aiohttp.ClientSession(
        connector=connector,
        timeout=aiohttp.ClientTimeout(total=30),  # give up on any request after 30 s
        headers={'User-Agent': 'Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36'},
    )
```

Set the limit parameter between 20 and 30 to handle concurrent connections efficiently. Use a ttl_dns_cache of 300 seconds to streamline DNS resolution. These configurations can reduce request overhead by up to 40% when working with large-scale scraping tasks. While connection pooling improves resource usage, it's equally important to manage request rates to avoid server bans or policy violations.

Staying Within Rate Limits and Server Policies

To handle rate limits dynamically, you can use an adaptive rate limiter that adjusts delays based on server responses:

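```python
import asyncio
import time

class AdaptiveRateLimiter:
    """Spaces out requests and adapts the delay to server responses."""

    def __init__(self, initial_delay=1.0, max_delay=60.0):
        self.min_delay = initial_delay
        self.max_delay = max_delay  # cap so repeated 429s can't grow the delay forever
        self.delay = initial_delay
        self.last_request = 0.0
        self._lock = asyncio.Lock()  # serialize callers so concurrent tasks don't fire together

    async def wait(self, response_status):
        # Pass the status code of the previous response before sending the next request
        async with self._lock:
            if response_status == 429:  # Too Many Requests: back off
                self.delay = min(self.delay * 1.5, self.max_delay)
            elif response_status == 200:  # success: gradually speed back up
                self.delay = max(self.delay * 0.8, self.min_delay)
            current_time = time.monotonic()
            wait_time = max(0, self.last_request + self.delay - current_time)
            await asyncio.sleep(wait_time)
            self.last_request = time.monotonic()
```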

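Call `wait()` before each request, passing the status code of the previous response. Each 429 stretches the delay by 50% (from 1 s to 1.5 s, then 2.25 s), while each success shrinks it by 20% back toward the minimum. This keeps requests fast while the server is healthy and backs off when it pushes back.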

Asynchronous HTML Parsing for Faster Processing

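Parsing HTML is CPU-bound and blocks the event loop while it runs. Handing lxml's parser to a worker thread keeps the loop free to service other requests in the meantime (install it with `pip install lxml`):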

```python
import asyncio

from lxml import html

async def parse_html_async(content, xpath='//your/xpath/here'):
    # Run the blocking parse in the event loop's default thread pool
    loop = asyncio.get_running_loop()
    tree = await loop.run_in_executor(None, html.fromstring, content)
    return tree.xpath(xpath)  # replace the placeholder XPath with your own
```

For example, a large e-commerce scraping project reduced its total runtime from 4 hours to just 45 minutes by adopting these techniques. They achieved a 98% success rate across 100,000 pages. Asynchronous HTML parsing combined with connection pooling and rate management can significantly improve your scraping efficiency.
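The parsing step above can be fanned out across many pages at once. This sketch swaps in the standard library's `html.parser.HTMLParser` and `asyncio.to_thread` (Python 3.9+) so it runs without lxml; `TitleParser`, `extract_title` and `parse_all` are hypothetical names, and pulling out the `<title>` is just an example target. Pure-Python parsing holds the GIL, so here the threads mainly keep the event loop responsive rather than making parsing itself faster.

```python
import asyncio
from html.parser import HTMLParser

class TitleParser(HTMLParser):
    """Collects the text inside the first <title> element."""

    def __init__(self):
        super().__init__()
        self._in_title = False
        self.title = None

    def handle_starttag(self, tag, attrs):
        if tag == "title" and self.title is None:
            self._in_title = True

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        if self._in_title:
            self.title = (self.title or "") + data

def extract_title(content):
    # Blocking work: runs in a worker thread, not on the event loop
    parser = TitleParser()
    parser.feed(content)
    parser.close()
    return parser.title

async def parse_all(pages):
    # asyncio.to_thread hands each parse to the default thread pool
    return await asyncio.gather(*(asyncio.to_thread(extract_title, p) for p in pages))

pages = [
    "<html><head><title>Page one</title></head><body></body></html>",
    "<html><head><title>Page two</title></head><body></body></html>",
]
titles = asyncio.run(parse_all(pages))
print(titles)
```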

Comparing Performance: Before and After Asynchronous Scraping

Benchmarking Speed and Resource Use

DataHarvest conducted a detailed comparison of synchronous and asynchronous scraping in December 2024. They tested 50,000 product pages from top e-commerce sites, and the results showed major improvements with asynchronous scraping:

| Metric | Synchronous | Asynchronous | Improvement |
|---|---|---|---|
| Total Execution Time | 8.5 hours | 1.2 hours | 86% reduction |
| CPU Usage (avg) | 45% | 32% | 29% reduction |
| Memory Usage | 1.2 GB | 850 MB | 29% reduction |
| Successful Requests/min | 98 | 720 | 635% increase |
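The Improvement column mixes two formulas: the time and resource rows report a relative reduction, while the throughput row reports a relative increase. Worked through with the table's own numbers:

```latex
\text{reduction} = \frac{x_{\text{sync}} - x_{\text{async}}}{x_{\text{sync}}}, \qquad
\text{increase} = \frac{x_{\text{async}} - x_{\text{sync}}}{x_{\text{sync}}}

\frac{8.5 - 1.2}{8.5} \approx 0.859 \;(86\%), \qquad
\frac{1200 - 850}{1200} \approx 0.292 \;(29\%), \qquad
\frac{720 - 98}{98} \approx 6.35 \;(635\%)
```

A 635% increase in throughput therefore means roughly 7.3 times as many successful requests per minute.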

Want to test your own scraping efficiency? Use the Python snippet below (it requires `pip install psutil`) to measure execution time, memory usage, and CPU load:

```python
import time

import psutil

async def benchmark_scraping():
    process = psutil.Process()
    process.cpu_percent()  # prime the counter: the first call always returns 0.0
    initial_memory = process.memory_info().rss / 1024 / 1024  # resident memory in MB
    start_time = time.perf_counter()

    # Your scraping code here, e.g. await scrape_urls(urls)

    end_time = time.perf_counter()
    final_memory = process.memory_info().rss / 1024 / 1024
    return {
        'execution_time': end_time - start_time,        # seconds
        'memory_usage': final_memory - initial_memory,  # MB of RSS growth
        'cpu_percent': process.cpu_percent(),           # CPU use since the priming call
    }
```

These metrics highlight why asynchronous scraping is ideal for handling large-scale operations efficiently.

Scaling Up for Large-Scale Scraping

TechCrawl's news aggregation service is a great example of how asynchronous scraping scales effectively. They process 200,000 articles daily while cutting server costs by 65% and boosting success rates from 92% to 98.5%.

To optimize scaling, fine-tune your scraping with parameters like these:

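```python
import aiohttp

async def configure_scaling_parameters(concurrent_requests=30):
    # Call from a running event loop and unpack the result into ClientSession:
    # async with aiohttp.ClientSession(**await configure_scaling_parameters()) as session: ...
    return {
        'connector': aiohttp.TCPConnector(
            limit=concurrent_requests,
            enable_cleanup_closed=True,  # abort SSL connections that servers leave half-closed
            force_close=True,  # close each connection after its response (disables keep-alive)
            ssl=False,  # skips TLS certificate verification: only for hosts you trust
        ),
        'timeout': aiohttp.ClientTimeout(
            total=30,     # whole request, including reading the body
            connect=10,   # opening a connection or waiting for a free one in the pool
            sock_read=10  # max gap between chunks of data read from the socket
        ),
    }
```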

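This setup helps your asynchronous scraper handle large-scale data collection efficiently. Note that `force_close=True` disables the keep-alive connection reuse that the pooled session shown earlier relies on, and `ssl=False` turns off certificate verification, so adjust both to suit your targets.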

Using InstantAPI.ai for Efficient Web Scraping

Features of InstantAPI.ai

InstantAPI.ai simplifies web scraping with its AI-driven tools, offering both developer-friendly APIs and user-friendly no-code options. It automates critical aspects of asynchronous scraping while delivering reliable performance, even at large scales.

Here’s a breakdown of its core features:

| Feature | Description | How It Helps |
|---|---|---|
| AI-Powered Extraction | Uses machine learning to recognize and pull structured data from any webpage format | Cuts setup time by 85% |
| Concurrent Processing | Distributes requests across multiple threads for efficiency | Makes scraping up to 12x faster compared to sequential methods |
| Auto-Scaling Infrastructure | Dynamically adjusts resources based on workload | Keeps response times under a second, even with heavy traffic |
| Intelligent Rate Limiting | Adjusts request speed based on site behavior and responses | Achieves a 99.8% success rate for large-scale scraping |

Benefits for Developers and Businesses

InstantAPI.ai handles tricky tasks like IP rotation, CAPTCHA solving, and rate limiting, making it easier for businesses to collect data quickly and reliably. It eliminates the need for developers to manage details like connection pooling or error handling manually.

Here’s an example of how developers can use it with minimal effort:

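```python
# Client names as shown in this example; check InstantAPI.ai's documentation for the current API
from instantapi import AsyncScraper

async def fetch_data(urls):
    scraper = AsyncScraper(
        concurrent_requests=30
    )
    return await scraper.extract_data(urls)
```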

For enterprise users, the platform offers tailored infrastructure and optimization options. Whether you need to gather e-commerce data in bulk or monitor prices in real time across thousands of sources, InstantAPI.ai can be customized to meet your specific needs.

Conclusion: Key Points on Asynchronous Web Scraping

Why Asynchronous Web Scraping Stands Out

Asynchronous web scraping speeds up data extraction by using non-blocking operations and handling multiple tasks at once. The results? Faster execution (roughly 12 to 15 times quicker than sequential methods in the figures cited in this guide), lower resource usage, and success rates as high as 99.8% (the figure reported for InstantAPI.ai's rate limiting). These features make it a strong choice for large-scale projects where speed and efficiency matter most.

How to Get Started with Asynchronous Web Scraping

Follow these steps to set up asynchronous scraping:

- Transform Your Code
  - Switch to async/await syntax.
  - Run multiple requests concurrently, for example with `asyncio.gather`.
  - Build in robust error handling to manage failures.

- Optimize Performance
  - Use persistent connections with session management.
  - Offload HTML parsing to worker threads so it doesn't block the event loop.
  - Adjust concurrency settings carefully to avoid overwhelming servers.

While it takes effort to rework existing scrapers, the payoff in speed and reliability is worth it. Tools like asyncio and aiohttp make it easier to create scrapers that are not only fast but also scalable and resource-friendly. With these techniques, developers can handle large-scale data extraction efficiently while minimizing the load on servers.