Natural Language Processing (NLP) transforms unstructured text into structured, usable data. It automates processes like identifying entities (e.g., names, dates) and analyzing relationships, making data extraction faster and more accurate. Tools like spaCy (optimized for speed) and NLTK (ideal for research) cater to different needs. Advanced techniques, including deep learning models like BERT and GPT, enable context-aware extraction, scalability, and multilingual processing. Here’s what you’ll learn:

- Key methods: Named Entity Recognition (NER), relationship analysis, and event detection.

- Tools: Comparison of spaCy and NLTK.

- Integration steps: Assess workflows, select tools, and implement NLP efficiently.

- Trends: Real-time processing, hybrid approaches, and multilingual NLP.

| Feature | spaCy | NLTK |
|---|---|---|
| Speed | Fast, production-ready | Slower, research-focused |
| Customization | Streamlined for production | Extensive, flexible options |
| Best Use Case | Large-scale, real-time tasks | Educational and exploratory |

NLP simplifies data extraction, reduces manual effort, and scales for global applications. Ready to integrate it into your workflows?

Key NLP Methods for Extracting Data

Natural Language Processing (NLP) offers tools to turn unstructured text into structured, usable data. Below are some of the key techniques that make this transformation possible.

Named Entity Recognition (NER)

Named Entity Recognition (NER) finds and categorizes entities such as names, locations, and dates within text. It is a fundamental technique for extracting data from text, and its approaches range from simple rule-based systems to deep learning models like BERT.

Here’s a comparison of different NER methods:

| Approach | Strengths | Best Used For |
|---|---|---|
| Rule-based | Precise in specific domains | Well-structured documents |
| Statistical Models | Balanced accuracy and speed | General-purpose extraction |
| Deep Learning | High accuracy, context-aware | Complex, varied text sources |
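To make the rule-based row concrete, here is a minimal Python sketch of a pattern-driven recognizer that uses only the standard library's `re` module. The labels and patterns (`DATE`, `MONEY`, `ORG`) are illustrative assumptions, not spaCy's or NLTK's API. They cover only a handful of formats, which is exactly why rule-based NER works best on well-structured documents.

```python
import re

# Hypothetical patterns for illustration; real documents need broader coverage
# (other date formats, currencies, company suffixes, locales).
PATTERNS = {
    "DATE": re.compile(r"\b\d{4}-\d{2}-\d{2}\b"),
    "MONEY": re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?"),
    "ORG": re.compile(r"\b[A-Z][a-z]+ (?:Inc|Corp|Ltd)\.?"),
}


def rule_based_ner(text):
    """Return (label, matched_text, start, end) tuples ordered by position."""
    entities = []
    for label, pattern in PATTERNS.items():
        for match in pattern.finditer(text):
            entities.append((label, match.group(), match.start(), match.end()))
    return sorted(entities, key=lambda entity: entity[2])


for entity in rule_based_ner("Acme Corp. paid $1,250.00 on 2024-03-15."):
    print(entity)
```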

Once entities are identified, the next step involves analyzing how these entities interact within the text.

Extracting Relationships and Events

Identifying entities is just the beginning. NLP can also determine how entities relate to each other and detect specific events. This process involves:

- Entity Detection: Finding relevant entities in the text.

- Relationship Analysis: Identifying connections between entities.

- Event Construction: Building event profiles with details like participants, actions, and timelines.

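Each layer builds on the one before it: relationships can only be found between entities that were detected, and events combine entities and relationships into a structured record.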
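The three layers (entity detection, relationship analysis, event construction) can be illustrated with a toy Python pipeline. This is a hypothetical sketch: the gazetteer `KNOWN_ENTITIES`, the trigger-verb table `TRIGGERS`, and the `Event` record are made up for illustration. Real systems typically replace each lookup with a trained NER or relation-classification model.

```python
import re
from dataclasses import dataclass, field
from typing import List, Optional

# Hypothetical lookup tables for illustration only.
KNOWN_ENTITIES = {"Acme": "ORG", "Globex": "ORG", "Jane Doe": "PERSON"}
TRIGGERS = {"acquired": "ACQUISITION", "hired": "HIRING"}
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


@dataclass
class Event:
    action: str
    participants: List[str] = field(default_factory=list)
    date: Optional[str] = None


def detect_entities(sentence):
    """Layer 1: find the first occurrence of each known entity as (start, text, label)."""
    found = []
    for name, label in KNOWN_ENTITIES.items():
        start = sentence.find(name)
        if start != -1:
            found.append((start, name, label))
    return sorted(found)


def extract_relation(sentence, entities):
    """Layer 2: link the first two entities if a trigger verb sits between them."""
    if len(entities) < 2:
        return None
    (start1, text1, _), (start2, text2, _) = entities[0], entities[1]
    words_between = sentence[start1 + len(text1):start2].split()
    for verb, relation in TRIGGERS.items():
        if verb in words_between:
            return text1, relation, text2
    return None


def build_event(sentence):
    """Layer 3: combine the relation and any date into an event profile."""
    relation = extract_relation(sentence, detect_entities(sentence))
    if relation is None:
        return None
    date_match = DATE_RE.search(sentence)
    return Event(
        action=relation[1],
        participants=[relation[0], relation[2]],
        date=date_match.group() if date_match else None,
    )


print(build_event("Acme acquired Globex on 2023-11-02."))
```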

Using Templates for Data Extraction

Templates offer a structured way to extract targeted information. They rely on patterns to consistently identify and pull specific data.

Template-based extraction can be achieved through:

- Rule-based Matching: Using predefined patterns to locate and extract data.

- Machine Learning Models: Training algorithms to classify and extract information.

- Hybrid Methods: Combining rules and machine learning for better results.

The success of this method depends on the quality of the templates and the consistency of the text being analyzed. Together, these techniques empower organizations to turn unstructured text into useful insights with precision.
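As a sketch of template-based extraction, the Python example below defines a hypothetical invoice template as a mapping from field names to regular expressions and fills it by rule-based matching. An optional `fallback` callable marks where a trained model could handle fields the rules miss, which is the hybrid case. The field names and patterns are assumptions for illustration.

```python
import re

# A hypothetical invoice template: each field is defined by a labelled pattern
# whose first capture group holds the value.
INVOICE_TEMPLATE = {
    "invoice_number": re.compile(r"Invoice\s*(?:No\.?|#)\s*:?\s*([A-Z0-9-]+)", re.I),
    "total": re.compile(r"Total\s*:?\s*\$?([\d,]+\.\d{2})", re.I),
    "due_date": re.compile(r"Due\s+(?:date\s*)?:?\s*(\d{4}-\d{2}-\d{2})", re.I),
}


def fill_template(text, template, fallback=None):
    """Fill each template field by rule-based matching. Fields the rules miss
    go to fallback(field_name, text) if one is given, otherwise they stay None."""
    record = {}
    for field_name, pattern in template.items():
        match = pattern.search(text)
        if match:
            record[field_name] = match.group(1)
        elif fallback is not None:
            record[field_name] = fallback(field_name, text)
        else:
            record[field_name] = None
    return record


doc = "Invoice #: INV-0042\nTotal: $1,980.00\nDue date: 2024-07-01"
print(fill_template(doc, INVOICE_TEMPLATE))
```

Fields left as `None` are a useful signal in practice: they show where the text deviates from the template and may need review.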

Best Tools and Libraries for NLP

Selecting the right tools for NLP in data extraction can make or break your project's success. Two of the most popular libraries, spaCy and NLTK, cater to different needs, offering unique strengths for various applications.

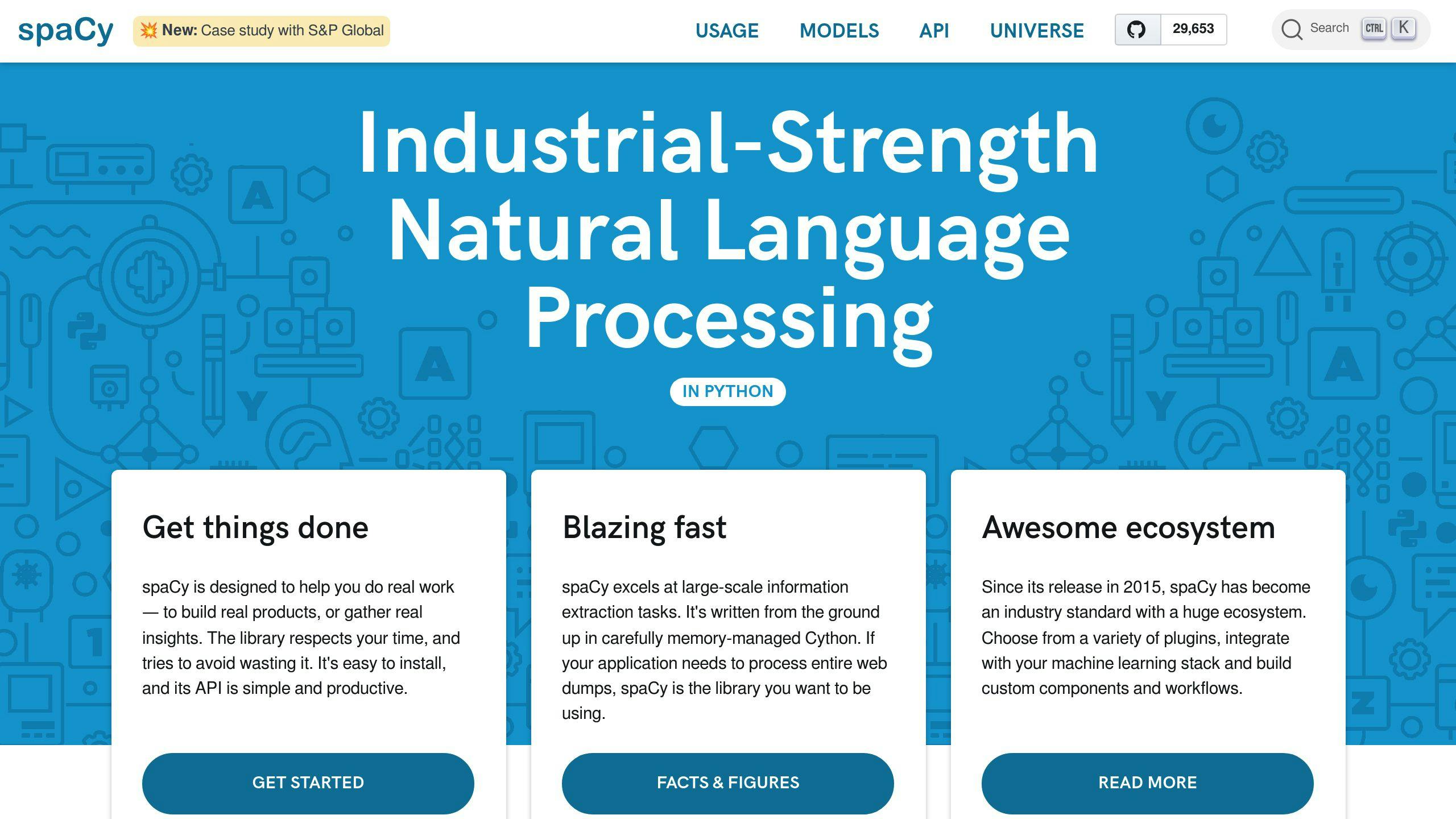

Features of spaCy

spaCy is designed for production environments, focusing on speed and efficiency. Its architecture is optimized for handling large-scale data extraction tasks.

Key features:

- High-performance entity recognition for identifying names, dates, and more

- Dependency parsing to analyze how the words in a sentence relate to one another

- Optimized text classification models for fast processing

- Built for real-time use in production systems

- Designed to be memory-efficient

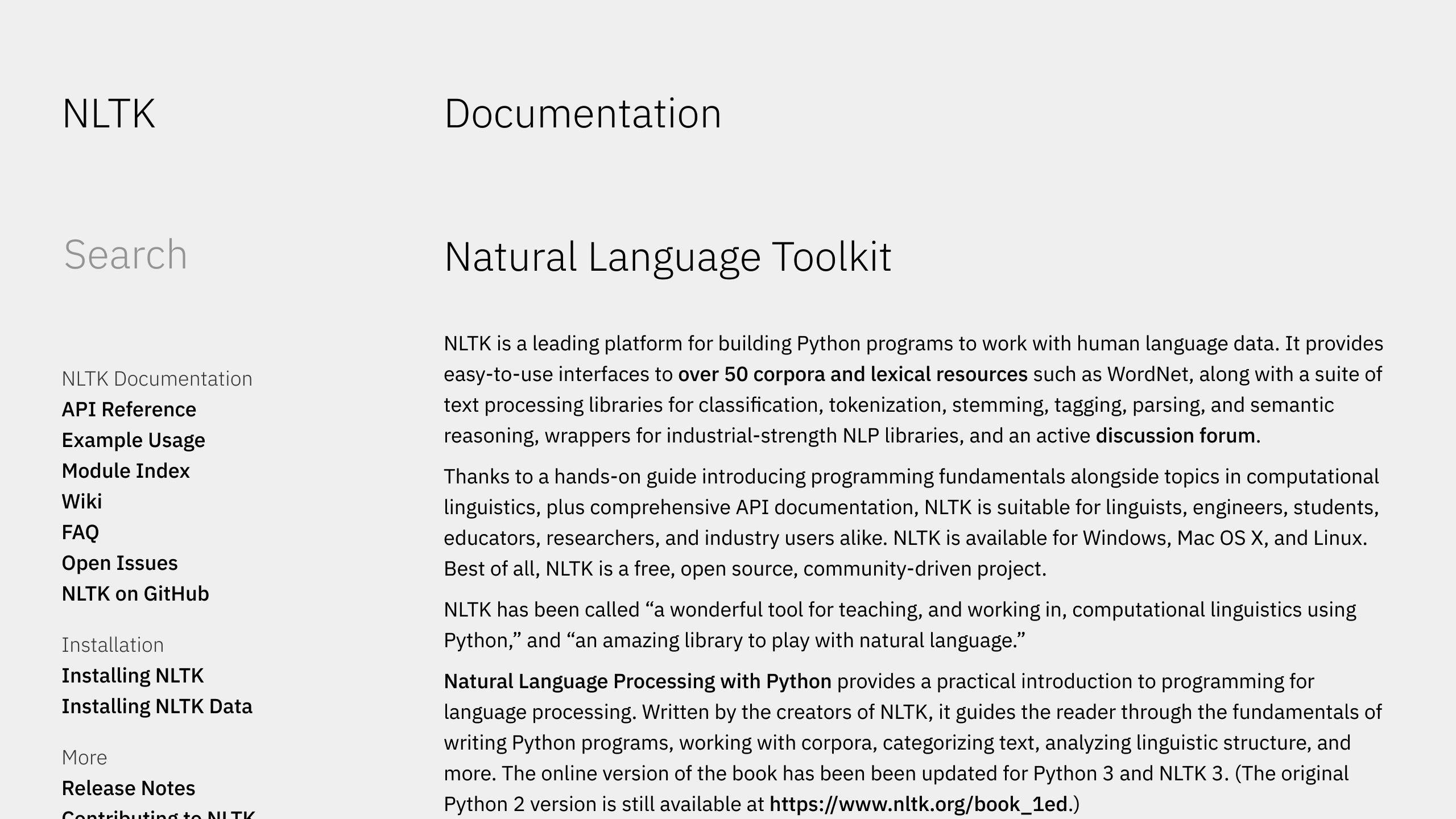

Using NLTK

NLTK shines in academic and research settings, offering a flexible toolkit for exploring language processing concepts. Its modular framework is ideal for experimentation and prototyping.

What NLTK offers:

- Tools for in-depth text analysis and manipulation

- Stemmers and lemmatizers for studying word structures and forms

- A focus on research and educational exploration

- A flexible design for linguistic experiments

- A wide range of text processing algorithms

Comparing spaCy and NLTK

Here’s a quick side-by-side look at their key differences:

| Feature | spaCy | NLTK |
|---|---|---|
| Speed | Built for fast production use | Slower; suited to research and analysis |
| Pre-trained Models | Modern neural network models | Traditional statistical models |
| Customization | Streamlined for production | Extensive, research-focused options |
| Primary Strength | Speed and scalability | Deep linguistic exploration |

If you're working on production-scale tasks where speed matters, spaCy is your go-to. For research-focused projects that require detailed linguistic analysis, NLTK is a better fit. Both libraries can play a crucial role in improving your data extraction workflows, depending on your goals and environment.

Once you've chosen the right tool, the next challenge is integrating NLP seamlessly into your processes to get the most out of your data.

How to Add NLP to Data Extraction Workflows

Examples of NLP in Data Extraction

Natural Language Processing (NLP) techniques, such as Named Entity Recognition (NER), help identify and categorize essential details like company names, dates, and monetary values. By combining rule-based approaches with NLP, you can extract structured data more effectively, handling both straightforward patterns (like phone numbers) and more nuanced, context-driven information. Preprocessing, such as normalizing character encodings and whitespace, gives both the models and the patterns consistent input to work with.
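The rule-based half of that combination, together with a basic preprocessing step, might look like the following Python sketch. It assumes North American phone formats and uses only the standard library (`unicodedata`, `re`). The context-driven half, such as recognizing company names, would come from an NER model and is not shown.

```python
import re
import unicodedata


def preprocess(text):
    """Normalize Unicode compatibility characters (such as non-breaking spaces
    and full-width digits) and collapse runs of whitespace."""
    text = unicodedata.normalize("NFKC", text)
    return re.sub(r"\s+", " ", text).strip()


# Assumed format: North American numbers like (555) 123-4567 or 555-123-4567.
PHONE_RE = re.compile(r"\(?\b\d{3}\)?[-.\s]?\d{3}[-.]\d{4}\b")


def extract_phones(raw_text):
    """Rule-based step: clean the text, then pull out phone numbers."""
    return PHONE_RE.findall(preprocess(raw_text))


raw = "Call\u00a0us at (555)\u00a0123-4567\n  or 555-123-4567."
print(extract_phones(raw))
```

Normalizing first means the extracted values come out with plain spaces, so downstream comparisons and deduplication behave predictably.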

Steps to Integrate NLP with Existing Workflows

Integrating NLP into an existing workflow can be broken into three steps, each aimed at better performance and efficiency.

1. Assessment and Planning

Evaluate your current data extraction process to pinpoint where NLP can make a difference, especially when dealing with unstructured text. Focus on factors like the amount of data, the level of accuracy needed, and how quickly results must be processed.

2. Tool Selection and Setup

Selecting the right tools, such as spaCy or NLTK, is critical for smooth integration. Here's a quick comparison to guide your choice:

| Factor | Consideration |
|---|---|
| Data Volume | Tools like spaCy handle large datasets efficiently. |
| Accuracy | For tasks requiring deep linguistic analysis, NLTK may be more suitable. |
| Processing Speed | Environments needing fast results benefit from optimized tools like spaCy. |
| Compatibility | Ensure the tool works well with your current systems. |

3. Implementation Strategy

Begin with a small-scale project focusing on a specific task. Include preprocessing steps, NER models, and clear metrics for evaluation. Based on the results, you can gradually expand the integration to other areas.
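For the evaluation metrics, a common starting point is exact-match precision, recall, and F1 over extracted entities. The Python sketch below compares predicted and gold `(label, text)` pairs. The sample data is invented for illustration.

```python
def evaluate(predicted, gold):
    """Exact-match precision, recall, and F1 over sets of (label, text) pairs."""
    predicted, gold = set(predicted), set(gold)
    true_positives = len(predicted & gold)
    precision = true_positives / len(predicted) if predicted else 0.0
    recall = true_positives / len(gold) if gold else 0.0
    f1 = (2 * precision * recall / (precision + recall)
          if precision + recall else 0.0)
    return {"precision": precision, "recall": recall, "f1": f1}


# Invented example: the model got the organization and amount right but
# returned the wrong date.
gold = {("ORG", "Acme Corp."), ("MONEY", "$1,250.00"), ("DATE", "2024-03-15")}
predicted = {("ORG", "Acme Corp."), ("MONEY", "$1,250.00"), ("DATE", "March")}
print(evaluate(predicted, gold))
```

Exact matching counts a partially correct span as both a false positive and a false negative; lenient schemes that give credit for overlapping spans are a common alternative.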

Benefits of Using NLP for Data Extraction

NLP streamlines data extraction by improving accuracy and consistency while reducing the need for manual work. It also handles complex language variations more effectively than hand-written rules. Pre-trained models like BERT and GPT can be adapted to new extraction tasks with relatively little labeled data, although they demand more computing resources than lighter pipelines. These tools enable organizations to scale their operations while maintaining high-quality results.

Advanced NLP Techniques and Trends

Deep Learning Models in NLP

Transformer models like BERT and GPT are exceptional at grasping context and pulling detailed, nuanced information from unstructured text. This makes them a great fit for advanced data extraction tasks. By leveraging pre-training and fine-tuning, these models can zero in on context-specific details, such as extracting monetary values with precision.
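Fine-tuned transformer NER models are commonly set up as token classifiers that assign each token a BIO tag: `B-` begins an entity, `I-` continues it, and `O` marks tokens outside any entity. Assuming the model's per-token predictions are already available, the Python sketch below shows only the decoding step that turns tags into entity spans. The tokens and tags are invented, and real pipelines usually rebuild entity text from character offsets rather than joining tokens with spaces.

```python
def decode_bio(tokens, tags):
    """Group BIO-tagged tokens into (label, text) entity spans.

    B-X starts an entity of type X, I-X continues it, and O is outside any
    entity. An I-X that does not continue an X entity starts a new one.
    """
    entities = []
    current_label, current_tokens = None, []
    for token, tag in zip(tokens, tags):
        if tag.startswith("I-") and current_label == tag[2:]:
            current_tokens.append(token)
            continue
        # Any other tag closes the entity in progress.
        if current_label is not None:
            entities.append((current_label, " ".join(current_tokens)))
            current_label, current_tokens = None, []
        if tag.startswith(("B-", "I-")):
            current_label, current_tokens = tag[2:], [token]
    if current_label is not None:
        entities.append((current_label, " ".join(current_tokens)))
    return entities


tokens = ["Acme", "Corp", "will", "pay", "$", "2.5", "million", "in", "May"]
tags = ["B-ORG", "I-ORG", "O", "O", "B-MONEY", "I-MONEY", "I-MONEY", "O", "B-DATE"]
print(decode_bio(tokens, tags))
```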

| Model Capability | Traditional Methods | Advanced NLP Models |
|---|---|---|
| Context Understanding | Limited to predefined rules | Captures deep context |
| Pattern Recognition | Basic pattern matching | Identifies complex relationships |
| Adaptability | Requires manual updates | Easily fine-tuned |
| Language Support | Language-specific rules | Supports multiple languages |

With these capabilities, AI tools powered by such models offer scalable solutions for extracting data from complex and varied text sources.

AI Tools for NLP and Data Extraction

AI tools can improve as they are retrained or fine-tuned on new data, which boosts their accuracy and helps them handle inconsistent text formats. This makes them especially useful for processing documents that don't follow standard formats, and periodic retraining keeps results reliable as real-world data changes.

Trends in NLP for Data Extraction

Multilingual NLP is transforming how global businesses work with data. By enabling unified systems to process content across languages, organizations can extract insights on a global scale without needing separate tools for each language.

Real-time processing is another major shift. NLP pipelines can now process streaming text as it arrives, so businesses can analyze and extract information immediately instead of waiting for periodic batch runs, even at large scale.
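A minimal Python illustration of stream-style extraction uses a generator that yields results line by line as input arrives, without reading the whole source first. Here `io.StringIO` stands in for a real source such as a socket or message queue, and the currency pattern is an assumption for illustration.

```python
import io
import re
from typing import Iterable, Iterator, Tuple

# Illustrative pattern for US-dollar amounts such as $40.00 or $1,200.50.
MONEY_RE = re.compile(r"\$\d+(?:,\d{3})*(?:\.\d{2})?")


def stream_extract(lines: Iterable[str]) -> Iterator[Tuple[int, str]]:
    """Yield (line_number, amount) pairs as soon as each line is read."""
    for line_number, line in enumerate(lines, start=1):
        for match in MONEY_RE.finditer(line):
            yield line_number, match.group()


# io.StringIO stands in for a live stream; iterating it yields one line at a time.
incoming = io.StringIO("Order A: $40.00\nno amount here\nRefund $1,200.50 and $3\n")
for line_number, amount in stream_extract(incoming):
    print(line_number, amount)
```

Because `stream_extract` is a generator, memory use stays flat regardless of stream length, and each result is available as soon as its line has been processed.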

Hybrid approaches that combine NLP with statistical methods are also improving accuracy. These methods are particularly effective in specialized fields where precision and context matter most. For example, statistical techniques can:

- Validate patterns using numerical data

- Assign confidence scores to extracted information

- Detect anomalies in text

- Enhance performance by leveraging historical data
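Two of these ideas, confidence scores and anomaly detection against historical data, can be sketched in a few lines of Python with the standard library. The scoring rule (the share of extractors that agree on a value) and the three-standard-deviation threshold are illustrative choices, not established defaults, and the data is invented.

```python
import statistics
from collections import Counter


def vote_with_confidence(candidates):
    """Majority vote over values proposed by several extractors (for example,
    rules and models). Confidence is the share of extractors that agree."""
    counts = Counter(value for value in candidates if value is not None)
    if not counts:
        return None, 0.0
    value, votes = counts.most_common(1)[0]
    return value, votes / len(candidates)


def is_anomalous(value, history, threshold=3.0):
    """Flag values more than `threshold` standard deviations from the
    historical mean (a simple z-score test; needs at least two data points)."""
    mean = statistics.mean(history)
    stdev = statistics.stdev(history)
    if stdev == 0:
        return value != mean
    return abs(value - mean) / stdev > threshold


# Invented data: totals proposed by three extractors, and past invoice totals.
print(vote_with_confidence(["1,980.00", "1,980.00", "1,890.00"]))
history = [1200.0, 980.0, 1105.0, 1310.0, 1040.0]
print(is_anomalous(1150.0, history), is_anomalous(98000.0, history))
```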

These advancements are paving the way for more efficient and reliable data extraction processes. By embracing these technologies, organizations can process vast amounts of text-based information faster and with greater accuracy, minimizing the need for manual effort.

Conclusion and Final Thoughts

With advancements like deep learning and real-time processing, NLP continues to transform how we extract data. Let’s break down its key contributions.

Key Points Recap

This guide explained how blending traditional NLP methods with modern AI models like BERT improves data extraction by enhancing context understanding, scalability, and accuracy.

| Aspect | Impact on Data Extraction |
|---|---|
| Accuracy & Efficiency | Handles large-scale data processing with precision and speed |
| Scalability | Works across various languages and data formats |
| Integration | Adapts easily to existing workflows |

NLP tools have become more approachable, offering pre-trained models and features that simplify even complex text processing tasks. This opens doors for professionals in many fields to adopt these technologies with less effort.

Advice for Professionals

If you’re planning to use NLP for data extraction, keep these strategies in mind:

- Prioritize Data Quality: Strong preprocessing and cleaning workflows are essential for accurate results.

- Track Performance: Use metrics like precision and recall to monitor how well your models are performing over time.

- Scale Wisely: For larger projects, distributed systems can speed up processing by dividing tasks across multiple machines.
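Multi-machine setups usually rely on a framework such as Apache Spark or Dask. The Python sketch below shows the same split, process, merge pattern on a single machine with the standard library's `concurrent.futures`, with worker processes standing in for machines. The date pattern, chunk size, and worker count are placeholders.

```python
import re
from concurrent.futures import ProcessPoolExecutor, ThreadPoolExecutor

# Placeholder extraction pattern; swap in your real per-document extractor.
DATE_RE = re.compile(r"\b\d{4}-\d{2}-\d{2}\b")


def extract_dates(doc):
    """Per-document extraction step."""
    return DATE_RE.findall(doc)


def process_chunk(chunk):
    """Top-level function so worker processes can pickle it."""
    return [extract_dates(doc) for doc in chunk]


def chunked(items, size):
    """Split the corpus into fixed-size batches to reduce per-task overhead."""
    return [items[i:i + size] for i in range(0, len(items), size)]


def parallel_extract(docs, max_workers=4, chunk_size=100, use_processes=True):
    """Split the corpus, process chunks in parallel, and merge the results
    back in input order (executor.map preserves ordering)."""
    executor_cls = ProcessPoolExecutor if use_processes else ThreadPoolExecutor
    results = []
    with executor_cls(max_workers=max_workers) as executor:
        for chunk_result in executor.map(process_chunk, chunked(docs, chunk_size)):
            results.extend(chunk_result)
    return results


if __name__ == "__main__":
    corpus = [f"Report filed 2024-01-{day:02d}." for day in range(1, 11)]
    print(parallel_extract(corpus, max_workers=2, chunk_size=3)[:2])
```

Processes suit CPU-bound extraction in CPython because threads share the global interpreter lock; `use_processes=False` switches to threads, which fit I/O-bound steps such as calling a remote model API.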

Staying up-to-date with the latest NLP advancements and best practices is crucial. Regularly reviewing your processes ensures they remain effective. Key factors to consider include:

- Preprocessing needs specific to your data

- Choosing the right model for your data size and complexity

- Seamless integration with your current systems

- Ongoing performance monitoring and fine-tuning strategies