Data parsing is how raw, unorganized data is converted into structured formats for easy analysis. It’s essential for tasks like generating reports, integrating APIs, and automating workflows. Tools like Beautiful Soup, Scrapy, and AI-based solutions simplify the process of handling formats like JSON, XML, and HTML.

Key Takeaways:

- Why It Matters: Helps businesses analyze data, automate tasks, and make decisions.

- Who Uses It: Developers, analysts, marketing teams, and business intelligence teams.

- Methods: From basic string functions and RegEx to advanced techniques like machine learning and parallel processing.

- Popular Tools: Beautiful Soup (free), Scrapy (free), Import.io ($299/month), ParseHub ($189/month), InstantAPI.ai ($49/month).

Quick Comparison of Parsing Tools:

| Tool | Best For | Key Features | Starting Price |

|---|---|---|---|

| Beautiful Soup | HTML/XML parsing | Simple syntax, great documentation | Free |

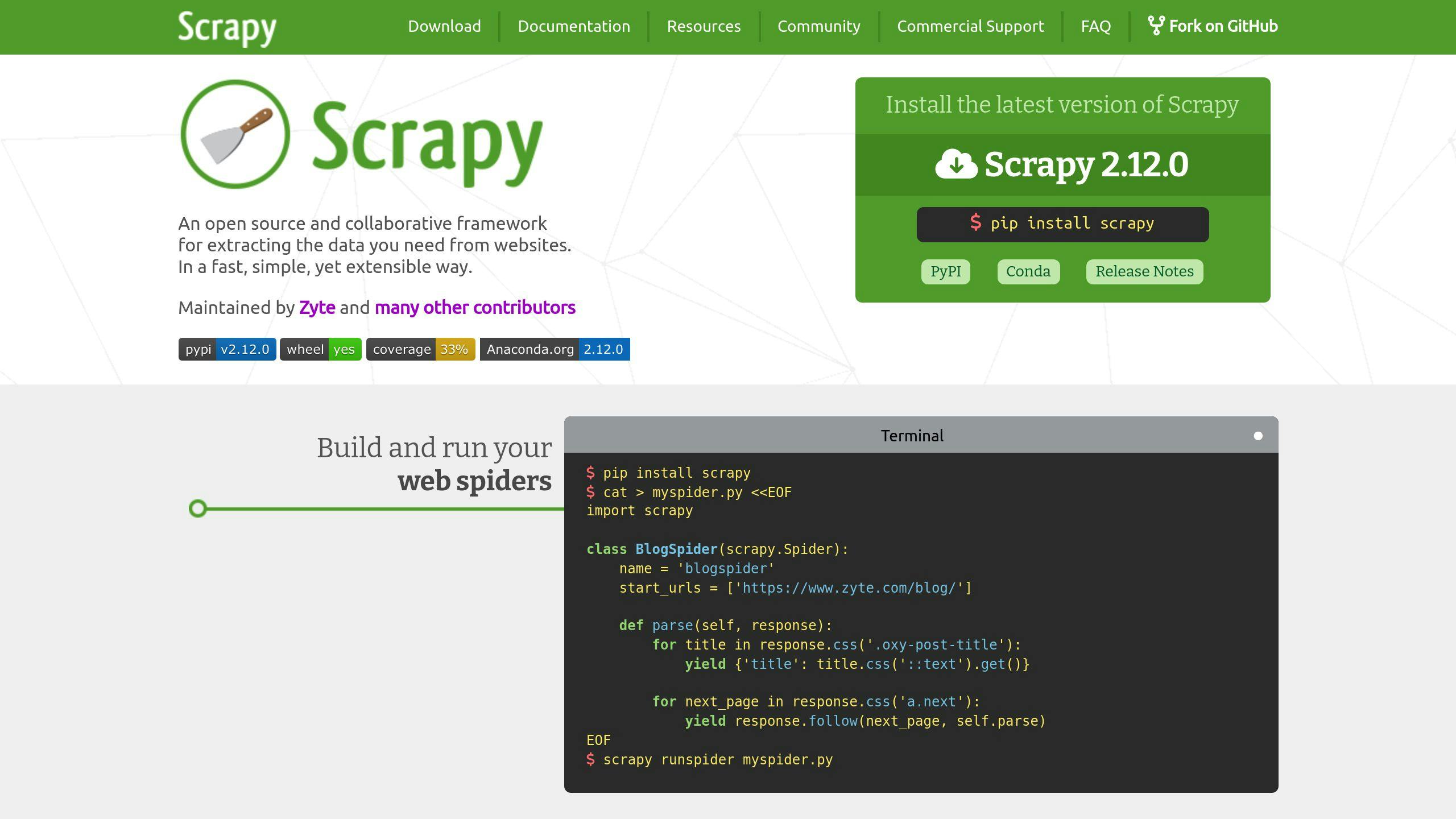

| Scrapy | Large-scale scraping | Parallel processing, custom pipelines | Free |

| Import.io | Enterprise integration | Automated workflows, API access | $299/month |

| ParseHub | Dynamic websites | Handles interactive content | $189/month |

| InstantAPI.ai | AI-based scraping | Self-maintaining extractors | $49/month |

Data parsing is critical for transforming raw information into actionable insights, whether for small projects or large-scale enterprise needs.

Key Methods for Parsing Data

Data parsing involves using different techniques depending on the format of your source data. Let's break down some effective ways to handle everything from basic text to more complex web data.

Using Strings and RegEx for Text Parsing

Text parsing often combines basic string functions with RegEx for more advanced pattern matching. Each method is suited to specific tasks:

| Parsing Method | Best For | Examples |
|---|---|---|
| String Functions | Structured text | Splitting URLs, CSV files |
| Regular Expressions | Complex patterns | Dates, email validation |
| Hybrid Approach | Mixed data types | Analyzing logs, documents |
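To make the hybrid approach concrete, here is a minimal sketch that parses a single log line with both string functions and regular expressions. The log line, its field layout, and the patterns are assumptions made up for illustration; the email pattern is deliberately simple and is not a complete validator.

```python
import re
from urllib.parse import urlsplit, parse_qs

# Hypothetical log line used only for illustration.
LOG_LINE = (
    "2024-03-15 10:42:07 INFO user=alice@example.com "
    "GET https://shop.example.com/items?id=42&ref=mail"
)

# String functions: a fixed, space-delimited prefix splits cleanly.
date_str, time_str, level, rest = LOG_LINE.split(" ", 3)

# Regular expressions: match pieces by pattern rather than by position.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
DATE_RE = re.compile(r"(\d{4})-(\d{2})-(\d{2})")

email = EMAIL_RE.search(rest).group(0)
year, month, day = (int(part) for part in DATE_RE.fullmatch(date_str).groups())

# URL splitting with the standard library instead of hand-rolled slicing.
url = rest.split()[-1]
parts = urlsplit(url)
query = parse_qs(parts.query)

print(level, email, (year, month, day), parts.netloc, query["id"][0])
```

Splitting handles the positional fields cheaply, while the patterns pick out values whose position can vary. For real CSV files, Python's `csv` module is usually a safer choice than `str.split`, because it handles quoted fields that contain the delimiter.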

Parsing XML and JSON Files

XML and JSON are structured formats commonly used in APIs and web services. Parsing these requires specialized libraries that can interpret their syntax and hierarchy. These tools allow you to extract and process data in a way that preserves its structure.
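As a rough illustration, the sketch below reads the same hypothetical order from a JSON payload and an XML payload using Python's standard `json` and `xml.etree.ElementTree` modules. The payloads and field names (`order`, `sku`, `qty`) are invented for the example.

```python
import json
import xml.etree.ElementTree as ET

# Hypothetical payloads describing the same order in both formats.
JSON_TEXT = (
    '{"order": {"id": 1001, "items": '
    '[{"sku": "A1", "qty": 2}, {"sku": "B7", "qty": 1}]}}'
)
XML_TEXT = """
<order id="1001">
  <item sku="A1" qty="2"/>
  <item sku="B7" qty="1"/>
</order>
"""

def items_from_json(text):
    """Return (sku, qty) pairs from the JSON payload."""
    data = json.loads(text)
    return [(item["sku"], item["qty"]) for item in data["order"]["items"]]

def items_from_xml(text):
    """Return (sku, qty) pairs from the XML payload."""
    root = ET.fromstring(text.strip())
    # XML attribute values are always strings, so convert qty explicitly.
    return [(item.get("sku"), int(item.get("qty"))) for item in root.findall("item")]

print(items_from_json(JSON_TEXT))
print(items_from_xml(XML_TEXT))
```

Both functions return the same list of pairs, which shows how the hierarchy survives parsing in either format. One caveat: the Python documentation warns that the standard XML modules are not hardened against maliciously constructed input, so untrusted XML deserves extra care.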

HTML Parsing for Web Data

Parsing HTML is more intricate due to the complexity of web documents. Tools like Beautiful Soup and PyQuery make it easier by helping you navigate the document and extract specific elements.

Key features of HTML parsing include:

- Navigating the structure of the HTML document

- Extracting elements using CSS selectors

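- Handling dynamic content rendered by JavaScript, which usually requires a headless browser or rendering service, since parsers such as Beautiful Soup and PyQuery do not execute scripts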

HTML parsing is especially important for web scraping, where automated tools extract data from websites. Modern scrapers are designed to cope with diverse HTML layouts and dynamic content, although a change in page layout can still break the selectors they rely on.
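The sketch below shows the navigate-and-extract idea on a small, made-up product listing. To stay runnable without extra installs, it uses the standard library's `html.parser` rather than Beautiful Soup or PyQuery; the markup, class names, and the `ProductParser` class are all assumptions for illustration.

```python
from html.parser import HTMLParser

# Hypothetical product listing markup.
HTML_DOC = """
<html><body>
  <ul id="products">
    <li class="product"><a href="/items/1">Keyboard</a> <span class="price">$49.00</span></li>
    <li class="product"><a href="/items/2">Mouse</a> <span class="price">$19.50</span></li>
  </ul>
</body></html>
"""

class ProductParser(HTMLParser):
    """Collect link targets, names, and prices from li.product elements."""

    def __init__(self):
        super().__init__()
        self.products = []
        self._field = None  # which field the next text node belongs to

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "li" and attrs.get("class") == "product":
            self.products.append({"href": None, "name": None, "price": None})
        elif tag == "a" and self.products:
            self.products[-1]["href"] = attrs.get("href")
            self._field = "name"
        elif tag == "span" and attrs.get("class") == "price" and self.products:
            self._field = "price"

    def handle_data(self, data):
        # Store the first non-blank text node after a tag we care about.
        if self._field and data.strip():
            self.products[-1][self._field] = data.strip()
            self._field = None

    def handle_endtag(self, tag):
        if tag in ("a", "span"):
            self._field = None

parser = ProductParser()
parser.feed(HTML_DOC)
parser.close()
for product in parser.products:
    print(product)
```

With Beautiful Soup installed, a CSS selector such as `soup.select("li.product")` would replace most of this event-handling code. Either way, the parser only sees the HTML it is given, so content that JavaScript adds after page load has to be rendered first.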

With these approaches, you can tackle different data formats effectively by selecting the right method and tools for the job.

Tools and Software for Data Parsing

Choosing the right tools is key to efficiently transforming raw data into meaningful insights. Modern parsing tools are equipped to handle various data formats and complexities, making the process smoother and more effective.

Popular Parsing Tools

Beautiful Soup is a go-to option for lightweight HTML and XML parsing. However, for larger projects, Scrapy stands out with its ability to handle large-scale data extraction. If you're looking for enterprise-grade solutions, Import.io (starting at $299/month) offers automated workflows and robust data integration features, while ParseHub (starting at $189/month) is tailored for extracting data from websites with interactive or dynamic elements.

AI-Based Parsing Tools

AI-powered tools are reshaping data parsing by simplifying complex processes. For example, InstantAPI.ai ($49/month) eliminates the need for manual XPath selectors, making data extraction more intuitive and easier to maintain. These tools aim to adapt to changing website structures automatically, which reduces the effort needed to keep extractors accurate.

Comparing Parsing Tools

Here’s a quick comparison of popular parsing tools to help you decide:

| Tool | Best For | Key Features | Starting Price |
|---|---|---|---|
| Beautiful Soup | Basic HTML/XML parsing | Simple syntax, great documentation | Free |
| Scrapy | Large-scale scraping | Parallel processing, custom pipelines | Free |
| Import.io | Enterprise integration | Automated workflows, API access | $299/month |
| ParseHub | Dynamic websites | Handles dynamic content, automation | $189/month |
| InstantAPI.ai | AI-based scraping | Self-maintaining extractors | $49/month |

Each tool has its strengths, catering to different needs. Among the open-source options, Beautiful Soup suits smaller projects, while Scrapy scales to large crawls. Hosted tools like Import.io and ParseHub target teams that need managed workflows, enterprise integration, or support for dynamic sites. AI-based solutions like InstantAPI.ai bring ease and adaptability to the table, making them a good fit when site layouts change often.

Next, we’ll dive into how these tools and techniques can be applied effectively in practical scenarios.

Practical Uses and Guidelines

Data parsing turns raw information into usable insights, making it a powerful tool across various industries. Let’s dive into how it’s applied and the key steps to ensure success.

Applications of Data Parsing

Businesses rely on data parsing to extract insights from different sources. For example, analyzing customer feedback can reveal areas for product improvement. Parsing tools are also used to track competitor pricing, study market trends, and automate data collection.

Another common use is API integration. Parsing formats like JSON or XML allows real-time data synchronization, reporting, and workflow automation.

Here's how different industries make use of data parsing:

| Industry | Application | Business Impact |
|---|---|---|
| E-commerce | Price monitoring & competitor analysis | Fine-tune pricing strategies |
| Finance | Transaction data processing | Streamline reconciliation |
| Marketing | Social media sentiment analysis | Sharpen campaign targeting |
| Healthcare | Electronic health records integration | Improve patient care |
| Manufacturing | Supply chain data processing | Boost inventory efficiency |

These examples show how parsing organizes raw data into structured insights that support better decision-making. To get the most out of data parsing, following best practices is crucial.

Tips for Effective Parsing

Use these strategies to ensure your data parsing workflows are both efficient and accurate.

Data Preprocessing

Start by cleaning your data. Remove duplicates, handle missing values, standardize formats, and ensure data types are consistent. This step lays the groundwork for reliable parsing.
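Here is one way these cleaning steps might look in code. The records, field names, and the two accepted date formats are assumptions for the example, as is the choice to fill a missing `spend` with a default rather than drop the row.

```python
from datetime import datetime

# Hypothetical raw records with duplicates, missing values, and mixed formats.
RAW_ROWS = [
    {"id": "1", "email": " Alice@Example.com ", "signup": "2024-03-15", "spend": "120.50"},
    {"id": "1", "email": "alice@example.com", "signup": "15/03/2024", "spend": "120.5"},
    {"id": "2", "email": "bob@example.com", "signup": "01/02/2024", "spend": ""},
]

DATE_FORMATS = ("%Y-%m-%d", "%d/%m/%Y")  # assumed input formats

def parse_date(text):
    """Try each assumed format and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"unrecognized date: {text!r}")

def clean(rows, default_spend=0.0):
    """Standardize formats, fill missing values, and drop duplicate ids."""
    seen = set()
    cleaned = []
    for row in rows:
        record = {
            "id": int(row["id"]),                       # consistent data type
            "email": row["email"].strip().lower(),      # standardized format
            "signup": parse_date(row["signup"]),        # one date format
            "spend": float(row["spend"]) if row["spend"] else default_spend,
        }
        if record["id"] in seen:
            continue  # keep only the first occurrence of each id
        seen.add(record["id"])
        cleaned.append(record)
    return cleaned

print(clean(RAW_ROWS))
```

Whether to fill, flag, or drop missing values depends on how the data will be used downstream, so treat the default here as a placeholder for that decision.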

Modular Design

Build your parsing system in separate, independent modules. Each module should handle a specific task, like data validation, transformation, or error handling. This makes updates easier and keeps the system flexible for future changes.

Error Management

Set up error-handling mechanisms, such as try-catch blocks (`try`/`except` in Python), to log and address exceptions instead of letting one bad record stop the whole run. When combined with preprocessing and modular design, error management helps keep your parsed data accurate and dependable.
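A minimal sketch of this pattern, assuming the input is JSON Lines (one JSON object per line): each failure is logged with its line number and collected, and parsing continues. The logger name and sample data are hypothetical.

```python
import json
import logging

logging.basicConfig(level=logging.WARNING)
logger = logging.getLogger("parser")  # hypothetical logger name

def parse_lines(lines):
    """Parse JSON Lines input, collecting failures instead of stopping at the first one."""
    records, errors = [], []
    for line_number, line in enumerate(lines, start=1):
        try:
            record = json.loads(line)
            if not isinstance(record, dict):
                raise TypeError(f"expected an object, got {type(record).__name__}")
            records.append(record)
        except (json.JSONDecodeError, TypeError) as exc:
            # Log with enough context to find and fix the bad input later.
            logger.warning("line %d skipped: %s", line_number, exc)
            errors.append((line_number, str(exc)))
    return records, errors

SAMPLE = ['{"sku": "A1", "qty": 2}', '{"sku": "B7", "qty": ', "[1, 2, 3]"]
records, errors = parse_lines(SAMPLE)
print(len(records), "parsed;", len(errors), "skipped")
```

Catching only the exceptions you expect keeps genuine bugs visible, and returning the error list lets the caller decide whether the failure rate is acceptable.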

Validation Techniques

Check for null values, confirm data formats, and cross-reference key points to validate your data. For large-scale parsing, using parallel processing can significantly boost performance.
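The three checks above could be sketched as a single function that returns a list of problems for each record. The required fields, the patterns, and the `known_ids` reference set are assumptions for illustration.

```python
import re

# Simple format checks; the email pattern is not a full RFC validator.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+")
ISO_DATE_RE = re.compile(r"\d{4}-\d{2}-\d{2}")

def validate(record, known_ids):
    """Return a list of problems found in one parsed record (empty if it passes)."""
    problems = []
    # Null checks: every required field must be present and non-empty.
    for field in ("id", "email", "signup"):
        if record.get(field) in (None, ""):
            problems.append(f"missing {field}")
    # Format checks: only run when the value exists.
    if record.get("email") and not EMAIL_RE.fullmatch(record["email"]):
        problems.append("bad email format")
    if record.get("signup") and not ISO_DATE_RE.fullmatch(record["signup"]):
        problems.append("bad date format")
    # Cross-reference: the id must exist in a trusted reference set.
    if record.get("id") is not None and record["id"] not in known_ids:
        problems.append("unknown id")
    return problems

KNOWN_IDS = {1, 2}  # hypothetical reference data
print(validate({"id": 1, "email": "alice@example.com", "signup": "2024-03-15"}, KNOWN_IDS))
print(validate({"id": 3, "email": "not-an-email", "signup": None}, KNOWN_IDS))
```

Returning every problem at once, rather than raising on the first, makes it easier to report data quality issues in bulk.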

Advanced Parsing Techniques and Trends

Machine learning and parallel processing are changing the way we handle and analyze information from a variety of data sources.

Using Machine Learning for Parsing

Machine learning models are excellent at working with unstructured and semi-structured data. They detect patterns that traditional rule-based systems might overlook, making them ideal for handling datasets with varied formats and structures.

NLP (Natural Language Processing) models play a key role in parsing text-based data. They identify important entities, understand context, manage language differences, and adjust to new patterns without needing constant manual updates.

For example:

- Financial institutions rely on ML models to parse transaction data and detect fraud.

- Search engines use them to analyze and categorize web content effectively.

When implementing ML-based parsing, keep these factors in mind:

| Consideration | Impact | Implementation Focus |
|---|---|---|
| Data Quality | Affects model accuracy | Ensure high-quality input |
| Model Selection | Influences parsing ability | Choose task-specific algorithms |
| Resource Requirements | Impacts processing speed | Plan computing infrastructure |
| Scalability | Supports future growth | Design adaptable architecture |

With these considerations addressed, ML-based parsers can turn raw data into structured information that helps businesses make informed decisions.

Scaling Parsing with Parallel Processing

Parallel processing speeds up large-scale parsing by splitting the workload across multiple processors. The gain is greatest with massive datasets, where a single process would take too long to work through the input.

Frameworks like Apache Spark and Hadoop make distributed parsing easier. They divide large tasks into smaller pieces that can be handled at the same time.

Key benefits of parallel processing include:

- Faster task execution by sharing the load.

- Better resource utilization.

- Improved reliability through fault tolerance.

- The ability to scale up for growing datasets.

Data partitioning is central to parallel processing, allowing tasks to run simultaneously and avoid bottlenecks. To ensure success, focus on error handling, balancing workloads, and reassembling data consistently across processors.
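On a single machine, the partition, process, and reassemble cycle can be sketched with Python's `concurrent.futures`. This is a small-scale stand-in for what Spark or Hadoop do across a cluster, not their API; the `key=value` input format, chunk size, and function names are assumptions for the example.

```python
from concurrent.futures import ThreadPoolExecutor

def partition(items, chunk_size):
    """Split a list into consecutive chunks of at most chunk_size items."""
    return [items[i:i + chunk_size] for i in range(0, len(items), chunk_size)]

def parse_chunk(lines):
    """Parse one chunk of 'key=value' lines; bad lines are returned, not raised."""
    parsed, failed = [], []
    for line in lines:
        key, sep, value = line.partition("=")
        if sep and key:
            parsed.append((key.strip(), value.strip()))
        else:
            failed.append(line)
    return parsed, failed

def parse_in_parallel(lines, chunk_size=2, workers=4):
    """Parse chunks concurrently and reassemble results in the original order."""
    parsed, failed = [], []
    with ThreadPoolExecutor(max_workers=workers) as pool:
        # Executor.map yields results in submission order, so reassembly is deterministic.
        for chunk_parsed, chunk_failed in pool.map(parse_chunk, partition(lines, chunk_size)):
            parsed.extend(chunk_parsed)
            failed.extend(chunk_failed)
    return parsed, failed

LINES = ["a=1", "b = 2", "broken", "c=3", "d=4"]
print(parse_in_parallel(LINES))
```

Threads keep the sketch simple, but in CPython they do not run CPU-bound Python code in parallel. For CPU-heavy parsing, `ProcessPoolExecutor` offers the same `map` interface, provided the worker function is defined at module level so it can be sent to worker processes. Equal-sized chunks are the simplest form of workload balancing; uneven records may call for smaller chunks.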

Conclusion

Data parsing has transformed how organizations process and utilize their data, making it faster and more accessible. With tools like AI and parallel processing, businesses can now handle even the most complex and varied data sources efficiently.

Modern approaches combine traditional techniques like regex with cutting-edge technologies, offering quicker and more precise data extraction. AI and parallel processing, in particular, simplify workflows and help companies manage increasing data demands with ease.

When implementing parsing solutions, it's important to keep the following in mind:

- The structure and format of your data sources

- The volume of data to be processed

- Automation capabilities required

- Accuracy and quality expectations