Single-threaded web scraping is slow because it processes one request at a time, leaving your CPU idle while it waits on the network. Multi-threading addresses this by running multiple requests concurrently, so one thread's wait overlaps with another's work. For I/O-heavy tasks like scraping web pages, that can cut total run time dramatically.

Key Benefits of Multi-threading:

- Faster Data Collection: Handle multiple requests at once, reducing delays.

- Efficient Resource Use: Keeps your CPU busy during network wait times.

- Scalable Performance: Add more threads to process larger datasets.

Example: Airbnb reportedly reduced scraping time for 10,000 listings from 3 hours to 28 minutes by switching to a 20-thread system, and credited the fresher data with a 7% improvement in booking conversions.

Quick Comparison: Single-threaded vs Multi-threaded Scraping

| Aspect | Single-Threaded | Multi-Threaded |
|---|---|---|
| Processing Style | Sequential | Concurrent |
| Resource Usage | Poor CPU utilization | Efficient resource use |
| Network Handling | Delayed by slow responses | Keeps working while slow responses are pending |
| Scalability | Limited | Grows with thread count, up to network and target-site limits |

To implement multi-threading in Python, use tools like ThreadPoolExecutor for managing threads, handle shared data with thread-safe structures, and optimize performance with proxy rotation and error handling. Multi-threading is ideal for I/O-bound tasks, even with Python's Global Interpreter Lock (GIL), as it allows concurrent network operations.

Whether you build a custom scraper or use SaaS platforms, multi-threading is key to faster, more reliable web scraping.


Multi-threading Basics

Learn how to use Python threading to make your web scraping tasks faster and more efficient. Here's a breakdown of the key concepts you need to know.

Threads vs Processes: The Key Differences

Choosing between threads and processes can greatly influence your scraping performance. Threads operate within the same memory space of a process, making them lightweight and ideal for web scraping. This shared memory setup allows threads to easily access resources like connection pools and cached data.

| Feature | Threads | Processes |
|---|---|---|
| Memory Usage | Shared memory space | Separate memory spaces |
| Resource Sharing | Direct and simple | Requires inter-process communication |
| Creation Speed | Fast and lightweight | Slower and more resource-heavy |
| Scalability | Can support hundreds | Limited by system resources |
| Best Use Case | I/O-bound tasks | CPU-intensive tasks |

A real-world example: Nick Becker's 2019 project showed that using ThreadPoolExecutor with 30 threads reduced the download time for multiple Hacker News stories from 11.9 seconds to just 1.4 seconds, an 88% reduction. Knowing these distinctions is crucial before diving into Python threading, especially with its Global Interpreter Lock (GIL).
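To make the shared-memory point concrete, here is a minimal sketch in which several threads read and write one in-process cache. The `fetch_page` function, the cache layout, and the URLs are hypothetical stand-ins, and no network call is made. With processes, the same cache would have to be shared through some form of inter-process communication.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

# One cache object, visible to every thread in the process
page_cache = {}
cache_lock = threading.Lock()
stats = {"downloads": 0}  # how many times the simulated download ran


def fetch_page(url):
    """Hypothetical stand-in for a real download; returns fake HTML."""
    with cache_lock:
        if url in page_cache:
            return page_cache[url]  # another thread already stored it
        stats["downloads"] += 1
        page_cache[url] = f"<html>{url}</html>"
        return page_cache[url]


# The same three URLs are requested four times each
urls = ["https://example.com/a", "https://example.com/b", "https://example.com/c"] * 4

with ThreadPoolExecutor(max_workers=4) as executor:
    pages = list(executor.map(fetch_page, urls))

print(f"{len(pages)} requests served, {stats['downloads']} simulated downloads")
```

Holding the lock for the whole lookup keeps the sketch short. A real scraper would likely release it while the download is in progress so that threads fetching different URLs do not wait on each other.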

Python's GIL: What You Need to Know

The Global Interpreter Lock (GIL) in Python often confuses developers when it comes to threading. Here's the deal: the GIL allows only one thread at a time to execute Python bytecode, but it doesn't significantly hinder web scraping. Why? Because the GIL is released during blocking I/O operations, which is precisely when your scraper is waiting for responses from servers.

When one thread is waiting for a web response, it releases the GIL so other threads can run. This makes threading a good fit for web scraping, where most of the time is spent waiting on network operations.
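The effect is easy to observe with a small timing sketch. It assumes `time.sleep` as a stand-in for a blocking network call (like socket I/O, it releases the GIL while waiting); `fake_request` and the URLs are hypothetical.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(url):
    """Stand-in for a network call: sleeping releases the GIL, as blocking I/O does."""
    time.sleep(0.2)
    return url


urls = [f"https://example.com/page/{i}" for i in range(5)]  # hypothetical URLs

# One request after another: the waits add up
start = time.perf_counter()
sequential = [fake_request(u) for u in urls]
sequential_time = time.perf_counter() - start

# Five threads: the waits overlap
start = time.perf_counter()
with ThreadPoolExecutor(max_workers=5) as executor:
    threaded = list(executor.map(fake_request, urls))
threaded_time = time.perf_counter() - start

print(f"sequential: {sequential_time:.2f}s, threaded: {threaded_time:.2f}s")
```

The sequential run takes about five times the simulated latency, while the threaded run takes roughly one latency period. A CPU-bound function in place of `fake_request` would not show the same gain, because only one thread can execute Python bytecode at a time.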

To get the most out of multi-threading for scraping:

- Use multiple threads to send network requests simultaneously.

- Leverage thread pools by using ThreadPoolExecutor to manage and reuse threads efficiently.

- Streamline connection handling with connection pooling to cut down on overhead.

"The GIL's impact on I/O-bound web scraping is often manageable, and the benefits of multi-threading for concurrent network operations usually outweigh the drawbacks."

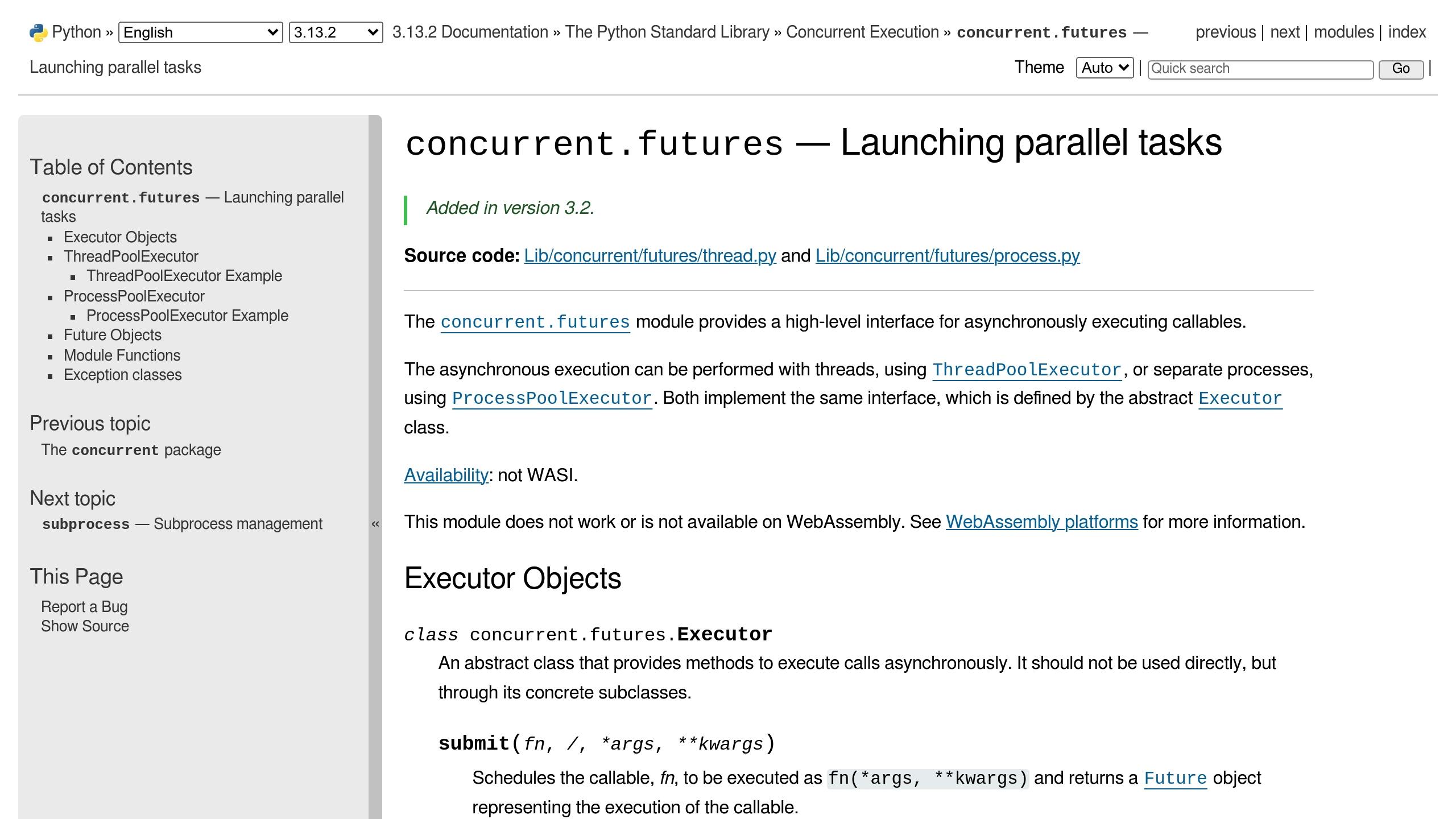

Here's a quick example of how to implement thread pools in Python using the concurrent.futures module:

```python
from concurrent.futures import ThreadPoolExecutor

def scrape_url(url):
    # Add your scraping logic here
    pass

urls = ["http://example1.com", "http://example2.com"]

# The pool runs scrape_url on up to 10 URLs at a time
with ThreadPoolExecutor(max_workers=10) as executor:
    results = list(executor.map(scrape_url, urls))
```

This method highlights how multi-threading can improve performance for tasks that rely heavily on network I/O.
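One limitation of `executor.map` is that results come back in input order and the first exception is raised as soon as its result is reached, which hides what happened to the remaining URLs. A common alternative is `submit` combined with `as_completed`, sketched below with a hypothetical `scrape_url` that fails for one URL so the error path is visible.

```python
from concurrent.futures import ThreadPoolExecutor, as_completed


def scrape_url(url):
    """Hypothetical scraper: fails for one URL so the error path is exercised."""
    if "broken" in url:
        raise ValueError(f"could not fetch {url}")
    return f"title of {url}"


urls = [
    "https://example.com/1",
    "https://example.com/broken",
    "https://example.com/2",
]

results, errors = {}, {}

with ThreadPoolExecutor(max_workers=3) as executor:
    # Map each future back to the URL it was created for
    future_to_url = {executor.submit(scrape_url, url): url for url in urls}
    for future in as_completed(future_to_url):
        url = future_to_url[future]
        try:
            results[url] = future.result()  # re-raises the worker's exception, if any
        except Exception as exc:
            errors[url] = str(exc)

print(f"{len(results)} succeeded, {len(errors)} failed")
```

Each URL ends up in exactly one of the two dictionaries, so a single failure no longer discards the pages that were scraped successfully.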

Building Multi-threaded Scrapers

Learn how to create multi-threaded web scrapers in Python using ThreadPoolExecutor and effectively handle shared resources.

Using ThreadPoolExecutor

Python's ThreadPoolExecutor makes multi-threading easier by handling threads for you. This lets you focus on the scraping logic itself. Here's a simple example:

```python
import threading
from concurrent.futures import ThreadPoolExecutor

import requests
from bs4 import BeautifulSoup

# Each thread stores its own requests.Session here
thread_local = threading.local()

def get_session():
    # Create the session lazily, once per thread, then reuse it
    if not hasattr(thread_local, "session"):
        thread_local.session = requests.Session()
    return thread_local.session

def scrape_url(url):
    session = get_session()
    response = session.get(url, timeout=10)
    soup = BeautifulSoup(response.text, "html.parser")
    # soup.title is None when the page has no <title> tag
    return soup.title.text if soup.title else None

urls = ["http://example1.com", "http://example2.com", "http://example3.com"]

with ThreadPoolExecutor(max_workers=3) as executor:
    results = list(executor.map(scrape_url, urls))
```
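To see what `threading.local()` buys you, the following offline sketch counts how many session objects are actually created. `FakeSession` is a hypothetical placeholder for `requests.Session`, and the URLs are made up.

```python
import threading
from concurrent.futures import ThreadPoolExecutor

thread_local = threading.local()
created = []  # records every session object that gets built
created_lock = threading.Lock()


class FakeSession:
    """Hypothetical stand-in for requests.Session so the sketch runs offline."""


def get_session():
    if not hasattr(thread_local, "session"):
        thread_local.session = FakeSession()
        with created_lock:
            created.append(thread_local.session)
    return thread_local.session


def scrape_url(url):
    # Return the identity of the session this call used
    return id(get_session())


urls = [f"https://example.com/page/{i}" for i in range(20)]  # hypothetical URLs

with ThreadPoolExecutor(max_workers=3) as executor:
    session_ids = list(executor.map(scrape_url, urls))

print(f"{len(urls)} requests shared {len(created)} session(s)")
```

However many URLs are processed, the number of sessions never exceeds the number of worker threads, because each thread builds its session once and reuses it.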

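In this setup, each thread creates one session and reuses it for every request it handles, so connections to the same host can be kept alive instead of being reopened each time. Next, let's look at a more complete example with error handling.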

Multi-threaded Scraping Code Example

Here’s how you can add error handling to your scraper:

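```python
import logging

from bs4 import BeautifulSoup
from tenacity import retry, stop_after_attempt, wait_exponential

logging.basicConfig(level=logging.INFO)
logger = logging.getLogger(__name__)

# Retry up to 3 times, with exponentially growing waits kept between 4 and 10 seconds
@retry(stop=stop_after_attempt(3), wait=wait_exponential(multiplier=1, min=4, max=10))
def scrape_with_retry(url):
    try:
        session = get_session()  # per-thread session helper from the previous example
        response = session.get(url, timeout=10)
        response.raise_for_status()  # treat 4xx/5xx responses as errors
        return process_data(response.text)
    except Exception as e:
        logger.error(f"Error scraping {url}: {e}")
        raise  # re-raise so tenacity can schedule the next attempt

def process_data(html):
    # Placeholder extraction: replace with your own parsing logic
    soup = BeautifulSoup(html, "html.parser")
    return soup.title.text if soup.title else None
```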
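If you would rather not add a dependency, the same retry idea can be sketched with the standard library. This is an illustrative decorator, not a drop-in replacement for tenacity: `retry_with_backoff`, `flaky_fetch`, and the very short delays are assumptions chosen so the example runs quickly.

```python
import random
import time
from functools import wraps


def retry_with_backoff(attempts=3, base_delay=0.01, max_delay=0.1):
    """Retry the wrapped function, doubling the wait after each failure."""
    def decorator(func):
        @wraps(func)
        def wrapper(*args, **kwargs):
            for attempt in range(1, attempts + 1):
                try:
                    return func(*args, **kwargs)
                except Exception:
                    if attempt == attempts:
                        raise  # out of attempts: surface the last error
                    delay = min(max_delay, base_delay * 2 ** (attempt - 1))
                    # Random jitter keeps threads from retrying in lockstep
                    time.sleep(delay + random.uniform(0, delay))
        return wrapper
    return decorator


calls = {"count": 0}


@retry_with_backoff(attempts=3)
def flaky_fetch(url):
    """Hypothetical fetch that fails twice, then succeeds."""
    calls["count"] += 1
    if calls["count"] < 3:
        raise ConnectionError("temporary failure")
    return f"fetched {url}"


result = flaky_fetch("https://example.com")
print(result, "after", calls["count"], "attempts")
```

In a real scraper the delays would be measured in seconds rather than hundredths of a second, and you would usually retry only on errors that are likely to be temporary, such as timeouts.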

For instance, in 2022, a data scientist at Airbnb reportedly made their scraper about eight times faster by running 20 concurrent threads, reducing the time to process 100,000 listings from 4 hours to just 30 minutes (Airbnb Tech Blog, 2022). Once your scraper is reliable, it's important to safely manage shared data across threads.

Managing Shared Data Between Threads

When multiple threads share data, synchronization is key to avoid conflicts. Here's how you can handle shared data effectively:

| Data Structure | Use Case | Thread Safety |
|---|---|---|
| Queue | Task distribution | Built-in thread safety |
| Lock | Resource protection | Requires manual locking |
| threading.local | Per-thread data | Automatically isolated |

Here’s an example of managing shared data safely:

```python
from queue import Queue
from threading import Lock

class ThreadSafeScraperResults:
    def __init__(self):
        self.data = []
        self.lock = Lock()          # guards self.data
        self.error_queue = Queue()  # Queue is already thread-safe

    def add_result(self, result):
        # Hold the lock while mutating the shared list
        with self.lock:
            self.data.append(result)

    def log_error(self, error):
        self.error_queue.put(error)
```
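The "task distribution" use of `Queue` can be sketched as a small worker pipeline. The worker body, the sentinel value, and the URLs below are hypothetical; the download is simulated so the example runs offline.

```python
import threading
from queue import Queue

task_queue = Queue()
results = []
results_lock = threading.Lock()
STOP = None  # sentinel that tells a worker to exit


def worker():
    while True:
        url = task_queue.get()
        try:
            if url is STOP:
                return
            page = f"<html>{url}</html>"  # hypothetical stand-in for a download
            with results_lock:
                results.append((url, len(page)))
        finally:
            task_queue.task_done()  # runs for real tasks and for the sentinel


num_workers = 4
threads = [threading.Thread(target=worker) for _ in range(num_workers)]
for t in threads:
    t.start()

urls = [f"https://example.com/item/{i}" for i in range(10)]  # hypothetical URLs
for url in urls:
    task_queue.put(url)
for _ in threads:
    task_queue.put(STOP)  # one sentinel per worker

task_queue.join()  # blocks until every queued item is marked done
for t in threads:
    t.join()

print(f"collected {len(results)} results")
```

Compared with `ThreadPoolExecutor`, this pattern takes more code but lets workers add follow-up URLs to the queue while they run, which is useful for crawlers that discover links as they go.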


Speed and Performance Tuning

Boost the efficiency of your multi-threaded scraper by focusing on key performance factors.

Setting the Right Thread Count

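Start with a thread count close to the number of physical CPU cores on your machine, then adjust based on measured performance. For instance, on a quad-core machine with 16GB of RAM, you might begin with 4–8 threads. Because scraping is I/O-bound, threads spend most of their time waiting, so the best count is often higher than the core count and is worth measuring rather than assuming.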

```python
import os

import psutil

def calculate_initial_threads():
    # cpu_count(logical=False) can return None when the count is undetermined
    cpu_cores = psutil.cpu_count(logical=False) or os.cpu_count() or 1
    return min(cpu_cores * 2, 8)  # Use 2 threads per core, capped at 8

def adjust_thread_count(current_count, cpu_usage):
    target_usage = 70  # Target CPU usage in percentage
    if cpu_usage > target_usage + 10:
        return max(1, current_count - 1)
    elif cpu_usage < target_usage - 10:
        return current_count + 1
    return current_count
```
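Another way to pick a starting point is to measure it. The sketch below times the same batch of simulated requests with a few pool sizes; `fake_request`, the 50 ms latency, and the candidate sizes are assumptions, and against a real site the curve will flatten once bandwidth or rate limits become the bottleneck.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def fake_request(_):
    time.sleep(0.05)  # simulated network latency


def time_run(workers, num_tasks=16):
    """Return the seconds needed to finish num_tasks with the given pool size."""
    start = time.perf_counter()
    with ThreadPoolExecutor(max_workers=workers) as executor:
        list(executor.map(fake_request, range(num_tasks)))
    return time.perf_counter() - start


timings = {workers: time_run(workers) for workers in (1, 4, 16)}

for workers, seconds in timings.items():
    print(f"{workers:>2} workers: {seconds:.2f}s")

# Pool size with the shortest measured run
best = min(timings, key=timings.get)
```

Running a sweep like this against a small sample of your real targets gives a more reliable thread count than a rule of thumb based on core count alone.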

Once you've optimized your thread count, protect these gains by managing proxies effectively.

Proxy Management for Multiple Threads

To maintain performance, use thread-safe proxy rotation. Here's an example implementation:

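```python
from collections import deque
from threading import Lock

class ProxyRotator:
    def __init__(self, proxy_list):
        self.proxies = deque(proxy_list)
        self.lock = Lock()
        self.in_use = set()
        self.dead_proxies = set()

    def get_proxy(self):
        with self.lock:
            while self.proxies:
                proxy = self.proxies.popleft()
                if proxy not in self.dead_proxies:
                    self.in_use.add(proxy)
                    self.proxies.append(proxy)  # move to the back for round-robin reuse
                    return proxy
                # Dead proxies are dropped from the rotation instead of re-queued
            return None  # no live proxies left

    def mark_dead(self, proxy):
        # Call this when a proxy fails so get_proxy stops returning it
        with self.lock:
            self.dead_proxies.add(proxy)
            self.in_use.discard(proxy)
```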
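To show rotation being used by a pool of workers, here is a compact, self-contained sketch built on `itertools.cycle` rather than the class above. The proxy addresses and URLs are hypothetical, and no request is actually sent; the point is only that a lock-protected rotation spreads work evenly across threads.

```python
import itertools
import threading
from collections import Counter
from concurrent.futures import ThreadPoolExecutor

# Hypothetical proxy addresses, for illustration only
PROXIES = ["http://10.0.0.1:8080", "http://10.0.0.2:8080", "http://10.0.0.3:8080"]

_cycle = itertools.cycle(PROXIES)
_cycle_lock = threading.Lock()


def next_proxy():
    # Advancing a shared iterator from several threads needs a lock
    with _cycle_lock:
        return next(_cycle)


def scrape_url(url):
    proxy = next_proxy()
    # A real scraper would pass the proxy to its HTTP client here
    return url, proxy


urls = [f"https://example.com/product/{i}" for i in range(9)]  # hypothetical URLs

with ThreadPoolExecutor(max_workers=3) as executor:
    assignments = list(executor.map(scrape_url, urls))

# Count how many requests each proxy handled
usage = Counter(proxy for _, proxy in assignments)
print(usage)
```

With nine URLs and three proxies, each proxy handles exactly three requests regardless of which thread asks first, because the rotation itself is serialized by the lock.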

For example, Profitero reportedly cut scraping time by 73% using optimized thread counts and proxy rotation. Their system processed 1.2 million product pages daily, and the change brought scraping time down from 18 hours to about 5 hours while lowering cloud computing costs by 28%.

With threading and proxy management in place, tackle network challenges to ensure smoother performance.

Fixing Network Issues and Errors

Address common network issues with targeted solutions:

| Issue Type | Solution | Implementation Details |
|---|---|---|
| Connection Timeouts | Exponential Backoff | Gradually increase delays between retries |
| Rate Limiting | Adaptive Delays | Adjust request timing based on response codes |
| DNS Failures | DNS Caching | Use a local DNS cache with a time-to-live (TTL) |

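Here's an adaptive delay that backs off on HTTP 429 responses and eases back after successes:

```python
import time
from datetime import datetime
from threading import Lock

class AdaptiveDelay:
    def __init__(self, initial_delay=1):
        self.current_delay = initial_delay
        self.last_request = datetime.now()
        self.lock = Lock()  # lets several threads share one instance

    def wait(self, response_code):
        with self.lock:
            if response_code == 429:  # Too Many Requests
                self.current_delay *= 2
            elif response_code == 200:
                # Ease back toward the 1-second floor after a success
                self.current_delay = max(1, self.current_delay * 0.8)
            delay = self.current_delay
        time.sleep(delay)  # sleep outside the lock so other threads are not blocked
        with self.lock:
            self.last_request = datetime.now()
```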
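Servers that answer with 429 often include a `Retry-After` header, which HTTP defines as either a number of seconds or an HTTP date. When it is present, honoring it is usually better than guessing. The helper below is a sketch of how that value could be parsed; the function name, the `default` fallback, and the sample values are assumptions.

```python
from datetime import datetime, timezone
from email.utils import parsedate_to_datetime


def retry_after_seconds(header_value, now=None, default=1.0):
    """Convert a Retry-After header value into a number of seconds to wait."""
    if not header_value:
        return default
    value = header_value.strip()
    if value.isdigit():
        return float(value)  # delay-seconds form, e.g. "120"
    try:
        retry_at = parsedate_to_datetime(value)  # HTTP-date form
    except (TypeError, ValueError):
        return default  # unparseable value: fall back to the default delay
    if retry_at.tzinfo is None:
        retry_at = retry_at.replace(tzinfo=timezone.utc)
    now = now or datetime.now(timezone.utc)
    return max(0.0, (retry_at - now).total_seconds())


fixed_now = datetime(2024, 1, 1, 12, 0, 0, tzinfo=timezone.utc)
print(retry_after_seconds("120"))
print(retry_after_seconds("Mon, 01 Jan 2024 12:00:30 GMT", now=fixed_now))
print(retry_after_seconds(None))
```

The returned value can be used in place of the doubled delay whenever the server supplies the header.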

Track your scraper's performance with metrics like pages scraped per second, CPU usage, and error rates. These insights will help you fine-tune your setup and ensure your optimizations are working as intended.
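A minimal way to collect two of these metrics from inside the worker threads is a small counter object protected by a lock. `ScraperMetrics` and the made-up outcomes below are illustrative; CPU usage is left out because it would normally come from a system library such as psutil.

```python
import threading
import time


class ScraperMetrics:
    """Thread-safe counters for throughput and error rate."""

    def __init__(self):
        self._lock = threading.Lock()
        self._start = time.perf_counter()
        self.pages = 0
        self.errors = 0

    def record(self, success):
        with self._lock:
            if success:
                self.pages += 1
            else:
                self.errors += 1

    def snapshot(self):
        with self._lock:
            elapsed = time.perf_counter() - self._start
            total = self.pages + self.errors
            return {
                "pages_per_second": self.pages / elapsed if elapsed > 0 else 0.0,
                "error_rate": self.errors / total if total else 0.0,
            }


metrics = ScraperMetrics()


def worker(outcomes):
    for ok in outcomes:
        metrics.record(ok)


# Four threads each report 9 successes and 1 failure (made-up outcomes)
threads = [threading.Thread(target=worker, args=([True] * 9 + [False],)) for _ in range(4)]
for t in threads:
    t.start()
for t in threads:
    t.join()

print(metrics.snapshot())
```

Calling `snapshot()` periodically, for example once per minute, gives a simple time series you can compare before and after each tuning change.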

Custom vs Ready-Made Solutions

After building multi-threaded scrapers, it's time to decide: should you create a custom solution or go with a ready-made option?

Custom Multi-threading: Pros and Cons

Custom multi-threaded scrapers give you complete control and allow for threading logic tailored to your needs. But this flexibility comes with challenges. A 2023 Bright Data analysis reveals that creating a custom scraper for a medium-complexity project takes 2–4 weeks and costs between $5,000 and $25,000 upfront.

| Aspect | Pros | Cons |
|---|---|---|
| Control | Full customization of threading logic | Complex error handling and ongoing upkeep |
| Integration | Direct access to internal systems | Requires specialized technical skills |
| Scalability | Designed for specific needs | Manual infrastructure management |
| Cost | Lower long-term costs for large-scale use | High initial development expenses |

For those looking for a simpler path, ready-made SaaS platforms offer a convenient alternative.

What SaaS Tools Bring to the Table

Modern SaaS platforms simplify multi-threading with built-in solutions. Tools like InstantAPI.ai handle thread operations, proxy rotation, and error recovery automatically, cutting down both development time and maintenance headaches.

In 2022, Profitero, an e-commerce analytics firm, transitioned from a custom scraper to a SaaS platform. The switch reduced their data collection time by 62%, boosted data accuracy from 91% to 98.5%, freed up developer resources, and saved them about $450,000 annually.

Some standout features of these platforms include:

- Automatic thread scaling based on workload

- CAPTCHA-solving integration

- Smart proxy rotation

- Real-time error handling

- Regular updates to adapt to website changes

These capabilities make SaaS tools a strong contender when comparing custom-built solutions to ready-made options.

Build vs Buy: Key Considerations

Deciding between a custom solution and a SaaS platform depends on your project's size, budget, and team expertise. Many enterprises lean towards SaaS platforms, which range from $50–$200 per month for basic plans to $500–$5,000+ per month for enterprise-level tools. Measured against a mid-tier SaaS plan averaging $500 monthly (about $6,000 annually), a custom solution costing around $20,000 breaks even in 3–4 years on build cost alone. Bright Data's research shows organizations using SaaS solutions cut development costs by up to 70% compared to custom builds.
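The break-even estimate is simple arithmetic, and writing it down makes it easy to plug in your own numbers. The function below is a sketch: the `custom_monthly_upkeep` parameter and the $100 figure are hypothetical additions, since the text quotes only the build cost and the SaaS price.

```python
def break_even_years(build_cost, saas_monthly, custom_monthly_upkeep=0.0):
    """Years until a one-off build cost is offset by avoided SaaS fees."""
    monthly_saving = saas_monthly - custom_monthly_upkeep
    if monthly_saving <= 0:
        return None  # the custom build never pays for itself
    return build_cost / (monthly_saving * 12)


# Figures quoted in the text: $20,000 build vs. a $500/month plan
years = break_even_years(build_cost=20_000, saas_monthly=500)
print(f"break-even after about {years:.1f} years")

# Hypothetical: $100/month of hosting and maintenance for the custom scraper
years_with_upkeep = break_even_years(20_000, 500, custom_monthly_upkeep=100)
print(f"with upkeep: about {years_with_upkeep:.1f} years")
```

With the quoted figures the result is about 3.3 years, and any ongoing maintenance cost for the custom scraper pushes the break-even point further out.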

Hybrid options also exist. Platforms like InstantAPI.ai provide API access for custom integrations while managing threading and scraping complexities. This approach lets teams focus on using the data and driving insights, instead of worrying about scraper infrastructure.

Wrapping Up

Why Multi-Threading Makes a Difference

Multi-threaded scrapers can perform 5–20 times faster than single-threaded ones. This speed boost comes from making better use of system resources during tasks like network requests, which are often I/O-bound.

Beyond speed, multi-threading enhances CPU efficiency, scales better, and reduces delays. When done right, these benefits let organizations gather and refresh data more often, helping them stay ahead in service quality and competition.

Choosing the Right Scraping Approach

If you're short on resources, SaaS platforms offer quick setup and automatic thread management. On the other hand, custom-built solutions give you more control and can integrate smoothly with your existing systems. The trick is to weigh the effort needed to build against the long-term benefits.

To get the most out of your scraper, focus on these essentials: handle errors properly, monitor thread usage, enforce rate limits, use thread pooling, and explore hybrid strategies.

The future of web scraping lies in smart multi-threading that balances speed with reliability. Whether you go for a custom solution or a SaaS platform, the choice should fit your technical skills and meet your data goals.

FAQs

Here are answers to some common questions about multi-threading in web scraping.

What is multithreading in web scraping?

Multithreading allows your scraper to perform multiple tasks at the same time. This is especially useful for web scraping, where much of the time is spent waiting for network responses.

Here's a simple Python example using concurrent.futures:

```python
import concurrent.futures

import requests
from bs4 import BeautifulSoup

def scrape_url(url):
    response = requests.get(url, timeout=10)
    soup = BeautifulSoup(response.text, "html.parser")
    # soup.title is None when the page has no <title> tag
    return soup.title.string if soup.title else None

urls = ["https://example1.com", "https://example2.com", "https://example3.com"]

with concurrent.futures.ThreadPoolExecutor(max_workers=3) as executor:
    results = list(executor.map(scrape_url, urls))
```

This code demonstrates how to efficiently scrape multiple URLs at once using a thread pool.

"For web scraping, which is primarily I/O-bound, both multithreading and asynchronous programming are generally more suitable than multiprocessing. Multithreading is often easier to implement and works well with existing synchronous code."

When using multithreading in production, make sure to:

- Use thread pools to manage threads effectively.

- Include strong error handling to deal with unexpected issues.

- Respect website rate limits to avoid being blocked.

- Enable connection pooling to reduce overhead.

- Keep an eye on system resource usage to prevent performance issues.
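Of these points, rate limiting is the one most easily broken by adding threads, because every worker sends requests independently. One way to enforce a shared limit is a small lock-protected limiter that all threads call before each request. The class below is a sketch under assumed names and an assumed limit of 20 requests per second; the request itself is omitted.

```python
import threading
import time


class RateLimiter:
    """Allow at most one request every `min_interval` seconds across all threads."""

    def __init__(self, min_interval):
        self.min_interval = min_interval
        self._lock = threading.Lock()
        self._next_allowed = time.monotonic()

    def acquire(self):
        with self._lock:
            now = time.monotonic()
            wait = max(0.0, self._next_allowed - now)
            # Reserve the next slot before releasing the lock
            self._next_allowed = max(now, self._next_allowed) + self.min_interval
        if wait:
            time.sleep(wait)  # sleep outside the lock so other threads can queue up


limiter = RateLimiter(min_interval=0.05)  # hypothetical limit: 20 requests per second
timestamps = []
timestamps_lock = threading.Lock()


def polite_request(_):
    limiter.acquire()
    with timestamps_lock:
        timestamps.append(time.monotonic())  # a real scraper would send the request here


threads = [threading.Thread(target=polite_request, args=(i,)) for i in range(5)]
start = time.monotonic()
for t in threads:
    t.start()
for t in threads:
    t.join()
elapsed = time.monotonic() - start

print(f"5 requests took {elapsed:.2f}s")
```

Even though all five threads start at once, the requests are spaced about 50 ms apart, so the total takes roughly 0.2 seconds instead of finishing immediately.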