Looking for the perfect web scraping tool? Here's what you need to know:

- Match the tool to your skills: Beginners benefit from no-code tools like Octoparse, while developers might prefer code-based options like Scrapy.

- Define your goals: Identify the data you need (e.g., prices, reviews, social media posts) and how often you'll collect it.

- Consider costs and scalability: Choose tools that fit your budget and can grow with your needs.

- Key features to look for: Ease of use, proxy support, anti-ban features, and flexible data export options.

Quick Comparison Table:

| Tool | Best For | Technical Skill Level | Scalability |
|---|---|---|---|
| Scrapy | Advanced, custom projects | High | High |
| Octoparse | Simple, no-code tasks | Low | Medium |
| InstantAPI.ai | Automated, dynamic scraping | Medium | High |

Start with free trials, evaluate documentation, and pick a tool that aligns with your project needs and technical expertise.


How to Identify Your Web Scraping Requirements

Knowing exactly what you need from web scraping is the first step to finding the right tool. Here's what you should focus on:

Define Your Project Goals

Start by outlining the scope of your project. What kind of data do you need? How often will you collect it? And what will you do with it?

For e-commerce projects, decide if you're tracking prices, product descriptions, reviews, or inventory levels. The size and complexity of the data will influence your tool choice.

For social media analysis, pinpoint the platforms, specific data points (like posts or comments), how frequently you'll extract the data, and the format you need it in.
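One way to make these questions concrete is to write the answers down as a small record before comparing tools. The sketch below is only an illustration: the `ScrapeRequirements` class, its field names, and the sample numbers are all hypothetical, not part of any scraping tool.

```python
from dataclasses import dataclass


@dataclass
class ScrapeRequirements:
    """Hypothetical record of what a scraping project needs."""

    source: str                 # site or platform to collect from
    data_points: list[str]      # fields to extract, e.g. prices or reviews
    pages_per_run: int          # pages visited in one collection run
    runs_per_day: float         # how often the data is collected
    output_format: str = "csv"  # format the downstream workflow expects

    def pages_per_month(self, days: int = 30) -> int:
        """Rough monthly page volume, useful when comparing plans."""
        return round(self.pages_per_run * self.runs_per_day * days)


# Sample values are made up for illustration.
price_tracking = ScrapeRequirements(
    source="example-shop.test",
    data_points=["price", "inventory_level"],
    pages_per_run=500,
    runs_per_day=4,
)
print(price_tracking.pages_per_month())  # 500 * 4 * 30 = 60000
```

Having a rough monthly page count on hand makes it easier to judge plan limits and pricing later on.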

Assess Your Technical Skills

Your technical expertise plays a big role in choosing a tool. Some tools require coding knowledge, while others are more user-friendly but may offer less flexibility.

| Tool Type | Skills Needed | Example Tool |
|---|---|---|
| Code-Based | Python, HTTP protocols | Scrapy |
| No-Code | Basic computer skills | Octoparse |
| Hybrid | Moderate technical knowledge | ParseHub |

Code-based tools give you more control but demand programming skills. No-code platforms are easier to use but may not handle complex tasks. Hybrid tools strike a balance between the two.
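To get a feel for what "code-based" means in practice, here is a minimal sketch that pulls prices out of an HTML snippet using only Python's standard library. It is not Scrapy code, and the `PriceParser` class, the `price` CSS class, and the sample HTML are assumptions made for illustration. A framework like Scrapy layers request scheduling, selectors, and pipelines on top of this kind of parsing.

```python
from html.parser import HTMLParser


class PriceParser(HTMLParser):
    """Collects the direct text of elements whose class list includes 'price'."""

    def __init__(self) -> None:
        super().__init__()
        self.prices: list[str] = []
        self._in_price = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "price" in classes:
            self._in_price = True

    def handle_endtag(self, tag):
        # Simplification: any closing tag ends the current price element,
        # so nested markup inside a price is not handled.
        self._in_price = False

    def handle_data(self, data):
        if self._in_price and data.strip():
            self.prices.append(data.strip())


# Hypothetical product listing markup.
SAMPLE_HTML = """
<ul>
  <li><span class="name">Widget</span> <span class="price">$9.99</span></li>
  <li><span class="name">Gadget</span> <span class="price sale">$24.50</span></li>
</ul>
"""

parser = PriceParser()
parser.feed(SAMPLE_HTML)
print(parser.prices)  # ['$9.99', '$24.50']
```

If writing and maintaining this kind of logic sounds unappealing, that is a good sign a no-code or hybrid tool may suit you better.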

Weigh Costs and Scalability

Think beyond the upfront cost. Account for:

- The amount of data you'll be scraping

- Flexible pricing options (like pay-as-you-go plans)

- Infrastructure needs

- Features such as proxy management

"Total cost of ownership includes maintenance, additional features, and scalability," says a Zyte specialist.

For example, ParseHub's $189/month plan supports up to 10,000 pages per run. Make sure this fits both your current needs and future growth plans.
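A quick back-of-the-envelope calculation can show whether a plan's limits fit your volume. The sketch below uses the ParseHub figures quoted above; the 60,000-page monthly volume and both helper functions are hypothetical.

```python
import math


def runs_needed(total_pages: int, pages_per_run: int) -> int:
    """Number of runs required to cover total_pages under a per-run cap."""
    return math.ceil(total_pages / pages_per_run)


def cost_per_thousand_pages(monthly_price: float, pages_per_month: int) -> float:
    """Effective price per 1,000 pages at a given monthly volume."""
    return monthly_price / pages_per_month * 1000


# Plan figures come from the ParseHub example; the monthly volume is assumed.
PLAN_PRICE = 189.0
PAGES_PER_RUN = 10_000
monthly_pages = 60_000

print(runs_needed(monthly_pages, PAGES_PER_RUN))                     # 6
print(round(cost_per_thousand_pages(PLAN_PRICE, monthly_pages), 2))  # 3.15
```

Running the same numbers for two or three candidate plans gives you a like-for-like comparison that a headline price alone does not.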

Once you've nailed down these requirements, you're ready to look into the specific features that will help you achieve your goals.

Features to Look for in a Web Scraping Tool

Ease of Use and Customization Options

The best web scraping tools strike a balance between being user-friendly and offering enough flexibility for customization. Here's a breakdown of how different types of tools cater to various needs:

| Feature | Basic Tools | Advanced Tools | Enterprise Solutions |
|---|---|---|---|
| Interface | Visual point-and-click | Code-based customization | Hybrid with AI assistance |
| Architecture | Fixed workflows | Modular, extensible | API-first design |
| Extra Features | Templates, basic automation | Custom scripts, middleware | AI extraction, real-time processing |

Proxy Support and Anti-Ban Features

A reliable scraping tool needs solid proxy management and anti-ban mechanisms. These features help avoid detection and ensure smooth operation. Many top-tier tools handle this automatically, so you can focus on gathering data instead of worrying about technical hurdles.
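For readers curious about what such tools automate, the sketch below shows one common pattern: rotating through a pool of proxies and backing off after each failed attempt. It does not reflect how any particular product works. The `fetch_with_rotation` function, the proxy addresses, and the `fake_fetch` stand-in are all hypothetical, and a real setup would pass in a function that performs the actual HTTP request.

```python
import itertools
import time
from typing import Callable, Sequence


def fetch_with_rotation(
    url: str,
    proxies: Sequence[str],
    fetch: Callable[[str, str], str],
    max_attempts: int = 4,
    base_delay: float = 1.0,
) -> str:
    """Try url through successive proxies, backing off between failures.

    fetch(url, proxy) is supplied by the caller. It returns the page body
    or raises an exception when the request is blocked or fails.
    """
    if not proxies:
        raise ValueError("at least one proxy is required")
    pool = itertools.cycle(proxies)
    last_error = None
    for attempt in range(max_attempts):
        proxy = next(pool)
        try:
            return fetch(url, proxy)
        except Exception as error:  # in practice, catch your HTTP client's errors
            last_error = error
            time.sleep(base_delay * 2 ** attempt)  # exponential backoff
    raise RuntimeError(f"all {max_attempts} attempts failed") from last_error


def fake_fetch(url: str, proxy: str) -> str:
    """Stand-in for a real HTTP call: only the third proxy gets through."""
    if proxy != "proxy-c.test:8080":
        raise ConnectionError(f"blocked via {proxy}")
    return f"<html>fetched {url} via {proxy}</html>"


page = fetch_with_rotation(
    "https://example.test/products",
    ["proxy-a.test:8080", "proxy-b.test:8080", "proxy-c.test:8080"],
    fake_fetch,
    base_delay=0.01,
)
print(page)
```

Tools with built-in proxy management take care of this loop, plus proxy sourcing and health checks, so you do not have to maintain it yourself.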

Data Processing and Export Options

After collecting data, how you process and export it can make or break your workflow. Modern tools should offer:

- Automated cleaning and standardization

- Support for multiple export options, such as CSV or JSON files or delivery through an API

- Custom field mapping

- Built-in error handling and validation

- Real-time data streaming options

For larger operations, enterprise tools take this further with features like custom API endpoints and automated workflows, making it easier to scale as your projects expand.
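To illustrate what cleaning, validation, and multi-format export look like at a small scale, here is a sketch using only Python's standard library. The field names, the sample rows, and the `clean_record` and `export` helpers are assumptions for illustration, not the behavior of any specific tool.

```python
import csv
import io
import json


def clean_record(raw: dict) -> dict:
    """Standardize one scraped record; raise ValueError if it is unusable."""
    name = (raw.get("name") or "").strip()
    price_text = (raw.get("price") or "").replace("$", "").replace(",", "").strip()
    if not name or not price_text:
        raise ValueError(f"missing field in {raw!r}")
    return {"name": name, "price": float(price_text)}


def export(records: list[dict]) -> tuple[str, str]:
    """Return the records serialized as (CSV text, JSON text)."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=["name", "price"], lineterminator="\n")
    writer.writeheader()
    writer.writerows(records)
    return buffer.getvalue(), json.dumps(records, indent=2)


# Hypothetical raw output from a scraper.
raw_rows = [
    {"name": "  Widget ", "price": "$1,299.00"},
    {"name": "Gadget", "price": ""},  # invalid: no price
    {"name": "Gizmo", "price": "24.50"},
]

cleaned, rejected = [], []
for row in raw_rows:
    try:
        cleaned.append(clean_record(row))
    except ValueError:
        rejected.append(row)  # keep bad rows for later inspection

csv_text, json_text = export(cleaned)
print(csv_text)
print(f"{len(rejected)} row(s) rejected")
```

Keeping rejected rows instead of silently dropping them makes it easier to spot when a site's layout has changed.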

Next, we'll dive into how popular tools stack up against these features.


Comparing Popular Web Scraping Tools

Here’s a look at three web scraping tools tailored for different levels of technical expertise and user needs.

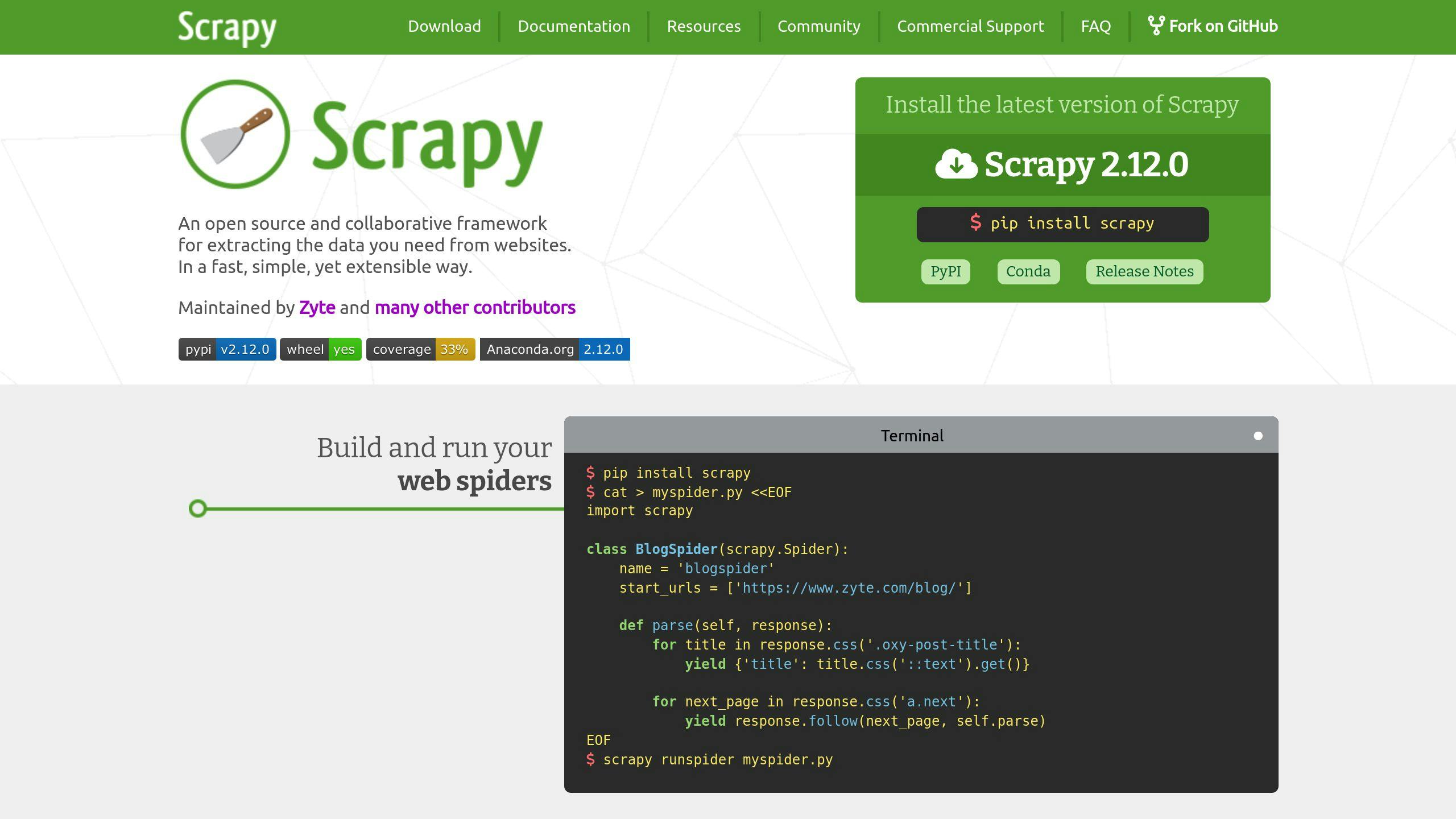

Scrapy: A Developer-Focused Framework

Scrapy is a Python-based framework built for developers who need full control over their web scraping projects. Its modular design allows for third-party integrations and custom features, making it ideal for creating scalable web crawlers.

| Feature | Details |
|---|---|
| Technical Level | Requires Python knowledge |
| Scalability | Handles millions of requests |
| Customization | Full control via code |
| Cost | Free and open-source |
| Best For | Advanced projects like enterprise e-commerce monitoring or research needing custom solutions |

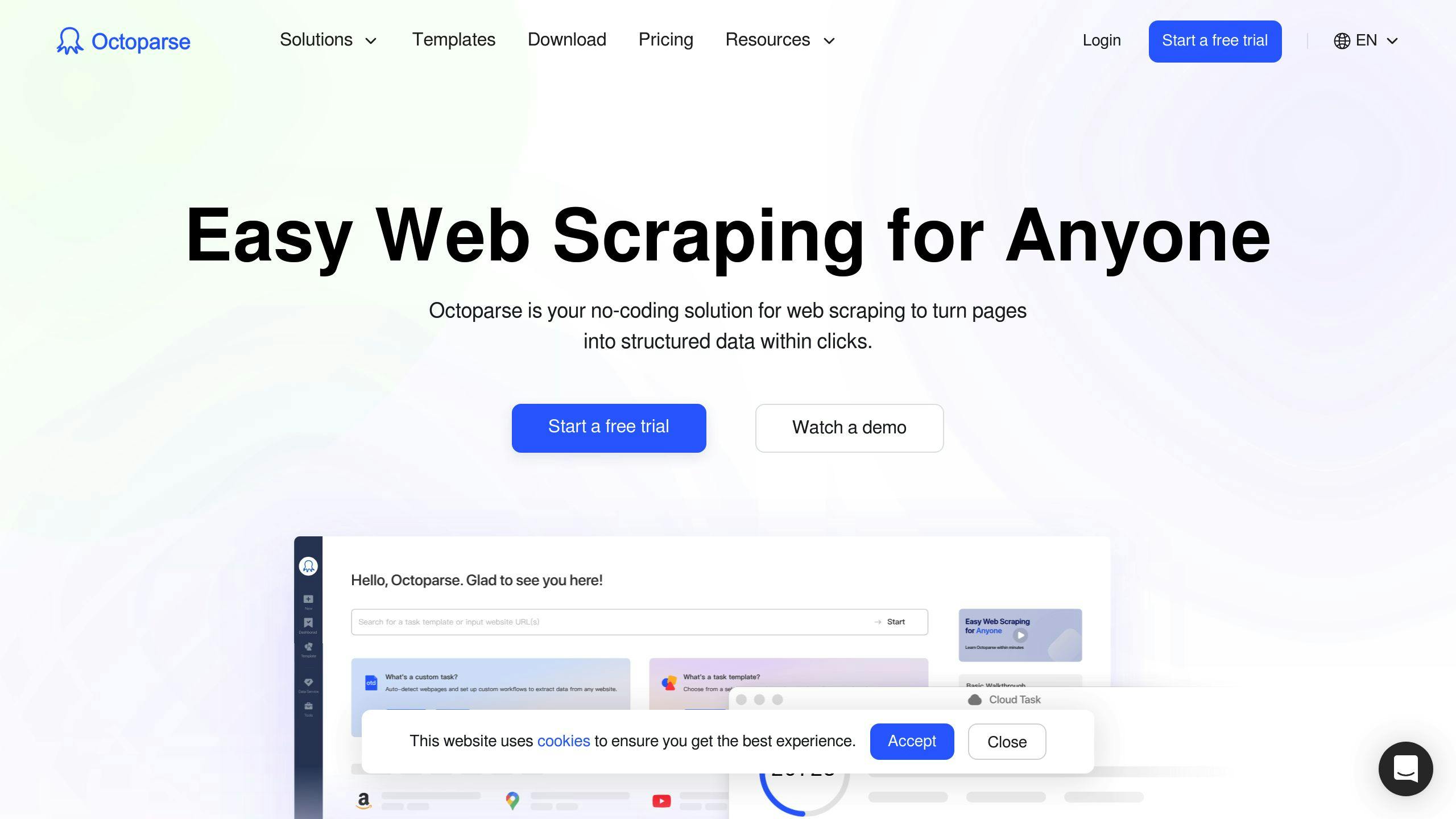

Octoparse: A Visual, No-Code Solution

Octoparse simplifies web scraping by offering a point-and-click interface and pre-built templates. It’s designed for users who want to extract data without writing any code.

| Feature | Details |
|---|---|
| Technical Level | Beginner-friendly |
| Interface | Visual drag-and-drop builder |
| Templates | Pre-built templates for common tasks |
| Limitations | Limited handling of complex data structures |
| Best For | Small businesses or basic data monitoring needs |

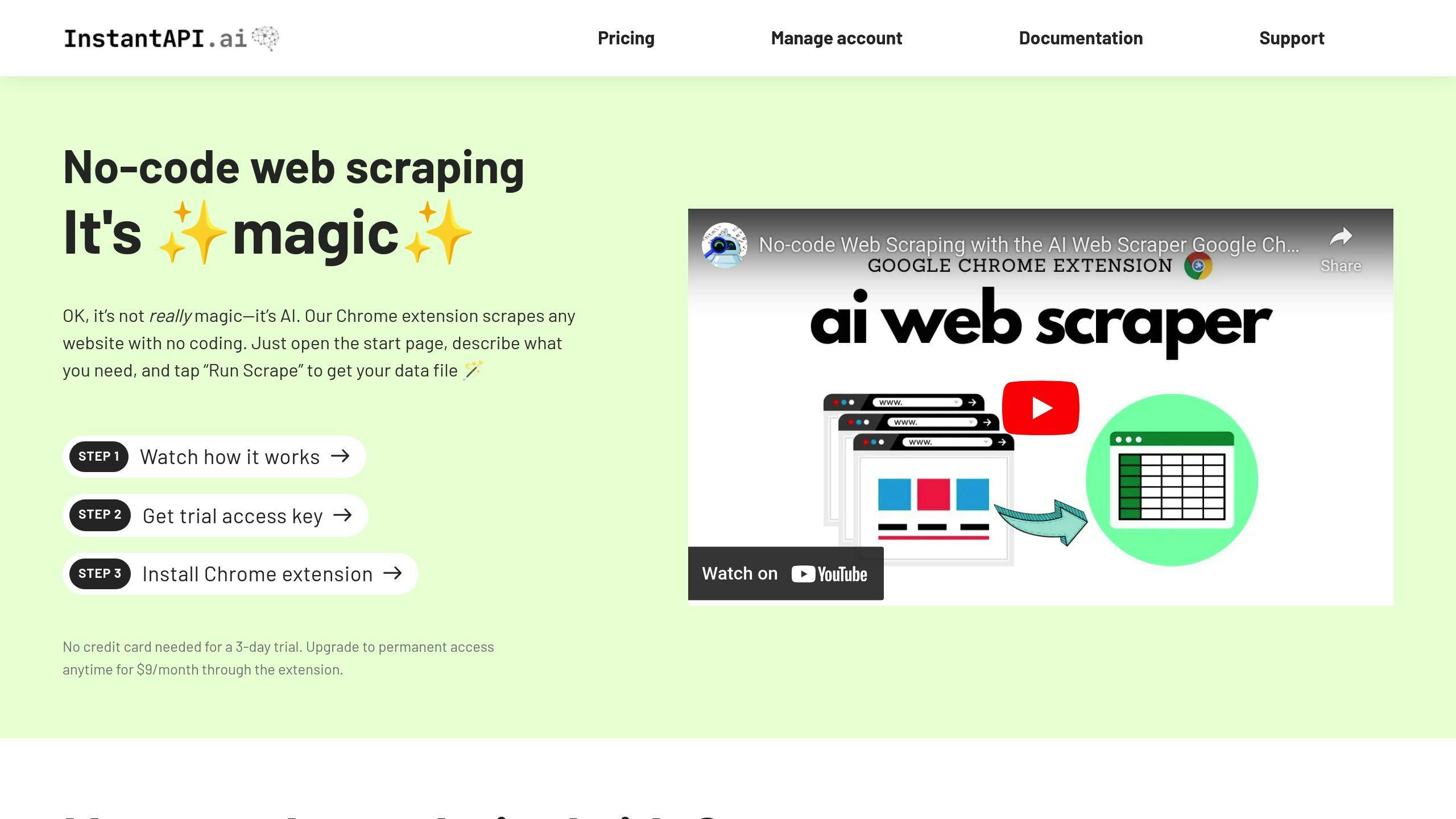

InstantAPI.ai: AI-Driven Web Scraping

InstantAPI.ai leverages AI to simplify web scraping, offering a low-maintenance solution that adapts to website changes automatically. Its plans cater to different project sizes and budgets.

| Plan | Features & Pricing |
|---|---|
| Evaluation | Free, includes AI extraction and JS rendering |
| Side Project | $49/month, adds premium proxies and AI extraction |
| Startup | $99/month, supports unlimited requests and advanced features |
| Business | $249/month, includes custom API support and enterprise-grade tools |

What sets InstantAPI.ai apart is its ability to handle complex scenarios without needing XPath configurations. This makes it a reliable option for users who want automated, hands-off data extraction.

When deciding among these tools, think about your technical skills and project needs. Scrapy is perfect for developers seeking flexibility, Octoparse is great for beginners with simple requirements, and InstantAPI.ai combines automation with robust features for more complex tasks.

Tips for Choosing the Best Tool for Your Needs

Use Free Trials or Evaluation Plans

Take advantage of free trials or evaluation plans to test tools like InstantAPI.ai or ParseHub without spending money upfront. For instance, ParseHub's free tier lets you scrape up to 200 pages in 40 minutes, making it ideal for small-scale testing. Use these trial periods to see how well each tool handles your specific tasks before deciding on a paid plan.

Check Support and Documentation

Good documentation, active user communities, and responsive support can make or break your experience with a web scraping tool. Take Scrapy as an example: it has earned 52.5k stars on GitHub and is known for its detailed documentation and engaged community. Look for tools that offer clear guides, active forums, and direct support options like email or live chat.

Read Reviews and Case Studies

User reviews and case studies provide insights into how tools perform in real-world scenarios. Focus on feedback that aligns with your technical needs and goals. For example, many Octoparse users praise its AI-based auto-detecting mode, which simplifies tasks for non-technical users.

When reading reviews, consider factors like:

- Scalability and performance

- Integration options

- Common challenges and how users solve them

- Success stories from real-world applications


Match Your Needs to the Right Tool

Picking the right web scraping tool comes down to understanding your specific requirements. Developers might lean toward code-heavy solutions, while business users often prefer tools that are easier to use but still powerful.

Look for tools that offer proxy support and anti-ban features, especially if you're working with dynamic or more challenging websites. For example, AI-driven platforms like InstantAPI.ai are great for tackling complex data extraction while staying user-friendly.

Another key factor is scalability. Make sure the tool can grow with your needs, both in terms of technical capability and pricing flexibility.

Here’s a quick comparison of popular tools based on different needs:

| Tool | Best For | Technical Skill Level | Scalability |
|---|---|---|---|
| Scrapy | Large-scale, complex projects | Advanced | High |
| Octoparse | Simple to moderate scraping tasks | Beginner | Medium |
| InstantAPI.ai | Automated scraping for dynamic content | Intermediate | High |

Choose a tool that not only meets your current requirements but also has strong documentation, reliable support, and regular updates to ensure lasting success in your web scraping projects.
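If you like to reason about choices programmatically, the comparison table in this section can be turned into a simple filter. The sketch below copies the table's skill and scalability ratings. The orderings (including a "Low" scalability level that no listed tool uses) and the `shortlist` function are assumptions added for illustration.

```python
# Assumed orderings, from least to most demanding or capable.
SKILL_ORDER = ["Beginner", "Intermediate", "Advanced"]
SCALE_ORDER = ["Low", "Medium", "High"]

# Rows copied from the comparison table in this section.
TOOLS = [
    {"tool": "Scrapy", "skill": "Advanced", "scalability": "High"},
    {"tool": "Octoparse", "skill": "Beginner", "scalability": "Medium"},
    {"tool": "InstantAPI.ai", "skill": "Intermediate", "scalability": "High"},
]


def shortlist(user_skill: str, needed_scale: str) -> list[str]:
    """Tools the user can operate that also meet the required scalability."""
    skill = SKILL_ORDER.index(user_skill)
    scale = SCALE_ORDER.index(needed_scale)
    return [
        row["tool"]
        for row in TOOLS
        if SKILL_ORDER.index(row["skill"]) <= skill
        and SCALE_ORDER.index(row["scalability"]) >= scale
    ]


print(shortlist("Intermediate", "High"))  # ['InstantAPI.ai']
print(shortlist("Advanced", "Medium"))    # ['Scrapy', 'Octoparse', 'InstantAPI.ai']
```

A filter like this only narrows the field. Documentation quality, support, and pricing still need a closer look before you commit.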

FAQs

Here are answers to some common questions about web scraping tools to help you choose the right one for your needs.

What is the best tool for web scraping?

The best tool varies based on your specific requirements and technical skills. Here's a quick overview of popular options:

| Tool | Best For |
|---|---|
| BeautifulSoup | Basic scraping tasks, ideal for Python users |
| Puppeteer | Browser automation, suited for JavaScript developers |
| Mozenda | Business-friendly, structured data extraction |
| ScrapeHero Cloud | Large-scale, enterprise-level projects |

If you're just starting out, visual tools like Octoparse are user-friendly and require no coding. Developers often lean towards BeautifulSoup or Puppeteer for more customization, while enterprises tend to prefer robust solutions like ScrapeHero Cloud. Choose a tool that matches your goals and technical expertise.

Which AI tool is best for web scraping?

AI tools bring efficiency to web scraping by automating challenging tasks. Here's a look at some top options:

| Tool | Key Strength |
|---|---|
| Import.io | Scalable, enterprise-grade data extraction |
| InstantAPI.ai | Adapts quickly to website changes |
| Kadoa | Cleans and organizes unstructured data |

Each tool has its own strengths. Import.io is great for handling large-scale operations, InstantAPI.ai is effective for dynamic content, and Kadoa focuses on preparing messy data for analysis. Think about the scale and complexity of your project when deciding which AI-powered tool to use.

The right choice will depend on your immediate needs and how well the tool can grow with your project. Regularly assessing your tools ensures they stay aligned with your goals.