Web scraping helps you collect data from websites quickly and accurately. It's like automating the copy-paste process. Here's a quick overview:

Why Use Web Scraping?

- Saves time compared to manual data collection.

- Tracks prices, analyzes trends, and gathers large datasets easily.

- Used in industries like e-commerce, journalism, and research.

Beginner-Friendly Tools

- Beautiful Soup: Great for small projects and static websites.

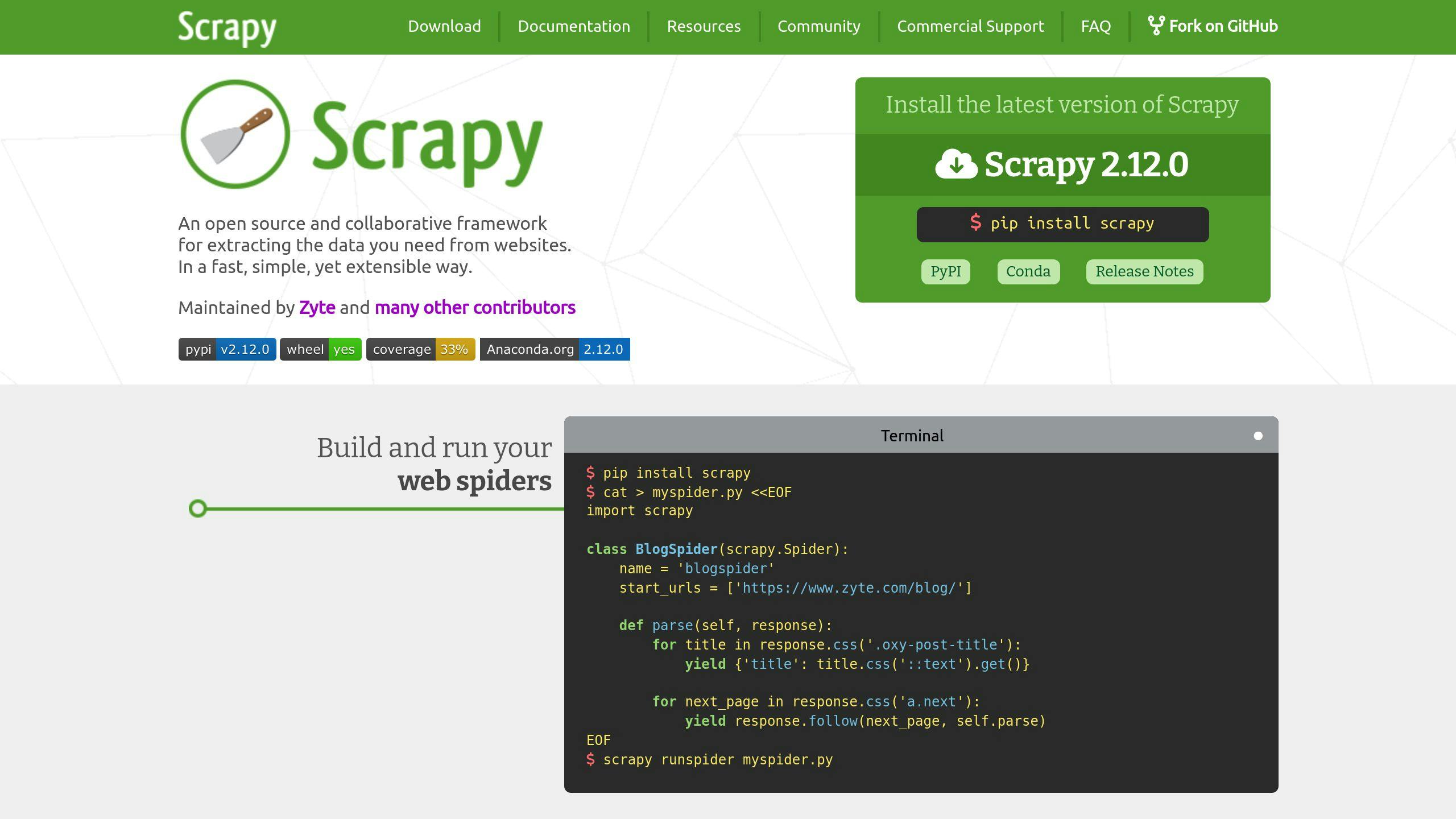

- Scrapy: Handles large-scale scraping and multiple pages.

- Selenium: Ideal for dynamic websites and interactive content.

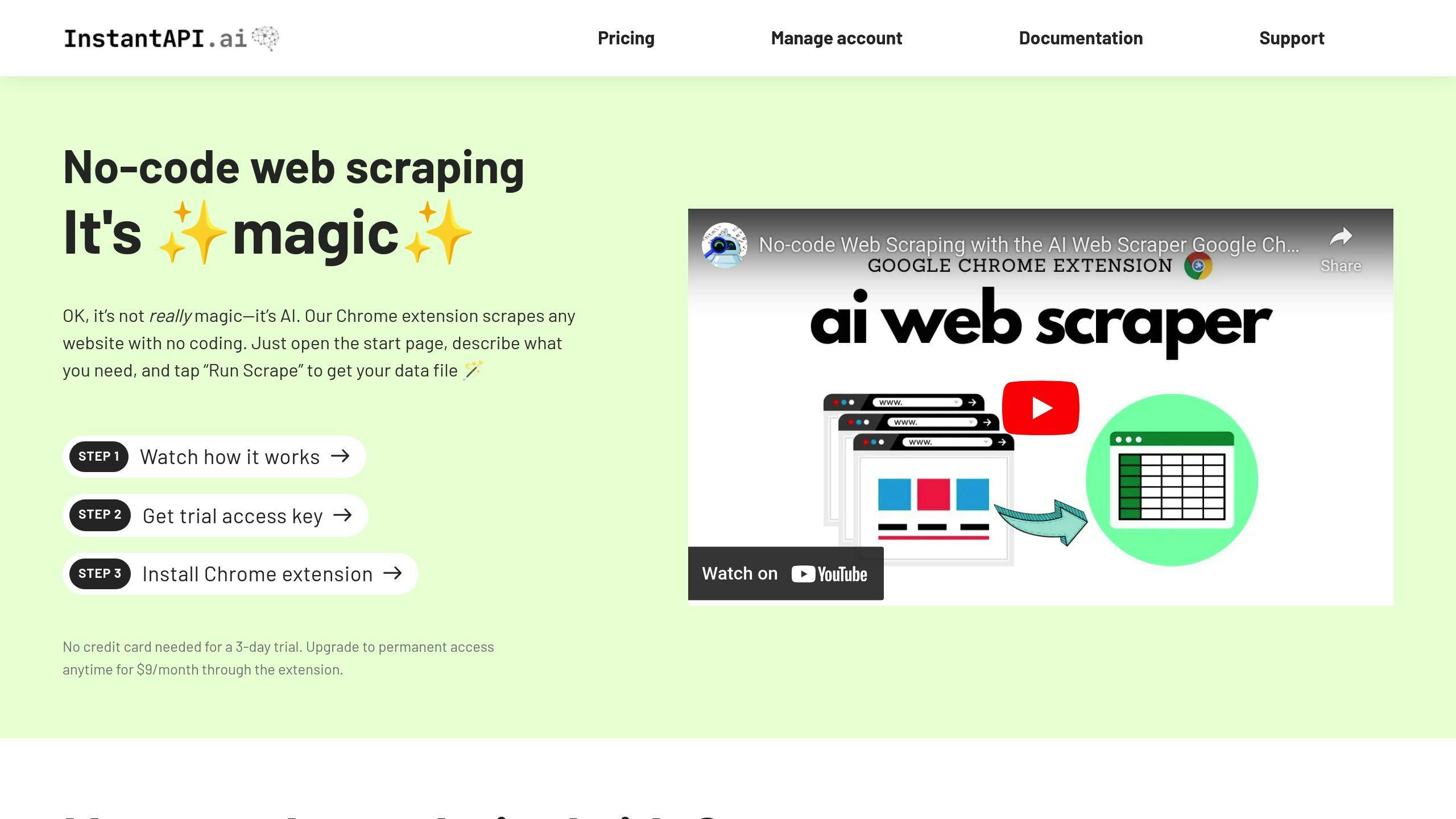

- AI Tools (e.g., InstantAPI.ai): Simplifies scraping for complex or changing websites.

Steps to Start Scraping

- Pick a Website: Check its robots.txt file for rules.

- Understand the Structure: Inspect the HTML to locate data.

- Write a Script: Use Python libraries like Beautiful Soup or Scrapy.

- Handle Errors: Add timeout and error-handling code.

Ethical Scraping Tips

- Respect robots.txt rules.

- Limit requests to avoid overwhelming servers.

- Use proxies and delays to mimic human browsing.

Quick Comparison of Tools

| Tool | Best For | Learning Curve |
|---|---|---|
| Beautiful Soup | Small projects, static sites | Easy |
| Scrapy | Large-scale projects | Moderate |
| Selenium | Dynamic, interactive sites | Moderate to Complex |
| InstantAPI.ai | AI-powered, tricky websites | Easy to Moderate |

Key Takeaway: Start small with tools like Beautiful Soup, then explore advanced options like Scrapy or AI-based tools as your projects grow.

Tools for Beginners in Web Scraping

Just starting with web scraping? Let's look at the tools that'll make your life easier.

Using Beautiful Soup

Beautiful Soup is your best friend when you're new to web scraping. It's a Python library that helps you pull data from HTML and XML files - even messy ones! It works great with static websites, like pulling product info from online catalogs.

Here's how simple it is to get started:

```python
import requests
from bs4 import BeautifulSoup

url = "http://example.com"
response = requests.get(url)  # download the page
soup = BeautifulSoup(response.text, "html.parser")  # parse the HTML into a searchable tree
```

Getting Started with Scrapy

Think of Scrapy as your Swiss Army knife for web scraping. It's built to handle big jobs - like pulling data from thousands of pages at once. Want to grab every listing from a real estate website? Scrapy's your tool.

What makes Scrapy stand out? It can follow links and handle multiple pages at the same time, perfect when you need to collect lots of data quickly.

Automating with Selenium

Ever tried to grab data from a website that keeps changing? That's where Selenium shines. It acts like a real person - clicking buttons, filling forms, and dealing with dynamic content. Need to check flight prices that only show up after you fill out a form? Selenium's got you covered.

Here's how these tools stack up:

| Tool | Best For | Learning Curve |
|---|---|---|
| Beautiful Soup | Static websites, single pages | Easy |
| Scrapy | Large-scale scraping, multiple pages | Moderate |
| Selenium | Dynamic content, interactive sites | Moderate to Complex |

How to Start Web Scraping

Want to dive into web scraping? Let's walk through the key steps to get you pulling data like a pro.

Choosing Websites to Scrape

Before writing any code, pick the right website for your project. Open the site's robots.txt file (for example, www.example.com/robots.txt) and read its terms of service; together they tell you whether scraping is allowed. For your first attempt, stick to beginner-friendly targets such as Wikipedia or a practice sandbox like books.toscrape.com, which is built for scraping exercises. Both offer lots of data to practice with, but check each site's current rules before you start, because policies change.

Want to peek under a website's hood? Just right-click anywhere on the page and hit "Inspect." This opens your browser's developer tools, showing you the page's building blocks - super helpful for planning your scraping strategy.

Understanding Website Structure

You'll run into two types of websites: static and dynamic. Static sites deliver their content right in the HTML - perfect for beginners. Dynamic sites load content through JavaScript after the page arrives, so a plain HTTP request may not see it; for those you need a browser-automation tool such as Selenium.

Look for patterns in the HTML code. Maybe all the prices sit in <span> tags with a 'product-price' class. These patterns are like treasure maps - they'll lead you straight to the data you want.
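As a small sketch of following such a pattern, the snippet below pulls every `product-price` span out of a page with Beautiful Soup. The HTML is a made-up sample standing in for a page you fetched, and `extract_prices` is a hypothetical helper name.

```python
from bs4 import BeautifulSoup

# Hypothetical product listing, standing in for HTML downloaded with requests.
SAMPLE_HTML = """
<ul>
  <li><span class="product-name">Kettle</span> <span class="product-price">$24.99</span></li>
  <li><span class="product-name">Toaster</span> <span class="product-price">$39.50</span></li>
</ul>
"""


def extract_prices(html):
    """Return the text of every <span class="product-price"> element."""
    soup = BeautifulSoup(html, "html.parser")
    # class_ has a trailing underscore because "class" is a reserved word in Python.
    spans = soup.find_all("span", class_="product-price")
    return [span.get_text(strip=True) for span in spans]


print(extract_prices(SAMPLE_HTML))  # ['$24.99', '$39.50']
```

Once the pattern is captured in one function, a layout change means updating a single selector rather than hunting through the whole script.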

Writing and Running Your Script

Ready to code? Here's a simple example using Python and BeautifulSoup to grab book titles from a bookstore:

```python
import requests
from bs4 import BeautifulSoup

url = "http://books.toscrape.com"
response = requests.get(url)
soup = BeautifulSoup(response.text, "html.parser")

# On this page, each book title sits inside an <h3> tag
titles = soup.find_all("h3")
for title in titles:
    print(title.text.strip())
```

Things can go wrong - websites time out or data goes missing. Here's how to handle those hiccups:

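```python
import requests

url = "http://books.toscrape.com"

try:
    response = requests.get(url, timeout=5)  # give up after 5 seconds
    response.raise_for_status()  # raise an error for 4xx/5xx responses
except requests.exceptions.RequestException as e:
    print(f"An error occurred: {e}")
```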
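Missing data needs its own guard, because a lookup that finds nothing returns `None` rather than raising a network error. The sketch below shows one way to handle it; `text_or_default` is a hypothetical helper and the HTML is a made-up sample in which the second book has no price.

```python
from bs4 import BeautifulSoup


def text_or_default(parent, selector, default="N/A"):
    """Return the stripped text of the first match, or a default if nothing matches."""
    element = parent.select_one(selector)
    # select_one returns None when the selector matches nothing.
    return element.get_text(strip=True) if element is not None else default


# Made-up sample: the second book is missing its price.
html = """
<div class="book"><h3>First Book</h3><p class="price">12.00</p></div>
<div class="book"><h3>Second Book</h3></div>
"""

soup = BeautifulSoup(html, "html.parser")
rows = [
    {"title": text_or_default(book, "h3"), "price": text_or_default(book, "p.price")}
    for book in soup.select("div.book")
]
print(rows)
```

A placeholder such as `"N/A"` keeps every row the same shape, which makes gaps easy to spot later instead of crashing the script halfway through a run.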

Here's what to check before you start scraping:

| Element | What to Check | Why It Matters |
|---|---|---|
| Robots.txt | Allowed/Disallowed paths | Shows which pages the site asks scrapers to avoid |
| Page Structure | HTML patterns | Makes data extraction work |
| Content Loading | Static vs Dynamic content | Helps pick the right tools |

Tips for Ethical Web Scraping

Think of websites as someone's digital home - you wouldn't barge in uninvited, right? Let's look at how to scrape websites while being a good digital citizen.

Respecting Robots.txt Rules

The robots.txt file is like a website's house rules. It tells you which rooms you can enter and which ones are off-limits. For instance, Amazon says "no entry" to their review pages, while Wikipedia keeps most doors open.

Here's what a typical robots.txt looks like:

```
User-agent: *
Disallow: /private/
Allow: /public/
Crawl-delay: 10
```
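You don't have to read these rules by eye. As a sketch, Python's standard-library `urllib.robotparser` can answer "may I fetch this URL?" for you. The rules below are the sample from above, pasted in as a string so the example runs offline, and the example.com URLs are placeholders.

```python
from urllib.robotparser import RobotFileParser

# The sample rules from above, inlined so the example needs no network access.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Allow: /public/
Crawl-delay: 10
"""

parser = RobotFileParser()
# parse() takes the file's lines; for a live site, use set_url(...) and read() instead.
parser.parse(ROBOTS_TXT.splitlines())

print(parser.can_fetch("*", "https://www.example.com/public/page.html"))   # True
print(parser.can_fetch("*", "https://www.example.com/private/page.html"))  # False
print(parser.crawl_delay("*"))                                             # 10
```

Calling `can_fetch` before each request is a cheap way to keep a script inside the site's stated rules.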

Using Proxies and Limiting Requests

Hitting a website too hard is like repeatedly ringing someone's doorbell - it's annoying and might get you banned. Smart scraping means acting like a regular visitor.

| Request Type | Wait Time | Purpose |
|---|---|---|
| Product pages | 5-10 seconds | Acts like normal browsing |
| Search results | 15-20 seconds | Keeps server happy |
| API calls | 1-2 seconds | Stays within limits |
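In a plain Python script, waits like these can be applied with a small helper. The sketch below is one possible approach, not a standard recipe: `fetch_politely` is a hypothetical name, and the `fetch` argument is whatever function you use to download a page.

```python
import random
import time


def fetch_politely(urls, fetch, min_delay=5.0, max_delay=10.0, sleep=time.sleep):
    """Call fetch(url) for each URL, pausing a random interval between requests."""
    results = []
    for index, url in enumerate(urls):
        if index > 0:
            # Randomize the pause so requests do not arrive on a fixed beat.
            sleep(random.uniform(min_delay, max_delay))
        results.append(fetch(url))
    return results
```

With the requests library, a call might look like `fetch_politely(urls, lambda u: requests.get(u, timeout=5))`. The `sleep` parameter exists only so the pause can be swapped out when testing.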

Want to make this easy? Use Scrapy and set DOWNLOAD_DELAY = 5 in your settings. Done!
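For reference, here is a sketch of what a polite `settings.py` excerpt might look like. `DOWNLOAD_DELAY` is the setting mentioned above; the other four are additional Scrapy settings chosen here for illustration, and the values are starting points rather than recommendations from the Scrapy project.

```python
# settings.py (excerpt)
ROBOTSTXT_OBEY = True               # skip URLs that robots.txt disallows
DOWNLOAD_DELAY = 5                  # base pause, in seconds, between requests to the same site
RANDOMIZE_DOWNLOAD_DELAY = True     # vary each pause between 0.5x and 1.5x of DOWNLOAD_DELAY
CONCURRENT_REQUESTS_PER_DOMAIN = 1  # one request at a time per domain
AUTOTHROTTLE_ENABLED = True         # adapt the delay to how quickly the server responds
```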

Keeping Scripts Updated

Websites change their layout just like stores rearrange their shelves. Check your scripts weekly to catch problems early. Watch out for:

- Data that's gone missing

- Empty results

- HTTP 403 errors

- Different CSS selectors

Run a test script against a page you know well. If it breaks, you'll need to update your selectors. Tools like Assertible or UptimeRobot can watch your scripts and ping you when something's wrong.
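A test script of this kind can be very small. The sketch below checks whether each selector your scraper depends on still matches something; the selector names, the CSS, and the sample page are all hypothetical.

```python
from bs4 import BeautifulSoup


def find_broken_selectors(html, selectors):
    """Return the names of selectors that no longer match anything in the page."""
    soup = BeautifulSoup(html, "html.parser")
    return [name for name, css in selectors.items() if not soup.select(css)]


# Hypothetical selectors your scraper depends on.
SELECTORS = {"title": "h3 a", "price": "p.price_color"}

# Made-up page after a redesign that renamed the price class.
page = '<h3><a href="/b/1">A Book</a></h3><p class="cost">9.99</p>'
print(find_broken_selectors(page, SELECTORS))  # ['price']
```

An empty list means every selector still finds data; anything else tells you exactly which selector to update.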

"The key to sustainable web scraping is respecting the website's resources and terms of service. By implementing proper delays and following robots.txt guidelines, you ensure both your scraper's reliability and the website's stability", notes the documentation from Scrapy, a leading web scraping framework.

Advanced Tools and Methods

Let's look at how modern tools and AI can help you handle tougher web scraping jobs.

Comparing Web Scraping Tools

Not all scraping tools are created equal. Here's what you need to know about the main players:

| Tool | Best For | Key Features | Cost |
|---|---|---|---|
| Beautiful Soup | Small projects, static sites | HTML parsing | Free |
| Scrapy | Large-scale projects | Concurrent crawling, link following | Free |
| Selenium | Dynamic JavaScript sites | Browser automation | Free |
| InstantAPI.ai | Production environments | AI-powered extraction | Free to premium options |

When picking your tool, focus on:

- How big and complex your project is

- What coding skills you'll need

- What you can spend

AI-Based Tools like InstantAPI.ai

AI is changing the game for web scraping, especially when dealing with tricky websites. Take InstantAPI.ai - it's making scraping easier with smart features that handle the heavy lifting for you.

Here's what makes AI tools stand out: They work great with websites that give traditional scrapers headaches, like:

- Sites that update their content all the time

- Pages with weird layouts

- Websites that try to block scrapers

Think of AI scraping tools as your smart assistant that learns and adjusts on the fly. When websites change (and they always do), these tools figure it out without you having to rewrite your code. InstantAPI.ai comes packed with premium proxies and can handle JavaScript - stuff that would normally give you a headache to set up yourself.

Who should use what? If you're just starting out, Beautiful Soup is your friend. But if you're running a business that depends on reliable data collection, AI tools like InstantAPI.ai might be worth the investment. They'll save you time and stress in the long run.

The bottom line? Pick your tool based on what you actually need to do, not what everyone else is using. AI tools are getting better every day at handling the tough stuff, and they're showing us what's possible in web scraping.

Conclusion

Web scraping has grown from a niche technical skill into a practical tool that anyone can use to collect web data. Let's look at what we've learned about the tools and methods that make data gathering easier than ever.

You've got plenty of tools to choose from, no matter your experience level. Beautiful Soup works great for beginners working with simple websites. Scrapy shines when you need to handle big projects. For websites heavy on JavaScript? That's where Selenium comes in handy. And if you're dealing with sites that try to block scrapers, tools like InstantAPI.ai can help you out with smart features and automatic proxy switching.

Here's a practical guide to picking your first tool:

| Experience Level | Tool to Try | Start With This |
|---|---|---|
| New to Scraping | Beautiful Soup | Pull data from one webpage |
| Some Experience | Scrapy | Collect data from multiple pages |
| Tech-Savvy | Selenium/AI Tools | Extract data from interactive sites |

Think of web scraping like learning to cook - start with simple recipes (like grabbing data from a single page with Beautiful Soup) before moving on to more complex dishes. As you get better, you can try out fancier techniques and tools.

Quick Tips for Success:

- Check the robots.txt file before you start

- Space out your requests to be kind to websites

- Pick projects that match your skill level

The world of web data is waiting for you. Pick a tool, start with something small, and remember to play nice with the websites you're scraping.

FAQs

Which AI tool is best for web scraping?

AI web scraping tools pack a punch when it comes to pulling data from complex websites. They're smart enough to handle tricky layouts, roll with website changes, and grab info from multiple places at once - all while keeping things accurate.

These tools work great for both newbies and pros, especially when you're dealing with big projects that might give you a headache otherwise.

But here's the thing: when picking your tool, you need to think about more than just what it can do. Make sure it plays nice with website rules, doesn't hammer servers too hard, and follows those robots.txt files. Being a good internet citizen matters!

Here's a quick look at some top tools:

| Tool | Best For |
|---|---|
| Import.io | Big business data needs |
| Parsehub | Beginners and simple projects |
| Kadoa | Making sense of messy data |
| InstantAPI.ai | Growing projects that need speed |

Is AI used in web scraping?

You bet it is! AI has become a game-changer in web scraping. Think of AI scrapers as super-smart assistants that can figure out how websites are built and grab exactly what you need - no hand-holding required.

Here's what makes AI scraping tools really shine:

- They turn messy web data into clean, usable information

- They can juggle multiple websites at once

- They keep doing their job accurately, even when handling tons of data

- They spot and adjust to website changes before you even notice

Just remember: pick a tool that matches what you're trying to do. Think about how much data you need to grab, how complex your project is, and what you can spend. The right tool will make your life way easier!