Struggling with scraping JavaScript-heavy websites? Here's a quick guide to choosing between Selenium, headless browsers, and InstantAPI.ai.

Dynamic websites built with frameworks like React or Angular make traditional scraping tools ineffective. To extract data efficiently, you need tools that can handle JavaScript rendering, AJAX calls, and anti-bot measures. Here’s a breakdown:

- Selenium: Best for complex interactions and multi-browser support. Handles advanced user actions but is slower and resource-intensive.

- Headless Browsers (driven by libraries such as Puppeteer and Playwright): Faster and more lightweight; ideal for basic JavaScript rendering, but they can struggle with complex tasks.

- InstantAPI.ai: Combines AI-powered scraping with ease of use, excelling in CAPTCHA handling and proxy management.

Quick Comparison

| Factor | Selenium | Headless Browsers | InstantAPI.ai |
|---|---|---|---|
| Performance | Slower, resource-heavy | Faster, lightweight | Efficient, AI-optimized |
| Dynamic Content | Excellent | Good | Excellent |
| Setup Complexity | High | Low | Very Low |
| Anti-Bot Handling | Strong | Limited | Strong |
| Best Use Case | Complex interactions | High-speed scraping | Easy, AI-driven scraping |

Choose the tool based on your project’s needs: Selenium for intricate workflows, headless browsers for speed, or InstantAPI.ai for simplicity and AI-driven efficiency.

JavaScript-Heavy Websites: Challenges and Impacts

Grasping these challenges is key to understanding how tools like Selenium and headless browsers can help tackle them.

Characteristics of JavaScript-Heavy Websites

Websites that rely heavily on JavaScript come with unique complexities when it comes to scraping. Frameworks like React and Angular use client-side rendering, meaning the content is generated in the browser instead of being delivered as pre-rendered HTML. Additionally, these sites often load data dynamically, triggered by user actions.

| Feature | Scraping Challenge |
|---|---|
| Client-side Rendering | Content isn't available in the initial HTML source |
| AJAX Calls | Data loads dynamically after the page is loaded |
| Single Page Applications | Updates occur without a full page reload |
| Lazy Loading | Content appears only after user interaction |

Why Traditional Scraping Falls Short

Traditional scrapers are designed to work with static HTML, making them ineffective for JavaScript-heavy sites. They can't execute JavaScript, process AJAX requests, or mimic user actions, which means they often miss dynamic content.
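
To make the limitation concrete, here is a minimal sketch using only Python's standard library. The HTML string is a made-up stand-in for what a client-side-rendered page might return before any JavaScript runs; the `product-name` class and the `/api/products` path are illustrative assumptions, not taken from a real site.

```python
from html.parser import HTMLParser

# Hypothetical initial response from a client-side-rendered page:
# an empty mount point plus a script that would fill it in a real browser.
INITIAL_HTML = """
<html>
  <body>
    <div id="root"></div>
    <script>
      fetch('/api/products').then(r => r.json()).then(render);
    </script>
  </body>
</html>
"""


class ProductNameParser(HTMLParser):
    """Collects the text of elements whose class list contains 'product-name'."""

    def __init__(self):
        super().__init__()
        self.names = []
        self._inside = False

    def handle_starttag(self, tag, attrs):
        classes = (dict(attrs).get("class") or "").split()
        if "product-name" in classes:
            self._inside = True

    def handle_endtag(self, tag):
        # Simplification: assumes product-name elements contain only text.
        self._inside = False

    def handle_data(self, data):
        if self._inside and data.strip():
            self.names.append(data.strip())


def extract_product_names(html: str) -> list[str]:
    """Parse static HTML only; no JavaScript is executed."""
    parser = ProductNameParser()
    parser.feed(html)
    return parser.names
```

Calling `extract_product_names(INITIAL_HTML)` finds nothing, because the product markup would only exist after the script has run in a browser. The same function does find names in HTML that was rendered on the server.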

The situation gets tougher when websites deploy anti-scraping defenses, such as:

- Monitoring unusual access patterns

- CAPTCHA challenges

- IP blocking and rate limiting

- Requiring user interactions to access content

To navigate these hurdles, modern scraping tools need to execute JavaScript, handle dynamic content updates, and simulate real user behavior. Selenium and headless browsers are particularly helpful in these scenarios, offering the functionality required to interact with and extract data from complex web pages effectively.

Selenium: Handling Dynamic Websites

Selenium is a go-to tool for tackling JavaScript-heavy websites that rely on dynamic content and user interactions. By using WebDriver, a standardized API, Selenium allows you to interact with fully loaded webpage elements. This makes it a solid choice for handling websites with complex JavaScript.

Selenium works seamlessly across various browsers like Chrome, Firefox, and Safari, using browser-specific drivers. Here's a quick overview of the supported browsers and their drivers:

| Browser | Driver |
|---|---|
| Chrome | ChromeDriver |
| Firefox | GeckoDriver |
| Safari | SafariDriver |
| Edge | EdgeDriver |
| Opera | OperaDriver |

Strengths and Weaknesses

Strengths

- Works with multiple programming languages, including Python, Java, JavaScript, C#, and Ruby.

- Supports cross-browser testing.

- Handles advanced user interactions like scrolling, clicking, and form submissions.

- Backed by a large community with plenty of resources and documentation.

Weaknesses

- Uses a lot of memory and CPU, which can slow down performance.

- Installation and setup can be complicated.

- Slower execution compared to headless browser tools.

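- Requires drivers and dependencies to be kept in step with browser releases (Selenium 4.6 and later include Selenium Manager, which automates driver downloads).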

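Selenium's WebDriverWait class implements explicit waits: it pauses the script until a condition is met or a timeout expires, so you don't query elements before the page's JavaScript has added them. This is particularly useful when scraping e-commerce or social media platforms. The call below waits up to 20 seconds for at least one element with the class `product-name` to be present in the DOM: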

```python
WebDriverWait(driver, 20).until(EC.presence_of_all_elements_located((By.CLASS_NAME, 'product-name')))
```
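
For context, here is a fuller sketch of how that wait might sit inside a small scraping function, including the imports it needs. It assumes Selenium 4 and a local Chrome installation; the function names, the window size, and the choice to return an empty list on timeout are illustrative decisions rather than part of Selenium itself.

```python
def chrome_arguments(headless: bool = True) -> list[str]:
    """Command-line switches for Chrome; '--headless=new' needs Chrome 109+."""
    args = ["--window-size=1280,900"]
    if headless:
        args.append("--headless=new")
    return args


def fetch_product_names(url: str, timeout: float = 20) -> list[str]:
    """Load a page and return the text of every 'product-name' element."""
    # Imported here so chrome_arguments() is usable without Selenium installed.
    from selenium import webdriver
    from selenium.common.exceptions import TimeoutException
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support import expected_conditions as EC
    from selenium.webdriver.support.ui import WebDriverWait

    options = webdriver.ChromeOptions()
    for arg in chrome_arguments():
        options.add_argument(arg)

    driver = webdriver.Chrome(options=options)
    try:
        driver.get(url)
        try:
            # Polls the DOM until at least one matching element is present.
            elements = WebDriverWait(driver, timeout).until(
                EC.presence_of_all_elements_located((By.CLASS_NAME, "product-name"))
            )
        except TimeoutException:
            return []  # nothing appeared within the timeout
        return [element.text for element in elements]
    finally:
        driver.quit()  # always release the browser process
```

A call such as `fetch_product_names("https://example.com/catalog")` (a placeholder URL) would start Chrome, wait for the elements, and shut the browser down again even if an error occurs.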

While Selenium can be resource-intensive, its extensive capabilities make it a dependable tool for complex web automation tasks. If your project requires simulating sophisticated user interactions, Selenium is hard to beat. However, for simpler tasks, headless browsers might be a more lightweight and efficient option.

Headless Browsers: A Lightweight Option

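Headless browsers run without a graphical interface. Selenium can also drive a browser in headless mode, but dedicated tools such as Puppeteer and Playwright run headless by default, which makes them faster and less demanding on system resources for straightforward tasks.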

What Are Headless Browsers?

Headless browsers are browsers that run in the background without displaying a visual interface, typically controlled through automation libraries such as Puppeteer and Playwright. They can execute JavaScript and process web content efficiently, making them a great fit for working with modern web applications and extracting data from dynamic pages.

Strengths and Weaknesses of Headless Browsers

To understand their capabilities, let’s break down the pros and cons of headless browsers:

| Aspect | Strengths | Weaknesses |
|---|---|---|
| Performance | Faster and uses fewer resources | Struggles with complex interactions |
| Setup | Easy to install and configure | Fewer browser options available |
| Automation | Great for basic scraping tasks | Less effective against advanced anti-bot measures |

Headless browsers are ideal for tasks like quickly scraping data from dynamic sites. For instance, when gathering product information from e-commerce platforms, they can handle JavaScript-rendered content efficiently while using fewer resources compared to full browsers.

However, they may run into difficulties with sophisticated anti-bot measures, such as CAPTCHAs or advanced detection systems. To get the most out of headless browsers, consider these strategies:

- Analyze network traffic to find API endpoints for direct data retrieval.

- Use proxy rotation to avoid rate limits.

- Add retry mechanisms to handle failed requests effectively.
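
The last two strategies can be combined in a few lines. The sketch below is one possible shape, not a prescribed implementation: `fetch` is a hypothetical callable you supply (for example, a wrapper around your headless browser or HTTP client), and the proxy strings are placeholders.

```python
import itertools
import time
from typing import Callable, Iterable, Optional


def fetch_with_retries(
    fetch: Callable[[str, Optional[str]], str],
    url: str,
    proxies: Iterable[Optional[str]] = (None,),
    max_attempts: int = 4,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> str:
    """Call fetch(url, proxy), rotating proxies and backing off between failures."""
    proxy_cycle = itertools.cycle(proxies)  # round-robin over the proxy pool
    last_error = None
    for attempt in range(max_attempts):
        proxy = next(proxy_cycle)
        try:
            return fetch(url, proxy)
        except Exception as exc:  # in real code, catch only network errors
            last_error = exc
            if attempt < max_attempts - 1:
                # Exponential backoff: base_delay, 2x, 4x, ...
                sleep(base_delay * 2 ** attempt)
    raise RuntimeError(
        f"giving up on {url} after {max_attempts} attempts"
    ) from last_error
```

Passing `sleep` as a parameter keeps the function easy to test without real delays. In production you would likely add random jitter to the backoff so that parallel workers do not retry in lockstep.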

When deciding between headless browsers and Selenium, think about the complexity of the task and the resources available. While headless browsers might not handle intricate automation as well as Selenium, they shine when speed and efficiency are the main priorities. Comparing the two tools highlights their unique strengths and limitations for different use cases.

Selenium vs. Headless Browsers: Comparison

When dealing with JavaScript-heavy websites, your choice between Selenium and headless browsers hinges on the specifics of your project.

Comparison Factors

Several factors play a role in determining which tool works best for handling JavaScript-heavy websites:

| Factor | Selenium | Headless Browsers |
|---|---|---|
| Performance & Resource Use | Slower and uses more resources | Faster and uses fewer resources |
| Browser Support | Works with multiple browsers | Playwright: Chromium, Firefox, and WebKit; Puppeteer: primarily Chrome/Chromium |
| Dynamic Content Handling | Great for complex interactions | Works well for basic JavaScript rendering |
| Setup Complexity | Requires more configuration | Easier to set up and deploy |
| Scalability | Harder to scale due to resource demands | Scales better in containerized setups |
| Anti-Bot Systems | Handles anti-bot challenges effectively | Struggles with advanced anti-bot measures |

Application Examples

Selenium shines in tasks that involve intricate workflows, like scraping job listings on LinkedIn, managing authentication, or navigating infinite scrolling. On the other hand, headless browsers are perfect for fast data extraction tasks, such as collecting headlines from JavaScript-heavy news sites. Their ability to run multiple processes at once makes them ideal for large-scale operations.
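
Infinite scrolling is a good example of such a workflow. A common pattern is to keep scrolling until the page height stops growing. The sketch below assumes a Selenium-style `driver` object that exposes `execute_script`; the pause length and the round limit are arbitrary starting values you would tune per site.

```python
import time
from typing import Callable


def scroll_to_bottom(
    driver,
    pause: float = 1.0,
    max_rounds: int = 30,
    sleep: Callable[[float], None] = time.sleep,
) -> int:
    """Scroll until the page height stops growing; return the number of scrolls."""
    last_height = driver.execute_script("return document.body.scrollHeight")
    rounds = 0
    while rounds < max_rounds:
        # Jump to the bottom so the page requests its next batch of content.
        driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")
        rounds += 1
        sleep(pause)  # give the newly requested content time to load
        new_height = driver.execute_script("return document.body.scrollHeight")
        if new_height == last_height:
            break  # no new content appeared, so assume the end was reached
        last_height = new_height
    return rounds
```

The `max_rounds` cap matters on feeds that never end: without it, the loop would keep scrolling for as long as the site keeps serving content.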

Here’s how they perform in common scenarios:

1. Product Catalog Scraping

Headless browsers are great for quickly extracting product details from sites built with modern JavaScript frameworks. Their lightweight nature allows for simultaneous processing of multiple pages.

2. User Session Management

Selenium is better suited for maintaining login states and handling authentication flows, making it the go-to choice for scraping data requiring logged-in access.

3. Price Monitoring Systems

Both tools work, but headless browsers tend to perform better for frequent price checks across many retailers. However, Selenium is more reliable when dealing with sites that have strict anti-scraping protections.
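
The simultaneous page processing mentioned in the product catalog scenario is usually capped so that a scraper does not open hundreds of pages at once. Here is a minimal sketch with `asyncio`; `fake_fetch_page` is a stand-in for a real headless-browser page load, and the concurrency limit of 5 is an arbitrary default.

```python
import asyncio
from typing import Awaitable, Callable


async def scrape_many(
    urls: list[str],
    fetch_page: Callable[[str], Awaitable[str]],
    max_concurrency: int = 5,
) -> dict[str, str]:
    """Fetch all URLs concurrently, with at most max_concurrency in flight."""
    semaphore = asyncio.Semaphore(max_concurrency)

    async def bounded(url: str) -> tuple[str, str]:
        async with semaphore:  # wait for a free slot before loading the page
            return url, await fetch_page(url)

    # gather() returns results in the same order as the input URLs.
    pairs = await asyncio.gather(*(bounded(url) for url in urls))
    return dict(pairs)


async def fake_fetch_page(url: str) -> str:
    """Hypothetical placeholder for a real headless-browser page load."""
    await asyncio.sleep(0.01)
    return f"<html>content of {url}</html>"
```

With a real tool, `fetch_page` would open a page, wait for the content to render, and return its HTML. Running it is a single call, for example `asyncio.run(scrape_many(urls, fake_fetch_page))`.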

For even more advanced capabilities, combining these tools with platforms like InstantAPI.ai can take your data collection to the next level.

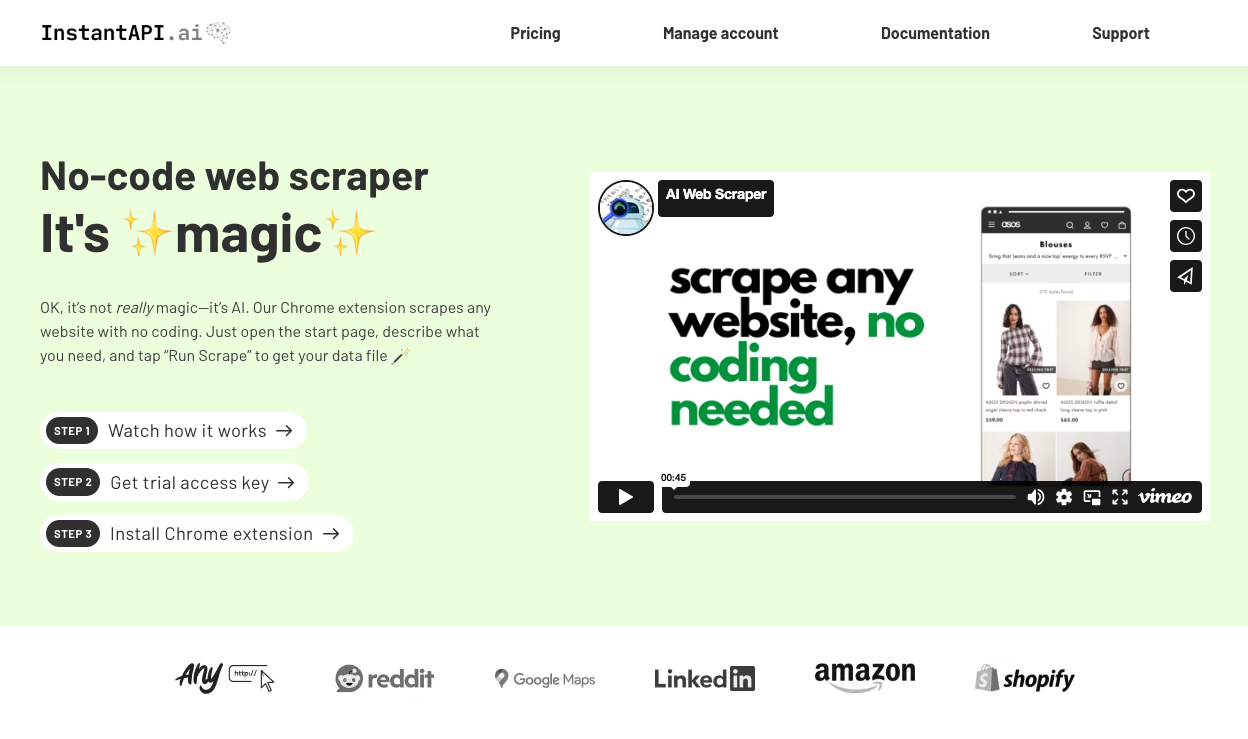

Integrating InstantAPI.ai for Data Extraction

InstantAPI.ai blends the control of Selenium with the speed of headless browsers, making it a practical choice for scraping JavaScript-heavy websites. By addressing the weaknesses of both Selenium and headless browsers, it offers a hybrid solution to tackle modern web scraping challenges.

Features of InstantAPI.ai

InstantAPI.ai takes a unique dual approach to web scraping, providing powerful JavaScript rendering without the hassle of complex setup. Here are some key technical highlights:

| Feature | What It Does |
|---|---|
| AI-Powered Scraping | Handles dynamic content and adapts to site changes |
| Premium Proxies | Built-in rotation system avoids IP bans |
| JavaScript Rendering | Easily processes dynamic and interactive content |
| Simplified Selection | Removes the need for XPath, making it easier to use |
| Automatic Updates | Keeps scrapers functional as websites evolve |

The platform also features a Chrome extension for easy, point-and-click data extraction, making it beginner-friendly. For developers, it offers an API that allows advanced customization for more complex scraping needs.

Use Cases and Pricing of InstantAPI.ai

The Chrome extension is priced at $9/month, making it suitable for small to mid-sized projects. Larger enterprises can opt for custom API solutions, which include dedicated support and tailored implementation.

InstantAPI.ai shines in several real-world applications:

| Use Case | How It Helps |
|---|---|
| E-commerce Monitoring | Tracks prices and inventory in real-time on JavaScript-heavy sites |
| Social Media Analytics | Extracts data from dynamic feeds and infinite scroll pages |
| Market Research | Automates competitor data collection from modern web platforms |
| Content Aggregation | Gathers articles efficiently from dynamic news websites |

For JavaScript-heavy sites, InstantAPI.ai bridges the gap between Selenium's detailed control and the efficiency of headless browsers. Its AI-driven features simplify tasks like CAPTCHA handling and proxy rotation, ensuring consistent and reliable data extraction.

With InstantAPI.ai, choosing between Selenium, headless browsers, or a hybrid approach becomes much easier.

Choosing the Right Tool

Picking the right tool for working with JavaScript-heavy websites isn't a decision to take lightly. The choice between Selenium, headless browsers, and InstantAPI.ai can make a big difference in how smoothly your project runs.

Factors to Consider

When deciding on a tool, think about the specific needs of your project and the unique features each tool offers. Here's how InstantAPI.ai stands out:

| Factor | InstantAPI.ai Strength |
|---|---|
| Resource Management | Uses AI to allocate resources efficiently |
| Implementation | Easy setup through a Chrome extension |
| Dynamic Content | Handles JavaScript and interactive elements effectively |
| Execution Speed | Designed for quick, dynamic tasks |
| Maintenance | Automatically updates to adapt to site changes |

The right choice depends on your project's technical demands. For example, Selenium is great for tackling complex interactions, while headless browsers are known for their speed. InstantAPI.ai strikes a balance by offering powerful features without the steep learning curve. In some cases, combining these tools might be the smartest move for achieving a mix of efficiency, control, and scalability.

Combining Tools

A hybrid strategy can be incredibly effective. For instance, you could use Selenium for intricate login processes, headless browsers for quick data extraction, and InstantAPI.ai to manage anti-bot defenses. This approach lets you capitalize on the strengths of each tool while working around their weaknesses.

The trick is knowing when and where to use each tool. Modern web scraping often requires this kind of adaptable approach to ensure you get the best results without overloading your resources or compromising reliability.

Conclusion: Selecting the Best Tool

Picking the right tool for JavaScript-heavy websites boils down to what your project needs. Selenium is ideal for handling complex tasks, headless browsers focus on speed and efficiency, and InstantAPI.ai simplifies the process with its AI-powered features.

Selenium is a go-to for intricate web automation and testing. It works across multiple browsers and supports languages like Python, Java, and C#. Its advanced features make it perfect for projects requiring detailed user interaction simulations.

Headless browser tools, like Puppeteer and Playwright, shine in efficiency. At the time of writing, Puppeteer has 89.3k GitHub stars and 3.1M weekly downloads, while Playwright has 68.3k stars and 8.7M weekly downloads. These numbers underscore their popularity for lightweight and high-volume scraping tasks.

InstantAPI.ai, on the other hand, offers a straightforward, AI-powered option. Its Chrome extension makes scraping accessible without the hassle of complex setups, making it a great choice for teams looking for a no-fuss solution.

Here’s a quick reference for choosing the right tool:

| Scenario | Recommended Tool | Key Benefit |
|---|---|---|
| Complex Scenarios & Multi-Browser Use | Selenium | Advanced automation capabilities |
| High-Volume Data Extraction | Headless Browsers | Faster, resource-efficient scraping |
| Quick Prototyping & AI-Based Tasks | InstantAPI.ai | Easy-to-use, no-code functionality |

Success in web scraping comes from knowing how to use each tool effectively. Selenium excels in automation, headless browsers prioritize speed, and InstantAPI.ai brings an AI-driven ease to the table. The best choice depends on your specific needs - whether it's handling JavaScript, managing resources, or reducing maintenance efforts.

For more insights, check out the FAQs, which address common questions about speed, efficiency, and more.

FAQs

Is headless faster than Selenium?

Generally, yes. Headless browsers are typically faster than Selenium driving a full browser with a visible window, and can complete tasks roughly twice as quickly, though the actual gain depends on the site and the workload. The advantage comes from skipping on-screen rendering, which reduces resource use and speeds up command execution.

Headless browsers shine in certain scenarios:

| Use Case | Performance Advantage |
|---|---|
| Large-scale Web Scraping | Higher throughput with lower resource consumption |
| Automated Testing | Cuts test execution times by about 30% |

That said, while headless browsers are great for speed, they may struggle with complex interactions. In such cases, you might need to use Selenium or a combination of tools, depending on whether speed or full browser automation is more important for your needs.