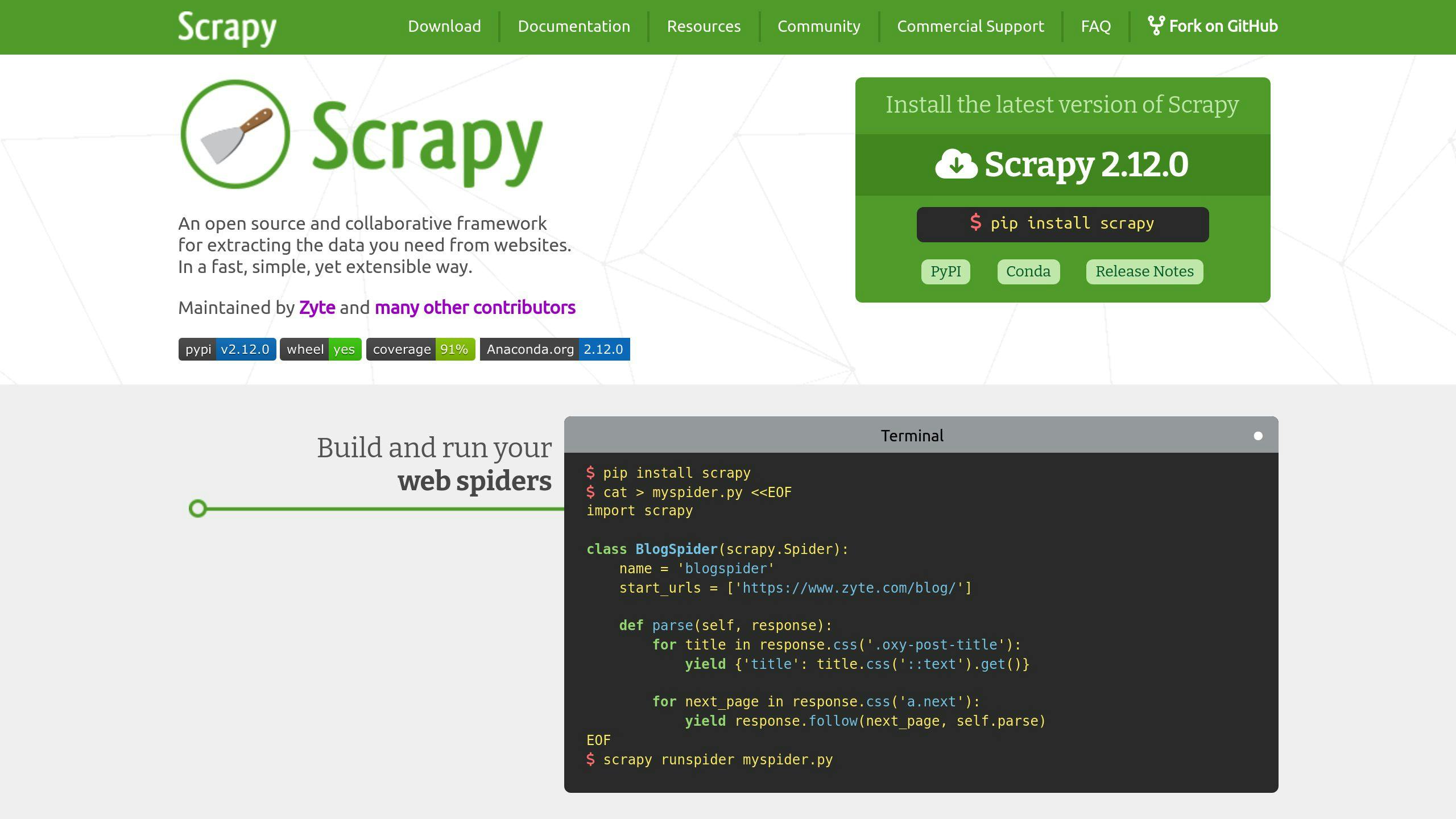

Web scraping is evolving with AI. By pairing Scrapy, a powerful Python-based scraping framework, with AI technologies like NLP, machine learning, and computer vision, you can extract data smarter and faster, even from complex, dynamic websites.


Key Highlights:

- Scrapy Basics: A Python tool for fast, large-scale web scraping with asynchronous capabilities.
- AI Integration: Enhances scraping with features like:
  - NLP: Understands text context for better data extraction.
  - Machine Learning: Adapts to website changes automatically.
  - Computer Vision: Extracts data from images and layouts.
- Setup: Install libraries like TensorFlow, PyTorch, and spaCy alongside Scrapy in a structured environment.
- Applications:
  - E-commerce price tracking
  - Social media sentiment analysis
  - Large-scale web indexing
- Challenges Solved by AI:
  - Handling JavaScript-heavy content
  - Bypassing anti-bot measures
  - Smarter proxy management

Quick Comparison: AI Features in Web Scraping

| Feature | Function | Use Case |
|---|---|---|
| NLP | Context-aware text analysis | Product names, reviews |
| Machine Learning | Adapts to changes | Dynamic websites |
| Computer Vision | Visual data extraction | Product images, OCR |
| Proxy Management | Optimized IP rotation | Reduces bans, speeds up tasks |

Scrapy + AI is transforming data extraction, making it smarter, faster, and more adaptable to modern web challenges. Dive in to learn how to set up and use this powerful combination effectively.

Setting Up an AI-Powered Scrapy Environment

Setting up a Scrapy environment with AI capabilities involves a few steps to ensure smooth integration of these tools. Here's a breakdown of what you need to do.

Installing Scrapy and AI Libraries

First, create and activate a virtual environment to keep your dependencies organized. Then, install the necessary libraries:

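```bash
# Create and activate a virtual environment (Linux/macOS; on Windows run .venv\Scripts\activate)
python -m venv .venv && source .venv/bin/activate
# Install Scrapy and AI libraries
pip install scrapy==2.12.0 tensorflow torch scikit-learn spacy opencv-python
```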

Configuring Scrapy for AI Integration

To incorporate AI into your Scrapy project, update the configuration files. These adjustments help Scrapy work seamlessly with AI components:

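```python
# settings.py
CONCURRENT_REQUESTS = 16  # Scrapy's default; lower it if AI processing in pipelines becomes a bottleneck
ROBOTSTXT_OBEY = True     # respect each site's robots.txt rules

ITEM_PIPELINES = {
    # "yourproject" is a placeholder for your project's package name
    'yourproject.pipelines.AIPipeline': 300,
}
```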
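The settings above point to an `AIPipeline` class that is not shown. Here is a minimal sketch of what it might look like, assuming dict-like items with a `description` field and a classifier that is any callable mapping text to a label. `keyword_classifier` is a hypothetical stand-in for a spaCy or scikit-learn model you would load once in `open_spider`.

```python
# pipelines.py: hypothetical sketch of the AIPipeline referenced in settings.py
from typing import Callable, Optional


def keyword_classifier(text: str) -> str:
    """Hypothetical stand-in for a real model (e.g. a spaCy or scikit-learn classifier)."""
    lowered = text.lower()
    if any(word in lowered for word in ("laptop", "phone", "monitor")):
        return "electronics"
    if any(word in lowered for word in ("shirt", "jacket", "shoes")):
        return "apparel"
    return "other"


class AIPipeline:
    """Scrapy item pipeline that enriches each item with a model-predicted category."""

    def __init__(self, classifier: Optional[Callable[[str], str]] = None):
        self.classifier = classifier

    def open_spider(self, spider):
        # Load the (potentially expensive) model once per crawl, not once per item.
        if self.classifier is None:
            self.classifier = keyword_classifier

    def process_item(self, item, spider):
        # Assumes dict-like items with an optional "description" field.
        text = item.get("description", "")
        item["category"] = self.classifier(text) if text else "unknown"
        return item
```

Returning the item passes it on to the next pipeline in `ITEM_PIPELINES` order; a pipeline that wants to discard an item raises `scrapy.exceptions.DropItem` instead.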

Using AI Frameworks with Scrapy

Enhance your Scrapy project by leveraging AI frameworks like spaCy for text analysis, OpenCV for image processing, TensorFlow for machine learning, and PyTorch for deep learning. Keep your AI-related code separate from Scrapy's core functionality to simplify updates and scaling:

```text
your_project/
├── spiders/
├── ai_modules/
│   ├── text_processor.py
│   ├── image_analyzer.py
│   └── ml_classifier.py
├── pipelines.py
└── settings.py
```

Here’s how different frameworks can be applied:

| Framework | Use Case | Integration Example |
|---|---|---|
| spaCy | Text Analysis | Extracting entities, classifying text |
| OpenCV | Image Processing | Analyzing product images |
| TensorFlow | Machine Learning | Recognizing patterns |
| PyTorch | Deep Learning | Handling dynamic content |

Once your environment is ready, you can begin integrating AI techniques into Scrapy to tackle complex data extraction tasks.

AI Techniques for Data Extraction

Combining AI with Scrapy unlocks smarter ways to gather data. By using advanced AI methods, it's possible to overcome common scraping challenges and extract information with improved accuracy and understanding.

NLP for Smarter Contextual Scraping

Natural Language Processing (NLP) helps Scrapy pull out meaningful, context-aware data. It can identify things like product names, assess customer sentiment in reviews, and sort content based on relevance. This is especially handy when dealing with messy, unstructured data across various website formats.

"The NLP skills you develop will be applicable to much of the data that data scientists encounter as the vast proportion of the world's data is unstructured data and includes a great deal of text." - Hugo Bowne, Data Scientist
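To make "sort content based on relevance" concrete, here is a small sketch that ranks scraped text blocks by bag-of-words cosine similarity to a query. It is a deliberately simple, dependency-free stand-in; in practice you might swap in spaCy word vectors or a sentence-embedding model. The function names and sample data are illustrative.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    """Lowercase word counts; a crude tokenizer standing in for a real NLP pipeline."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two word-count vectors (0.0 if either is empty)."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def rank_by_relevance(blocks: list[str], query: str) -> list[tuple[float, str]]:
    """Return (score, block) pairs, most relevant first."""
    q = bag_of_words(query)
    scored = [(cosine(bag_of_words(block), q), block) for block in blocks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)


blocks = [
    "Free shipping on orders over $50",
    "The battery life of this laptop is excellent",
    "Subscribe to our newsletter",
]
for score, block in rank_by_relevance(blocks, "laptop battery review"):
    print(f"{score:.2f}  {block}")
```

Boilerplate such as shipping banners and newsletter prompts scores zero against the query, so it sinks to the bottom and can be filtered out before the item is stored.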

Machine Learning for Flexible Scraping

Machine learning allows Scrapy to adapt to changes in website structures automatically. This flexibility can:

- Spot and adjust to new content patterns

- Adapt scraping methods when site layouts shift

- Improve efficiency by learning from past patterns
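Full ML-driven layout adaptation is beyond a short example, but the "learn from past patterns" idea can be sketched with simple success-rate bookkeeping. The extractor keeps several candidate extraction strategies and tries the historically most reliable one first. This is a heuristic, not a trained model. The class name and the regex-based strategies are hypothetical, and in a spider each strategy would more likely wrap a `response.css(...)` or `response.xpath(...)` query.

```python
import re
from typing import Callable, Optional

Extractor = Callable[[str], Optional[str]]


class AdaptiveExtractor:
    """Tries extraction strategies in order of past success, promoting ones that keep working."""

    def __init__(self, strategies: dict[str, Extractor]):
        self.strategies = strategies
        # Laplace-smoothed counters (1 success / 2 attempts) so untried strategies aren't ruled out.
        self.successes = {name: 1 for name in strategies}
        self.attempts = {name: 2 for name in strategies}

    def success_rate(self, name: str) -> float:
        return self.successes[name] / self.attempts[name]

    def extract(self, html: str) -> Optional[str]:
        # Most reliable strategy first; fall back to the others when it fails.
        for name in sorted(self.strategies, key=self.success_rate, reverse=True):
            self.attempts[name] += 1
            value = self.strategies[name](html)
            if value:
                self.successes[name] += 1
                return value
        return None


def regex_extractor(pattern: str) -> Extractor:
    """Build a strategy from a regex with one capture group (illustrative only)."""
    def run(html: str) -> Optional[str]:
        match = re.search(pattern, html)
        return match.group(1) if match else None
    return run


extractor = AdaptiveExtractor({
    "old_layout": regex_extractor(r'<span class="price">([^<]+)</span>'),
    "new_layout": regex_extractor(r'<div data-price="([^"]+)"'),
})
print(extractor.extract('<div data-price="19.99">'))
```

After a site redesign, the strategy matching the new markup accumulates successes and is tried first on later pages, while the old one remains available as a fallback.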

Computer Vision for Visual Data Extraction

With computer vision, Scrapy can process images, videos, and other multimedia content. This is crucial for extracting data from visually rich websites. Some practical uses include:

| Component | Purpose | Example Use Case |
|---|---|---|
| Image Recognition | Classify and identify images | Scraping product catalogs |
| OCR Integration | Extract text from visuals | Pulling data from screenshots |
| Pattern Recognition | Locate specific visual elements | Detecting logos |

"Image acquisition is one of the most under-talked about subjects in the computer vision field!" - Adrian Rosebrock, Author of PyImageSearch
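As a small, concrete example of image pattern recognition for product catalogs, the sketch below computes an average hash (aHash). This is a classic perceptual-hashing technique, not machine learning, for spotting near-duplicate product images across listings. It assumes each image has already been decoded, converted to grayscale, and downscaled to 8×8 with a library such as OpenCV or Pillow. The pixel grids and the distance threshold are illustrative assumptions.

```python
def average_hash(pixels: list[list[int]]) -> int:
    """Average hash: one bit per pixel, set when the pixel is brighter than the mean."""
    flat = [value for row in pixels for value in row]
    mean = sum(flat) / len(flat)
    bits = 0
    for value in flat:
        bits = (bits << 1) | (1 if value > mean else 0)
    return bits


def hamming_distance(a: int, b: int) -> int:
    """Number of differing bits; small distances suggest visually similar images."""
    return bin(a ^ b).count("1")


def looks_duplicate(a: list[list[int]], b: list[list[int]], max_distance: int = 5) -> bool:
    # max_distance is a tunable assumption, not a standard value.
    return hamming_distance(average_hash(a), average_hash(b)) <= max_distance


# Synthetic 8x8 grayscale gradient and a uniformly brightened copy of it
image = [[(r * 8 + c) * 4 for c in range(8)] for r in range(8)]
brighter = [[v + 3 for v in row] for row in image]
print(looks_duplicate(image, brighter))
```

Because the hash compares each pixel to the image's own mean, uniform brightness or contrast changes (common between retailers' copies of the same photo) leave it largely unchanged.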

Overcoming Web Scraping Challenges with AI

AI doesn't just improve data extraction techniques - it also tackles some of the toughest challenges in web scraping today. Here's how AI is changing the game for modern web scraping workflows.

Handling Dynamic Content and JavaScript

Websites with dynamic, JavaScript-heavy content can be tricky to scrape. AI steps in with smarter methods like predictive loading and advanced rendering techniques.

Take scrapy-playwright, which renders pages in a real browser, as an example. AI components can be layered on top of it in several ways:

| Feature | AI Function | Advantage |
|---|---|---|
| Content Detection | Predictive modeling | Ensures all content is fully loaded |
| JavaScript Execution | Neural network analysis | Speeds up rendering by optimizing paths |
| Resource Management | Adaptive prioritization | Focuses resources on critical elements |
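For reference, enabling scrapy-playwright itself is a settings change, after `pip install scrapy-playwright` and `playwright install` to download the browsers. The two settings below are the ones its documentation asks for. Any AI-driven logic, such as deciding what to wait for, would be your own code layered on top. The CSS selector in the commented spider snippet is a placeholder.

```python
# settings.py: route requests through Playwright's browser (scrapy-playwright)
DOWNLOAD_HANDLERS = {
    "http": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
    "https": "scrapy_playwright.handler.ScrapyPlaywrightDownloadHandler",
}
# scrapy-playwright requires Twisted's asyncio-based reactor
TWISTED_REACTOR = "twisted.internet.asyncioreactor.AsyncioSelectorReactor"

# In a spider, individual requests opt into browser rendering, e.g.:
#   from scrapy_playwright.page import PageMethod
#   yield scrapy.Request(
#       url,
#       meta={
#           "playwright": True,
#           # wait until the (placeholder) selector appears before returning the response
#           "playwright_page_methods": [PageMethod("wait_for_selector", "div.dynamic-content")],
#       },
#   )
```

Requests without `"playwright": True` in their meta still go through the regular HTTP path, so you only pay the browser cost on pages that need it.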

Navigating Anti-Bot Measures and CAPTCHAs

AI-powered tools are now capable of solving CAPTCHAs automatically, ensuring scraping remains efficient while adhering to ethical standards.

"One of the most effective ways to handle CAPTCHAs when scraping data is to utilize a CAPTCHA solver service that employs advanced algorithms and machine learning techniques to automatically solve challenges." - HackerNoon Author

Capsolver, for instance, integrates with Scrapy via custom middleware, using machine learning to handle CAPTCHA challenges seamlessly.

Smarter Proxy Management and IP Rotation

AI has revolutionized proxy management, making it more efficient and reliable. Here's how:

- Pattern recognition: AI analyzes usage patterns to optimize IP rotation, cutting blocking rates by 75%.

- Real-time proxy analysis: Monitors performance to ensure speed and reliability.

- Smart routing: Distributes requests intelligently across different geographic locations.

| Proxy Feature | AI Role | Benefit |
|---|---|---|
| Dynamic IP Rotation | Usage pattern analysis | Reduces detection risks |
| Performance Monitoring | Predictive analysis | Keeps speeds consistent |
| Geographic Distribution | Intelligent routing | Ensures stable access across regions |

"AI + proxies = ❤️" - HackerNoon Author
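The "usage pattern analysis" idea in the table above can be illustrated with a downloader middleware. It scores each proxy by its observed success rate and sends more traffic to the better-performing ones. This is a sketch under assumptions: the class and the `PROXY_LIST` setting name are hypothetical, and the scoring is simple smoothed bookkeeping rather than a learned model. It relies on Scrapy honoring `request.meta["proxy"]`. You would register it in `DOWNLOADER_MIDDLEWARES` with an order below 750, so it runs before the built-in `HttpProxyMiddleware`.

```python
import random
from typing import Optional


class AdaptiveProxyMiddleware:
    """Downloader middleware that routes requests through proxies weighted by past success."""

    def __init__(self, proxies: list[str], rng: Optional[random.Random] = None):
        if not proxies:
            raise ValueError("at least one proxy is required")
        self.proxies = proxies
        self.rng = rng or random.Random()
        # Start every proxy at 1 success / 2 attempts (a smoothed 50% prior).
        self.successes = {p: 1 for p in proxies}
        self.attempts = {p: 2 for p in proxies}

    @classmethod
    def from_crawler(cls, crawler):
        # PROXY_LIST is a hypothetical project setting, e.g. ["http://10.0.0.1:8080", ...]
        return cls(crawler.settings.getlist("PROXY_LIST"))

    def score(self, proxy: str) -> float:
        return self.successes[proxy] / self.attempts[proxy]

    def record(self, proxy: str, ok: bool) -> None:
        self.attempts[proxy] += 1
        if ok:
            self.successes[proxy] += 1

    def process_request(self, request, spider):
        # Weighted random choice: good proxies get most traffic, weak ones are still probed.
        weights = [self.score(p) for p in self.proxies]
        request.meta["proxy"] = self.rng.choices(self.proxies, weights=weights, k=1)[0]
        return None  # continue down the middleware chain

    def process_response(self, request, response, spider):
        proxy = request.meta.get("proxy")
        if proxy in self.successes:
            # Treating these statuses as "blocked" is an assumption; tune it per site.
            self.record(proxy, ok=response.status not in (403, 429, 503))
        return response

    def process_exception(self, request, exception, spider):
        proxy = request.meta.get("proxy")
        if proxy in self.successes:
            self.record(proxy, ok=False)
        return None  # let Scrapy's default exception handling continue
```

Weighted random selection, rather than always picking the top scorer, keeps a little traffic flowing to weaker proxies so that one which recovers can regain its share.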

With these AI-driven advancements, developers can confidently tackle even the most complex web scraping challenges, opening doors to new possibilities in data extraction.


Applications of Scrapy with AI

AI-powered Scrapy is changing the game for businesses needing precise and efficient web data extraction. Here's a look at how this integration is making waves across industries.

E-Commerce Price Monitoring

Scrapy, combined with AI, offers businesses a smarter way to monitor competitor pricing. By integrating machine learning with web scraping, companies can track prices across various e-commerce platforms with improved precision.

| Feature | AI Integration | Business Benefit |
|---|---|---|
| Dynamic Price Detection | Machine learning identifies subtle pricing trends | Quick detection of competitor price changes |
| Product Matching | NLP analyzes descriptions to match products | Reliable cross-platform comparisons |
| Trend Analysis | Predictive models anticipate pricing shifts | Informed pricing strategies |

This setup ensures retailers can stay competitive while protecting their profit margins. For example, Zyte API's AI-driven scraping tools use features like automatic proxy rotation and anti-ban mechanisms, ensuring uninterrupted data collection even from highly protected websites. It's a powerful way to gain insights into competitive pricing.
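The "Product Matching" step can be approximated without a heavy NLP stack. The sketch below normalizes product titles, sorting their tokens so word order does not matter, and compares them with `difflib.SequenceMatcher` from Python's standard library. It is a fuzzy-string baseline rather than true NLP, and the 0.8 threshold and sample titles are assumptions to tune against your own data.

```python
import re
from difflib import SequenceMatcher


def normalize_title(title: str) -> str:
    """Lowercase, drop punctuation, and sort tokens so word order doesn't matter."""
    tokens = re.findall(r"[a-z0-9]+", title.lower())
    return " ".join(sorted(tokens))


def title_similarity(a: str, b: str) -> float:
    """Similarity in [0, 1] between two normalized product titles."""
    return SequenceMatcher(None, normalize_title(a), normalize_title(b)).ratio()


def is_same_product(a: str, b: str, threshold: float = 0.8) -> bool:
    # threshold is an assumption; tune it on a labeled sample of matched listings
    return title_similarity(a, b) >= threshold


print(is_same_product("Apple iPhone 15 Pro, Black", "black apple iphone 15 pro"))
print(is_same_product("Apple iPhone 15 Pro", "Samsung Galaxy S24 Ultra"))
```

Once two listings are matched, their scraped prices can be compared directly. Ambiguous pairs near the threshold are good candidates for review by a proper NLP model or a human.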

Social Media Sentiment Analysis

By combining Scrapy with Natural Language Processing (NLP), businesses can dive deep into public opinion and sentiment. This integration allows them to:

- Analyze sentiment data to understand brand perception.

- Monitor customer complaints and how they're addressed.

- Spot trends in user feedback that could impact strategy.

"AI tools adapt dynamically to different websites, improving efficiency." - X-Byte, Guide on AI Data Scraping Quality Ethics and Challenges

AI's ability to grasp the context and subtleties of social media posts helps businesses make smarter, data-driven decisions based on customer sentiment.
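To show the shape of the sentiment step, here is a deliberately tiny lexicon-based scorer with simple negation handling. Production systems would use a trained model instead, for example a spaCy text classifier or a transformer. The word lists and the three-token negation window are illustrative assumptions.

```python
import re

# Illustrative word lists; a real system would use a trained model or a curated lexicon.
POSITIVE = {"love", "great", "excellent", "fast", "helpful"}
NEGATIVE = {"hate", "broken", "slow", "terrible", "refund"}
NEGATORS = {"not", "never", "no", "isn't", "wasn't", "don't"}


def sentiment_score(text: str) -> int:
    """Positive minus negative word count; a negator flips words up to 3 tokens after it."""
    tokens = re.findall(r"[a-z']+", text.lower())
    score = 0
    for i, token in enumerate(tokens):
        polarity = 1 if token in POSITIVE else -1 if token in NEGATIVE else 0
        if polarity and any(t in NEGATORS for t in tokens[max(0, i - 3):i]):
            polarity = -polarity
        score += polarity
    return score


def label(text: str) -> str:
    score = sentiment_score(text)
    return "positive" if score > 0 else "negative" if score < 0 else "neutral"


for post in ["I love this, support was fast and helpful", "The app is not great"]:
    print(label(post), "|", post)
```

Even this toy version shows why context matters: without the negation window, "not great" would be counted as praise.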

Large-Scale Web Indexing

For organizations managing massive datasets, AI-enhanced Scrapy streamlines web indexing by making the process smarter and more efficient. Here's how:

| Capability | Function | Result |
|---|---|---|
| AI-driven Crawling | Focuses on relevant content and resources | Faster, more accurate data collection |
| Content Relevance | Automatically classifies data by industry | Organized and structured datasets |
| Tailored Indexing | Categorizes content for specific markets | Optimized for niche needs |

This approach is particularly useful for large-scale web data collection, ensuring that only the most relevant content is prioritized. AI helps allocate resources effectively, maintaining high data quality even in extensive operations.
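Crawling that "focuses on relevant content" often comes down to scoring links before they are scheduled. The sketch below scores a link's URL and anchor text against topic keywords. It then maps the score to Scrapy's request `priority`, where higher values are scheduled earlier. The keyword weights, scaling factor, and `shop.example` URLs are hypothetical, and a trained classifier could replace `relevance_score` without changing the rest.

```python
# Hypothetical topic keywords and weights for an e-commerce-focused index
TOPIC_WEIGHTS = {"price": 3.0, "product": 2.0, "review": 2.0, "specs": 1.5}


def relevance_score(url: str, anchor_text: str) -> float:
    """Sum the weights of topic keywords that appear in the URL or anchor text."""
    haystack = f"{url} {anchor_text}".lower()
    return sum(weight for word, weight in TOPIC_WEIGHTS.items() if word in haystack)


def crawl_priority(url: str, anchor_text: str, scale: int = 10) -> int:
    """Convert relevance to an integer priority for scrapy.Request(..., priority=...)."""
    return int(relevance_score(url, anchor_text) * scale)


# In a spider callback this might be used as (sketch):
#   for link in response.css("a"):
#       href = link.attrib.get("href")
#       text = link.css("::text").get(default="")
#       if href:
#           yield response.follow(href, callback=self.parse,
#                                 priority=crawl_priority(href, text))

print(crawl_priority("https://shop.example/products/42", "Read reviews and price history"))
print(crawl_priority("https://shop.example/about", "About us"))
```

Low-scoring links are still crawled eventually; they simply wait behind the pages most likely to contain useful data, which is where the efficiency gain on large crawls comes from.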

These applications highlight how AI-powered Scrapy is reshaping web data extraction. At the same time, they emphasize the need for ethical practices in data collection.

Best Practices and Ethical Considerations

Compliance with Robots.txt and Policies

The scrapy-robotparser library simplifies compliance with robots.txt files, helping manage requests more effectively and align with website policies.

| Compliance Element | Implementation | AI Integration |
|---|---|---|
| Rate Limiting | AutoThrottle extension | AI-driven request adjustments |
| Access Rules | Parsing robots.txt | Automated detection of rules |
| Request Timing | Off-peak scheduling | Dynamic load management |
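In Scrapy itself, the first two rows map onto built-in settings. A minimal sketch of a polite configuration follows. The setting names are Scrapy's own, while the specific values are illustrative starting points rather than recommendations. Any AI-driven adjustment, as in the table's third column, would sit on top of these.

```python
# settings.py: built-in politeness settings (values are illustrative)
ROBOTSTXT_OBEY = True                  # RobotsTxtMiddleware skips URLs disallowed by robots.txt

AUTOTHROTTLE_ENABLED = True            # adapt delays to the server's observed latency
AUTOTHROTTLE_START_DELAY = 5.0         # initial download delay, in seconds
AUTOTHROTTLE_MAX_DELAY = 60.0          # upper bound when the server responds slowly
AUTOTHROTTLE_TARGET_CONCURRENCY = 1.0  # average parallel requests per remote server

CONCURRENT_REQUESTS_PER_DOMAIN = 4     # hard cap on parallel requests per domain
DOWNLOAD_DELAY = 1.0                   # floor: AutoThrottle won't go below this delay
```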

Sticking to these rules ensures ethical scraping practices. At the same time, robust security measures are necessary to maintain data integrity.

Data Privacy and Security

Using MongoDB with SSL/TLS provides a secure foundation for storing data. To further safeguard information, encryption, role-based access controls, and AI-powered anonymization techniques protect sensitive data. These measures ensure that data is handled responsibly and securely.
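As one concrete piece of the anonymization step, the pipeline below pseudonymizes email addresses with a keyed hash (HMAC-SHA256) before items reach storage. Records stay joinable on the same person without exposing the raw address. This is a deterministic technique, not an AI one, and complements model-based anonymization. The class name, the `email` field, and the `PSEUDONYM_KEY` environment variable are assumptions; in production the key should come from a secrets manager.

```python
import hashlib
import hmac
import os
from typing import Optional


class PseudonymizeEmailPipeline:
    """Item pipeline that replaces the 'email' field with a keyed, non-reversible token."""

    def __init__(self, key: Optional[bytes] = None):
        # PSEUDONYM_KEY is a hypothetical environment variable holding the secret key.
        self.key = key or os.environ.get("PSEUDONYM_KEY", "").encode()
        if not self.key:
            raise ValueError("a non-empty pseudonymization key is required")

    def pseudonymize(self, value: str) -> str:
        # Normalize first so "Jane@Example.com " and "jane@example.com" map to the same token.
        normalized = value.strip().lower().encode()
        return hmac.new(self.key, normalized, hashlib.sha256).hexdigest()

    def process_item(self, item, spider):
        email = item.get("email")
        if email:
            item["email"] = self.pseudonymize(email)
        return item
```

A keyed hash is used instead of a plain SHA-256 because email addresses are easy to guess. Without the secret key, an attacker cannot rebuild the mapping by hashing a list of known addresses.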

It's not just about security, though. Efficiency must also be balanced with ethical considerations for effective AI-powered scraping.

Balancing Efficiency and Ethics

AI can make scraping more efficient while staying within ethical boundaries. Distributed systems help reduce the load on individual servers, ensuring smooth operations without causing disruptions.

| Strategy | Purpose | Implementation |
|---|---|---|
| Predictive Analysis | Improves request timing | AI forecasts traffic peaks |
| Load Distribution | Lightens server load | Multiple endpoints with AI-based routing |
| Content Filtering | Safeguards sensitive data | NLP tools classify content |

Future Trends in AI-Powered Web Scraping

New AI Tools for Data Extraction

AI models are now reaching levels of understanding that closely resemble human capabilities, especially when it comes to analyzing unstructured text. Advances in Natural Language Processing (NLP) are at the forefront of this progress. Generative AI tools like Gemini, Copilot, and Claude are making it possible to process web data using natural language commands.

Julius Černiauskas, CEO of Oxylabs, highlights the importance of Chrome DevTools Protocol (CDP) browser tools for more advanced scraping:

"These tools allow scraping with real-like browsers not easily detectable by the ever-improving anti-bot systems."

| AI Technology | Use Cases |
|---|---|
| NLP Models | Extracting context-aware data |
| Computer Vision | Analyzing multimodal content |
| Generative AI | Automating entire workflows |

These developments could push tools like Scrapy toward becoming smarter and more autonomous scraping solutions.

Scrapy's AI-Driven Evolution

Scrapy is already moving toward smarter automation and improved data handling thanks to AI integration. Juras Juršėnas, Chief Operations Officer at Oxylabs, shares his vision:

"A new generation of AI-assisted tools for developers will go mainstream. This will affect many industries, including web scraping."

AI-powered scrapers will soon predict when certain data points are likely to change, making resource allocation more efficient. These tools will also adapt automatically to website updates, reducing the need for constant manual adjustments.

Tackling Web Scraping Challenges

With regulatory bodies like the SEC and FTC proposing stricter rules, data collection is facing new challenges. Developers can address these hurdles by adopting innovative solutions:

| Challenge | Solution | Implementation |
|---|---|---|
| Anti-Bot Systems | AI-driven evasion | Dynamic browser fingerprinting |
| Data Privacy | Automated compliance | Real-time anonymization |
| Scaling Operations | Distributed architecture | AI-optimized load balancing |

These technologies are already enhancing applications like real-time price tracking and sentiment analysis. Interestingly, two-thirds of companies now rely on generative AI-based tools. To stay competitive, developers should prioritize ethical practices and implement strong security measures.

The fusion of AI and web scraping is reshaping how data is gathered and used, with more changes on the horizon.

Conclusion

Integrating AI with Scrapy has made web scraping smarter and more efficient, tackling modern data challenges head-on. Research from PwC highlights that basic AI-driven extraction methods can cut data processing time by 30-40%. These tools not only simplify workflows but also address significant hurdles in today’s web scraping tasks.

AI has brought notable improvements in areas like proxy management, parsing, and handling dynamic content. The benefits are clear:

- Automation: AI adapts to website changes, increasing efficiency by 67%.

- Data Quality: Better parsing accuracy minimizes unstructured data issues by 95%.

- Scalability: Efficient resource management supports over 100,000 concurrent requests.

Beyond technical advancements, AI-powered scraping tools have become critical for businesses aiming to stay ahead. With 35% of organizations already using AI and 85% viewing it as a strategic priority, these tools are reshaping how data is collected and utilized.

As AI tools evolve, they are becoming essential for smarter, more streamlined web scraping across industries. Organizations are focusing on areas like intelligent proxy management, advanced CAPTCHA solutions, and handling dynamic content effectively.

To fully leverage AI-powered Scrapy, businesses must strike a balance between innovation and ethics. Adhering to robots.txt policies and implementing strong security measures ensures responsible and compliant data collection practices.

FAQs

Can I use Selenium with Scrapy?

Yes, you can combine Selenium with Scrapy to scrape websites that rely heavily on JavaScript. This setup lets you handle dynamic content and interact with complex page elements. The scrapy-selenium package makes this process easier by enabling Scrapy spiders to render pages using Selenium.

Here’s a simple example of how to use Selenium with Scrapy:

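```python
import scrapy
from selenium import webdriver
from selenium.webdriver.common.by import By


class MySpider(scrapy.Spider):
    name = 'myspider'
    start_urls = ['https://example.com']  # placeholder; replace with your target page

    def parse(self, response):
        # Render the page in a real browser so its JavaScript runs.
        # Selenium calls block, so Scrapy's other requests wait meanwhile.
        driver = webdriver.Chrome()
        try:
            driver.implicitly_wait(10)  # wait up to 10 s for elements to appear
            driver.get(response.url)
            # Selenium 4 replaced find_element_by_class_name with find_element(By.CLASS_NAME, ...)
            data = driver.find_element(By.CLASS_NAME, 'dynamic-content').text
        finally:
            driver.quit()  # release the browser even if the lookup fails
        yield {'data': data}
```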

Things to keep in mind when using Selenium with Scrapy:

- Performance Trade-offs: Using a browser for rendering can slow down your scraping and increase resource usage.

- Best Use Cases: Ideal for websites that require JavaScript rendering or mimic complex user interactions.

- Advanced Applications: Selenium can be useful for feeding machine learning models with dynamic content that isn’t accessible through static scraping.

To get started, install the scrapy-selenium package and set up the necessary middleware. If JavaScript rendering isn't critical for your project, plain Scrapy requests will be much faster on their own; if it is, you might also explore other solutions, such as AI-powered tools, for faster and more efficient scraping.

While Selenium adds flexibility to Scrapy, always weigh its advantages against the potential performance costs. For some projects, an alternative approach might work better.