Machine learning has transformed data parsing, making it more efficient and adaptable for complex datasets like text, images, APIs, and documents. Here's what you'll learn:

Why Machine Learning for Parsing?

ML improves accuracy, handles ambiguity, and processes large datasets better than traditional methods.

Key Techniques:

- NLP: Extracts structured data from text (e.g., emails, social media).

- Computer Vision: Parses images for OCR and feature detection.

- API Parsing: Adapts to schema changes and handles anomalies automatically.

Tools to Use:

- TensorFlow/Keras: For deep learning-based parsing.

- Scikit-Learn: For preprocessing and feature engineering.

- InstantAPI.ai: AI-driven web scraping for dynamic websites.

Applications:

- Web scraping, document parsing, and automating workflows in industries like healthcare and finance.

Takeaway: Machine learning revolutionizes data parsing by handling diverse formats and improving efficiency. However, organizations must prioritize data privacy and compliance when implementing these systems.

Machine Learning Techniques for Data Parsing

Machine learning makes it possible to parse complex, unstructured data with precision. Below, we look at how specific ML techniques tackle different data formats.

NLP for Text Parsing

Natural Language Processing (NLP) models transform raw text into structured data by recognizing context and patterns. Here's how they handle different text sources:

| Text Source | Parsing Challenge | ML Solution |
|---|---|---|
| Legal Documents | Complex terminology | Named Entity Recognition |
| Social Media | Informal language | Sentiment Analysis |
| Technical Reports | Domain-specific jargon | Specialized NLP Models |
| Email Communications | Mixed formats | Text Classification |

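Because NLP models rely on surrounding context rather than fixed patterns, they can capture subtleties of language that rule-based parsers miss. Accuracy still depends on how closely the training data matches the documents being parsed.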
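To make the "Text Classification" row more concrete, here is a minimal sketch of a multinomial Naive Bayes classifier that routes short email texts into categories. It uses only the Python standard library. The class name, the training sentences, and the `billing` and `scheduling` labels are invented for illustration, and a production system would more likely use an established NLP library and far more data.

```python
import math
import re
from collections import Counter, defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphabetic word tokens."""
    return re.findall(r"[a-z']+", text.lower())


class NaiveBayesTextClassifier:
    """Multinomial Naive Bayes with add-one (Laplace) smoothing."""

    def fit(self, texts, labels):
        self.word_counts = defaultdict(Counter)  # label -> word frequencies
        self.doc_counts = Counter(labels)        # label -> number of documents
        self.vocab = set()
        for text, label in zip(texts, labels):
            tokens = tokenize(text)
            self.word_counts[label].update(tokens)
            self.vocab.update(tokens)
        return self

    def predict(self, text):
        tokens = tokenize(text)
        total_docs = sum(self.doc_counts.values())
        best_label, best_score = None, -math.inf
        for label, n_docs in self.doc_counts.items():
            counts = self.word_counts[label]
            denom = sum(counts.values()) + len(self.vocab)
            # Log prior plus the sum of smoothed log likelihoods.
            score = math.log(n_docs / total_docs)
            for token in tokens:
                score += math.log((counts[token] + 1) / denom)
            if score > best_score:
                best_label, best_score = label, score
        return best_label


# Toy training data, invented for illustration only.
train_texts = [
    "Your invoice is attached, payment is due in 30 days",
    "Reminder: invoice overdue, please send payment",
    "Can we move the meeting to Thursday afternoon",
    "Agenda for the project meeting next week",
]
train_labels = ["billing", "billing", "scheduling", "scheduling"]

clf = NaiveBayesTextClassifier().fit(train_texts, train_labels)
print(clf.predict("Please confirm the payment for this invoice"))  # billing
print(clf.predict("Is the meeting still on for next week"))        # scheduling
```

Even this small model picks the category from word statistics rather than hand-written rules, which is the property that lets NLP-based parsers cope with mixed or informal text.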

Image Parsing with Computer Vision

Convolutional Neural Networks (CNNs) have transformed how visual data is processed. They excel at tasks like:

- Feature Detection: Spotting objects, text, or patterns in images.

- Data Extraction: Converting visual elements into structured formats.

- Format Recognition: Handling different image types and varying quality levels.

Unlike traditional template-based OCR, CNN-based models can often handle more complex visuals, such as handwritten notes or intricate diagrams. This makes them well suited to tasks like digitizing documents or automating visual inspections.
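The feature detection step at the heart of a CNN is a small filter sliding across the image. The sketch below shows that operation in plain Python with a hand-written vertical-edge kernel (Prewitt-style). The image and kernel values are made up for illustration. In a real CNN the kernel weights are learned during training rather than written by hand.

```python
def convolve2d(image, kernel):
    """Slide `kernel` over `image` (no padding, stride 1) and return the response map.

    Like most deep learning libraries, this computes cross-correlation:
    the kernel is not flipped.
    """
    k_rows, k_cols = len(kernel), len(kernel[0])
    out_rows = len(image) - k_rows + 1
    out_cols = len(image[0]) - k_cols + 1
    output = []
    for r in range(out_rows):
        row = []
        for c in range(out_cols):
            total = 0
            for i in range(k_rows):
                for j in range(k_cols):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# A 5x6 grayscale "image": dark on the left (0), bright on the right (9).
image = [
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
    [0, 0, 0, 9, 9, 9],
]

# Hand-written vertical-edge kernel. A CNN would learn weights like these.
vertical_edge = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

feature_map = convolve2d(image, vertical_edge)
for row in feature_map:
    print(row)  # each row prints [0, 27, 27, 0]
```

The large values in the middle columns mark where the dark-to-bright edge sits. Stacking many learned filters like this is how a CNN builds up from edges to characters, table borders, and other structures in a document image.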

API Data Parsing with Machine Learning

Machine learning simplifies parsing data from APIs by adapting to schema changes, handling anomalies, and extracting relevant information. Key capabilities include:

1. Adaptive Schema Handling

ML models dynamically adjust to changes in API response structures, minimizing the need for manual updates.

2. Intelligent Data Extraction

By identifying patterns in formats like JSON or XML, ML makes it easier to extract data, even when the structure changes.

3. Error Handling

ML-powered systems can detect and correct anomalies in API responses, learning from past issues to improve future accuracy.

Together, these techniques help keep parsing reliable and efficient as data structures evolve.
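As a rough illustration of schema tolerance and anomaly detection, the sketch below uses simple stand-ins rather than trained models: string similarity from Python's `difflib` to map renamed keys onto a known schema, and a median-based outlier score to flag suspicious values. The canonical field names, the sample keys, and the thresholds are all assumptions chosen for the example. A learned matcher would replace the similarity step in a real ML-based system.

```python
import difflib
import statistics

# Canonical field names the downstream code expects (hypothetical schema).
CANONICAL_FIELDS = ["user_id", "email", "created_at", "amount"]


def normalize_record(record, cutoff=0.6):
    """Map each incoming key to the closest canonical field name.

    Keys with no sufficiently similar canonical name are returned
    separately so they can be reviewed rather than silently dropped.
    """
    normalized, unmapped = {}, {}
    for key, value in record.items():
        candidate = key.lower().replace("-", "_")
        match = difflib.get_close_matches(candidate, CANONICAL_FIELDS, n=1, cutoff=cutoff)
        if match:
            normalized[match[0]] = value
        else:
            unmapped[key] = value
    return normalized, unmapped


def flag_outliers(values, threshold=3.5):
    """Return indices whose modified z-score (median absolute deviation) exceeds `threshold`."""
    median = statistics.median(values)
    mad = statistics.median([abs(v - median) for v in values])
    if mad == 0:
        return []  # all values (nearly) identical, nothing to flag
    # 0.6745 rescales the MAD so the score is comparable to a standard z-score.
    return [i for i, v in enumerate(values) if 0.6745 * abs(v - median) / mad > threshold]


# An API response whose keys have drifted from the original schema.
response = {"userId": 7, "e-mail": "a@example.com", "createdAt": "2024-01-01",
            "Amount": 12.5, "debug": True}
normalized, unmapped = normalize_record(response)
print(normalized)  # keys mapped to user_id, email, created_at, amount
print(unmapped)    # {'debug': True}

amounts = [19.99, 21.50, 20.25, 18.75, 2050.00, 20.10]
print(flag_outliers(amounts))  # [4]
```

Note that two incoming keys that resemble the same canonical field would overwrite each other here, so a fuller version would need a conflict rule.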

Tools and Frameworks for Parsing with Machine Learning

Machine learning has made data parsing more efficient, thanks to advanced tools and frameworks. Below are some of the most effective options available today.

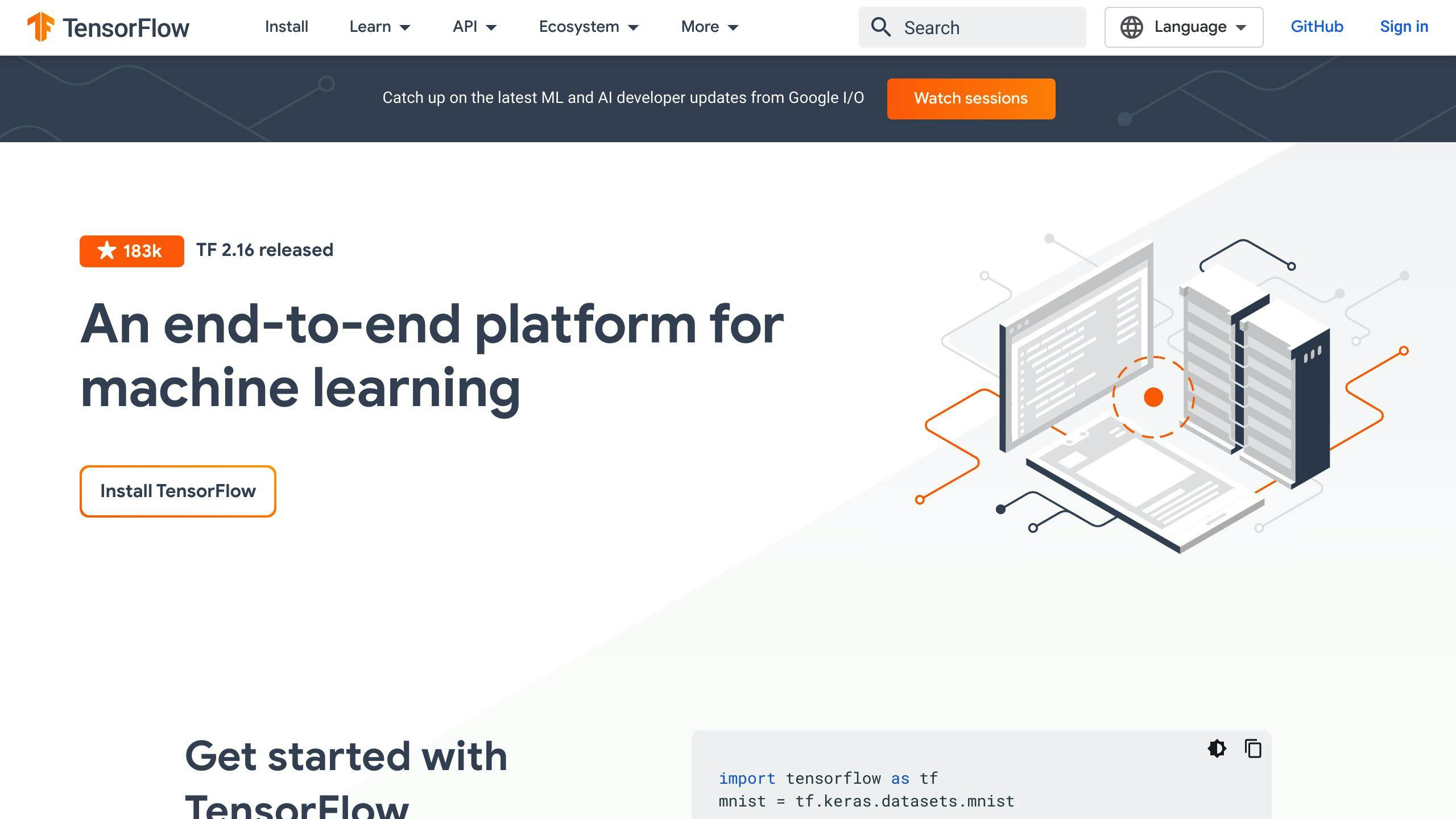

TensorFlow and Keras for Deep Learning

TensorFlow, along with its high-level API Keras, is widely used for building advanced parsing models. These tools offer:

| Feature | Application and Use Case |
|---|---|
| Custom Architectures | Build tailored models or use pre-trained ones for parsing complex documents. |
| Distributed Training | Handle massive datasets efficiently, ideal for large-scale enterprise needs. |
| Optimized Performance | Fine-tune models for seamless integration into production workflows. |

TensorFlow is particularly useful for creating custom classifiers that work well with unstructured data. Its deep learning capabilities make it a top choice for tasks requiring sophisticated parsing methods.

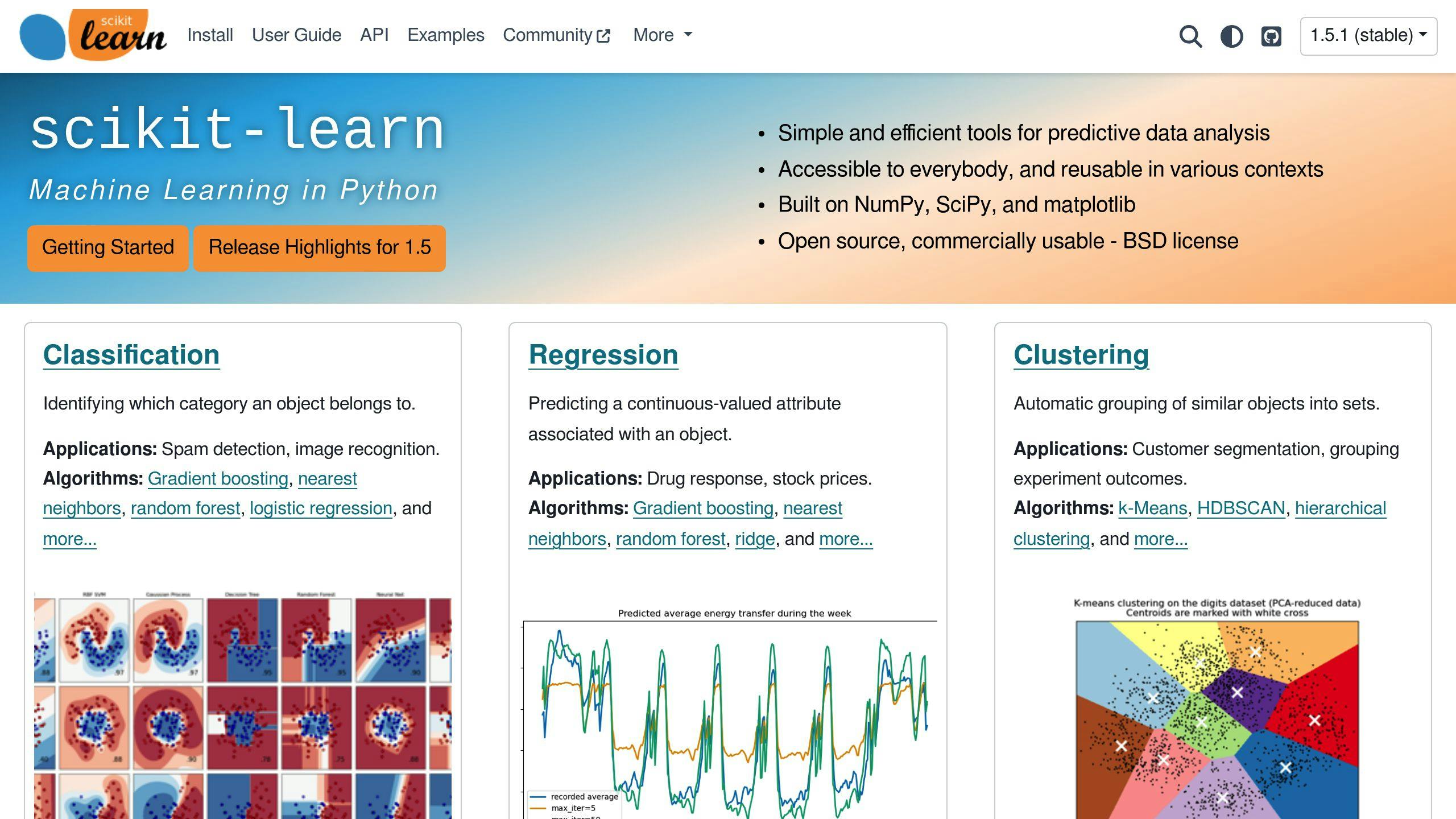

Scikit-Learn complements TensorFlow by simplifying preprocessing and feature engineering, both essential steps in parsing workflows.

Scikit-Learn for Data Preparation

Scikit-Learn is a go-to tool for preprocessing and feature extraction. It offers utilities for tasks like normalization, encoding, and feature selection. One standout feature is its pipeline functionality, which allows you to connect preprocessing steps directly with machine learning models. This creates a streamlined workflow for consistent parsing tasks.
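A minimal sketch of the pipeline idea, assuming scikit-learn is installed: a `TfidfVectorizer` turns raw text into features and feeds a `LogisticRegression` classifier, with both steps wrapped in a single `Pipeline` object. The documents and the `finance` and `medical` labels are toy data invented for this example.

```python
from sklearn.feature_extraction.text import TfidfVectorizer
from sklearn.linear_model import LogisticRegression
from sklearn.pipeline import Pipeline

# Toy documents and labels, invented for illustration only.
documents = [
    "Invoice number 1042, total amount due 350.00",
    "Payment received, invoice 1038, balance 0.00",
    "Patient reports mild headache, prescribed ibuprofen",
    "Follow-up visit: patient blood pressure normal",
]
labels = ["finance", "finance", "medical", "medical"]

# Each step is a (name, estimator) pair; fit() runs them in order.
pipeline = Pipeline([
    ("tfidf", TfidfVectorizer(lowercase=True)),
    ("classifier", LogisticRegression(max_iter=1000)),
])
pipeline.fit(documents, labels)

# The same preprocessing is applied automatically at prediction time.
predictions = pipeline.predict([
    "Invoice total amount due",
    "Patient prescribed ibuprofen, headache",
])
print(list(predictions))
```

Because the vectorizer and the model travel together, the text seen at prediction time is processed the same way as the training text, which avoids a common source of parsing inconsistencies. Other steps, such as scaling or feature selection, can be added to the list in the same way.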

By combining TensorFlow's deep learning power with Scikit-Learn's preprocessing tools, you can build a complete end-to-end parsing solution.

For web-specific parsing tasks, tools like InstantAPI.ai offer specialized solutions.

InstantAPI.ai: AI-Powered Web Scraping

InstantAPI.ai, created by Anthony Ziebell, applies AI to web scraping. The tool is designed to reduce the manual updates and maintenance that scrapers usually need, making it a practical choice for modern data parsing.

| Feature | Benefit |
|---|---|
| AI-Driven Extraction | Automatically adjusts to website changes, saving time and effort. |
| JavaScript Rendering | Accurately captures content from dynamic, JavaScript-heavy sites. |
| Customization Options | Provides tailored parsing solutions to meet specific project requirements. |

The platform offers unmetered scraping and dedicated support, with plans ranging from trial use to enterprise-level setups. It’s a solid choice for handling complex web scraping tasks efficiently.

Applications and Considerations for Data Parsing

Web Scraping with AI

AI-driven web scraping simplifies the process of gathering data from dynamic and complex websites, making it a valuable tool for tasks like market research and competitive analysis. Platforms like InstantAPI.ai showcase this capability with their smart parsing systems. These systems adjust automatically to website changes, eliminating the need for manual updates. With features like an AI engine and JavaScript rendering, they handle data extraction from even the most intricate websites, making them ideal for large-scale data projects.

But the potential of machine learning doesn't stop at web scraping. It also changes how we handle document parsing.

Document Parsing with OCR and AI

AI-powered OCR (Optical Character Recognition) technology takes document parsing a step further. By recognizing patterns and learning over time, these systems can process scanned documents and PDFs across various formats with high accuracy. They also typically need little manual configuration, saving time and effort.

This is particularly important in sectors like healthcare and finance, where precise document processing is critical for meeting compliance standards and ensuring smooth operations.

However, with these technological advancements come important ethical and privacy challenges.

Ethics and Data Privacy in Parsing

As machine learning becomes a go-to tool for parsing, organizations must navigate ethical practices and adhere to privacy regulations. Protecting data and ensuring security are non-negotiable.

Parsing systems need to comply with laws like GDPR, implement strong security protocols, and maintain clear consent management processes. For industries like healthcare and finance, where sensitive data is involved, additional safeguards are necessary. These sectors must meet strict regulatory requirements while reaping the benefits of machine learning-based parsing systems.

Conclusion

Key Points

Machine learning is changing how organizations handle data, making it easier to process even the most complex datasets. By combining TensorFlow's deep learning strengths with Scikit-Learn's preprocessing features, businesses can tackle a variety of data formats effectively. These tools are particularly well suited to unstructured data and are commonly applied to tasks like text classification and pattern recognition.

While adopting these solutions, organizations need to keep data privacy and security at the forefront. This guide has shown how machine learning not only solves traditional parsing challenges but also creates new opportunities for managing diverse datasets.

Steps for Implementation

1. Assessment and Planning

Start by analyzing your current parsing requirements. Identify the machine learning techniques that align with your data types and processing needs.

2. Tool Selection and Setup

Select tools that fit your specific use case, such as TensorFlow for natural language processing or computer vision tasks. Ensure these tools comply with your organization’s data security and privacy standards.

3. Model Development and Training

Begin with small datasets to test your models, using Scikit-Learn for preprocessing. Track performance metrics such as accuracy and precision to refine your approach before scaling.

4. Integration and Scaling

Incorporate your parsing solutions into existing workflows, including robust error handling and validation. Set up monitoring systems and schedule regular retraining to keep performance levels high.
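The metrics mentioned in step 3 are simple to compute by hand, which can be useful for a quick check before reaching for a library. The sketch below assumes a document-type classifier with two hypothetical labels, `invoice` and `receipt`. The true and predicted labels are made up for the example.

```python
def accuracy(y_true, y_pred):
    """Fraction of predictions that match the true label."""
    correct = sum(t == p for t, p in zip(y_true, y_pred))
    return correct / len(y_true)


def precision(y_true, y_pred, positive):
    """Of the items predicted as `positive`, the fraction that really are."""
    true_labels_of_predicted = [t for t, p in zip(y_true, y_pred) if p == positive]
    if not true_labels_of_predicted:
        return 0.0  # nothing was predicted as positive
    hits = sum(t == positive for t in true_labels_of_predicted)
    return hits / len(true_labels_of_predicted)


# Hypothetical evaluation set: what each document was vs. what the parser said.
y_true = ["invoice", "invoice", "receipt", "invoice", "receipt", "receipt", "invoice", "receipt"]
y_pred = ["invoice", "receipt", "receipt", "invoice", "invoice", "receipt", "invoice", "invoice"]

print(accuracy(y_true, y_pred))              # 0.625
print(precision(y_true, y_pred, "invoice"))  # 0.6
```

Here precision is lower than accuracy because the parser labels too many receipts as invoices. Tracking both numbers makes that kind of bias visible before the system is scaled up.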

FAQs

Which technique is used in AI to handle large amounts of unstructured data?

Machine learning plays a key role in managing unstructured data. It utilizes several methods, including:

- Natural Language Processing (NLP): Breaks down unstructured text for tasks like sentiment analysis and entity recognition.

- Computer Vision: Analyzes and interprets visual data, such as images or videos.

- Deep Learning: Detects patterns and extracts insights from diverse and complex data formats.

These methods are widely applied across industries. For instance, healthcare uses them to process medical records, finance employs them to automate document workflows, and legal services rely on them for contract analysis. When adopting machine learning for parsing, organizations need to assess their data's complexity, decide between real-time and batch processing, and ensure smooth integration with existing systems.

Often, combining techniques yields the best results. For example, processing scanned documents might involve using computer vision for OCR (Optical Character Recognition) alongside NLP for analyzing the extracted text. This combined approach helps organizations manage complex data efficiently while maintaining accuracy.