Want cleaner, faster, and more reliable data for your projects? APIs can transform how you collect and manage data compared to traditional web scraping. Here's why:

- Structured Data: APIs deliver data in ready-to-use formats like JSON or XML, eliminating messy HTML parsing.

- Reliability: APIs are less likely to break when websites update, thanks to consistent endpoints.

- Policy Compliance: APIs come with clear usage guidelines and authentication, reducing legal risks.

- Scalability: APIs are built for high-volume programmatic access (within the provider's rate limits), which suits large-scale data collection.

Quick Comparison

| Feature | Traditional Scraping | API Integration |
|---|---|---|
| Data Structure | Unstructured HTML | Clean JSON/XML |
| Reliability | Breaks with HTML changes | Stable endpoints |
| Legal Considerations | Risky and unclear | Clear guidelines |

By using APIs like the Twitter API, OpenWeather API, or InstantAPI.ai, you can streamline your workflows, reduce maintenance, and simplify compliance. Whether you're gathering social media data, weather updates, or automating scraping, APIs make the process faster and easier to maintain.

Keep reading to learn how to integrate APIs securely, manage rate limits, and automate your data collection with Python.

Benefits of APIs in Web Scraping

Clean, Structured Data Access

APIs simplify data gathering by delivering it in ready-to-use, organized formats like JSON or XML. This eliminates the need to parse raw HTML, which saves time and reduces the chance of errors. Because the data arrives already structured, it is easier to integrate into your projects, and its quality stays consistent from one request to the next.
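To make the contrast concrete, here is a minimal sketch of reading fields from a JSON payload with Python's built-in json module. The payload is a made-up example, not the response format of any particular API.

```python
import json

# A made-up JSON response body, standing in for what an API endpoint might return
raw_body = '''
{
  "data": [
    {"id": "1", "text": "First post", "metrics": {"likes": 12}},
    {"id": "2", "text": "Second post", "metrics": {"likes": 7}}
  ]
}
'''

payload = json.loads(raw_body)  # str -> nested dicts and lists

# Fields are addressed by key: no HTML selectors or tag traversal involved
rows = [(item["id"], item["metrics"]["likes"]) for item in payload["data"]]
print(rows)  # [('1', 12), ('2', 7)]
```

If the provider renamed a CSS class on its website, this code would not care; it only depends on the documented keys of the response.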

Easier Scaling and Performance

When it comes to large-scale data collection, APIs shine. The provider's servers handle querying, filtering, and serializing the data, and features like pagination let you request records in manageable batches, so developers can focus on analyzing the data instead of working out how to extract it. Within the provider's rate limits, APIs are designed for high-volume programmatic access, which makes them a reliable choice for scaling up operations.

"APIs provide direct access to specific data subsets via dedicated endpoints, enabling frequent data syncs and reliable performance at scale."

Policy Compliance

APIs help with the legal and ethical side of data collection. They come with clear usage guidelines, rate limits that keep clients from overloading servers, and authentication through API keys or tokens. Together, these provide a framework for compliant, secure data access, which is especially important for enterprise-level projects. By using APIs, organizations can maintain professional relationships with data providers and adopt a more sustainable approach to data collection.

With these advantages in mind, let’s dive into some popular APIs and how they’re used in web scraping.


Common APIs for Data Collection

APIs serve diverse data needs, from gathering social media insights to weather forecasting and automated scraping. Here's a look at some key APIs that can boost your web scraping efforts.

Twitter API for Social Media Data

The Twitter API allows access to tweets, user profiles, hashtags, and engagement metrics through structured endpoints. Using OAuth for authentication and tools like Tweepy, developers can efficiently interact with these endpoints. This makes it a powerful tool for analyzing social media trends and audience behaviors.
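As a rough sketch of what an authenticated request looks like, the snippet below builds (but does not send) a request to the v2 recent-search endpoint using only the standard library. It assumes app-only authentication with a bearer token from the developer portal; the token value, the query, and the helper name build_recent_search_request are placeholders, and which endpoints you can call depends on your access tier.

```python
from urllib.parse import urlencode
from urllib.request import Request

SEARCH_URL = "https://api.twitter.com/2/tweets/search/recent"


def build_recent_search_request(bearer_token, query, max_results=10):
    """Build a GET request for the v2 recent-search endpoint (not sent here)."""
    params = {
        "query": query,
        "max_results": max_results,  # the endpoint accepts 10-100 results per page
        "tweet.fields": "created_at,public_metrics",
    }
    return Request(
        f"{SEARCH_URL}?{urlencode(params)}",
        headers={"Authorization": f"Bearer {bearer_token}"},  # OAuth 2.0 app-only auth
    )


req = build_recent_search_request("YOUR_BEARER_TOKEN", "python lang:en")
print(req.full_url)
```

Passing the request to urllib.request.urlopen (or using Tweepy, which wraps the same endpoint) would actually send it.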

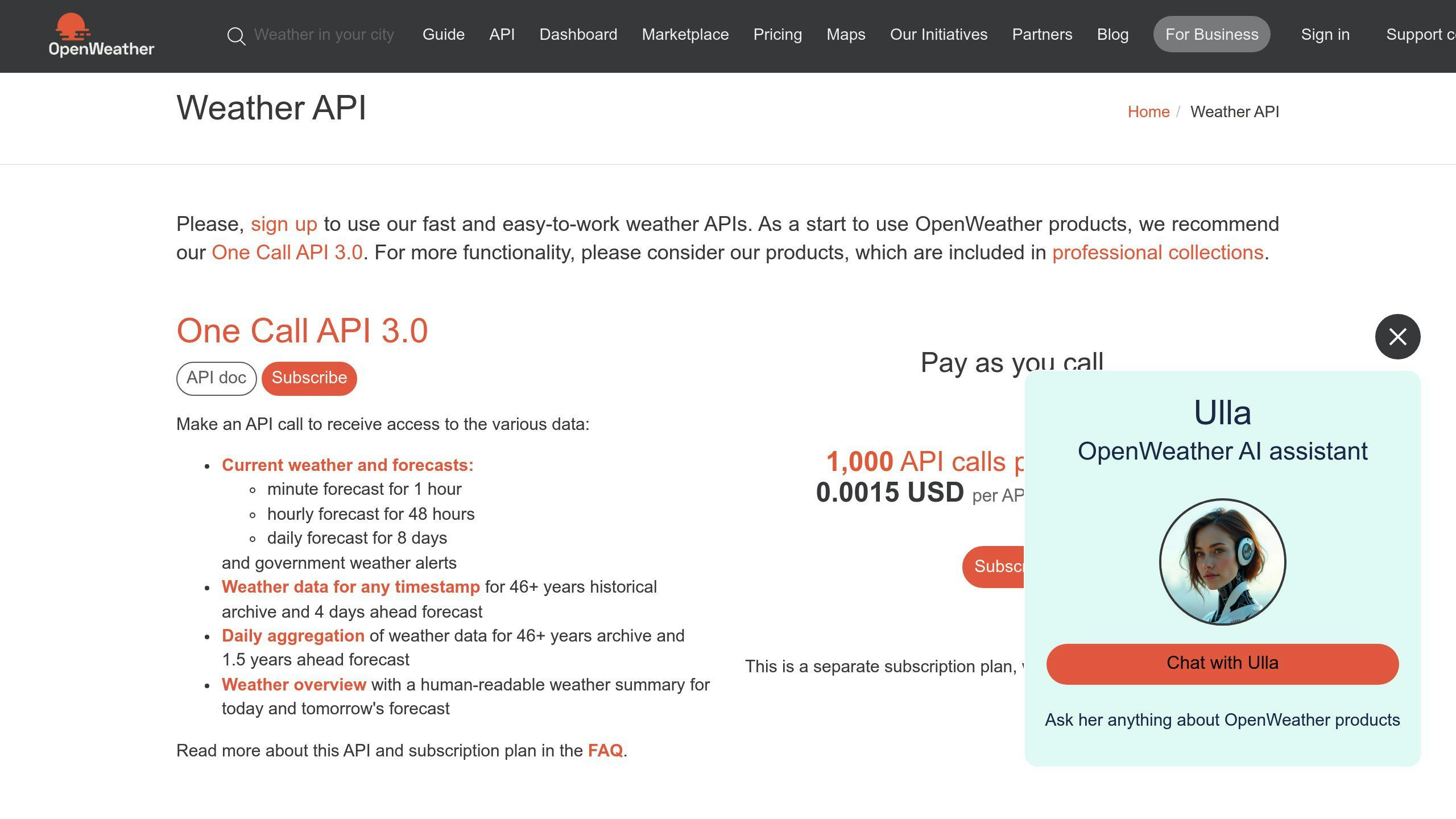

OpenWeather API for Weather Data

The OpenWeather API delivers detailed weather information collected from thousands of weather stations worldwide. It offers flexible pricing plans, ranging from free options for smaller projects to enterprise-level solutions for handling large-scale data needs.
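Here is a minimal sketch of building a current-weather request and reading the fields most projects need. It assumes the /data/2.5/weather endpoint with a city-name query and metric units; the API key is a placeholder, the helper names are illustrative, and the sample body is abbreviated and made up rather than captured from the live API.

```python
import json
from urllib.parse import urlencode

BASE_URL = "https://api.openweathermap.org/data/2.5/weather"


def current_weather_url(city, api_key, units="metric"):
    """Build the request URL; the `appid` parameter carries the API key."""
    return f"{BASE_URL}?{urlencode({'q': city, 'appid': api_key, 'units': units})}"


def summarize(response_body):
    """Pull city, temperature, and description out of a current-weather JSON body."""
    data = json.loads(response_body)
    return {
        "city": data["name"],
        "temp": data["main"]["temp"],  # degrees Celsius when units=metric
        "description": data["weather"][0]["description"],
    }


# Abbreviated, made-up body in the shape the endpoint returns
sample = '{"name": "London", "main": {"temp": 14.2}, "weather": [{"description": "light rain"}]}'
print(current_weather_url("London", "YOUR_API_KEY"))
print(summarize(sample))  # {'city': 'London', 'temp': 14.2, 'description': 'light rain'}
```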

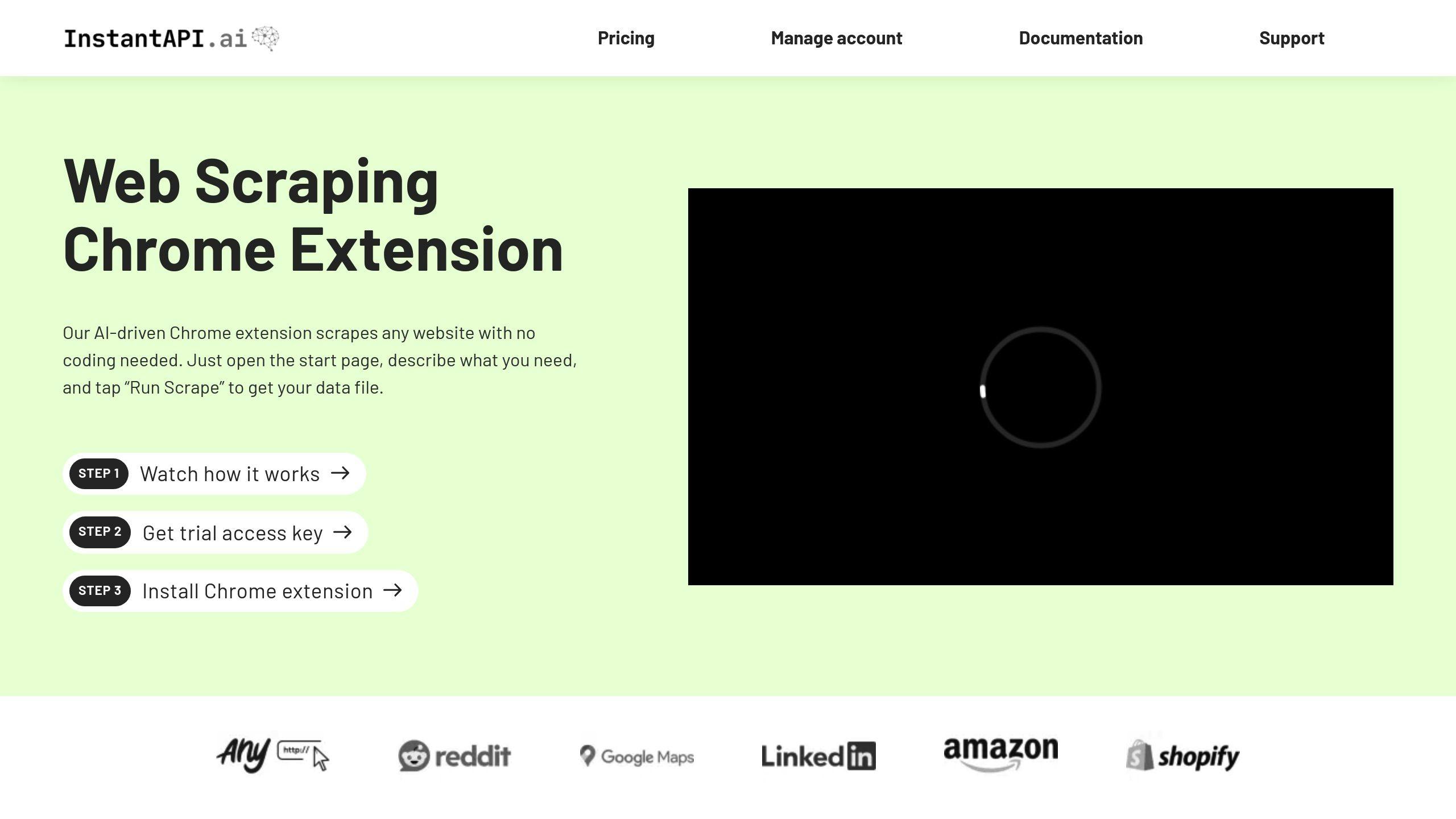

InstantAPI.ai for Automated Scraping

InstantAPI.ai combines artificial intelligence with an easy-to-use interface for scraping tasks. It features a Chrome extension for no-code scraping and an enterprise API with advanced capabilities. What sets it apart is its AI-driven approach, which adjusts to website changes automatically, ensuring consistent data collection without manual intervention.

This automated system is especially useful for long-term scraping projects, as it maintains stable performance even when source websites are frequently updated. It also reduces the need for ongoing maintenance while ensuring reliable data quality.

With these APIs in mind, the next step is learning how to integrate them seamlessly into your web scraping workflows.


API Integration Guidelines

Integrating APIs into your workflows can greatly enhance web scraping capabilities. However, doing this securely and efficiently is key to success.

API Security Setup

Keeping API credentials safe is a top priority. Avoid embedding API keys directly in your code. Instead, use a proxy server or environment variables to separate sensitive data from client-side code.

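```python
# Example of secure API key handling using environment variables
import os

from dotenv import load_dotenv  # provided by the python-dotenv package

load_dotenv()  # copy key=value pairs from a local .env file into os.environ

api_key = os.getenv('API_KEY')
api_secret = os.getenv('API_SECRET')

# Fail fast with a clear message instead of sending unauthenticated requests
if not api_key or not api_secret:
    raise RuntimeError("API_KEY and API_SECRET must be set in the environment or .env file")
```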

For added security, secret managers such as AWS Secrets Manager or HashiCorp Vault offer features like key rotation, encryption, and access logging, making API integration safer and more reliable.

Once your credentials are protected, the next step is managing API usage effectively.

Rate Limit Management

APIs often enforce rate limits to regulate usage. To handle these limits, strategies like exponential backoff can help prevent disruptions and optimize resource use.

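```python
import time

import requests
from requests.exceptions import RequestException


def make_api_request(url, max_retries=3, timeout=10):
    """GET a JSON endpoint, retrying on HTTP 429 and on transient request errors."""
    for attempt in range(max_retries):
        try:
            response = requests.get(url, timeout=timeout)
            if response.status_code == 429:  # Too Many Requests
                # Honor the server's Retry-After header (in seconds); default to 60s
                try:
                    retry_after = int(response.headers.get('Retry-After', 60))
                except ValueError:  # Retry-After may also be an HTTP date
                    retry_after = 60
                time.sleep(retry_after)
                continue
            response.raise_for_status()
            return response.json()
        except RequestException:
            if attempt == max_retries - 1:
                raise
            time.sleep(2 ** attempt)  # exponential backoff: 1s, 2s, 4s, ...
    # Every attempt was rate limited: fail loudly instead of returning None
    raise RuntimeError(f"Rate limited on all {max_retries} attempts: {url}")
```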
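Exponential backoff reacts after the server has already rejected a request. A complementary, proactive approach is to pace requests on the client so you rarely hit the limit at all. The sketch below is a simple sliding-window limiter; RateLimiter and its parameters are hypothetical names, not part of requests or any provider SDK, and the clock and sleep functions are injectable so the behavior can be checked without real waiting.

```python
import time
from collections import deque


class RateLimiter:
    """Hypothetical client-side sliding-window limiter: at most max_calls per period seconds."""

    def __init__(self, max_calls, period, clock=time.monotonic, sleep=time.sleep):
        self.max_calls = max_calls
        self.period = period
        self.clock = clock    # injectable so tests can use a fake clock
        self.sleep = sleep
        self.calls = deque()  # timestamps of calls still inside the window

    def wait(self):
        """Block until one more call fits in the window, then record it."""
        now = self.clock()
        # Forget calls that have aged out of the window
        while self.calls and now - self.calls[0] >= self.period:
            self.calls.popleft()
        if len(self.calls) >= self.max_calls:
            # Sleep until the oldest recorded call leaves the window, freeing one slot
            self.sleep(self.period - (now - self.calls[0]))
            now = self.clock()
            self.calls.popleft()
        self.calls.append(now)
```

Call limiter.wait() immediately before each request, for example with limiter = RateLimiter(max_calls=60, period=60) for a 60-requests-per-minute plan, and keep the backoff logic as a safety net for the 429s that still slip through.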

With rate limits managed, you can focus on processing the retrieved data efficiently.

Data Processing Methods

Transforming API data into structured formats like DataFrames makes it easier to analyze and integrate. Once the JSON body has been parsed into Python objects (for example with response.json() or the json module), pandas can flatten the records into a table.

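```python
import pandas as pd


def process_api_data(api_response, output_path='processed_data.csv'):
    """Flatten the 'data' list of a parsed JSON response into a DataFrame and save it as CSV."""
    if not isinstance(api_response, dict) or 'data' not in api_response:
        raise ValueError("Invalid API response format: expected a dict with a 'data' key")

    # json_normalize turns nested objects into dotted column names (e.g. 'user.name')
    df = pd.json_normalize(api_response['data'])
    df.to_csv(output_path, index=False)
    return df
```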

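For larger datasets, consider caching responses you request repeatedly and fetching independent pages in parallel. For the processing step itself, libraries like Dask can split pandas-style operations across CPU cores (or a cluster) and handle data larger than memory.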
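For the network-bound side of parallel processing (waiting on many API responses), a thread pool from the standard library is often enough. This sketch assumes a fetch callable such as the make_api_request function above; a stub and a hypothetical api.example.com URL are used so the example runs offline. Keep max_workers low enough that the combined request rate stays inside the provider's limits.

```python
from concurrent.futures import ThreadPoolExecutor


def fetch_all(urls, fetch, max_workers=4):
    """Fetch many URLs concurrently; results come back in the same order as `urls`."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        # pool.map runs fetch(url) on worker threads and preserves input order
        return list(pool.map(fetch, urls))


# Offline stand-in for a real HTTP call such as make_api_request(url)
def fake_fetch(url):
    return {"url": url, "status": "ok"}


pages = [f"https://api.example.com/items?page={n}" for n in range(1, 4)]
results = fetch_all(pages, fake_fetch)
print(len(results))  # 3
```

Threads suit this job because each worker spends most of its time waiting on the network; CPU-heavy transformations are where Dask or multiprocessing pay off.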

"Rate limiting helps control costs. This means you can make a judicious distribution of your resources among your users." - Kinsta

API Web Scraping Tutorial

This guide walks you through using APIs for web scraping, making data collection easier while staying compliant with regulations.

Tools and Setup

Python is a great choice for API integration, especially with libraries like requests and pandas. Start by installing the necessary packages:

```bash
pip install requests pandas python-dotenv tweepy
```

Organize your project into separate files for better structure:

- .env: Store your API credentials securely.

- config.py: Manage configuration settings.

- api_client.py: Handle API logic.

- data_process.py: Process and analyze the data.

Once your setup is ready, you'll need API accounts to access the data.

API Account Setup

For example, to use Twitter's API, create a developer account, set up a project, and generate your API credentials (API Key and Secret, Access Token and Access Token Secret). Save these details in a .env file, and keep that file out of version control:

```text
TWITTER_API_KEY=your_api_key
TWITTER_API_SECRET=your_api_secret
TWITTER_ACCESS_TOKEN=your_access_token
TWITTER_ACCESS_SECRET=your_access_token_secret
```
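One way to fill in config.py from the project layout is to read these variables once into a small settings object and fail fast if any are missing. This is a hypothetical sketch: TwitterCredentials and load_twitter_credentials are illustrative names, and it assumes the variables are already in the environment (for example after calling python-dotenv's load_dotenv()).

```python
import os
from collections.abc import Mapping
from dataclasses import dataclass


@dataclass(frozen=True)
class TwitterCredentials:
    """Hypothetical container for the four values stored in .env."""
    api_key: str
    api_secret: str
    access_token: str
    access_secret: str


def load_twitter_credentials(env: Mapping = os.environ) -> TwitterCredentials:
    """Read the TWITTER_* variables, raising one error that lists every missing name."""
    names = ["TWITTER_API_KEY", "TWITTER_API_SECRET",
             "TWITTER_ACCESS_TOKEN", "TWITTER_ACCESS_SECRET"]
    missing = [name for name in names if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing credentials: {', '.join(missing)}")
    return TwitterCredentials(*(env[name] for name in names))
```

Accepting an explicit mapping instead of always reading os.environ makes the loader easy to test, and the frozen dataclass prevents credentials from being changed accidentally at runtime.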

With your credentials in place, you can start automating data collection.

Automation Scripts

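Automation makes data collection efficient. Here's a simple script for fetching data from the Twitter API. It uses Tweepy v4's wrapper for the v1.1 search endpoint; depending on your access tier, you may need the v2 endpoints (via tweepy.Client) instead: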

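```python
import os

import pandas as pd
import tweepy
from dotenv import load_dotenv

load_dotenv()  # load the TWITTER_* credentials from the .env file


def fetch_twitter_data(query, count=100):
    """Search tweets matching `query` and return up to `count` of them as a DataFrame."""
    # OAuth1UserHandler is Tweepy 4.5+; older releases call it OAuthHandler
    auth = tweepy.OAuth1UserHandler(
        os.environ['TWITTER_API_KEY'],
        os.environ['TWITTER_API_SECRET'],
        os.environ['TWITTER_ACCESS_TOKEN'],
        os.environ['TWITTER_ACCESS_SECRET'],
    )
    # wait_on_rate_limit makes Tweepy sleep until the rate-limit window resets
    api = tweepy.API(auth, wait_on_rate_limit=True)

    tweets = []
    try:
        # Cursor handles pagination; .items(count) stops after `count` tweets
        for tweet in tweepy.Cursor(api.search_tweets, q=query).items(count):
            tweets.append({
                'created_at': tweet.created_at,
                'text': tweet.text,
                'user': tweet.user.screen_name,
                'retweets': tweet.retweet_count,
            })
        return pd.DataFrame(tweets)
    except tweepy.TweepyException as e:  # TweepError was removed in Tweepy v4
        print(f"Error: {e}")
        return None
```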

For projects involving multiple APIs, you can use a configuration dictionary to manage settings like base URLs, rate limits, and retry delays:

```python
# Example values only: check your plan's documented limits before relying on them
api_configs = {
    'twitter': {
        'base_url': 'https://api.twitter.com/2',
        'rate_limit': 300,  # requests per 15-minute window
        'retry_delay': 60,  # seconds
    },
    'weather': {
        'base_url': 'https://api.openweathermap.org/data/3.0',
        'rate_limit': 60,  # requests per minute
        'retry_delay': 30,  # seconds
    },
}
```
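Because the two limits above are expressed over different windows (15 minutes versus one minute), it helps to normalize them into a minimum spacing between requests. The sketch below assumes a hypothetical window_seconds key added to each entry; the numbers mirror the comments in the dictionary above.

```python
def min_interval(rate_limit, window_seconds):
    """Seconds between requests needed to spread `rate_limit` calls evenly over the window."""
    return window_seconds / rate_limit


# Hypothetical config entries with an explicit window length added
limits = {
    "twitter": {"rate_limit": 300, "window_seconds": 15 * 60},
    "weather": {"rate_limit": 60, "window_seconds": 60},
}

for name, cfg in limits.items():
    print(name, min_interval(cfg["rate_limit"], cfg["window_seconds"]))
# twitter 3.0
# weather 1.0
```

So 300 requests per 15 minutes works out to one request every 900 / 300 = 3 seconds, while 60 per minute allows one per second.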

For advanced scraping, tools like InstantAPI.ai can simplify the process with features like automated maintenance and premium proxies for $9/month via a Chrome extension.

To handle rate limits in your scripts, you can implement a simple mechanism:

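```python
import time


def handle_rate_limits(response, config):
    """Sleep and return True if the response was rate limited (HTTP 429), else False."""
    if response.status_code == 429:
        # Prefer the server's Retry-After hint; fall back to the per-API retry_delay
        retry_after = int(response.headers.get('Retry-After',
                                                config['retry_delay']))
        time.sleep(retry_after)
        return True  # caller should retry the request
    return False
```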

This keeps your scripts running under strict API rate limits: a 429 response triggers a pause and tells the caller to retry, instead of ending the run with an error.

Conclusion

Key Points

Using APIs in web scraping projects can make data collection more efficient and reliable. APIs offer a structured way to access data, making it easier to scale and stay compliant with rules and regulations. Compared to traditional web scraping, APIs are typically more dependable, providing consistent access patterns and formats while reducing the need for constant upkeep.

Because APIs deliver clean, structured data through dedicated endpoints, they eliminate many of the headaches of traditional scraping, such as dealing with HTML parsing or adapting to changes in website layouts. This reliability ensures smoother data collection, even when websites update or evolve.

Here’s how you can incorporate API-based data collection into your projects effectively.

Next Steps

To get the most out of API-based data collection, keep these steps in mind:

- Audit your data sources: Look for APIs, like Twitter's, that provide structured data access. This removes the need for HTML parsing and simplifies the process.

- Prioritize critical data: Focus on APIs for essential, high-volume data needs. For instance, the OpenWeather API is perfect for collecting large-scale weather data.

- Handle errors and rate limits: Implement retry systems and manage rate limits to ensure smooth operation, as discussed earlier.

- Secure your API usage: Protect your credentials by using environment variables and proper authentication methods.

- Stay updated: Regularly check API documentation for changes and adjust your implementation to keep things running smoothly.

FAQs

How to use an API for web scraping?

Using an API for web scraping involves a few key steps:

- Set up your HTTP client: Configure it with the API endpoint, headers, and any required authentication details. Then, send requests using methods like GET or POST.

- Process the response: APIs usually return data in formats like JSON or XML. You’ll need to parse this data and organize it into the structure you need.

For example, with the Twitter API you can make authenticated requests to endpoints like /2/tweets/search/recent to gather social media data. The API returns structured JSON, which simplifies processing and keeps your collection within the platform's rules.
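The two steps above map onto a few lines of standard-library Python. This is a minimal sketch: get_json is an illustrative name, the endpoint and token in the usage line are placeholders, and real APIs differ in how they expect credentials (a header, a query parameter such as OpenWeather's appid, or an OAuth signature).

```python
import json
from urllib.request import Request, urlopen


def get_json(url, token=None, timeout=10):
    """Step 1: send an (optionally) authenticated GET. Step 2: parse the JSON body."""
    headers = {"Accept": "application/json"}
    if token:
        headers["Authorization"] = f"Bearer {token}"
    with urlopen(Request(url, headers=headers), timeout=timeout) as response:
        # The body arrives as bytes; decode with the declared charset, else UTF-8
        charset = response.headers.get_content_charset() or "utf-8"
        return json.loads(response.read().decode(charset))


# Usage (placeholder endpoint and token):
# data = get_json("https://api.example.com/v1/items", token="YOUR_TOKEN")
```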

What is rate limiting in web scraping?

Rate limiting restricts how many requests you can make to an API within a specific timeframe. For example:

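- The Twitter API (basic tier) has allowed up to 500,000 tweets per month; these caps change often, so check the current developer documentation before planning around them.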

- OpenWeather API offers 60 calls per minute for free accounts.

- InstantAPI.ai provides custom limits based on your subscription plan.

Does using an API count as web scraping?

No, using an API is different from web scraping. Web scraping involves extracting data from raw HTML, while APIs deliver structured data through defined endpoints. This makes APIs more reliable and easier to work with. According to MuleSoft's 2023 report, 72% of companies favor APIs over web scraping for better data quality and dependability.

"APIs drive digital transformation, enabling new revenue streams and better customer experiences." - Ross Mason, Co-Founder and VP of Product Strategy at MuleSoft

Understanding these differences can help you decide how to integrate APIs into your data collection strategies.