Data extraction is the first step in turning raw information into actionable insights, helping businesses make smarter decisions. Here's why it matters:

- Saves Time: Tools like Octoparse and Scrapy automate data collection, turning weeks of manual work into minutes.

- Boosts Profits: Companies using data-driven strategies report an average 8% increase in profits and a 10% reduction in costs (BARC research).

- Tracks Trends and Competitors: Stay ahead by spotting industry trends and monitoring competitors.

- Improves Workflow: AI-powered tools like InstantAPI.ai handle complex data and adapt to website changes, saving time and reducing errors.

Quick Comparison of AI Data Extraction Tools:

| Tool | Ease of Use | Scalability | Dynamic Content Handling | AI Integration |
|---|---|---|---|---|
| InstantAPI.ai | High | High | Excellent | Built-in |
| Selenium | Medium | Medium | Good | Via integration |
| Scrapy | Low | High | Moderate | Via integration |
| BeautifulSoup | High | Low | Poor | None |

Takeaway: Data extraction is essential for market research, automation, and staying competitive. Use ethical practices, follow laws like GDPR, and start with AI tools to simplify processes and make better decisions.

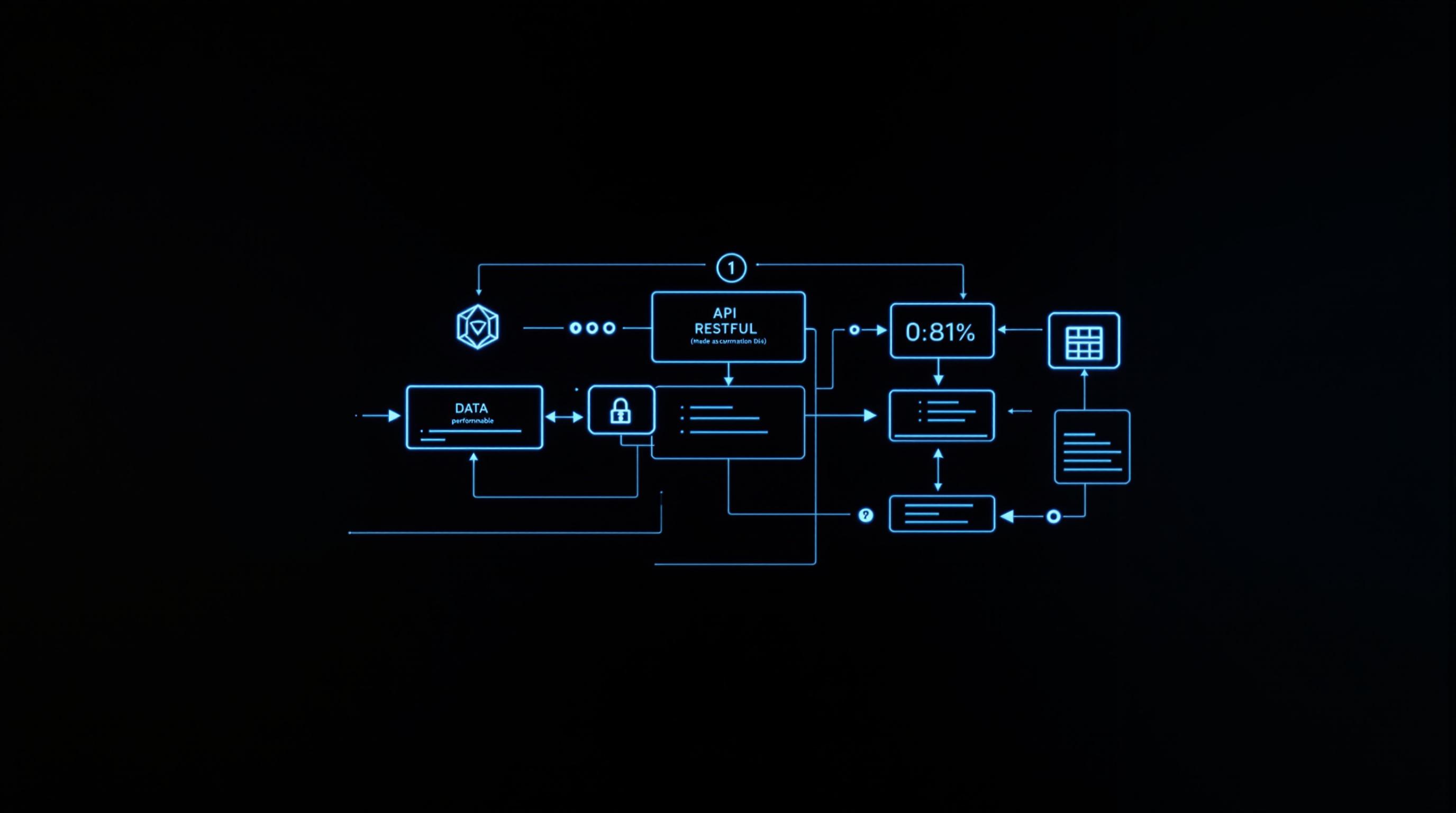

Related video from YouTube

Understanding the Basics of Data Extraction

Data extraction powers every data-driven strategy. Let's look at how it works today and what challenges companies need to overcome.

Common Challenges in Data Extraction

Even with today's tools, businesses run into several key issues when pulling data:

Messy Data Everywhere: Web pages, emails, social posts, and PDFs don't follow neat patterns - they're all over the place. But tools are catching up: Amazon Comprehend helps make sense of text, while WebHarvy can track changing info like flight prices in real time.

Playing by the Rules: With GDPR and CCPA watching closely, companies must handle data carefully or face big fines. Take ZoomInfo - they won't touch data without getting clear permission first.

Getting Clean Data: Raw data often comes with mistakes or missing pieces. That's where cleanup tools like Talend Data Preparation step in, helping companies fix errors before analysis begins.
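To give a rough idea of what a cleanup step actually does, here's a minimal Python sketch (not how Talend works internally). It assumes scraped records are dictionaries with hypothetical `name` and `price` fields: it trims whitespace, turns price strings like `" $1,299.00 "` into numbers, and drops rows that have no name.

```python
import re
from typing import Optional

def parse_price(raw: Optional[str]) -> Optional[float]:
    """Turn strings like ' $1,299.00 ' into 1299.0; return None if no number is found."""
    if raw is None:
        return None
    match = re.search(r"\d[\d,]*(?:\.\d+)?", raw)
    if match is None:
        return None
    return float(match.group().replace(",", ""))

def clean_records(records: list[dict]) -> list[dict]:
    """Trim whitespace, normalize prices, and drop rows that have no product name."""
    cleaned = []
    for rec in records:
        name = (rec.get("name") or "").strip()
        if not name:
            continue  # a nameless row can't be matched to anything downstream
        cleaned.append({"name": name, "price": parse_price(rec.get("price"))})
    return cleaned

print(clean_records([
    {"name": " Widget ", "price": " $1,299.00 "},
    {"name": "", "price": "$5"},
    {"name": "Gadget", "price": "N/A"},
]))
# [{'name': 'Widget', 'price': 1299.0}, {'name': 'Gadget', 'price': None}]
```

Keeping an unparseable price as `None` instead of guessing a value means a later validation step can flag it rather than letting a wrong number slip into analysis.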

Comparing Old and New Methods

The old ways of getting data? They still work, but AI has changed the game completely.

Python tools like Scrapy and Beautiful Soup haven't gone away - they're now often paired with AI models that make them smarter. Modern tools like ParseHub and Diffbot can handle the tough stuff: JavaScript-heavy pages, blog posts, product listings - you name it.

Here's a real example: Zillow pulls data from thousands of places at once - property listings, tax files, market stats. They crunch all this data to tell you what homes are worth and where the market's heading. Try doing that with old-school methods!

What makes today's AI tools better:

- They handle complex data sources on their own

- You get insights right away, not next week

- Fewer mistakes slip through

- They adapt when websites change

- They save both money and time

Think of it like upgrading from a manual coffee grinder to a smart coffee maker. Both get the job done, but one does it faster, better, and with less effort on your part.

AI-Powered Web Scraping and Its Impact

AI-powered web scraping is changing how businesses get and use data from the web. It helps companies pull data faster and more accurately while dealing with common scraping headaches.

AI's Role in Web Scraping

Getting data from websites used to be tough - especially from sites with content that changes often or those that try to block scrapers. AI is fixing these problems. Here's what makes it different:

AI can spot patterns automatically. When you're trying to pull product info or customer reviews, AI figures out what's important without someone having to program every detail. This works even on tricky sites where content loads through JavaScript or updates frequently.

Think of it like having a smart assistant that learns and adjusts on its own. When websites change their layout, AI tools can often keep working without manual fixes. They're also better at coping with obstacles like CAPTCHAs and IP restrictions.

Take InstantAPI.ai - it skips the complicated setup other tools need. Instead of writing complex rules to find data, the AI finds it automatically.

Top Tools for AI-Based Data Extraction

| Tool | Ease of Use | Scalability | Dynamic Content Handling | AI Integration |
|---|---|---|---|---|
| InstantAPI.ai | High | High | Excellent | Built-in |
| Selenium | Medium | Medium | Good | Via integration |
| Scrapy | Low | High | Moderate | Via integration |
| BeautifulSoup | High | Low | Poor | None |

Let's look at what makes each tool special:

- InstantAPI.ai: Works right out of the box with smart features like JavaScript support

- Selenium: Great for complex web interactions if you know how to code

- Scrapy: Perfect for big projects but needs programming skills

- BeautifulSoup: Easy to use but best for simple jobs
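For a sense of what those "simple jobs" look like, here's a minimal sketch of rule-based extraction from static HTML. To stay dependency-free it uses Python's built-in `html.parser` instead of BeautifulSoup, but the idea is the same: you write a rule (here, "grab every `<h2>` with the class `product-title`", a hypothetical page layout) and the parser applies it. If the site renames that class, the rule silently stops matching, and because the parser only sees the HTML the server sends, content rendered later by JavaScript never shows up.

```python
from html.parser import HTMLParser

class ProductTitleParser(HTMLParser):
    """Collect the text inside every <h2 class="product-title"> (hypothetical layout)."""

    def __init__(self):
        super().__init__()
        self.titles: list[str] = []
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        # attrs is a list of (name, value) pairs; value can be None
        classes = (dict(attrs).get("class") or "").split()
        if tag == "h2" and "product-title" in classes:
            self._in_title = True
            self.titles.append("")

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_title = False

    def handle_data(self, data):
        # Text from nested tags (e.g. a <span>) inside the title is kept too
        if self._in_title:
            self.titles[-1] += data

def extract_titles(html: str) -> list[str]:
    parser = ProductTitleParser()
    parser.feed(html)
    parser.close()
    return [title.strip() for title in parser.titles]

page = ('<div><h2 class="product-title big">Widget</h2><h2>Other</h2>'
        '<h2 class="product-title"> Gadget <span>Pro</span></h2></div>')
print(extract_titles(page))  # ['Widget', 'Gadget Pro']
```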

Comparing Popular Web Scraping Tools

InstantAPI.ai shines when you want something that just works. Real estate companies love it for pulling data from sites like Zillow - it handles those tricky JavaScript-heavy pages without breaking a sweat.

If you're a developer who likes to tinker, Selenium or Scrapy might be your speed. They let you customize everything, but you'll need to know your way around code. BeautifulSoup is like a bicycle in a world of cars - great for quick trips but not for the long haul.

The bottom line? AI-powered scraping tools are now must-haves for businesses that want to stay ahead. They turn web data into business gold - helping companies make smarter moves based on real information.


Best Practices and Ethical Guidelines

Tips for Reliable Data Extraction

Want to get better results from your web scraping? Here's what actually works:

Keep your scraping tools up to date - websites change all the time, and outdated tools can miss important data. That gets expensive: according to Gartner, poor data quality costs organizations an average of $12.9 million each year.
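One practical way to notice that a site has changed under you is to watch how often each field actually gets filled. Here's a minimal sketch, assuming records are dictionaries and picking a 90% fill-rate threshold arbitrarily: if a field that's normally present suddenly comes back empty for most rows, the scraper's rules have probably stopped matching the page.

```python
def detect_layout_drift(records: list[dict], required: list[str],
                        threshold: float = 0.9) -> list[str]:
    """Return the required fields whose fill rate fell below `threshold`.

    A sudden drop often means the page markup changed and the extraction
    rules no longer match, rather than the data itself disappearing.
    """
    if not records:
        return list(required)  # nothing extracted at all: treat every field as broken
    drifted = []
    for field in required:
        # Count values that are present and non-empty (0 and 0.0 still count as filled)
        filled = sum(1 for rec in records if rec.get(field) not in (None, ""))
        if filled / len(records) < threshold:
            drifted.append(field)
    return drifted

batch = [{"name": "A", "price": 1.0}, {"name": "B", "price": None}, {"name": "C", "price": ""}]
print(detect_layout_drift(batch, ["name", "price"]))  # ['price']
```

Running a check like this after every scrape turns "the site quietly changed" into an alert instead of a gap you discover weeks later.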

Premium proxies are your friend. Companies like Oxylabs and Bright Data offer high-quality proxy services that help you avoid getting blocked while scraping at scale.
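Proxy providers often hand you a list of endpoints to spread requests across. As a minimal sketch of simple round-robin rotation using only Python's standard library, the snippet below builds a `urllib` opener for each proxy in turn. The proxy URLs and credentials are placeholders, not real Oxylabs or Bright Data endpoints; check your provider's documentation for the actual format.

```python
import itertools
import urllib.request

# Placeholder endpoints; a real provider supplies its own hosts, ports, and credentials.
PROXIES = [
    "http://user:pass@proxy1.example.com:8000",
    "http://user:pass@proxy2.example.com:8000",
]

def proxy_openers(proxies):
    """Yield urllib openers that route traffic through each proxy in turn, forever."""
    for proxy in itertools.cycle(proxies):
        handler = urllib.request.ProxyHandler({"http": proxy, "https": proxy})
        yield urllib.request.build_opener(handler)

# Usage (not executed here): each request goes out through the next proxy.
# openers = proxy_openers(PROXIES)
# with next(openers).open("https://example.com/products", timeout=10) as resp:
#     html = resp.read()
```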

For smarter scraping, AI tools like InstantAPI.ai can help. They make the process smoother and help filter out data you shouldn't be collecting in the first place.

Staying Legal and Ethical in Web Scraping

Let's talk about keeping things above board. Web scraping isn't the Wild West - there are rules you need to follow.

First up: know your laws. GDPR and CCPA aren't just fancy acronyms - they're serious business. Just ask the European company that had to pay €1.2 million in 2021 for scraping user data without permission.

Here's what you need to do:

- Read those Terms of Service carefully - many websites say "no scraping allowed"

- Be open about what you're doing - PromptCloud does this well with clear data collection policies

- Keep personal info off-limits - no scraping names, social security numbers, or health records without explicit permission
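If you do end up with text that might contain personal data, one safety net is to redact it before anything gets stored. This is a minimal sketch with two deliberately simple regular expressions (email addresses and US-style Social Security numbers); it's an illustration, not a compliance tool. Names and health details can't be reliably caught by patterns like these, so the better rule is still not to collect them in the first place.

```python
import re

# Deliberately simple patterns for illustration; real PII detection needs far more.
PII_PATTERNS = {
    "email": re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}"),
    "us_ssn": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
}

def redact_pii(text: str) -> str:
    """Replace anything matching a PII pattern with a [REDACTED:<kind>] placeholder."""
    for kind, pattern in PII_PATTERNS.items():
        text = pattern.sub(f"[REDACTED:{kind}]", text)
    return text

print(redact_pii("Contact jane.doe@example.com, SSN 123-45-6789."))
# Contact [REDACTED:email], SSN [REDACTED:us_ssn].
```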

"Ensuring that data extraction complies with all legal and ethical standards is paramount," states PromptCloud in its guide on ethical data practices.

Remember: just because you can scrape something doesn't mean you should. When in doubt, ask for permission first.

Before you start any scraping project, double-check your data validation process. Look for missing info, duplicates, and anything that seems off. Bad data can mess up your whole project.
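Here's a minimal sketch of that kind of pre-analysis check in Python. The field names (`sku`, `name`, `price`) and the "negative price is suspicious" rule are hypothetical stand-ins for whatever your own data should look like. The function only reports problems instead of fixing them, so a person can decide what to do.

```python
from collections import Counter

def validate(records: list[dict], required: list[str], key: str) -> dict:
    """Report missing fields, duplicate keys, and suspicious values before analysis."""
    # How many rows are missing each required field
    missing = {f: sum(1 for r in records if r.get(f) in (None, "")) for f in required}
    # Key values that appear more than once
    key_counts = Counter(r.get(key) for r in records)
    duplicates = sorted(k for k, n in key_counts.items() if n > 1 and k is not None)
    # Hypothetical sanity rule: a negative price is almost certainly an extraction error
    suspicious = [r for r in records
                  if isinstance(r.get("price"), (int, float)) and r["price"] < 0]
    return {"missing": missing, "duplicates": duplicates, "suspicious": suspicious}

rows = [
    {"sku": "A1", "name": "Widget", "price": 9.99},
    {"sku": "A1", "name": "Widget", "price": 9.99},
    {"sku": "B2", "name": "", "price": -5},
]
print(validate(rows, required=["name", "price"], key="sku"))
```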

How Businesses Use Data Extraction

Data extraction helps companies turn raw data into practical insights that guide market research decisions and make daily operations run more smoothly.

Using Data for Market Research

Market research helps companies make smart business moves, and data extraction is a key player in getting the right information. Companies pull data from many sources - competitor websites, social media, and public databases - to spot patterns, figure out what customers want, and fine-tune their approach.

Take Starbucks, for example. They use Geographic Information System (GIS) tech to gather and study data about local populations, how people move around, and what businesses are nearby. This smart approach to picking store locations helped bump up their global same-store sales by 7% in 2022.

Amazon and smaller shops both use web scraping to stay competitive, but in different ways. Amazon keeps tabs on its competitors' prices and on what customers are saying, then adjusts its own prices. Meanwhile, smaller businesses turn to tools like InstantAPI.ai to find trending products and gaps in the market that others might have missed.
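The article doesn't describe how Amazon actually sets prices, so treat this as a hypothetical illustration of the basic loop: scraped competitor prices go in, a suggested price comes out. The rule here (undercut the cheapest competitor by one cent, but never drop below a floor you set) is just one simple choice among many.

```python
def suggest_price(our_price: float, competitor_prices: list[float],
                  floor: float, undercut: float = 0.01) -> float:
    """Hypothetical repricing rule: slightly undercut the cheapest competitor, never below `floor`.

    If no competitor prices were scraped, keep the current price unchanged.
    """
    if not competitor_prices:
        return our_price
    target = min(competitor_prices) - undercut
    return round(max(target, floor), 2)

print(suggest_price(25.00, [24.99, 26.50], floor=20.00))  # 24.98
print(suggest_price(25.00, [15.00], floor=20.00))         # 20.0
```

The floor matters: without it, a single mis-scraped competitor price could drag your price somewhere you'd never choose.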

Here's something interesting about market research with data extraction: During COVID-19, Zoom used data to spot the growing need for video calls before everyone else, helping them get ready for the huge jump in users.

Improving Workflows with Automation

Data extraction isn't just about market insights - it's also about making work easier. Tools like InstantAPI.ai do the heavy lifting of collecting data and can handle website changes on their own. The numbers speak for themselves: a 2023 survey found that 72% of business leaders cut their operating costs by about 15% using AI-powered automation.

Let's look at some real examples:

Zillow, the real estate platform, uses automatic data scraping to keep their property listings fresh. This means house hunters always see the newest prices and availability.
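Zillow's internal pipeline isn't public, so here's a generic, hypothetical sketch of the core idea behind keeping listings fresh: compare today's scrape with the previous one and work out what's new, what's gone, and what changed. Listings are keyed by a hypothetical listing ID, with the price as the value.

```python
def diff_listings(old: dict[str, float], new: dict[str, float]) -> dict[str, list]:
    """Compare two scraped snapshots keyed by listing ID (values are prices)."""
    added = sorted(new.keys() - old.keys())      # appeared since the last scrape
    removed = sorted(old.keys() - new.keys())    # no longer listed
    changed = sorted(
        (lid, old[lid], new[lid])                # (listing ID, old price, new price)
        for lid in old.keys() & new.keys()
        if old[lid] != new[lid]
    )
    return {"added": added, "removed": removed, "changed": changed}

yesterday = {"L1": 500000, "L2": 350000, "L3": 420000}
today = {"L1": 495000, "L3": 420000, "L4": 610000}
print(diff_listings(yesterday, today))
# {'added': ['L4'], 'removed': ['L2'], 'changed': [('L1', 500000, 495000)]}
```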

FedEx puts automated data extraction to work in their shipping operations. They track packages and pick the best delivery routes, which means fewer delays and lower costs.

Here's a real-world win: In 2021, a medium-sized retail chain saved more than 1,200 hours in just one year by using data extraction tools to track their inventory automatically. That's time their team could spend on more important tasks instead of counting stock.

Conclusion: Using Data Extraction for Better Decisions

Data extraction helps businesses turn raw data into clear insights they can act on right away. Let's look at what we've learned and what you can do next.

Three key things to remember:

- Stay on the right side of the law and ethics. This builds trust and protects your business.

- Look at real success stories - companies like Zillow and FedEx show how data extraction can improve operations and customer service.

- Tools like InstantAPI.ai make it easier with AI-powered scraping and automatic updates.

Ready to get started? Here's your action plan:

Start with AI tools that fit your needs. InstantAPI.ai works well whether you're just starting out or running a large company.

Make ethics and quality your top priorities:

- Only collect data from public sources

- Follow each website's rules

- Check your data quality often

- Keep detailed records
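Following a website's rules starts with reading its Terms of Service, which no script can do for you. One machine-readable signal you can check automatically is the site's robots.txt file, and Python's standard library ships a parser for it. This sketch feeds the parser an example robots.txt (made up for illustration) as plain text, so nothing is downloaded; in practice you'd fetch the site's real file first, for instance with `RobotFileParser.set_url()` and `read()`.

```python
from urllib.robotparser import RobotFileParser

# Example robots.txt content, made up for illustration.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""

def load_rules(robots_txt: str) -> RobotFileParser:
    """Build a parser from robots.txt text that has already been downloaded."""
    rules = RobotFileParser()
    rules.parse(robots_txt.splitlines())
    return rules

rules = load_rules(ROBOTS_TXT)
AGENT = "my-scraper"  # hypothetical user-agent name for your scraper
print(rules.can_fetch(AGENT, "https://example.com/products"))      # True
print(rules.can_fetch(AGENT, "https://example.com/private/data"))  # False
print(rules.crawl_delay(AGENT))                                     # 5
```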

Connect your data to your business goals. Use real-time information to spot trends and make smarter choices before your competitors do.

Keep learning as technology changes. New tools and methods pop up all the time - staying informed helps you pick the best ones for your needs.

Pro tip: Start small, test your approach, and scale up what works. It's better to do a few things well than to try everything at once.

FAQs

Do companies use web scraping?

Web scraping isn't just a tech buzzword - it's a key tool that companies use every day to get ahead. Let's look at how real businesses put web scraping to work.

Take ZoomInfo - this B2B data giant uses web scraping to help build massive contact databases. ZoomInfo's scrapers have helped it collect over 100 million business contacts, making it easier for sales teams to find and reach the right people.

Want to see web scraping in action? Look at Starbucks. They mix GIS with scraped data to pick the perfect spots for new stores. This smart approach helped them boost their revenue by 9% in 2023.

And then there's Amazon - they're constantly watching their competitors' prices and adjusting their own in real-time. It's part of why they stay at the top of the e-commerce game.

Digital marketing agencies love web scraping too. They use it to keep tabs on what's hot in their industry and how well ads are performing. Instead of spending hours gathering data manually, they let automation do the heavy lifting.

Here's something cool: Even small businesses and startups are getting in on the action. Thanks to budget-friendly tools and APIs, they can now access the same kind of market data as the big players. It's like having a corporate research team without the corporate price tag.

But here's the catch: Companies need to play by the rules. Web scraping comes with legal and ethical standards - you can't just grab data however you want. That means:

- Following website terms of service

- Being gentle with server requests

- Using the collected data responsibly
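Being gentle with server requests mostly comes down to pacing. Here's a minimal sketch that spaces requests at least `min_interval` seconds apart. The `fetch` argument is a hypothetical stand-in for whatever actually downloads a page, passed in so the pacing logic can be tested without a network. The 2-second default is an arbitrary example; if a site's robots.txt specifies a Crawl-delay, that's a sensible value to use instead.

```python
import time
from typing import Callable, Iterable

def polite_fetch_all(urls: Iterable[str], fetch: Callable[[str], str],
                     min_interval: float = 2.0) -> list[str]:
    """Call `fetch` for each URL, starting requests at least `min_interval` seconds apart."""
    results = []
    last_start = None
    for url in urls:
        if last_start is not None:
            # Sleep only for whatever part of the interval hasn't already passed
            wait = min_interval - (time.monotonic() - last_start)
            if wait > 0:
                time.sleep(wait)
        last_start = time.monotonic()
        results.append(fetch(url))
    return results

# Usage with a fake fetcher so nothing touches the network:
print(polite_fetch_all(["a", "b", "c"], lambda u: u.upper(), min_interval=0.1))  # ['A', 'B', 'C']
```

Using `time.monotonic()` rather than wall-clock time keeps the spacing correct even if the system clock is adjusted mid-run.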