Want to store web-scraped data efficiently? Here's the quick answer:

- SQL databases like PostgreSQL are great for structured data with clear relationships (e.g., product prices, user reviews).

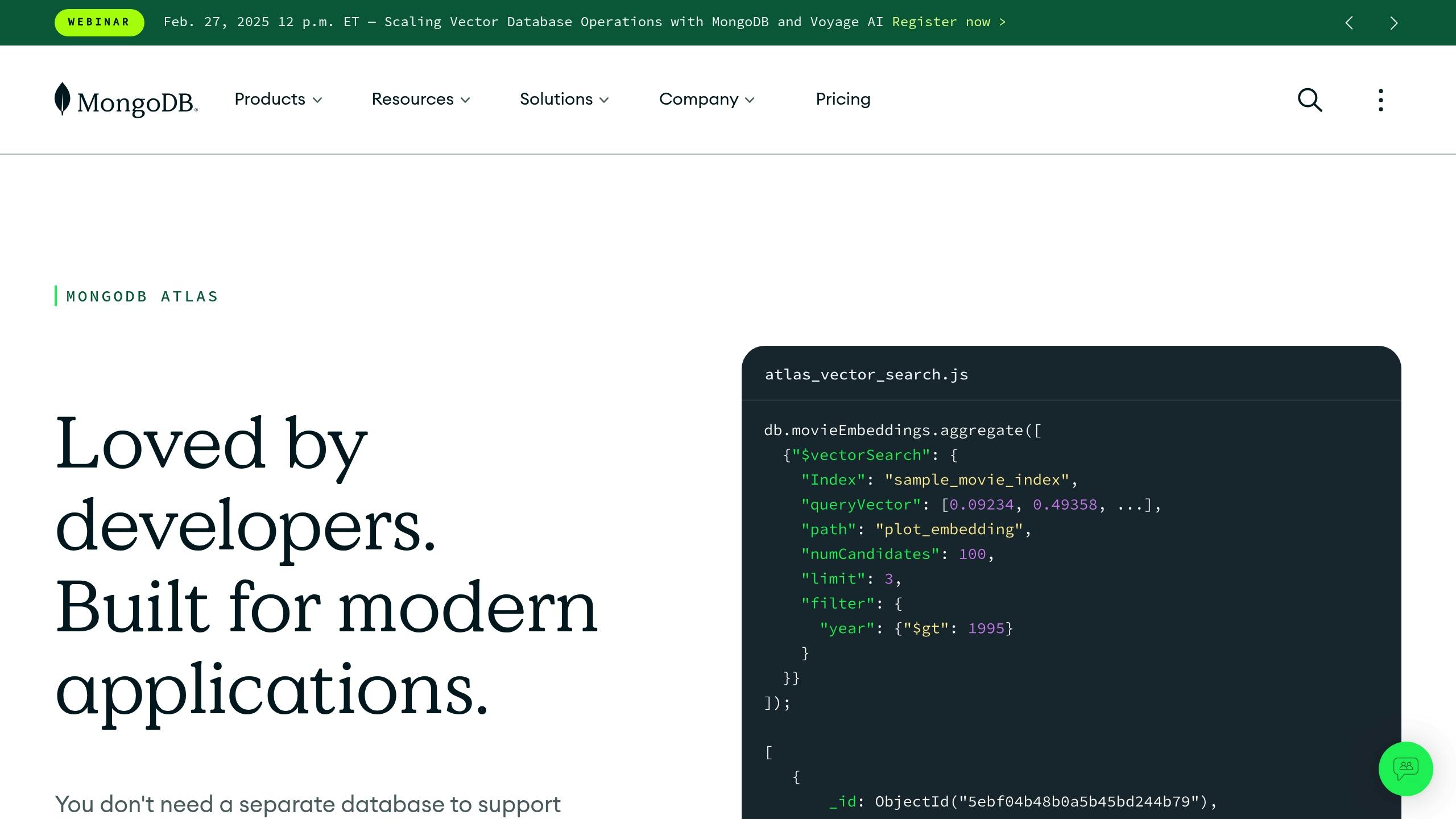

- NoSQL databases like MongoDB handle unstructured or semi-structured data (e.g., dynamic content, JSON).

Key Differences:

- SQL: Structured, relational. Best for complex queries and transactional accuracy.

- NoSQL: Flexible, scalable. Ideal for large, diverse datasets.

Steps to Get Started:

- Choose a Scraping Tool: Scrapy, ScrapingBee, Oxylabs, etc.

- Set Up Your Database: Create schemas for SQL or document structures for NoSQL.

- Connect and Store Data: Use Python libraries like SQLAlchemy or PyMongo.

- Optimize Performance: Use indexing, batch processing, and sharding for large datasets.

Quick Comparison Table:

| Feature | SQL Databases (e.g., PostgreSQL) | NoSQL Databases (e.g., MongoDB) |
|---|---|---|
| Data Structure | Structured, relational | Flexible, unstructured |
| Scalability | Vertical (add power) | Horizontal (add servers) |
| Query Complexity | Handles complex joins well | Limited for complex queries |
| Best Use Case | E-commerce pricing, analytics | Dynamic web data, JSON storage |


Setup and Tool Selection

Before you commit to a database, confirm that your scraping tool, client library, and database work together, so integration does not become the bottleneck.

Web Scraping Tool Options

Pick a scraping tool that suits your technical requirements and data goals. Here are some popular options:

| Tool | Key Features | Best Database Integration |
|---|---|---|
| Scrapy | Advanced crawling, built-in pipelines | PostgreSQL, MongoDB |
| ScrapingBee | JavaScript rendering, CAPTCHA handling | SQL databases via API |
| Oxylabs | AI-powered parsing, large proxy pool | NoSQL databases |
| InstantAPI.ai | No-code option, AI automation | SQL and NoSQL via API |

Once you've chosen your tool, set up your environment to connect it with your database system.

Environment Setup Steps

To link your scraping tools with your database, follow these steps:

- Set Up Your Development Environment: If you're using Python, create virtual environments to manage dependencies more easily.

- Establish Database Connections: Use environment variables to store credentials securely and test the connection with sample data before moving forward.

- Configure Proxies: Rotating proxies help avoid IP blocks. Tools like Oxylabs and ScrapingBee include built-in proxy rotation features.
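As a minimal sketch of the credentials step above, the function below builds a PostgreSQL connection URL from environment variables and fails early if one is missing. The variable names (`SCRAPER_DB_USER` and so on) are hypothetical and chosen only for this example; they are not defined by any library.

```python
import os
from urllib.parse import quote_plus

# Hypothetical variable names for this example; use whatever your deployment defines.
REQUIRED_VARS = (
    "SCRAPER_DB_USER",
    "SCRAPER_DB_PASSWORD",
    "SCRAPER_DB_HOST",
    "SCRAPER_DB_NAME",
)


def build_database_url(env=os.environ):
    """Build a PostgreSQL connection URL from environment variables."""
    missing = [name for name in REQUIRED_VARS if not env.get(name)]
    if missing:
        raise RuntimeError(f"Missing environment variables: {', '.join(missing)}")
    user = quote_plus(env["SCRAPER_DB_USER"])
    # Percent-encode the password so characters like '@' or '/' do not break the URL.
    password = quote_plus(env["SCRAPER_DB_PASSWORD"])
    host = env["SCRAPER_DB_HOST"]
    port = env.get("SCRAPER_DB_PORT", "5432")  # default PostgreSQL port
    name = env["SCRAPER_DB_NAME"]
    return f"postgresql://{user}:{password}@{host}:{port}/{name}"
```

The returned string can be passed to a client such as SQLAlchemy's `create_engine`, which keeps the password out of source control.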

Data Size and Schedule Planning

Now that your tools and environment are ready, think about your current and future data needs.

- Plan for Storage: Estimate how much storage you'll need, account for annual growth, and decide how to archive older data.

- Set a Scraping Schedule: Run scraping tasks during off-peak hours to avoid conflicts with database maintenance. Decide between real-time or batch processing based on your project's requirements.
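The storage estimate above is simple arithmetic. Here is a rough sketch, assuming a steady daily record count, an average record size, and compound annual growth; all the figures in the example are made up for illustration.

```python
def projected_storage_gb(records_per_day, avg_record_bytes, years, annual_growth=0.0):
    """Return the estimated storage added in each year, in decimal gigabytes.

    annual_growth is a fraction (0.25 means volume grows 25% per year).
    Index and replication overhead are not included.
    """
    per_year = []
    daily_bytes = records_per_day * avg_record_bytes
    for year in range(years):
        yearly_bytes = daily_bytes * 365 * (1 + annual_growth) ** year
        per_year.append(yearly_bytes / 1e9)
    return per_year


# Example: 50,000 records a day at roughly 2 KB each, growing 25% per year.
estimate = projected_storage_gb(50_000, 2_000, years=3, annual_growth=0.25)
total_gb = sum(estimate)
```

Treat the result as a lower bound: indexes, write-ahead logs, and replicas can add substantially to the raw data size.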

For large datasets, consider breaking them into monthly partitions and use indexing to keep queries fast. This method helps you handle growing data volumes efficiently while maintaining performance.
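To make monthly partitioning concrete, the sketch below generates the PostgreSQL statement for one monthly partition. It assumes a hypothetical parent table (here `scraped_items`) that was already created with `PARTITION BY RANGE` on a timestamp column, and it assumes the table name comes from your own code rather than from user input.

```python
from datetime import date


def monthly_partition_ddl(parent_table, year, month):
    """Build the DDL for one monthly partition of a range-partitioned table."""
    start = date(year, month, 1)
    # First day of the following month; the upper bound is exclusive in PostgreSQL.
    end = date(year + 1, 1, 1) if month == 12 else date(year, month + 1, 1)
    partition = f"{parent_table}_{start:%Y_%m}"
    return (
        f"CREATE TABLE IF NOT EXISTS {partition} PARTITION OF {parent_table} "
        f"FOR VALUES FROM ('{start.isoformat()}') TO ('{end.isoformat()}');"
    )
```

A scheduled job could run this a few days before each month starts, so that incoming rows always have a partition to land in.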

SQL Database Implementation

Build an efficient SQL system to store and manage scraped data effectively.

SQL Database Setup for Scraped Content

Start by designing a clear and logical database schema that aligns with your scraped data. Here's an example using PostgreSQL:

    CREATE TABLE product_data (
        id SERIAL PRIMARY KEY,
        url_hash VARCHAR(64) UNIQUE,  -- UNIQUE already creates an index on url_hash
        product_name VARCHAR(255),
        price DECIMAL(10,2),
        description TEXT,
        scraped_at TIMESTAMP DEFAULT CURRENT_TIMESTAMP
    );

    -- Speeds up queries that filter or sort by scrape time
    CREATE INDEX idx_scraped_at ON product_data(scraped_at);

Using a hashed URL as a unique key prevents duplicate entries. For instance, Ahmetkocadinc applied this method in a real estate scraping project, transferring property listings from emlakjet.com into a SQL database.
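The source does not say which hash function to use. As one possible sketch, SHA-256 produces a 64-character hex digest, which happens to fit the `VARCHAR(64)` column above. The light normalization step (lowercasing the scheme and host, dropping the fragment) is an assumption added here so that trivially different URLs map to the same key.

```python
import hashlib
from urllib.parse import urlsplit, urlunsplit


def url_hash(url):
    """Return a SHA-256 hex digest (64 characters) of a lightly normalized URL."""
    parts = urlsplit(url.strip())
    # Scheme and host are case-insensitive; the fragment never reaches the server.
    normalized = urlunsplit(
        (parts.scheme.lower(), parts.netloc.lower(), parts.path, parts.query, "")
    )
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()
```

Whether to also strip tracking parameters or trailing slashes depends on the site, so this sketch leaves the path and query untouched.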

SQL Data Pipeline Creation

Here’s a basic Python pipeline for storing data:

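    from sqlalchemy import Column, DateTime, Integer, Numeric, String, Text, create_engine, func
    from sqlalchemy.orm import declarative_base, sessionmaker  # SQLAlchemy 1.4+

    Base = declarative_base()

    class ProductData(Base):
        """ORM model for the product_data table defined above."""
        __tablename__ = 'product_data'
        id = Column(Integer, primary_key=True)
        url_hash = Column(String(64), unique=True)
        product_name = Column(String(255))
        price = Column(Numeric(10, 2))
        description = Column(Text)
        scraped_at = Column(DateTime, server_default=func.now())

    engine = create_engine('postgresql://user:password@localhost:5432/scraper_db')
    Session = sessionmaker(bind=engine)

    def store_data(items):
        """Insert a list of dicts in one transaction; roll back on any error."""
        session = Session()
        try:
            session.bulk_insert_mappings(ProductData, items)
            session.commit()
        except Exception:
            session.rollback()
            raise  # re-raise with the original traceback
        finally:
            session.close()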

This pipeline can be enhanced with performance improvements, as outlined below.

SQL Performance Improvements

Boost your database's efficiency with these strategies:

| Method | Benefit | How to Apply |
|---|---|---|
| Batch Processing | Faster data insertion | Group multiple records per transaction |
| Indexed Queries | Quicker query execution | Add indexes to frequently searched columns |
| Partitioning | Faster data retrieval | Divide data by date or other criteria |
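The batch-processing row above can be sketched with the standard library. This example uses `sqlite3` as a stand-in for PostgreSQL so it runs without a server; the batch size of 500 is an arbitrary assumption. In PostgreSQL the equivalent of `INSERT OR IGNORE` is `INSERT ... ON CONFLICT (url_hash) DO NOTHING`.

```python
import sqlite3

BATCH_SIZE = 500  # assumed batch size; tune for your workload


def store_in_batches(conn, items, batch_size=BATCH_SIZE):
    """Insert rows one transaction per batch, skipping duplicate url_hash values."""
    for start in range(0, len(items), batch_size):
        batch = items[start:start + batch_size]
        with conn:  # commits on success, rolls back if the batch raises
            conn.executemany(
                "INSERT OR IGNORE INTO product_data (url_hash, product_name, price) "
                "VALUES (:url_hash, :product_name, :price)",
                batch,
            )


# In-memory database with a reduced version of the product_data table.
conn = sqlite3.connect(":memory:")
conn.execute(
    "CREATE TABLE product_data ("
    "id INTEGER PRIMARY KEY, url_hash TEXT UNIQUE, product_name TEXT, price REAL)"
)
```

Committing once per batch rather than once per row reduces the number of transactions, and a failure only rolls back the batch in progress.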

Additional tips for better performance:

- Select only the required columns instead of using `SELECT *`.

- Use `INNER JOIN` for combining tables instead of outdated comma-separated syntax.

- Apply `WHERE` filters before `GROUP BY` to reduce unnecessary processing.

For large-scale operations, consider implementing a queuing system to handle database writes. This can help avoid overloading the server during peak scraping times and maintain consistent performance levels.
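One way to picture such a queue, as a sketch rather than a production design: scraper code puts records on a bounded in-process queue, and a single writer thread drains it in batches. The names `writer_loop`, `STOP`, and `write_batch` are hypothetical. Here `write_batch` just appends to a list, where a real pipeline would call a database write function.

```python
import queue
import threading

STOP = object()  # sentinel that tells the writer to flush and exit


def writer_loop(write_queue, write_batch, batch_size=100, idle_timeout=1.0):
    """Drain the queue and hand records to write_batch in groups of batch_size."""
    buffer = []
    while True:
        try:
            item = write_queue.get(timeout=idle_timeout)
        except queue.Empty:
            item = None  # nothing arrived in time; flush whatever is buffered
        if item is not None and item is not STOP:
            buffer.append(item)
        if buffer and (len(buffer) >= batch_size or item is None or item is STOP):
            write_batch(buffer)
            buffer = []
        if item is STOP:
            return


# Usage: scrapers call write_queue.put(record); one thread owns all writes.
write_queue = queue.Queue(maxsize=10_000)  # bounded, so scrapers block instead of exhausting memory
written_batches = []
writer = threading.Thread(
    target=writer_loop,
    args=(write_queue, written_batches.append),
    kwargs={"batch_size": 3},
)
writer.start()
for n in range(7):
    write_queue.put({"id": n})
write_queue.put(STOP)
writer.join()
```

Because records must not be `None` in this sketch, it suits dict-shaped scraped items. For multi-process or multi-machine scrapers an external broker would take the place of `queue.Queue`.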


NoSQL Database Implementation

NoSQL databases are a great choice for managing unstructured web data, especially when dealing with web scraping. Their flexible schemas and scalability make them well-suited for handling dynamic, complex datasets. Here's a breakdown of how to implement NoSQL databases effectively.

NoSQL Data Structure Design

When creating NoSQL schemas for scraped data, tailor the design to your access patterns. For instance, here's a MongoDB document structure for e-commerce data:

    {
      "_id": ObjectId("507f1f77bcf86cd799439011"),
      "product_url": "https://store.example.com/product-123",
      "url_hash": "b5d1d0b794",
      "metadata": {
        "scrape_timestamp": "2025-02-27T08:30:00-05:00",
        "source": "AmazonUS"
      },
      "data": {
        "title": "Wireless Headphones",
        "price": 99.99,
        "reviews": [
          {
            "rating": 4.5,
            "comment": "Great sound quality",
            "date": "2025-02-26T14:20:00-05:00"
          }
        ]
      }
    }

For time-series data, such as tracking price changes, Cassandra's column-family model works well. Here's an example:

| Row Key (Product ID) | Timestamp | Price | Stock |
|---|---|---|---|
| PROD123 | 2025-02-27 08:00:00 | $99.99 | 45 |
| PROD123 | 2025-02-27 12:00:00 | $89.99 | 42 |
| PROD123 | 2025-02-27 16:00:00 | $94.99 | 38 |

NoSQL Connection Setup

Setting up connections to NoSQL databases is straightforward. Here's how to connect to MongoDB using PyMongo:

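    from datetime import datetime, timezone

    from pymongo import MongoClient

    class ScraperDB:
        def __init__(self):
            # Pool up to 50 connections; wait at most 2.5 s for a free one
            self.client = MongoClient('mongodb://localhost:27017/',
                                      maxPoolSize=50,
                                      waitQueueTimeoutMS=2500)
            self.db = self.client.scraper_database

        def store_product(self, product_data):
            # Note: this replaces any 'metadata' key already present in product_data
            product_data['metadata'] = {
                'scrape_timestamp': datetime.now(timezone.utc),  # timezone-aware UTC
                'version': '2.1'
            }
            return self.db.products.insert_one(product_data)

        def close(self):
            # Call explicitly when done; __del__ is not guaranteed to run at a predictable time
            self.client.close()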

For Cassandra, you can use the DataStax Python driver:

    from cassandra.cluster import Cluster
    from cassandra.policies import DCAwareRoundRobinPolicy

    cluster = Cluster(
        ['10.0.0.1', '10.0.0.2'],
        load_balancing_policy=DCAwareRoundRobinPolicy(local_dc='US_EAST')
    )
    session = cluster.connect('scraper_keyspace')

These setups provide a solid foundation for handling large-scale data.

Managing Large Datasets

When working with massive datasets, efficiency is key. Here are some strategies:

- Sharding: Distribute data across nodes to balance the load. Once you enable sharding on a collection and choose a shard key, MongoDB balances the data across shards automatically.

- Batch Operations: Combine multiple operations to minimize network overhead. For example:

      from pymongo import UpdateOne

      # Upsert each item by url_hash; ordered=False lets remaining writes continue if one fails
      batch_ops = [
          UpdateOne({'url_hash': item['url_hash']}, {'$set': item}, upsert=True)
          for item in scraped_items
      ]
      db.products.bulk_write(batch_ops, ordered=False)

- Indexing: Optimize queries by creating indexes tailored to your data access patterns:

      db.products.create_index([('metadata.source', 1), ('metadata.scrape_timestamp', -1)])

These strategies ensure your system can handle high-velocity data efficiently.

Implementation Tips and Standards

Building on database integration strategies, these tips will help ensure your web scraping workflow stays efficient and reliable. A well-executed scraping process requires maintaining data accuracy, managing processing loads, and keeping an eye on system performance.

Data Quality Control

Maintaining data quality is essential when dealing with large-scale web scraping. One way to enforce this is by using structured validation methods. For example, here's how you can implement JSON schema validation:

    import logging

    from jsonschema import ValidationError, validate

    logger = logging.getLogger(__name__)

    schema = {
        "type": "object",
        "properties": {
            "product_id": {"type": "string", "pattern": "^[A-Z]{2}[0-9]{6}$"},
            "price": {"type": "number", "minimum": 0.01},
            "availability": {"type": "boolean"},
            # "format" is only enforced when validate() is given a format_checker
            "last_updated": {"type": "string", "format": "date-time"}
        },
        "required": ["product_id", "price", "availability"]
    }

    def validate_product_data(data):
        try:
            validate(instance=data, schema=schema)
            return True
        except ValidationError as e:
            logger.error("Validation failed: %s", e.message)
            return False

To catch numerical anomalies, you can apply the interquartile range (IQR) rule, for example with Pandas' quantile() method: values that fall far outside the middle 50% of the data are flagged as likely outliers.
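As a sketch of the IQR check, the example below uses the standard library's `statistics.quantiles` instead of Pandas so it runs without extra dependencies. With Pandas the quartiles would come from `Series.quantile(0.25)` and `Series.quantile(0.75)`, which interpolate slightly differently. The 1.5 multiplier is the conventional default, not something the source specifies.

```python
import statistics


def iqr_outliers(values, k=1.5):
    """Return values lying more than k * IQR below Q1 or above Q3."""
    q1, _, q3 = statistics.quantiles(values, n=4)
    iqr = q3 - q1
    lower, upper = q1 - k * iqr, q3 + k * iqr
    return [v for v in values if v < lower or v > upper]


# A price of 9.99 among values near 90-100 is probably a parsing error.
prices = [99.99, 89.99, 94.99, 97.50, 92.00, 9.99, 95.25]
suspicious = iqr_outliers(prices)
```

Flagged values are better routed to review than dropped automatically, since a genuine clearance price can also look like an outlier.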

Instant vs Scheduled Processing

The choice between instant and scheduled processing can have a big impact on system performance. To prevent overloading servers, use rate limiting. Here's an example:

    import requests
    from ratelimit import limits, sleep_and_retry

    @sleep_and_retry
    @limits(calls=60, period=60)  # at most 60 calls per 60 seconds
    def scrape_with_rate_limit(url):
        return requests.get(url, timeout=10)

By controlling how often requests are sent, you can ensure smoother operations. At the same time, monitoring the frequency of these processes is key to avoiding performance issues.

System Performance Tracking

Keep tabs on how your scraping and database operations are performing by using monitoring tools like Prometheus. Here's a quick example:

    import requests
    from prometheus_client import Counter, Histogram

    scrape_duration = Histogram('scrape_duration_seconds', 'Time spent scraping URLs')
    failed_scrapes = Counter('failed_scrapes_total', 'Number of failed scraping attempts')

    def track_scraping_performance(url):
        with scrape_duration.time():  # records elapsed time when the block exits
            try:
                return requests.get(url, timeout=10)
            except requests.RequestException:
                failed_scrapes.inc()
                raise  # count the failure, then let the caller handle it

Regularly track metrics like scraping duration and failure rates to identify and address potential bottlenecks before they become serious issues. This helps maintain smooth and reliable workflows.

Common Technical Problems and Solutions

Integrating web scraping with databases often presents technical challenges. Addressing these efficiently can strengthen your scraping-to-database workflow.

JavaScript and Dynamic Content Scraping

Modern websites frequently use JavaScript to load dynamic content. For static JavaScript data embedded in <script> tags, you can extract it directly with a straightforward approach:

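    import json

    from bs4 import BeautifulSoup

    def extract_js_data(html_content):
        soup = BeautifulSoup(html_content, 'html.parser')
        # Assumes the page embeds JSON in <script id="product-data">
        script_tag = soup.find('script', {'id': 'product-data'})
        if script_tag is None or not script_tag.string:
            return None  # tag missing or empty
        return json.loads(script_tag.string)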

When dealing with more complex scenarios involving dynamic rendering, browser automation tools become indispensable. Here's how some popular tools compare:

| Tool | Best For | Key Feature | Performance Impact |
|---|---|---|---|
| Playwright | Large-scale scraping | Multi-browser support, async API | Medium resource usage |
| Puppeteer | Chrome-specific needs | Deep Chrome integration | Lower resource usage |
| Selenium | Legacy systems | Broad language support | Higher resource usage |

Access Limits and IP Management

Once you’ve tackled dynamic content, rate limits and IP restrictions are the next hurdles. A well-thought-out IP management strategy is essential for uninterrupted scraping. Here's an example of how to handle requests intelligently:

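    import random
    from time import sleep

    import requests

    # Example pool; replace with User-Agent strings appropriate for your project
    USER_AGENTS = [
        'Mozilla/5.0 (Windows NT 10.0; Win64; x64)',
        'Mozilla/5.0 (Macintosh; Intel Mac OS X 10_15_7)',
    ]

    def generate_random_headers():
        return {'User-Agent': random.choice(USER_AGENTS)}

    def smart_request(url, proxy_list):
        # Each entry is a requests-style mapping, e.g. {'http': ..., 'https': ...}
        proxy = random.choice(proxy_list)
        headers = generate_random_headers()
        sleep(random.uniform(1.5, 3.0))  # random delay between requests
        return requests.get(url, proxies=proxy, headers=headers, timeout=10)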

Different proxy types cater to specific needs. Here's a quick comparison:

| Proxy Type | Cost Range | Reliability | Best Use Case |
|---|---|---|---|
| Residential | $15-50/GB | Very High | E-commerce scraping |
| Datacenter | $2-10/GB | Moderate | Basic web scraping |
| Rotating | $5-20/GB | High | Mixed workloads |

Legal Requirements and Ethics

Technical solutions must be paired with legal and ethical considerations to ensure responsible scraping. Key practices include:

- Respect robots.txt files.

- Introduce delays between requests.

- Collect only the data you need.

- Monitor and log your access patterns.

Here’s a code snippet for validating compliance with robots.txt:

    import urllib.robotparser
    from urllib.parse import urlparse

    def validate_scraping_compliance(url):
        parsed = urlparse(url)
        robots_url = f"{parsed.scheme}://{parsed.netloc}/robots.txt"

        robots_parser = urllib.robotparser.RobotFileParser()
        robots_parser.set_url(robots_url)
        robots_parser.read()  # fetches and parses robots.txt over the network

        return {
            'can_fetch': robots_parser.can_fetch('*', url),
            'crawl_delay': robots_parser.crawl_delay('*'),    # None if not specified
            'request_rate': robots_parser.request_rate('*')   # None if not specified
        }

Conclusion: Summary and Implementation Guide

Combining web scraping with databases involves selecting either SQL or NoSQL based on your specific requirements. This guide has outlined the main methods for creating effective data pipelines.

For projects with structured data and clear relationships, databases like PostgreSQL or MySQL are solid options. As Dr. Olivia Pearson explains:

"PostgreSQL's robustness and scalability make it a strong contender for large-scale, complex web scraping tasks. However, for smaller tasks, a lighter, simpler database may be more suitable."

If you're working with unstructured or semi-structured data, NoSQL databases such as MongoDB are often better suited. Alex Smith highlights:

"MongoDB's performance and flexibility make it a strong choice for web scraping projects. Its document-oriented storage and indexing capabilities can handle the diverse and large-scale data typical in these projects."

To help you integrate these techniques effectively, here's a quick roadmap:

| Phase | Key Actions | Tools/Technologies |
|---|---|---|
| Setup | Create a virtual environment and install libraries | requests, beautifulsoup4, pymongo |
| Development | Develop scraping logic and add error handling | Scrapy |
| Database Integration | Set up connections and design your schema | SQLAlchemy, PyMongo |
| Production | Deploy scraper with logging and rate limits | - |

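Always respect website terms, follow robots.txt guidelines, and use delays and rate limits so your scraper does not overload the target servers. Proxies help with IP blocks, but they do not reduce the load you place on a site.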

As covered earlier, matching your database choice and processing strategies with your project's needs is critical. Dr. Amelia Richardson puts it well:

"Understanding your database system is crucial for effective web scraping. PostgreSQL, MySQL, and MongoDB each offer unique capabilities and strengths. The key is to understand your project requirements and choose the system that best meets those needs."