Save time and reduce errors by combining Google Sheets and web scraping to automate data collection and storage. This guide shows you how to:

- Use Google Sheets functions like IMPORTXML and IMPORTHTML to pull data directly from websites.

- Set up Google Sheets API access to automate data imports.

- Choose the right web scraping tools based on your needs and budget.

- Write Python scripts for advanced scraping and integration.

- Schedule regular updates and ensure compliance with web scraping rules.

Quick Benefits:

- Time-Saving: Automates repetitive tasks.

- Accuracy: Reduces manual errors.

- Real-Time Updates: Keeps data fresh.

- Collaboration: Enables team access via cloud storage.

- Scalability: Handles large datasets with ease.

Whether you're tracking competitor prices, curating news feeds, or managing large datasets, this workflow simplifies the process and ensures your data is always up-to-date.

Preparing Google Sheets for Data Import

Setting Up Your Sheet Structure

To efficiently store scraped data in Google Sheets, it's essential to organize your sheet properly. A well-structured sheet helps maintain consistency and makes analysis easier.

- Create and name a new sheet: Start fresh with a clear purpose for your data.

- Add clear column headers: Define headers that describe the data, like "Product Name" or "Price."

- Format cells for data types: Adjust formatting to match data types (e.g., currency, date).

- Freeze the header row: Keep your headers visible as you scroll.

- Enable filter views: Simplify sorting and filtering for specific data.

The built-in import functions cover different kinds of sources:

| Function | Purpose | Best Used For |
|---|---|---|
| IMPORTXML | Extracts specific elements via XPath | Product details, prices |
| IMPORTHTML | Pulls tables and lists | Financial data, rankings |
| IMPORTDATA | Imports CSV/TSV files | Structured datasets |
| IMPORTFEED | Retrieves RSS and Atom feeds | News, blog updates |
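To make the function syntax concrete, here is a minimal Python sketch that builds the formula text you would type into a cell. The helper name `import_formula` and the example URL and XPath are assumptions for illustration. The sketch also assumes a locale where Sheets separates arguments with commas; some locales use semicolons instead.

```python
def import_formula(function: str, url: str, *args: object) -> str:
    """Build a Google Sheets formula string such as =IMPORTXML("url", "xpath")."""

    def quote(value: object) -> str:
        # Numbers (for example a table index) are passed through unquoted.
        if isinstance(value, (int, float)):
            return str(value)
        # Sheets escapes a double quote inside a string literal by doubling it.
        return '"' + str(value).replace('"', '""') + '"'

    parts = [quote(url)] + [quote(arg) for arg in args]
    return f"={function}({', '.join(parts)})"


xml_formula = import_formula("IMPORTXML", "https://example.com", "//h1")
html_formula = import_formula("IMPORTHTML", "https://example.com", "table", 1)
print(xml_formula)   # =IMPORTXML("https://example.com", "//h1")
print(html_formula)  # =IMPORTHTML("https://example.com", "table", 1)
```

Generating formulas this way is mostly useful when a script fills many cells at once; for a single cell, typing the formula by hand is simpler.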

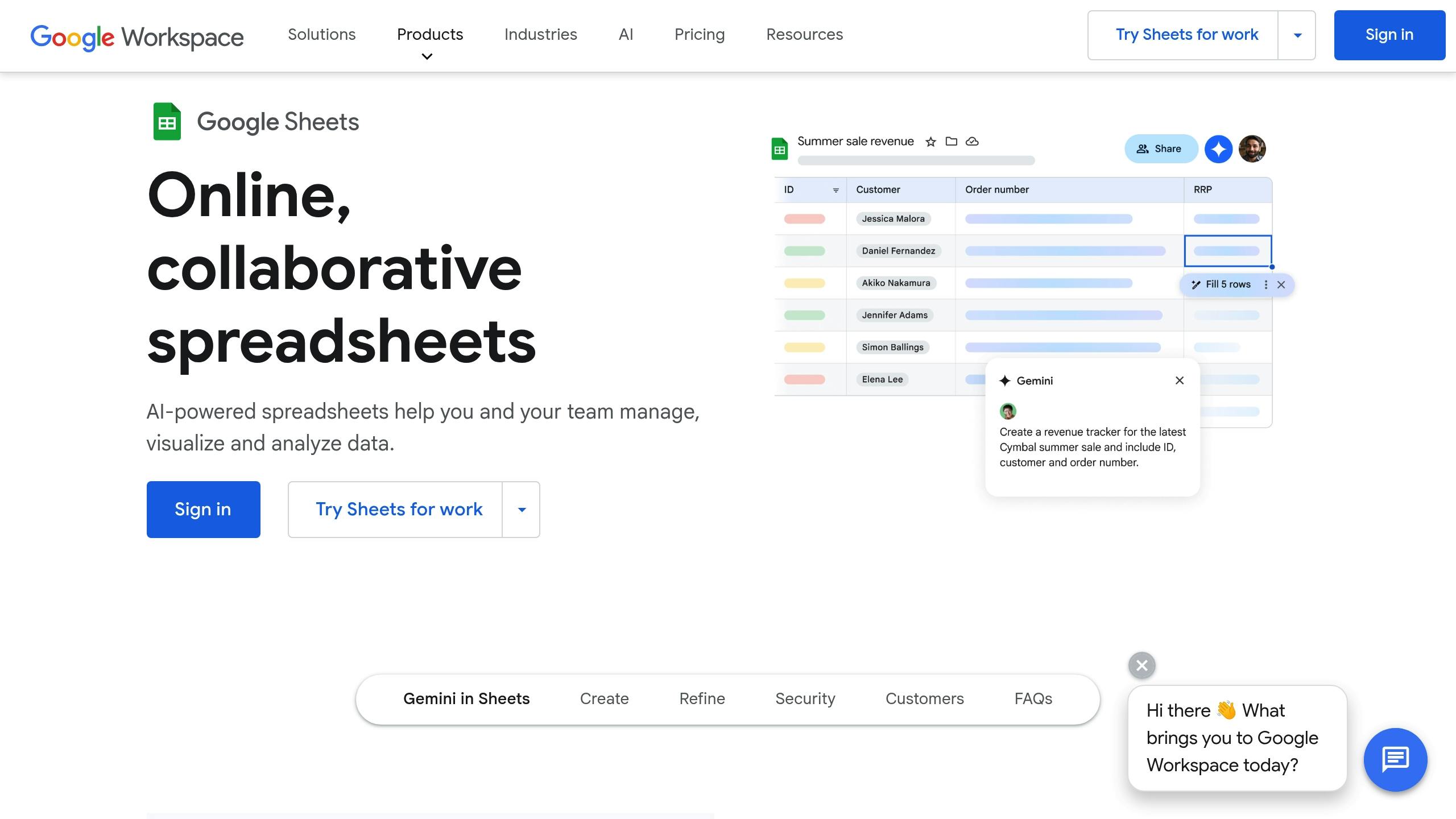

Setting Up Google Sheets API Access

Once your sheet is ready, you can configure the Google Sheets API to automate data imports. For simpler cases the built-in functions may be enough, as Vytenis Kaubrė, Technical Copywriter at Oxylabs, points out:

"Google Sheets web scraping can be an effective technique... All you need to do is use a built-in function of Google Sheets. Thus, it acts as a basic web scraper."

Follow these steps to get started with the API:

1. Create Your Project

Go to the Google Cloud Console and set up a new project.

2. Enable the API

In the API Library, activate the Google Sheets API for your project.

3. Set Up Authentication

Create a service account and download its JSON key file. Your script uses this key to authenticate as the service account.

4. Configure Permissions

Share your Google Sheet with the service account's email address.

| Access Level | Best For |
|---|---|
| Viewer | Read-only data collection |
| Editor | Automated updates and modifications |
| Commenter | Collaborative data validation |

Be mindful of sensitive data. Use the "anyone with link" setting with viewer access only for public datasets. For private data, restrict access to specific team members.

Example in Action

In November 2022, Oxylabs used this setup to extract book titles from books.toscrape.com. They structured their sheet with dedicated columns for titles, prices, and availability. Using the XPath query //h3/a/@title, they populated the data automatically with the IMPORTXML function. This streamlined their workflow and provided organized, actionable data.
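Before putting an XPath query into IMPORTXML, it can help to preview what it selects. The sketch below is one way to do that with Python's standard library. The sample markup is a simplified, hypothetical stand-in for a book listing page, and the function name `titles_from_markup` is an assumption. Note that `xml.etree.ElementTree` only supports a subset of XPath and needs well-formed markup, so real pages usually call for an HTML-aware parser.

```python
import xml.etree.ElementTree as ET

# Hypothetical, simplified markup modeled on a book listing page.
SAMPLE = """
<section>
  <article><h3><a href="book-1.html" title="A Light in the Attic">A Light in...</a></h3></article>
  <article><h3><a href="book-2.html" title="Tipping the Velvet">Tipping the...</a></h3></article>
</section>
"""


def titles_from_markup(markup: str) -> list[str]:
    """Return the title attribute of every <a> nested directly in an <h3>."""
    root = ET.fromstring(markup.strip())
    # ElementTree cannot select attributes with /@title, so select the <a>
    # elements and read the attribute in Python instead.
    return [link.get("title", "") for link in root.findall(".//h3/a")]


titles = titles_from_markup(SAMPLE)
print(titles)
```

The result corresponds to what `//h3/a/@title` would return in a sheet: one title per matching link, in document order.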

Selecting a Web Scraping Tool

Common Web Scraping Tools

Choosing the right web scraping tool is key to automating tasks in Google Sheets. Traditional tools often rely on CSS selectors or XPath for precise data extraction. For instance, Scrapy, a Python-based framework, is well-suited for large-scale operations, thanks to its extensive crawling features and active community.

| Tool Type | Features | Best For | Price |
|---|---|---|---|
| Traditional (Scrapy) | Advanced crawling, Python-based | Developers, custom solutions | Free, open-source |
| AI-Powered (Kadoa) | No-code setup, automated updates | Enterprise-level projects | $39/month |
| AI-Powered (Browse.ai) | Visual interface, basic scheduling | Small-medium businesses | $48.75/month |
| Browser Extension (InstantAPI.ai) | Chrome extension, no-code | Quick, small-scale tasks | $15/30 days |

"ParseHub is an intuitive and easy-to-learn data scraping tool. There are a variety of tutorials to get you started with the basics and then progress on to more advanced extraction projects."

With so many tools to choose from, focus on what aligns with your project's specific requirements.

How to Pick the Right Tool

The right tool makes Google Sheets integration smoother and more efficient. When evaluating options, keep these factors in mind:

- JavaScript rendering capabilities and direct integration with Google Sheets

- Support for common data formats like CSV and JSON

- Features like proxy management, handling large data volumes, and scheduling frequency

- The complexity of the target website and your budget

Juan from Scalista GmbH shares his experience:

"After trying other options, we were won over by the simplicity of InstantAPI.ai's Web Scraping API. It's fast, easy, and allows us to focus on what matters most - our core features."

For those new to web scraping, tools with user-friendly interfaces, such as ParseHub or Browse.ai, are great starting points. David Shuck, Managing Editor at Heddels, highlights:

"Setting up your projects has a bit of a learning curve, but that's a small investment for how powerful their service is. It's the perfect tool for non-technical people looking to extract data, whether that's for a small one-off project or an enterprise-level scrape running every hour."

Experienced users may prefer more customizable options like Scrapy or Zyte. These options offer advanced features for complex tasks, including:

- Custom middleware

- Sophisticated proxy rotation

- Extensive data transformation tools

- Advanced error handling

For seamless integration with Google Sheets, look for tools that support direct connections or easy export options. Modern solutions like Automatio.ai even come with built-in Google Sheets compatibility, simplifying the entire process from data extraction to storage.

Creating Your Scraper and Google Sheets Connection

Building a Simple Web Scraper

Here’s how you can set up a basic web scraper using Python and BeautifulSoup:

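    import requests
    from bs4 import BeautifulSoup

    # gspread and oauth2client are used in the Google Sheets integration step
    import gspread
    from oauth2client.service_account import ServiceAccountCredentials

    # Fetch the page; fail fast on timeouts and HTTP error statuses
    url = 'https://example.com'
    response = requests.get(url, timeout=10)
    response.raise_for_status()
    soup = BeautifulSoup(response.text, 'html.parser')

    # Extract every <div class="content-element"> from the page
    data = soup.find_all('div', class_='content-element')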

This serves as a starting point and can be expanded for more advanced scraping tasks.

Handling JavaScript Content and Formatting Data

For websites with dynamic content, use Selenium to handle JavaScript-rendered elements:

    from selenium import webdriver
    from selenium.webdriver.common.by import By
    from selenium.webdriver.support.ui import WebDriverWait
    from selenium.webdriver.support import expected_conditions as EC

    driver = webdriver.Chrome()
    try:
        driver.get('https://dynamic-site.com')

        # Wait up to 10 seconds for the dynamic content to load
        element = WebDriverWait(driver, 10).until(
            EC.presence_of_element_located((By.CLASS_NAME, "dynamic-content"))
        )
    finally:
        # Always close the browser, even if the wait times out
        driver.quit()

To clean and organize your scraped data, use a transformation function:

    from datetime import datetime

    def standardize_data(raw_data):
        # Extract and format data
        return {
            'title': raw_data.get('title', '').strip(),
            'price': float(raw_data.get('price', 0)),
            'date_added': datetime.now().strftime('%m/%d/%Y')
        }
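One caveat with the transformation above: `float()` raises a `ValueError` on scraped strings such as "$1,299.00". The following sketch shows one possible way to normalize prices first. The function name `parse_price` is hypothetical, and it assumes a period as the decimal mark and commas as thousands separators, which will not hold for every site.

```python
import re
from typing import Optional


def parse_price(text: object) -> Optional[float]:
    """Return the numeric part of a price string, or None if there is none."""
    if isinstance(text, (int, float)):
        return float(text)
    # Drop thousands separators, then take the first number in the string.
    match = re.search(r"\d+(?:\.\d+)?", str(text).replace(",", ""))
    return float(match.group()) if match else None


print(parse_price("$1,299.00"))
print(parse_price("N/A"))
```

Returning `None` for unparseable values, rather than defaulting to 0, keeps missing prices distinguishable from free items when you review the sheet later.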

Once your data is ready, you can integrate it with Google Sheets.

Integrating with Google Sheets

1. Set Up a Google Cloud Project

- Create a new project in the Google Cloud Console.

- Enable the Google Sheets API.

- Download the service account credentials (JSON file).

2. Configure Authentication

Use the downloaded credentials to authenticate:

    # Note: oauth2client is deprecated. Recent gspread releases also offer
    # gspread.service_account(filename='your-credentials.json') as a replacement.
    scope = ['https://spreadsheets.google.com/feeds',
             'https://www.googleapis.com/auth/drive']
    credentials = ServiceAccountCredentials.from_json_keyfile_name(
        'your-credentials.json', scope)
    client = gspread.authorize(credentials)

3. Write Data to Your Google Sheet

Append your standardized data to a Google Sheet:

    # Open your spreadsheet
    sheet = client.open('Scraped Data').sheet1

    # Append new data; `data` here is one record returned by standardize_data()
    sheet.append_row([data['title'], data['price'], data['date_added']])

Be mindful of website policies and add delays between requests to avoid overloading servers. For more reliable scraping in production, tools like ZenRows can help manage JavaScript rendering and proxy rotation.
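Appending one row at a time sends one API request per row, which can run into the Sheets API's per-minute quotas on larger scrapes. A possible alternative is to batch rows with gspread's `append_rows`. In this sketch the helper names, the column order, the batch size, and the pause length are all assumptions; `sheet` can be any object with an `append_rows(values)` method, such as a gspread worksheet.

```python
import time
from typing import Iterable

# Assumed column order, matching the fields produced by standardize_data().
COLUMNS = ("title", "price", "date_added")


def records_to_rows(records: Iterable[dict]) -> list[list]:
    """Turn record dicts into row lists, leaving missing fields blank."""
    return [[record.get(column, "") for column in COLUMNS] for record in records]


def write_in_batches(sheet, records, batch_size: int = 100,
                     pause_seconds: float = 1.0) -> int:
    """Append records in chunks and return the number of rows written."""
    rows = records_to_rows(records)
    for start in range(0, len(rows), batch_size):
        sheet.append_rows(rows[start:start + batch_size])
        # Pause between batches (but not after the last one) to stay under quota.
        if start + batch_size < len(rows):
            time.sleep(pause_seconds)
    return len(rows)
```

With a real worksheet you would call `write_in_batches(sheet, records)` after opening the spreadsheet as shown above.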


Making Your System Run Better

Setting Up Regular Data Updates

Automating your web scraping schedule can save time and ensure consistency. Tools like GitHub Actions make this process seamless. Here's a simple GitHub Actions workflow to schedule scraping tasks:

    name: Scheduled Scraping

    on:
      schedule:
        - cron: '0 */1 * * *'  # Runs hourly (schedules use UTC)

    jobs:
      scrape:
        runs-on: ubuntu-latest
        steps:
          - uses: actions/checkout@v4
          - name: Set up Python
            uses: actions/setup-python@v5
            with:
              python-version: '3.9'
          - name: Install dependencies
            run: pip install requests beautifulsoup4 gspread oauth2client
          - name: Run scraper
            env:
              SHEETS_API_KEY: ${{ secrets.SHEETS_API_KEY }}
            run: python scraper.py

Prefer working locally? Python's schedule library is a great option:

    import schedule
    import time

    def scrape_and_update():
        # Call your scraping function
        pass

    schedule.every(1).hour.do(scrape_and_update)

    while True:
        schedule.run_pending()
        time.sleep(60)

If coding isn't your thing, there are no-code SaaS tools available to simplify scheduling. Just make sure to check the legal guidelines to keep your scraping activities compliant.

Following Scraping Rules and Laws

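Legal clarity around web scraping has improved in recent years. For example, in the 2024 Meta v. Bright Data case, a U.S. federal court ruled in Bright Data's favor, finding that Meta's terms did not bar the logged-out scraping of publicly accessible data at issue. The ruling is narrow and does not cover every situation, so these practices still matter: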

| Requirement | Implementation |
|---|---|
| Review ToS | Check website terms before scraping |
| Respect robots.txt | Parse and follow crawl rules |
| Rate Limiting | Add delays between requests (5–10 seconds) |
| Data Privacy | Avoid collecting personal information |
| API Priority | Use official APIs when available |

Sticking to these rules helps you avoid legal trouble while maintaining ethical practices.
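Two of these practices, following robots.txt and rate limiting, are straightforward to automate. Here is a minimal sketch using Python's standard `urllib.robotparser`. The user agent string, helper names, and sample rules are assumptions. Against a live site you would call `parser.set_url(...)` and `parser.read()` instead of parsing inline text.

```python
import random
import time
from urllib.robotparser import RobotFileParser

USER_AGENT = "my-sheets-scraper"  # hypothetical user agent name


def load_rules(robots_lines):
    """Build a parser from the lines of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_lines)
    return parser


def allowed_urls(parser, urls, user_agent=USER_AGENT):
    """Keep only the URLs that the robots.txt rules permit for this agent."""
    return [url for url in urls if parser.can_fetch(user_agent, url)]


def polite_pause(min_seconds=5.0, max_seconds=10.0):
    """Sleep for a random interval in the given range and return its length."""
    delay = random.uniform(min_seconds, max_seconds)
    time.sleep(delay)
    return delay


# Sample rules for illustration only.
SAMPLE_ROBOTS = """User-agent: *
Disallow: /private/
""".splitlines()

rules = load_rules(SAMPLE_ROBOTS)
urls = ["https://example.com/products", "https://example.com/private/report"]
print(allowed_urls(rules, urls))  # ['https://example.com/products']
```

Calling `polite_pause()` between requests applies the 5 to 10 second delay from the table, and the random jitter avoids hitting the server at a perfectly regular interval.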

Fixing Common Problems

Even with a solid setup, technical hiccups can occur. Here’s how to handle common issues like website changes and rate limits.

Retry Requests with Exponential Backoff:

If a request fails, retrying with exponential backoff can help:

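    from random import uniform
    from time import sleep

    import requests

    def retry_request(url, max_retries=3):
        """GET a URL, retrying failed attempts with exponential backoff."""
        for attempt in range(max_retries):
            try:
                response = requests.get(url, timeout=10)
                response.raise_for_status()  # Treat 4xx/5xx responses as failures
                return response
            except requests.exceptions.RequestException:
                if attempt == max_retries - 1:
                    raise
                # Wait about 1s, 2s, 4s, ... plus random jitter before retrying
                sleep_time = (2 ** attempt) + uniform(0, 1)
                sleep(sleep_time)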

Standardize Your Data:

Clean and structure your data to keep it consistent:

    from datetime import datetime

    def clean_data(raw_data):
        return {
            'date': datetime.now().strftime('%m/%d/%Y'),
            'value': float(raw_data.get('value', 0)),
            'status': raw_data.get('status', '').strip()
        }

Monitor Changes with Logging:

Track errors and website changes using logs:

    import logging

    logging.basicConfig(
        filename='scraper.log',
        level=logging.INFO,
        format='%(asctime)s - %(levelname)s - %(message)s'
    )

    def check_data_integrity(data):
        if not data:
            logging.error('No data retrieved from source')
            return False
        return True
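An empty result is the obvious failure, but a site redesign often shows up more quietly as records with blank fields. The sketch below extends the integrity check in that direction. The required field names, the 50% threshold, and the function names are assumptions to adapt to your own data.

```python
import logging

logger = logging.getLogger("scraper")

# Hypothetical required fields; adjust to match your sheet's columns.
REQUIRED_FIELDS = ("title", "price")


def missing_field_ratio(records, required=REQUIRED_FIELDS):
    """Return the share of records with at least one empty required field."""
    if not records:
        return 1.0
    incomplete = sum(
        1 for record in records
        if any(record.get(field) in (None, "") for field in required)
    )
    return incomplete / len(records)


def looks_like_layout_change(records, threshold=0.5):
    """Log a warning and return True when too many records are incomplete."""
    ratio = missing_field_ratio(records)
    if ratio >= threshold:
        logger.warning(
            "%.0f%% of records are missing required fields; selectors may be stale",
            ratio * 100,
        )
        return True
    return False
```

Running this check before writing to the sheet lets you skip an update rather than overwrite good data with blanks.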


Summary and Next Steps

Automated data collection can save time and improve accuracy. Here's how to structure your workflow effectively:

Start with Google Sheets' built-in functions like IMPORTHTML and IMPORTXML for straightforward data extraction. These are great for pulling data from simple HTML tables or structured web content.

For more advanced needs, consider this progression:

| Stage | Tools | Key Points |
|---|---|---|
| Initial Setup | Google Sheets + IMPORTHTML | Begin with small datasets to test accuracy |
| Basic Automation | Google Apps Script | Schedule updates for consistent performance |
| Scaling Up | Python + BeautifulSoup | Manage larger datasets with better control |
| Enterprise Level | APIs + Custom Scripts | Handle high-volume data and rate limits |

This approach takes you from simple setups to more robust, scalable solutions.

Steps to Get Started:

- Set up a clear structure in Google Sheets and experiment with its native import functions.

- Organize your data with well-defined columns for tracking.

- Use logging tools to monitor performance and identify any issues.

- Gradually scale your system while ensuring it complies with website terms of service and rate limits.

If you hit roadblocks, check your logs and revisit the troubleshooting section. As your requirements expand, move to Python scripts or APIs, which handle dynamic content and larger datasets more efficiently.